JHRI Template



Recommended publications


To the Strategy of Amazon Prime

To the strategy of Amazon Prime. “Even if a brick and mortar store does everything right, even if the store is exactly where you parked your car and it puts the thing you want right in the window and is having a sale on it that day, if you’re a Prime customer, it’s easier to buy from Amazon.” Mike Shatzkin, CEO of The Idea Logical Company. Overview: Main points and conclusions. • Amazon is the world’s leading e-commerce business with an annual turnover of more than 100 billion USD and its growth is still exponential. At the same time, Amazon is one of the world’s leading subscription businesses with Amazon Prime. The service is believed to have above 80 million members worldwide. • Amazon Prime is considered a significant part of Amazon’s great success. Amazon Prime members pay an annual sum of 99 USD or a monthly sum of 10.99 USD and get free two-day delivery on more than 15 million different items. […] third of Amazon’s turnover in the US derives from Prime memberships. Prime is also an important part of Amazon’s strategy for the future that revolves around a complete disruption of the interplay between e-commerce and retail and a domination of the same-day delivery market. • To win the position as the same-day delivery dominator in the market, Amazon has entered the market for groceries in the US. AmazonFresh delivers groceries and other goods directly to the customer’s doorstep on the same day -

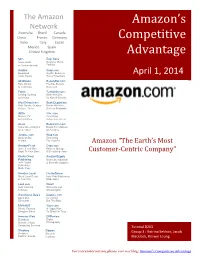

Amazon's Competitive Advantage

Amazon’s Competitive Advantage. April 1, 2014. Tutorial D203, Group 3: Katrina Sekhon, Jacob Blacklock, Kerwin Leung. For more information, please visit our blog: […] The Amazon Network: Australia, Brazil, Canada, China, France, Germany, India, Italy, Japan, Mexico, Spain, United Kingdom; 6pm (Score deals on fashion brands); East Dane (Designer Men's Fashion); Audible (Download Audio Books); Soap.com (Health, Beauty & Home Essentials); AbeBooks (Rare Books & Textbooks); BeautyBar.com (Prestige Beauty Delivered); Fabric (Sewing, Quilting & Knitting); TenMarks.com (Math Activities for Kids & Schools); AfterSchool.com (Kids’ Sports, Outdoor & Dance Gear); Book Depository (Books With Free Delivery Worldwide); IMDb (Movies, TV & Celebrities); Vine.com (Everything to Live Life Green); Alexa (Actionable Analytics for the Web); Bookworm.com (Books For Children Of All Ages); Junglee.com (Shop Online in India); Wag.com (Everything For Your Pet); AmazonFresh (Groceries & More Right To Your Door); Casa.com (Kitchen, Storage & Everything Home); Kindle Direct Publishing (Indie Digital Publishing Made Easy); AmazonSupply (Business, Industrial & Scientific Supplies); Amazon Local (Great Local Deals in Your City); CreateSpace (Indie Print Publishing Made Easy); Look.com (Kids' Clothing & Shoes); Woot! (Discounts and Shenanigans); Warehouse Deals (Open-Box Discounts); Diapers.com (Everything But The Baby); MYHABIT (Private Fashion Designer Sales); Yoyo.com (A Happy Place To Shop For Toys); Amazon Web Services (Scalable Cloud Computing Services); DPReview (Digital Photography); Shopbop (Designer Fashion Brands); Zappos (Shoes & Clothing). Amazon: “The Earth’s Most Customer-Centric Company.” When it first began in the 1990s, Amazon aimed to be “The Earth’s Largest Bookseller.” In the past twenty years, its goal has since evolved to being “The Earth’s most Customer-Centric Company.” This company-wide focus is at the heart of its long-term competitive advantage. -

Design Perspectives on Delivery Drones

Design Perspectives on Delivery Drones. Jia Xu. For more information on this publication, visit www.rand.org/t/RR1718z2. Published by the RAND Corporation, Santa Monica, Calif. © Copyright 2017 RAND Corporation. RAND® is a registered trademark. Limited Print and Electronic Distribution Rights: This document and trademark(s) contained herein are protected by law. This representation of RAND intellectual property is provided for noncommercial use only. Unauthorized posting of this publication online is prohibited. Permission is given to duplicate this document for personal use only, as long as it is unaltered and complete. Permission is required from RAND to reproduce, or reuse in another form, any of its research documents for commercial use. For information on reprint and linking permissions, please visit www.rand.org/pubs/permissions. The RAND Corporation is a research organization that develops solutions to public policy challenges to help make communities throughout the world safer and more secure, healthier and more prosperous. RAND is nonprofit, nonpartisan, and committed to the public interest. RAND’s publications do not necessarily reflect the opinions of its research clients and sponsors. Support RAND: Make a tax-deductible charitable contribution at www.rand.org/giving/contribute. www.rand.org. Preface: Delivery drones may become widespread over the next five to ten years, particularly for what is known as the “last-mile” logistics of small, light items. Companies such as Amazon, Google, the United Parcel Service (UPS), DHL, and Alibaba have been running high-profile experiments testing drone delivery systems, and the development of such systems reached a milestone when the first commercial drone delivery approved by the Federal Aviation Administration took place on July 17, 2015. -

A Motion Is Requested to Authorize the Execution of a Contract for Amazon Business Procurement Services Through the U.S. Communities Government Purchasing Alliance

MOT 2019-8118. VILLAGE OF DOWNERS GROVE. Report for the Village Council Meeting 3/19/2019. SUBJECT: Authorization of a contract for Amazon Business procurement services. SUBMITTED BY: Judy Buttny, Finance Director. SYNOPSIS: A motion is requested to authorize the execution of a contract for Amazon Business procurement services through the U.S. Communities Government Purchasing Alliance. STRATEGIC PLAN ALIGNMENT: The goals for 2017-2019 include Steward of Financial Sustainability, and Exceptional, Continual Innovation. FISCAL IMPACT: There is no cost to utilize Amazon Business procurement services through the U.S. Communities Government Purchasing Alliance. RECOMMENDATION: Approval on the March 19, 2019 Consent Agenda. BACKGROUND: U.S. Communities Government Purchasing Alliance is the largest public sector cooperative purchasing organization in the nation. All contracts are awarded by a governmental entity utilizing industry best practices, processes and procedures. The Village of Downers Grove has been a member of the U.S. Communities Government Purchasing Alliance since 2008. Through cooperative purchasing, the Village is able to take advantage of economy of scale and reduce the cost of goods and services. U.S. Communities has partnered with Amazon Services to offer local government agencies the ability to utilize Amazon Business for procurement services at no cost to U.S. Communities members. Amazon Business offers business-only prices on millions of products in a competitive digital marketplace and a multi-level approval workflow. Staff can efficiently find quotes and purchase products for the best possible price, and the multi-level approval workflow ensures this service is compliant with the Village’s competitive process for purchases under $7,000. -

Strategic Network Design for Parcel Delivery with Drones Under Competition

Strategic Network Design for Parcel Delivery with Drones under Competition. Gohram Baloch, Fatma Gzara. Department of Management Sciences, University of Waterloo, ON Canada N2L 3G1. This paper studies the economic desirability of UAV parcel delivery and its effect on e-retailer distribution network while taking into account technological limitations, government regulations, and customer behavior. We consider an e-retailer offering multiple same day delivery services including a fast UAV service and develop a distribution network design formulation under service based competition where the services offered by the e-retailer not only compete with the stores (convenience, grocery, etc.), but also with each other. Competition is incorporated using the Multinomial Logit market share model. To solve the resulting nonlinear mathematical formulation, we develop a novel logic-based Benders decomposition approach. We build a case based on NYC, carry out extensive numerical testing, and perform sensitivity analyses over delivery charge, delivery time, government regulations, technological limitations, customer behavior, and market size. The results show that government regulations, technological limitations, and service charge decisions play a vital role in the future of UAV delivery. Key words: UAV; drone; market share models; facility location; logic-based Benders decomposition. 1. Introduction. Unmanned aerial vehicles (UAVs) or drones have been used in military applications as early as 1916 (Cook 2007). As the technology improved, their applications extended to surveillance and monitoring (Maza et al. 2010, Krishnamoorthy et al. 2012), weather research (Darack 2012), delivery of medical supplies (Wang 2016, Thiels et al. 2015), and emergency response (Adams and Friedland 2011). -

AWS Architecture Monthly February 2021

Notices Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents current AWS product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers, or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers. © 2020 Amazon Web Services, Inc. or its affiliates. All rights reserved. Editor's note How is operational data being used to transform manufacturing? Steve Blackwell, AWS Worldwide Tech Leader for manufacturing, speaks about considerations for architecting for manufacturing, considerations for Industry 4.0 applications and data, and AWS for Industrial. We hope you’ll find this edition of Architecture Monthly useful. My team would like your feedback, so please give us a rating and include your comments on the Amazon Kindle page. You can view past issues and reach out to [email protected] anytime with your questions and comments. In The Manufacturing issue: • Ask an Expert: Steve Blackwell, Tech Leader, Manufacturing, AWS • Case Study: Amazon Prime Air’s Drone Takes Flight with AWS and Siemens • Reference Architecture: -

The Warehouse of the Future: MSG Ortega, Johanny, Sergeants Major Course Class 71, Mr. Torres, April 8, 2021

The Warehouse of the Future. MSG Ortega, Johanny. Sergeants Major Course Class 71. Mr. Torres. April 8, 2021. Imagine the future. The year is 2035, and drones fly in the sky. A Supply Support Activity (SSA) Soldier prepares high-priority parts for shipment to a forward element and loads them. The drone takes off. The joint logistic system tracks its route and feeds the information to the awaiting customer, giving them live estimated shipment time across a secured cyber communication. When it arrives, the drone scans the customer’s identification card, validating the receipt. It promptly sends a notification to the SSA that the customer received the part. The customer then verifies it against their requisition to ensure serviceability and correctness. If there are no high-priority retrogrades, the customer dispatches the drone. Before takeoff, the drone submits the customer’s part verification receipt, effectively closing the request. Is this science fiction? No. Amazon is testing and refining a version of this and named it Amazon Prime Air (Palmer, 2020). Unfortunately, the Army has yet to catch on to this process. However, to be fair, Amazon began testing drone deliveries in 2013 while the Army was still conducting combat operations in Afghanistan under Operation Enduring Freedom (Palmer, 2020; Torreon, 2020). Since then, Amazon has steadily modernized to meet current and future demand and overmatched most competitors. Nevertheless, the Army’s procurement and budgeting processes are different. Therefore, its trajectory to modernization will differ. Despite these differences, modernization is knocking at the Army’s door. -

Amazon.com, Inc.

For the exclusive use of D. Regresado, 2018. MH0053 1259927628 REV: MAY 25, 2017. FRANK T. ROTHAERMEL. Amazon.com, Inc. It was late on a dreary winter day in Seattle in early January 2017. As Jeff Bezos studied the report for the final quarter in 2016, he hoped investors would be pleased with the results. Amazon’s share price was up 43% compared to the same time last year, and market cap had grown almost 12% from $318 billion to $356 billion over the same time. Exhibit 1 depicts Amazon’s revenue, net income, and operating expenses from 1996 to 2016. As Amazon evolved with technology, traditional boundaries between hardware and software, products and services, and online and bricks-and-mortar stores had become increasingly blurred. As a result, Amazon found itself engaged in a fierce competitive battle for control of the emerging digital ecosystem, pitted against technology giants such as Alphabet (Google’s parent company), Alibaba, and even Microsoft and Apple. Throughout this digital transformation, Amazon’s investors remained focused on the long-term, because consistent profitability continued to elude the company. Sales, however, increased quarter after quarter, fueling optimism in what the long-term projects the company invested in could mean for future profitability and growth. Investors are by now quite familiar with the three pillars of Amazon that Bezos likes to talk about: the e-commerce marketplace, the Prime membership program, and Amazon Web Services (AWS). But will this be enough going forward? While Amazon was starting to post some profits, its stock was down 7% after the last quarterly results were posted in 2016. As Bezos gazed out of the office window into the darkness, he could not help but ask himself that very question: What should be the fourth pillar of Amazon? After all, Amazon had launched a smartphone, a set-top box for video streaming, a music streaming service, in-house video programming, and expanded same-day delivery services. -

5 Inspiring Companies With

Inspiring Companies With "Future of Work" Leaders ...AND WHAT WE CAN LEARN FROM THEM. #1 Tony Hsieh, Zappos: future focused CEO since 1999, a poster company for holacracy. "The one thing I’m absolutely sure of is that the future is about self-management." Also pioneered the "Downtown Project", a group committed to transforming downtown Las Vegas into the most community-focused large city in the world. This transformation included the Fall 2013 relocation of Zappos.com offices from Henderson, Nevada to downtown Las Vegas. By the numbers (50; 16): public tours of Zappos a week; businesses to date under the Downtown Project umbrella. What we can learn from Tony Hsieh: Focus on employee happiness and business will prosper. Disrupt the ‘norms’ of corporate structure, which he did by introducing holacracy. #2 Sheryl Sandberg, Facebook's COO: focus on female leaders for the future. "We cannot change what we are not aware of, and once we are aware we cannot help but change." In 2012 she became the eighth member (and the first female member) of Facebook's board of directors. Advocates for female leaders and wrote her book Lean In in 2013 and founded leanin.org. Today, there are over 21,000 Circles in 97 countries registered with LEANIN.ORG, including circles in all five branches of the US Military. What we can learn from Sheryl Sandberg: Women are key to the future of work and women leaders are needed to get to the future of work. Great adversity can lead to greater resilience and happiness. #3 Jeff Bezos, CEO of Amazon. "If you double the number of experiments you do per year you’re going to double your inventiveness." Pioneered ecommerce in 1995. Pioneered drone delivery technology with Amazon Prime Air in 2013. -

Amazon's Stranglehold: How the Company's Tightening Grip Is

Amazon’s Stranglehold: How the Company’s Tightening Grip Is Stifling Competition, Eroding Jobs, and Threatening Communities By Stacy Mitchell and Olivia LaVecchia November 2016 About the Institute for Local Self-Reliance The Institute for Local Self-Reliance (ILSR) is a 42-year-old national nonprofit research and educational organization. ILSR’s mission is to provide innovative strategies, working models, and timely information to support strong, community rooted, environmentally sound, and equitable local economies. To this end, ILSR works with citizens, policymakers, and businesses to design systems, policies, and enterprises that meet local needs; to maximize human, material, natural, and financial resources, and to ensure that the benefits of these systems and resources accrue to all local citizens. More at www.ilsr.org. About the Authors Stacy Mitchell is co-director of ILSR and director of its Community-Scaled Economy Initiative. Her research and writing on the advantages of devolving economic power have influenced policy-makers and helped guide grassroots strategies. She has produced numerous reports and written articles for a variety of national publications, from Bloomberg Businessweek to The Nation, and is the author of Big-Box Swindle: The True Cost of Mega-Retailers and the Fight for America’s Independent Businesses. Contact her at [email protected] or via Twitter at @stacyfmitchell. Olivia LaVecchia is a researcher with ILSR’s Community-Scaled Economy Initiative, where she produces reports and articles on concentrated economic power and locally rooted alternatives. Her work reaches wide audiences, spurs grassroots action, and influences policy-making. Contact her at [email protected] or via Twitter at @olavecchia. -

E-Commerce Impact and US Air Trade Market Outlook: 2019-2023

E-Commerce Impact and US Air Trade Market Outlook: 2019-2023. Confidential. May 5, 2019. Contents: 2018 versus 2017; Structural versus cyclical growth; Air cargo supply curve; Implications and initiatives. US air trade weight grew at 5.1%, which was roughly 1x nominal US GDP. [Charts: US growth rates, 17-18 (percent growth, US GDP growth multiple); US air import weight by region, 17-18 (percent growth, US GDP growth multiple); nominal GDP by region, 17-18 (percent growth); US air export weight by region, 17-18 (percent growth, destination region GDP growth multiple).] Note: Air trade consists of air express package and standard air freight. Intra NA import includes US domestic. ROW export is Africa, Middle East, and North America. Source: LogCapStrat analysis, IMF, Euromonitor, LogCapStrat Air Cargo Workbench. Transatlantic market from/to Europe was the star performer in 2018. [Charts: US air import growth by region, 17-18, and US air export growth by region, 17-18 (absolute growth in millions of kilos, YoY air weight growth %), for EU, AS, LA, and ROW.] -

Omnia Amazon Business R-TC-17006

Amazon Business. October 14, 2016. Mr. Anthony Crosby, Coordinator, Prince William County Public Schools, Financial Services/Purchasing, Room #1500, RFP #R-TC-17006, P.O. Box 389, Manassas, VA 20108. Dear Mr. Crosby, It is with great pleasure that we enclose our response to your request for proposal for the on-line marketplace for the purchase of products and services. Amazon Services, LLC (Amazon.com) is an e-commerce company offering a range of products and services through our website. Amazon herewith offers Prince William County Public Schools (PWCPS) our Amazon Business solution to assist PWCPS in your on-line marketplace needs. Please review our responses and feel free to contact Daniel Smith, General Manager, (206) 708-9895 or via email at [email protected] to answer any questions you may have. The entire team at Amazon Business looks forward to building a mutually beneficial relationship with Prince William County Public Schools. Respectfully, Prentis Wilson, Vice President, Amazon Business. Amazon Services, LLC • 410 Terry Avenue North • Seattle, WA 98109-5210 • USA. Prince William County Schools RFP R-TC-17006: On-line Marketplace for the Purchases of Products and Services. Table of Contents: 1.0 Title Sheet (Tab 1); 2.0 Executive Summary (Tab 2); Product Benefits