Bayesian Analysis of Fmri Data and RNA-Seq Time Course Experiment Data

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Genetic Background of Clinical Mastitis in Holstein- Friesian Cattle

Animal (2019), 13:1 0p , p 2156–2163 © The Animal Consortium 2019 animal doi:10.1017/S1751731119000338 The genetic background of clinical mastitis in Holstein- Friesian cattle J. Szyda1,2†, M. Mielczarek1,2,M.Frąszczak1, G. Minozzi3, J. L. Williams4 and K. Wojdak-Maksymiec5 1Biostatistics Group, Department of Genetics, Wroclaw University of Environmental and Life Sciences, Kozuchowska 7, 51-631 Wroclaw, Poland; 2Institute of Animal Breeding, Krakowska 1, 32-083 Balice, Poland; 3Department of Veterinary Medicine, Università degli Studi di Milano, Via Giovanni Celoria 10, 20133 Milano, Italy; 4Davies Research Centre, School of Animal and Veterinary Sciences, University of Adelaide, Roseworthy, SA 5371, Australia; 5Department of Animal Genetics, West Pomeranian University of Technology, Doktora Judyma 26, 71-466 Szczecin, Poland (Received 15 September 2018; Accepted 28 January 2019; First published online 5 March 2019) Mastitis is an inflammatory disease of the mammary gland, which has a significant economic impact and is an animal welfare concern. This work examined the association between single nucleotide polymorphisms (SNPs) and copy number variations (CNVs) with the incidence of clinical mastitis (CM). Using information from 16 half-sib pairs of Holstein-Friesian cows (32 animals in total) we searched for genomic regions that differed between a healthy (no incidence of CM) and a mastitis-prone (multiple incidences of CM) half-sib. Three cows with average sequence depth of coverage below 10 were excluded, which left 13 half-sib pairs available for comparisons. In total, 191 CNV regions were identified, which were deleted in a mastitis-prone cow, but present in its healthy half-sib and overlapped in at least nine half-sib pairs. -

RNF122: a Novel Ubiquitin Ligase Associated with Calcium-Modulating Cyclophilin Ligand BMC Cell Biology 2010, 11:41 References 1

Peng et al. BMC Cell Biology 2010, 11:41 http://www.biomedcentral.com/1471-2121/11/41 RESEARCH ARTICLE Open Access RNF122:Research article A novel ubiquitin ligase associated with calcium-modulating cyclophilin ligand Zhi Peng1,4, Taiping Shi*1,2,3 and Dalong Ma1,2,3 Abstract Background: RNF122 is a recently discovered RING finger protein that is associated with HEK293T cell viability and is overexpressed in anaplastic thyroid cancer cells. RNF122 owns a RING finger domain in C terminus and transmembrane domain in N terminus. However, the biological mechanism underlying RNF122 action remains unknown. Results: In this study, we characterized RNF122 both biochemically and intracellularly in order to gain an understanding of its biological role. RNF122 was identified as a new ubiquitin ligase that can ubiquitinate itself and undergoes degradation in a RING finger-dependent manner. From a yeast two-hybrid screen, we identified calcium- modulating cyclophilin ligand (CAML) as an RNF122-interacting protein. To examine the interaction between CAML and RNF122, we performed co-immunoprecipitation and colocalization experiments using intact cells. What is more, we found that CAML is not a substrate of ubiquitin ligase RNF122, but that, instead, it stabilizes RNF122. Conclusions: RNF122 can be characterized as a C3H2C3-type RING finger-containing E3 ubiquitin ligase localized to the ER. RNF122 promotes its own degradation in a RING finger-and proteasome-dependent manner. RNF122 interacts with CAML, and its E3 ubiquitin ligase activity was noted to be dependent on the RING finger domain. Background RING finger proteins contain a RING finger domain, The ubiquitin-proteasome system is involved in protein which was first identified as being encoded by the Really degradation and many biological processes such as tran- Interesting New Gene in the early 1990 s [5]. -

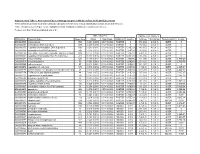

Association of Gene Ontology Categories with Decay Rate for Hepg2 Experiments These Tables Show Details for All Gene Ontology Categories

Supplementary Table 1: Association of Gene Ontology Categories with Decay Rate for HepG2 Experiments These tables show details for all Gene Ontology categories. Inferences for manual classification scheme shown at the bottom. Those categories used in Figure 1A are highlighted in bold. Standard Deviations are shown in parentheses. P-values less than 1E-20 are indicated with a "0". Rate r (hour^-1) Half-life < 2hr. Decay % GO Number Category Name Probe Sets Group Non-Group Distribution p-value In-Group Non-Group Representation p-value GO:0006350 transcription 1523 0.221 (0.009) 0.127 (0.002) FASTER 0 13.1 (0.4) 4.5 (0.1) OVER 0 GO:0006351 transcription, DNA-dependent 1498 0.220 (0.009) 0.127 (0.002) FASTER 0 13.0 (0.4) 4.5 (0.1) OVER 0 GO:0006355 regulation of transcription, DNA-dependent 1163 0.230 (0.011) 0.128 (0.002) FASTER 5.00E-21 14.2 (0.5) 4.6 (0.1) OVER 0 GO:0006366 transcription from Pol II promoter 845 0.225 (0.012) 0.130 (0.002) FASTER 1.88E-14 13.0 (0.5) 4.8 (0.1) OVER 0 GO:0006139 nucleobase, nucleoside, nucleotide and nucleic acid metabolism3004 0.173 (0.006) 0.127 (0.002) FASTER 1.28E-12 8.4 (0.2) 4.5 (0.1) OVER 0 GO:0006357 regulation of transcription from Pol II promoter 487 0.231 (0.016) 0.132 (0.002) FASTER 6.05E-10 13.5 (0.6) 4.9 (0.1) OVER 0 GO:0008283 cell proliferation 625 0.189 (0.014) 0.132 (0.002) FASTER 1.95E-05 10.1 (0.6) 5.0 (0.1) OVER 1.50E-20 GO:0006513 monoubiquitination 36 0.305 (0.049) 0.134 (0.002) FASTER 2.69E-04 25.4 (4.4) 5.1 (0.1) OVER 2.04E-06 GO:0007050 cell cycle arrest 57 0.311 (0.054) 0.133 (0.002) -

140503 IPF Signatures Supplement Withfigs Thorax

Supplementary material for Heterogeneous gene expression signatures correspond to distinct lung pathologies and biomarkers of disease severity in idiopathic pulmonary fibrosis Daryle J. DePianto1*, Sanjay Chandriani1⌘*, Alexander R. Abbas1, Guiquan Jia1, Elsa N. N’Diaye1, Patrick Caplazi1, Steven E. Kauder1, Sabyasachi Biswas1, Satyajit K. Karnik1#, Connie Ha1, Zora Modrusan1, Michael A. Matthay2, Jasleen Kukreja3, Harold R. Collard2, Jackson G. Egen1, Paul J. Wolters2§, and Joseph R. Arron1§ 1Genentech Research and Early Development, South San Francisco, CA 2Department of Medicine, University of California, San Francisco, CA 3Department of Surgery, University of California, San Francisco, CA ⌘Current address: Novartis Institutes for Biomedical Research, Emeryville, CA. #Current address: Gilead Sciences, Foster City, CA. *DJD and SC contributed equally to this manuscript §PJW and JRA co-directed this project Address correspondence to Paul J. Wolters, MD University of California, San Francisco Department of Medicine Box 0111 San Francisco, CA 94143-0111 [email protected] or Joseph R. Arron, MD, PhD Genentech, Inc. MS 231C 1 DNA Way South San Francisco, CA 94080 [email protected] 1 METHODS Human lung tissue samples Tissues were obtained at UCSF from clinical samples from IPF patients at the time of biopsy or lung transplantation. All patients were seen at UCSF and the diagnosis of IPF was established through multidisciplinary review of clinical, radiological, and pathological data according to criteria established by the consensus classification of the American Thoracic Society (ATS) and European Respiratory Society (ERS), Japanese Respiratory Society (JRS), and the Latin American Thoracic Association (ALAT) (ref. 5 in main text). Non-diseased normal lung tissues were procured from lungs not used by the Northern California Transplant Donor Network. -

A Novel Resveratrol Analog: Its Cell Cycle Inhibitory, Pro-Apoptotic and Anti-Inflammatory Activities on Human Tumor Cells

A NOVEL RESVERATROL ANALOG : ITS CELL CYCLE INHIBITORY, PRO-APOPTOTIC AND ANTI-INFLAMMATORY ACTIVITIES ON HUMAN TUMOR CELLS A dissertation submitted to Kent State University in partial fulfillment of the requirements for the degree of Doctor of Philosophy by Boren Lin May 2006 Dissertation written by Boren Lin B.S., Tunghai University, 1996 M.S., Kent State University, 2003 Ph. D., Kent State University, 2006 Approved by Dr. Chun-che Tsai , Chair, Doctoral Dissertation Committee Dr. Bryan R. G. Williams , Co-chair, Doctoral Dissertation Committee Dr. Johnnie W. Baker , Members, Doctoral Dissertation Committee Dr. James L. Blank , Dr. Bansidhar Datta , Dr. Gail C. Fraizer , Accepted by Dr. Robert V. Dorman , Director, School of Biomedical Sciences Dr. John R. Stalvey , Dean, College of Arts and Sciences ii TABLE OF CONTENTS LIST OF FIGURES……………………………………………………………….………v LIST OF TABLES……………………………………………………………………….vii ACKNOWLEDGEMENTS….………………………………………………………….viii I INTRODUCTION….………………………………………………….1 Background and Significance……………………………………………………..1 Specific Aims………………………………………………………………………12 II MATERIALS AND METHODS.…………………………………………….16 Cell Culture and Compounds…….……………….…………………………….….16 MTT Cell Viability Assay………………………………………………………….16 Trypan Blue Exclusive Assay……………………………………………………...18 Flow Cytometry for Cell Cycle Analysis……………..……………....……………19 DNA Fragmentation Assay……………………………………………...…………23 Caspase-3 Activity Assay………………………………...……….….…….………24 Annexin V-FITC Staining Assay…………………………………..…...….………28 NF-kappa B p65 Activity Assay……………………………………..………….…29 -

Ubiquitin-Dependent Regulation of the WNT Cargo Protein EVI/WLS Handelt Es Sich Um Meine Eigenständig Erbrachte Leistung

DISSERTATION submitted to the Combined Faculty of Natural Sciences and Mathematics of the Ruperto-Carola University of Heidelberg, Germany for the degree of Doctor of Natural Sciences presented by Lucie Magdalena Wolf, M.Sc. born in Nuremberg, Germany Date of oral examination: 2nd February 2021 Ubiquitin-dependent regulation of the WNT cargo protein EVI/WLS Referees: Prof. Dr. Michael Boutros apl. Prof. Dr. Viktor Umansky If you don’t think you might, you won’t. Terry Pratchett This work was accomplished from August 2015 to November 2020 under the supervision of Prof. Dr. Michael Boutros in the Division of Signalling and Functional Genomics at the German Cancer Research Center (DKFZ), Heidelberg, Germany. Contents Contents ......................................................................................................................... ix 1 Abstract ....................................................................................................................xiii 1 Zusammenfassung .................................................................................................... xv 2 Introduction ................................................................................................................ 1 2.1 The WNT signalling pathways and cancer ........................................................................ 1 2.1.1 Intercellular communication ........................................................................................ 1 2.1.2 WNT ligands are conserved morphogens ................................................................. -

The Role of the Transmembrane RING Finger Proteins in Cellular and Organelle Function

Membranes 2011, 1, 354-393; doi:10.3390/membranes1040354 OPEN ACCESS membranes ISSN 2077-0375 www.mdpi.com/journal/membranes Review The Role of the Transmembrane RING Finger Proteins in Cellular and Organelle Function Nobuhiro Nakamura Department of Biological Sciences, Tokyo Institute of Technology, 4259-B13 Nagatsuta-cho, Midori-ku, Yokohama 226-8501, Japan; E-Mail: [email protected]; Tel.: +81-45-924-5726; Fax: +81-45-924-5824 Received: 26 October 2011; in revised form: 24 November 2011 / Accepted: 5 December 2011 / Published: 9 December 2011 Abstract: A large number of RING finger (RNF) proteins are present in eukaryotic cells and the majority of them are believed to act as E3 ubiquitin ligases. In humans, 49 RNF proteins are predicted to contain transmembrane domains, several of which are specifically localized to membrane compartments in the secretory and endocytic pathways, as well as to mitochondria and peroxisomes. They are thought to be molecular regulators of the organization and integrity of the functions and dynamic architecture of cellular membrane and membranous organelles. Emerging evidence has suggested that transmembrane RNF proteins control the stability, trafficking and activity of proteins that are involved in many aspects of cellular and physiological processes. This review summarizes the current knowledge of mammalian transmembrane RNF proteins, focusing on their roles and significance. Keywords: endocytosis; ERAD; immune regulation; membrane trafficking; mitochondrial dynamics; proteasome; quality control; RNF; ubiquitin; ubiquitin ligase 1. Introduction Ubiquitination is a posttranslational modification that mediates the covalent attachment of ubiquitin (Ub), a small, highly conserved, cytoplasmic protein of 76 amino acid residues, to target proteins. -

Transcriptional Reporter Plasmids Derived from 5’-Regulatory Regions of Genes

SUPPLEMENTARY TABLES Supplementary Table I: Transcriptional reporter plasmids derived from 5’-regulatory regions of genes. Promoter –Luciferase Constructs 5’ Regulatory Region Plasmid (R.E. Sites) Description Mouse SP-A, Sftpa-0.45-luc -399/+45 bps pGL3 (Hind III / Pst I) 1 Mouse SP-B, Sftpb-1.8-luc -1797/+42 bps pGL3 (Hind III / Sal I) 2 Mouse SP-C, Sftpc-4.8-luc -4800/+18 bps pGL2 (Xho I / Xho I) 3 Mouse Abca3, Abca3-2.6-luc -2591/+11 pGL3 (Kpn I / Xho I) This Study Mouse Foxa1, Foxa1-3.4-luc -3400/+47 bps pGL3 (Sac I /Xma I) This Study Mouse Foxa2, Foxa2-1.6-luc -1529/+68 pGL3 (Nhe I/ Hind III) 4 Supplemental References for Table I 1. Bruno, M.D., Korfhagen, T.R., Liu, C., Morrisey, E.E. & Whitsett, J.A. GATA-6 activates transcription of surfactant protein A. J Biol Chem 275, 1043-9 (2000). 2. Sever-Chroneos, Z., Bachurski, C.J., Yan, C. & Whitsett, J.A. Regulation of mouse SP-B gene promoter by AP-1 family members. Am J Physiol 277, L79-88 (1999). 3. Kelly, S.E., Bachurski, C.J., Burhans, M.S. & Glasser, S.W. Transcription of the lung- specific surfactant protein C gene is mediated by thyroid transcription factor 1. J Biol Chem 271, 6881-8 (1996). 4. Kaestner, K.H., Montoliu, L., Kern, H., Thulke, M. & Schutz, G. Universal beta- galactosidase cloning vectors for promoter analysis and gene targeting. Gene 148, 67-70 (1994). Supplementary Table II: Oligonucleotides used in EMSA, ChIP and Real-Time PCR Assays. -

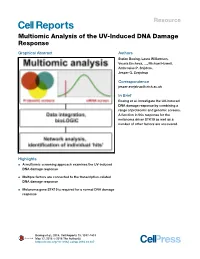

Multiomic Analysis of the UV-Induced DNA Damage Response

Resource Multiomic Analysis of the UV-Induced DNA Damage Response Graphical Abstract Authors Stefan Boeing, Laura Williamson, Vesela Encheva, ..., Michael Howell, Ambrosius P. Snijders, Jesper Q. Svejstrup Correspondence [email protected] In Brief Boeing et al. investigate the UV-induced DNA damage response by combining a range of proteomic and genomic screens. A function in this response for the melanoma driver STK19 as well as a number of other factors are uncovered. Highlights d A multiomic screening approach examines the UV-induced DNA damage response d Multiple factors are connected to the transcription-related DNA damage response d Melanoma gene STK19 is required for a normal DNA damage response Boeing et al., 2016, Cell Reports 15, 1597–1610 May 17, 2016 ª 2016 The Author(s) http://dx.doi.org/10.1016/j.celrep.2016.04.047 Cell Reports Resource Multiomic Analysis of the UV-Induced DNA Damage Response Stefan Boeing,1,5 Laura Williamson,1 Vesela Encheva,2 Ilaria Gori,3 Rebecca E. Saunders,3 Rachael Instrell,3 Ozan Aygun,€ 1,7 Marta Rodriguez-Martinez,1 Juston C. Weems,4 Gavin P. Kelly,5 Joan W. Conaway,4,6 Ronald C. Conaway,4,6 Aengus Stewart,5 Michael Howell,3 Ambrosius P. Snijders,2 and Jesper Q. Svejstrup1,* 1Mechanisms of Transcription Laboratory, the Francis Crick Institute, Clare Hall Laboratories, South Mimms EN6 3LD, UK 2Protein Analysis and Proteomics Laboratory, the Francis Crick Institute, Clare Hall Laboratories, South Mimms EN6 3LD, UK 3High Throughput Screening Laboratory, the Francis Crick Institute, 44 Lincoln’s -

RNF12 Activates Xist and Is Essential for X Chromosome Inactivation

RNF12 Activates Xist and Is Essential for X Chromosome Inactivation Tahsin Stefan Barakat1, Nilhan Gunhanlar1, Cristina Gontan Pardo1, Eskeatnaf Mulugeta Achame1, Mehrnaz Ghazvini1,2, Ruben Boers1, Annegien Kenter1, Eveline Rentmeester1, J. Anton Grootegoed1, Joost Gribnau1* 1 Department of Reproduction and Development, Erasmus MC, University Medical Center, Rotterdam, The Netherlands, 2 Erasmus Stem Cell Institute, Erasmus MC, University Medical Center, Rotterdam, The Netherlands Abstract In somatic cells of female placental mammals, one of the two X chromosomes is transcriptionally silenced to accomplish an equal dose of X-encoded gene products in males and females. Initiation of random X chromosome inactivation (XCI) is thought to be regulated by X-encoded activators and autosomally encoded suppressors controlling Xist. Spreading of Xist RNA leads to silencing of the X chromosome in cis. Here, we demonstrate that the dose dependent X-encoded XCI activator RNF12/RLIM acts in trans and activates Xist. We did not find evidence for RNF12-mediated regulation of XCI through Tsix or the Xist intron 1 region, which are both known to be involved in inhibition of Xist. In addition, we found that Xist intron 1, which contains a pluripotency factor binding site, is not required for suppression of Xist in undifferentiated ES cells. Analysis of female Rnf122/2 knockout ES cells showed that RNF12 is essential for initiation of XCI and is mainly involved in the regulation of Xist. We conclude that RNF12 is an indispensable factor in up-regulation of Xist transcription, thereby leading to initiation of random XCI. Citation: Barakat TS, Gunhanlar N, Gontan Pardo C, Mulugeta Achame E, Ghazvini M, et al. -

A Role for the RNA Pol II–Associated PAF Complex in AID-Induced Immune Diversification

Article A role for the RNA pol II–associated PAF complex in AID-induced immune diversification Katharina L. Willmann,1,2 Sara Milosevic,4 Siim Pauklin,1 Kerstin-Maike Schmitz,2 Gopinath Rangam,1,2 Maria T. Simon,1 Sarah Maslen,3 Mark Skehel,3 Isabelle Robert,4 Vincent Heyer,4 Ebe Schiavo,4 Bernardo Reina-San-Martin,4 Svend K. Petersen-Mahrt1,2 1DNA Editing Laboratory, London Research Institute, South Mimms EN6 3LD, England, UK 2DNA Editing in Immunity and Epigenetics, IFOM-Fondazione Instituto FIRC di Oncologia Molecolare, Via Adamello 16, 20139 Milano, Italy 3Protein Analysis and Proteomics Laboratory, London Research Institute, South Mimms EN6 3LD, England, UK 4Institut de Génétique et de Biologie Moléculaire et Cellulaire (IGBMC), Institut National de la Santé et de la Recherche Médicale (INSERM) U964, Centre National de la Recherche Scientifique (CNRS) UMR7104, Université de Strasbourg, 67404 Illkirch, France Antibody diversification requires the DNA deaminase AID to induce DNA instability at immunoglobulin (Ig) loci upon B cell stimulation. For efficient cytosine deamination, AID requires single-stranded DNA and needs to gain access to Ig loci, with RNA pol II transcrip- tion possibly providing both aspects. To understand these mechanisms, we isolated and characterized endogenous AID-containing protein complexes from the chromatin of diversi- fying B cells. The majority of proteins associated with AID belonged to RNA polymerase II elongation and chromatin modification complexes. Besides the two core polymerase sub- units, members of the PAF complex, SUPT5H, SUPT6H, and FACT complex associated with AID. We show that AID associates with RNA polymerase-associated factor 1 (PAF1) through its N-terminal domain, that depletion of PAF complex members inhibits AID-induced immune diversification, and that the PAF complex can serve as a binding platform for AID on chromatin. -

Oncogenic Potential of the Dual-Function Protein MEX3A

biology Review Oncogenic Potential of the Dual-Function Protein MEX3A Marcell Lederer 1,*, Simon Müller 1, Markus Glaß 1 , Nadine Bley 1, Christian Ihling 2, Andrea Sinz 2 and Stefan Hüttelmaier 1 1 Charles Tanford Protein Center, Faculty of Medicine, Institute of Molecular Medicine, Section for Molecular Cell Biology, Martin Luther University Halle-Wittenberg, Kurt-Mothes-Str. 3a, 06120 Halle, Germany; [email protected] (S.M.).; [email protected] (M.G.).; [email protected] (N.B.); [email protected] (S.H.) 2 Center for Structural Mass Spectrometry, Department of Pharmaceutical Chemistry & Bioanalytics, Institute of Pharmacy, Martin Luther University Halle-Wittenberg, Kurt-Mothes-Str. 3, 06120 Halle (Saale), Germany; [email protected] (C.I.); [email protected] (A.S.) * Correspondence: [email protected] Simple Summary: RNA-binding proteins (RBPs) are involved in the post-transcriptional control of gene expression, modulating the splicing, turnover, subcellular sorting and translation of (m)RNAs. Dysregulation of RBPs, for instance, by deregulated expression in cancer, disturbs key cellular processes such as proliferation, cell cycle progression or migration. Accordingly, RBPs contribute to tumorigenesis. Members of the human MEX3 protein family harbor RNA-binding capacity and E3 ligase activity. Thus, they presumably combine post-transcriptional and post-translational regulatory mechanisms. In this review, we discuss recent studies to emphasize emerging evidence for a pivotal role of the MEX3 protein family, in particular MEX3A, in human cancer. Citation: Lederer, M.; Müller, S.; Glaß, M.; Bley, N.; Ihling, C.; Sinz, A.; Abstract: MEX3A belongs to the MEX3 (Muscle EXcess) protein family consisting of four members Hüttelmaier, S.