Lecture Notes to Be Used in Conjunction with 233 Computational

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Linear Independence, Span, and Basis of a Set of Vectors What Is Linear Independence?

LECTURE NOTES · Purdue University MA 26500 Kyle Kloster Linear Algebra October 22, 2014 Linear Independence, Span, and Basis of a Set of Vectors What is linear independence? A set of vectors $S = \{v_1, \dots, v_k\}$ is linearly independent if none of the vectors $v_i$ can be written as a linear combination of the other vectors, i.e. in the form $v_j = \alpha_1 v_1 + \cdots + \alpha_k v_k$ with the $v_j$ term omitted from the sum. Suppose the vector $v_j$ can be written as a linear combination of the other vectors, i.e. there exist scalars $\alpha_i$ such that $v_j = \alpha_1 v_1 + \cdots + \alpha_k v_k$ holds. (This is equivalent to saying that the vectors $v_1, \dots, v_k$ are linearly dependent.) We can subtract $v_j$ from both sides to get an expression $0 = \alpha_1 v_1 + \cdots + \alpha_k v_k$ (where the term $v_j$ now appears on the right-hand side, with coefficient $-1$, so not all coefficients are zero). In other words, the condition that "the set of vectors $S = \{v_1, \dots, v_k\}$ is linearly dependent" is equivalent to the condition that there exist $\alpha_i$, not all of which are zero, such that $$0 = \begin{bmatrix} v_1 & v_2 & \cdots & v_k \end{bmatrix} \begin{bmatrix} \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_k \end{bmatrix}.$$ More concisely, form the matrix $V$ whose columns are the vectors $v_i$. Then the set $S$ of vectors $v_i$ is a linearly dependent set if there is a nonzero solution $x$ such that $Vx = 0$. This means that the condition that "the set of vectors $S = \{v_1, \dots, v_k\}$ is linearly independent" is equivalent to the condition that "the only solution $x$ to the equation $Vx = 0$ is the zero vector, i.e. $x = 0$." How do you determine if a set is lin. -

Discover Linear Algebra Incomplete Preliminary Draft

Discover Linear Algebra Incomplete Preliminary Draft Date: November 28, 2017 László Babai in collaboration with Noah Halford All rights reserved. Approved for instructional use only. Commercial distribution prohibited. © 2016 László Babai. Last updated: November 10, 2016 Preface TO BE WRITTEN. This chapter last updated August 21, 2016. Contents: Notation. Part I, Matrix Theory: Introduction to Part I. Chapter 1, (F, R) Column Vectors: 1.1 (F) Column vector basics; 1.1.1 The domain of scalars; 1.2 (F) Subspaces and span; 1.3 (F) Linear independence and the First Miracle of Linear Algebra; 1.4 (F) Dot product; 1.5 (R) Dot product over R; 1.6 (F) Additional exercises. Chapter 2, (F) Matrices: 2.1 Matrix basics; 2.2 Matrix multiplication; 2.3 Arithmetic of diagonal and triangular matrices; 2.4 Permutation Matrices; 2.5 Additional exercises. Chapter 3, (F) Matrix Rank: 3.1 Column and row rank; 3.2 Elementary operations and Gaussian elimination; 3.3 Invariance of column and row rank, the Second Miracle of Linear Algebra; 3.4 Matrix rank and invertibility; 3.5 Codimension (optional); 3.6 Additional exercises. Chapter 4, (F) Theory of Systems of Linear Equations I: Qualitative Theory: 4.1 Homogeneous systems of linear equations; 4.2 General systems of linear equations. Chapter 5, (F, R) Affine and Convex Combinations (optional): 5.1 (F) Affine combinations. -

Linear Independence, the Wronskian, and Variation of Parameters

LINEAR INDEPENDENCE, THE WRONSKIAN, AND VARIATION OF PARAMETERS JAMES KEESLING In this post we determine when a set of solutions of a linear differential equation is linearly independent. We first discuss the linear space of solutions for a homogeneous differential equation. 1. Homogeneous Linear Differential Equations We start with homogeneous linear nth-order ordinary differential equations with general coefficients. The form for the nth-order type of equation is the following. $$a_n(t)\frac{d^n x}{dt^n} + a_{n-1}(t)\frac{d^{n-1} x}{dt^{n-1}} + \cdots + a_0(t)\,x = 0 \tag{1}$$ It is straightforward to solve such an equation if the functions $a_i(t)$ are all constants. For general functions as above, however, it may not be so easy. We do have a principle that is useful. Because the equation is linear and homogeneous, if we have a set of solutions $\{x_1(t), \dots, x_n(t)\}$, then any linear combination of the solutions is also a solution. That is, $$x(t) = C_1 x_1(t) + C_2 x_2(t) + \cdots + C_n x_n(t) \tag{2}$$ is also a solution for any choice of constants $\{C_1, C_2, \dots, C_n\}$. Now if the solutions $\{x_1(t), \dots, x_n(t)\}$ are linearly independent, then (2) is the general solution of the differential equation. We will explain why later. What does it mean for the functions $\{x_1(t), \dots, x_n(t)\}$ to be linearly independent? The simple straightforward answer is that $$C_1 x_1(t) + C_2 x_2(t) + \cdots + C_n x_n(t) = 0 \tag{3}$$ implies that $C_1 = 0$, $C_2 = 0$, ..., and $C_n = 0$, where the $C_i$'s are arbitrary constants. This is the definition, but it is not so easy to determine from it just when the condition holds to show that a given set of functions $\{x_1(t), x_2(t), \dots, x_n(t)\}$ is linearly independent. -

Span, Linear Independence and Basis Rank and Nullity

Remarks for Exam 2 in Linear Algebra. Span, linear independence and basis: The span of a set of vectors is the set of all linear combinations of the vectors. A set of vectors is linearly independent if the only solution to $c_1 v_1 + \cdots + c_k v_k = 0$ is $c_i = 0$ for all $i$. Given a set of vectors, you can determine if they are linearly independent by writing the vectors as the columns of the matrix $A$, and solving $Ax = 0$. If there are any non-zero solutions, then the vectors are linearly dependent. If the only solution is $x = 0$, then they are linearly independent. A basis for a subspace $S$ of $\mathbb{R}^n$ is a set of vectors that spans $S$ and is linearly independent. There are many bases, but every basis must have exactly $k = \dim(S)$ vectors. A spanning set in $S$ must contain at least $k$ vectors, and a linearly independent set in $S$ can contain at most $k$ vectors. A spanning set in $S$ with exactly $k$ vectors is a basis. A linearly independent set in $S$ with exactly $k$ vectors is a basis. Rank and nullity: The span of the rows of matrix $A$ is the row space of $A$. The span of the columns of $A$ is the column space $C(A)$. The row and column spaces always have the same dimension, called the rank of $A$. Let $r = \operatorname{rank}(A)$. Then $r$ is the maximal number of linearly independent row vectors, and the maximal number of linearly independent column vectors. So for an $m \times n$ matrix $A$, if $r < n$ then the columns are linearly dependent; if $r < m$ then the rows are linearly dependent. -
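The rank test described in the excerpt above can be sketched in a few lines. This is a minimal illustration, not code from the notes: the function names `rank` and `is_independent` are made up here, and exact `Fraction` arithmetic is assumed so that no floating-point tolerance is needed.

```python
from fractions import Fraction

def rank(rows):
    """Rank of a matrix (list of rows) by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0  # number of pivots found so far
    for col in range(len(m[0]) if m else 0):
        # Look for a row at or below position r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        # Clear the entries below the pivot.
        for i in range(r + 1, len(m)):
            factor = m[i][col] / m[r][col]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r

def is_independent(vectors):
    """k vectors are independent iff the matrix built from them has rank k.

    Row rank equals column rank, so passing the vectors as rows is fine.
    """
    return rank(vectors) == len(vectors)

print(is_independent([[1, 0, 2], [0, 1, 1]]))  # True
print(is_independent([[1, 2, 3], [2, 4, 6]]))  # False: second = 2 * first
```

Comparing the rank with the number of vectors is the same check as asking whether $Ax = 0$ has only the zero solution.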

Homogeneous Systems (1.5) Linear Independence and Dependence (1.7)

Math 20F, 2015SS1 / TA: Jor-el Briones / Sec: A01 / Handout Page 1 of 3 Homogeneous systems (1.5) Homogeneous systems are linear systems of the form $Ax = 0$, where $0$ is the zero vector. Given a system $Ax = b$, suppose $x = \alpha + t_1\alpha_1 + t_2\alpha_2 + \cdots + t_k\alpha_k$ is a solution (in parametric form) to the system, for any values of $t_1, t_2, \dots, t_k$. Then $\alpha$ is a solution to the system $Ax = b$ (seen by setting $t_1 = \cdots = t_k = 0$), and $\alpha_1, \alpha_2, \dots, \alpha_k$ are solutions to the homogeneous system $Ax = 0$. Terms you should know. The zero solution (trivial solution): The zero solution is the zero vector (a vector with all entries being 0), which is always a solution to the homogeneous system. Particular solution: Given a system $Ax = b$, suppose $x = \alpha + t_1\alpha_1 + t_2\alpha_2 + \cdots + t_k\alpha_k$ is a solution (in parametric form) to the system; $\alpha$ is the particular solution to the system. The other terms constitute the solution to the associated homogeneous system $Ax = 0$. Properties of a Homogeneous System: 1. A homogeneous system is ALWAYS consistent, since the zero solution, aka the trivial solution, is always a solution to that system. 2. A homogeneous system with at least one free variable has infinitely many solutions. 3. A homogeneous system with more unknowns than equations has infinitely many solutions. 4. If $\alpha_1, \alpha_2, \dots, \alpha_k$ are solutions to a homogeneous system, then ANY linear combination of $\alpha_1, \alpha_2, \dots, \alpha_k$ is also a solution to the homogeneous system. Important theorems to know: Theorem. (Chapter 1, Theorem 6) Given a consistent system $Ax = b$, suppose $\alpha$ is a solution to the system. -

Linear Algebra Review

Linear Algebra Review Kaiyu Zheng October 2017 Linear algebra is fundamental for many areas in computer science. This document aims at providing a reference (mostly for myself) when I need to remember some concepts or examples. Instead of a collection of facts as the Matrix Cookbook, this document is more gentle like a tutorial. Most of the content comes from my notes while taking the undergraduate linear algebra course (Math 308) at the University of Washington. Contents on more advanced topics are collected from reading different sources on the Internet. Contents: 1 Linear System of Equations. 2 Vectors: 2.1 Linear independence; 2.2 Linear dependence; 2.3 Linear transformation. 3 Matrix Algebra: 3.1 Addition; 3.2 Scalar Multiplication; 3.3 Matrix Multiplication; 3.4 Transpose; 3.4.1 Conjugate ...; 3.8 Exponential and Logarithm; 3.9 Conversion Between Matrix Notation and Summation. 4 Vector Spaces: 4.1 Determinant; 4.2 Kernel; 4.3 Basis; 4.4 Change of Basis; 4.5 Dimension, Row & Column Space, and Rank. 5 Eigen: 5.1 Multiplicity of ... 7 Special Matrices: 7.1 Block Matrix; 7.2 Orthogonal; 7.3 Diagonal; 7.4 Diagonalizable; 7.5 Symmetric; 7.6 Positive-Definite; 7.7 Singular Value Decomposition; 7.8 Similar; 7.9 Jordan Normal Form; 7.10 Hermitian; 7.11 Discrete Fourier Transform. 8 Matrix Calculus: 8.1 Differentiation; 8.2 Jacobian ... -

A SHORT PROOF of the EXISTENCE of JORDAN NORMAL FORM Let V Be a Finite-Dimensional Complex Vector Space and Let T : V → V Be A

A SHORT PROOF OF THE EXISTENCE OF JORDAN NORMAL FORM MARK WILDON Let $V$ be a finite-dimensional complex vector space and let $T : V \to V$ be a linear map. A fundamental theorem in linear algebra asserts that there is a basis of $V$ in which $T$ is represented by a matrix in Jordan normal form $$\begin{pmatrix} J_1 & 0 & \cdots & 0 \\ 0 & J_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & J_k \end{pmatrix}$$ where each $J_i$ is a matrix of the form $$\begin{pmatrix} \lambda & 1 & \cdots & 0 & 0 \\ 0 & \lambda & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & \lambda & 1 \\ 0 & 0 & \cdots & 0 & \lambda \end{pmatrix}$$ for some $\lambda \in \mathbb{C}$. We shall assume that the usual reduction to the case where some power of $T$ is the zero map has been made. (See [1, §58] for a characteristically clear account of this step.) After this reduction, it is sufficient to prove the following theorem. Theorem 1. If $T : V \to V$ is a linear transformation of a finite-dimensional vector space such that $T^m = 0$ for some $m \ge 1$, then there is a basis of $V$ of the form $$u_1, Tu_1, \dots, T^{a_1 - 1}u_1, \;\dots,\; u_k, Tu_k, \dots, T^{a_k - 1}u_k$$ where $T^{a_i} u_i = 0$ for $1 \le i \le k$. At this point all the proofs the author has seen (even Halmos' in [1, §57]) become unnecessarily long-winded. In this note we present a simple proof which leads to a straightforward algorithm for finding the required basis. Date: December 2007. Proof. We work by induction on $\dim V$. For the inductive step we may assume that $\dim V \ge 1$. -

Lecture 29: Jordan Chains & Tableaux

Jordan chain. Aim lecture: We introduce the notions of Jordan chains & Jordan form tableaux, which are the key notions to proving the Jordan canonical form theorem. Throughout this lecture we fix the following notn. Let $T : V \to V$ be linear & $\lambda \in \mathbb{F}$ be an e-value & $N = T - \lambda I$. Defn: Let $v \in E_\lambda(n) - E_\lambda(n-1)$, so $N^n v = 0$ but $N^{n-1} v \neq 0$. A Jordan chain of length $n$ is a row matrix of the form $C = (N^{n-1}v \;\; N^{n-2}v \;\; \dots \;\; v) \in V^n$. We say $v$ is a seed for the Jordan chain & $N^i v$ are vectors of the chain. The Jordan chain space associated to $C$ is $\operatorname{im}(C : \mathbb{F}^n \to V) \le V$. E.g. $T = J_3(\lambda)$. Daniel Chan (UNSW) Lecture 29: Jordan chains & tableaux Semester 2 2012. T-invariance of Jordan chain spaces. Prop: Let $C = (v_{n-1} \;\; v_{n-2} \;\; \dots \;\; v_0)$ be a Jordan chain. The Jordan chain space $\operatorname{im} C$ is $T$-invariant. In fact (1) $T v_i = v_{i+1} + \lambda v_i$ for $i = 0, \dots, n-2$; (2) $T v_{n-1} = \lambda v_{n-1}$. Proof. By our criterion for $T$-invariance lect. 20, suffice prove 1) & 2). But $v_i = N^i v_0$, so $T v_i = (N + \lambda I) v_i = N v_i + \lambda v_i = N N^i v_0 + \lambda v_i = v_{i+1} + \lambda v_i$ if we define $v_n = N^n v_0 = 0$. The propn follows. Linear independence of Jordan chain. Prop: Let $C = (N^{n-1}v \;\; N^{n-2}v \;\; \dots \;\; v)$ be a Jordan chain of length $n$. -
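To make the proposition concrete, here is a small numerical check for the example $T = J_3(\lambda)$ mentioned in the excerpt. It is only a sketch: the value $\lambda = 2$, the seed `v0`, and the helper `matvec` are choices made for this illustration and do not come from the lecture.

```python
from fractions import Fraction

def matvec(M, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in M]

lam = Fraction(2)  # hypothetical eigenvalue chosen for the example
T = [[lam, 1, 0],
     [0, lam, 1],
     [0, 0, lam]]  # the Jordan block J_3(lam)
# N = T - lam * I
N = [[T[i][j] - (lam if i == j else 0) for j in range(3)] for i in range(3)]

v0 = [0, 0, 1]       # seed: N^3 v0 = 0 but N^2 v0 != 0
v1 = matvec(N, v0)   # N v0
v2 = matvec(N, v1)   # N^2 v0
v3 = matvec(N, v2)   # N^3 v0, the zero vector

def shifted(v_next, v):
    """Return v_next + lam * v."""
    return [a + lam * b for a, b in zip(v_next, v)]

# T v_i = v_{i+1} + lam * v_i, with v_3 = 0 covering the last case.
print(matvec(T, v0) == shifted(v1, v0))  # True
print(matvec(T, v1) == shifted(v2, v1))  # True
print(matvec(T, v2) == shifted(v3, v2))  # True
```

With this seed the chain $(v_2, v_1, v_0)$ is the standard basis, which is why $T$ already appears as a single Jordan block.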

Max-algebra - the Linear Algebra of Combinatorics?

Max-algebra - the linear algebra of combinatorics? Peter Butkovič School of Mathematics and Statistics The University of Birmingham Edgbaston Birmingham B15 2TT United Kingdom ABSTRACT Let $a \oplus b = \max(a, b)$, $a \otimes b = a + b$ for $a, b \in \overline{\mathbb{R}} := \mathbb{R} \cup \{-\infty\}$. By max-algebra we understand the analogue of linear algebra developed for the pair of operations $(\oplus, \otimes)$ extended to matrices and vectors. Max-algebra, which has been studied for more than 40 years, is an attractive way of describing a class of nonlinear problems appearing for instance in machine-scheduling, information technology and discrete-event dynamic systems. This paper focuses on presenting a number of links between basic max-algebraic problems like systems of linear equations, eigenvalue-eigenvector problem, linear independence, regularity and characteristic polynomial on one hand and combinatorial or combinatorial optimisation problems on the other hand. This indicates that max-algebra may be regarded as a linear-algebraic encoding of a class of combinatorial problems. The paper is intended for wider readership including researchers not familiar with max-algebra. 1. Introduction Let $a \oplus b = \max(a, b)$ and $a \otimes b = a + b$ for $a, b \in \overline{\mathbb{R}} := \mathbb{R} \cup \{-\infty\}$. By max-algebra we understand in this paper the analogue of linear algebra developed for the pair of operations $(\oplus, \otimes)$ extended to matrices and vectors formally in the same way as in linear algebra, that is if $A = (a_{ij})$, $B = (b_{ij})$ and $C = (c_{ij})$ are matrices with elements from $\overline{\mathbb{R}}$ of compatible sizes, we write $C = A \oplus B$ if $c_{ij} = a_{ij} \oplus b_{ij}$ for all $i, j$, $C = A \otimes B$ if $c_{ij} = \bigoplus_k a_{ik} \otimes b_{kj} = \max_k (a_{ik} + b_{kj})$ for all $i, j$, and $\alpha \otimes A = (\alpha \otimes a_{ij})$ for $\alpha \in \overline{\mathbb{R}}$. -
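The three matrix operations defined in the introduction translate directly into code. The sketch below is an illustration only: the function names `oplus`, `otimes` and `scale` and the sample matrices are invented here, and $-\infty$ is represented by Python's `float("-inf")`.

```python
NEG_INF = float("-inf")  # the max-algebra "zero": neutral for max, absorbing for +

def oplus(A, B):
    """Entrywise maximum: (A (+) B)_ij = max(a_ij, b_ij)."""
    return [[max(a, b) for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def otimes(A, B):
    """Max-plus product: (A (x) B)_ij = max_k (a_ik + b_kj)."""
    return [[max(A[i][k] + B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def scale(alpha, A):
    """Scalar multiple: (alpha (x) A)_ij = alpha + a_ij."""
    return [[alpha + a for a in row] for row in A]

A = [[1, NEG_INF],
     [0, 2]]
B = [[0, 3],
     [1, NEG_INF]]

print(oplus(A, B))   # [[1, 3], [1, 2]]
print(otimes(A, B))  # [[1, 4], [3, 3]]
print(scale(5, A))   # [[6, -inf], [5, 7]]
```

The product has the same shape as ordinary matrix multiplication, with sums replaced by maxima and products replaced by sums.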

Linear Algebra

LINEAR ALGEBRA T.K.SUBRAHMONIAN MOOTHATHU Contents: 1. Vector spaces and subspaces; 2. Linear independence; 3. Span, basis, and dimension; 4. Linear operators; 5. Linear functionals and the dual space; 6. Linear operators = matrices; 7. Multiplying a matrix left and right; 8. Solving linear equations; 9. Eigenvalues, eigenvectors, and the triangular form; 10. Inner product spaces; 11. Orthogonal projections and least square solutions; 12. Unitary operators: they preserve distances and angles; 13. Orthogonal diagonalization of normal and self-adjoint operators; 14. Singular value decomposition; 15. Determinant of a square matrix: properties; 16. Determinant: existence and expressions; 17. Minimal and characteristic polynomials; 18. Primary decomposition theorem; 19. The Jordan canonical form (JCF); 20. Trace of a square matrix; 21. Quadratic forms. Developing effective techniques to solve systems of linear equations is one important goal of Linear Algebra. Our study of Linear Algebra will proceed through two parallel roads: (i) the theory of linear operators, and (ii) the theory of matrices. Why do we say parallel roads? Well, here is an illustrative example. Consider the following system of linear equations: $$2x_1 + 3x_2 = 7, \qquad 4x_1 - x_2 = 5.$$ We are interested in two questions: is there a solution $(x_1, x_2) \in \mathbb{R}^2$ to the above? And if there is a solution, is the solution unique? This problem can be formulated in two other ways: First reformulation: Let $T : \mathbb{R}^2 \to \mathbb{R}^2$ be $T(x_1, x_2) = (2x_1 + 3x_2, 4x_1 - x_2)$. -
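Both questions about the example system (existence and uniqueness) can be answered with a short computation. The sketch below uses Cramer's rule for a 2 × 2 system, which is a choice made for this illustration rather than the method of the notes, and `solve_2x2` is a hypothetical helper name.

```python
from fractions import Fraction

def solve_2x2(a, b, c, d, e, f):
    """Solve a*x1 + b*x2 = e and c*x1 + d*x2 = f by Cramer's rule.

    Returns the unique solution as exact fractions, or None when the
    determinant is zero (no solution or infinitely many).
    """
    det = a * d - b * c
    if det == 0:
        return None
    return Fraction(e * d - b * f, det), Fraction(a * f - e * c, det)

# The system from the text: 2*x1 + 3*x2 = 7, 4*x1 - x2 = 5.
x1, x2 = solve_2x2(2, 3, 4, -1, 7, 5)
print(x1, x2)                         # 11/7 9/7
print(2 * x1 + 3 * x2, 4 * x1 - x2)   # 7 5
```

The determinant here is $2 \cdot (-1) - 3 \cdot 4 = -14 \neq 0$, so a solution exists and is unique.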

Linear Algebra Review Vectors

Linear Algebra Review By Tim K. Marks UCSD Borrows heavily from: Jana Kosecka [email protected] http://cs.gmu.edu/~kosecka/cs682.html Virginia de Sa Cogsci 108F Linear Algebra review UCSD. Vectors: The length of $x$, a.k.a. the norm or 2-norm of $x$, is $\|x\| = \sqrt{x_1^2 + x_2^2 + \cdots + x_n^2}$, e.g., $\|x\| = \sqrt{3^2 + 2^2 + 5^2} = \sqrt{38}$. Good Review Materials: http://www.imageprocessingbook.com/DIP2E/dip2e_downloads/review_material_downloads.htm (Gonzales & Woods review materials) Chapt. 1: Linear Algebra Review; Chapt. 2: Probability, Random Variables, Random Vectors. Online vector addition demo: http://www.pa.uky.edu/~phy211/VecArith/index.html. Vector Addition: $u + v$. Vector Subtraction: $u - v$. Example (on board). Inner product (dot product) of two vectors: for $a = \begin{bmatrix} 6 \\ 2 \\ -3 \end{bmatrix}$ and $b = \begin{bmatrix} 4 \\ 1 \\ 5 \end{bmatrix}$, $$a \cdot b = a^T b = \begin{bmatrix} 6 & 2 & -3 \end{bmatrix} \begin{bmatrix} 4 \\ 1 \\ 5 \end{bmatrix} = 6 \cdot 4 + 2 \cdot 1 + (-3) \cdot 5 = 11.$$ Inner (dot) Product: The inner product is a SCALAR. Transpose of a Matrix: If $A^T = A$, we say $A$ is symmetric. Matrix Product: $A$ and $B$ must have compatible dimensions. In Matlab: >> A*B. Matrix multiplication is not commutative. Matrix Sum: $A$ and $B$ must have the same dimensions. Determinant of a Matrix: $A$ must be square. Determinant in Matlab. Inverse of a Matrix: If $A$ is a square matrix, the inverse of $A$, called $A^{-1}$, satisfies $AA^{-1} = I$ and $A^{-1}A = I$, where $I$, the identity matrix, is a diagonal matrix with all 1's on the diagonal. -
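The two worked numbers in the excerpt above (the norm $\sqrt{38}$ and the inner product 11) are easy to reproduce. The slides use Matlab; the snippet below is a plain-Python sketch with helper names `dot` and `norm` chosen for this illustration.

```python
import math

def dot(u, v):
    """Inner (dot) product of two vectors of equal length; the result is a scalar."""
    return sum(a * b for a, b in zip(u, v))

def norm(x):
    """Euclidean length (2-norm): the square root of x . x."""
    return math.sqrt(dot(x, x))

print(dot([3, 2, 5], [3, 2, 5]))    # 38, so the norm is sqrt(38)
print(dot([6, 2, -3], [4, 1, 5]))   # 11
print(math.isclose(norm([3, 2, 5]), math.sqrt(38)))  # True
```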

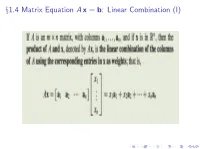

§1.4 Matrix Equation Ax = b: Linear Combination

§1.4 Matrix Equation $Ax = b$: Linear Combination (I). Matrix-vector Product $\iff$ Linear Combination (II). Example: $$A = \begin{bmatrix} 1 & 2 & -1 \\ 0 & -5 & 3 \end{bmatrix}, \qquad x = \begin{bmatrix} 4 \\ 3 \\ 7 \end{bmatrix}.$$ Linear Equations in terms of Matrix-vector Product. Linear Equations in terms of Linear Combinations. Existence of Solutions. Example: Let $$A = \begin{bmatrix} 1 & 3 & 4 \\ -4 & 2 & -6 \\ -3 & -2 & -7 \end{bmatrix} \quad \text{and} \quad b = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix}.$$ Question: For what values of $b_1, b_2, b_3$ is the equation $Ax = b$ consistent? Row-reduce the augmented matrix. The operations $(\ell_2) + 4(\ell_1) \to (\ell_2)$ and $(\ell_3) + 3(\ell_1) \to (\ell_3)$ give $$\left[\begin{array}{ccc|c} 1 & 3 & 4 & b_1 \\ -4 & 2 & -6 & b_2 \\ -3 & -2 & -7 & b_3 \end{array}\right] \Longrightarrow \left[\begin{array}{ccc|c} 1 & 3 & 4 & b_1 \\ 0 & 14 & 10 & b_2 + 4b_1 \\ 0 & 7 & 5 & b_3 + 3b_1 \end{array}\right],$$ and then $(\ell_3) - \tfrac{1}{2}(\ell_2) \to (\ell_3)$ gives $$\left[\begin{array}{ccc|c} 1 & 3 & 4 & b_1 \\ 0 & 14 & 10 & b_2 + 4b_1 \\ 0 & 0 & 0 & b_3 + 3b_1 - \tfrac{1}{2}(b_2 + 4b_1) \end{array}\right].$$ Answer: the equation $Ax = b$ is consistent $\iff b_3 - \tfrac{1}{2} b_2 + b_1 = 0$.
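The consistency condition can be checked numerically by applying the same three row operations to the augmented matrix for a concrete $b$. This is a sketch under assumptions: the function names `eliminate` and `is_consistent` and the sample right-hand sides are invented for the illustration.

```python
from fractions import Fraction

A = [[1, 3, 4],
     [-4, 2, -6],
     [-3, -2, -7]]

def eliminate(b):
    """Apply the three row operations from the example to [A | b]."""
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    M[1] = [y + 4 * x for x, y in zip(M[0], M[1])]               # l2 + 4*l1 -> l2
    M[2] = [y + 3 * x for x, y in zip(M[0], M[2])]               # l3 + 3*l1 -> l3
    M[2] = [y - Fraction(1, 2) * x for x, y in zip(M[1], M[2])]  # l3 - (1/2)*l2 -> l3
    return M

def is_consistent(b):
    """The last row reads 0 = b3 - b2/2 + b1, so that entry must vanish."""
    return eliminate(b)[2][3] == 0

print(is_consistent([8, -8, -12]))  # True: this b equals A times (1, 1, 1)
print(is_consistent([1, 0, 0]))     # False: 0 - 0 + 1 != 0
```

Any $b$ of the form $Ax$ passes the test, since the condition $b_3 - \tfrac{1}{2} b_2 + b_1 = 0$ holds for each column of $A$.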