Section 7.3. Systems of Linear Algebraic Equations; Linear Independence, Eigenvalues, Eigenvectors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MATH 2370, Practice Problems

Kiumars Kaveh

Problem: Prove that an $n \times n$ complex matrix $A$ is diagonalizable if and only if there is a basis consisting of eigenvectors of $A$.
Problem: Let $A : V \to W$ be a one-to-one linear map between two finite-dimensional vector spaces $V$ and $W$. Show that the dual map $A' : W' \to V'$ is surjective.
Problem: Determine if the curve $\{(x, y) \in \mathbb{R}^2 \mid x^2 + y^2 + xy = 10\}$ is an ellipse, a hyperbola, or a union of two lines.
Problem: Show that if a nilpotent matrix is diagonalizable then it is the zero matrix.
Problem: Let $P$ be a permutation matrix. Show that $P$ is diagonalizable. Show that if $\lambda$ is an eigenvalue of $P$ then for some integer $m > 0$ we have $\lambda^m = 1$ (i.e. $\lambda$ is an $m$-th root of unity). Hint: Note that $P^m = I$ for some integer $m > 0$.
Problem: Show that if $\lambda$ is an eigenvalue of an orthogonal matrix $A$ then $|\lambda| = 1$.
Problem: Take a vector $v \in \mathbb{R}^n$ and let $H$ be the hyperplane orthogonal to $v$. Let $R : \mathbb{R}^n \to \mathbb{R}^n$ be the reflection with respect to the hyperplane $H$. Prove that $R$ is a diagonalizable linear map.
Problem: Prove that if $\lambda_1, \lambda_2$ are distinct eigenvalues of a complex matrix $A$ then the intersection of the generalized eigenspaces $E_{\lambda_1}$ and $E_{\lambda_2}$ is zero (this is part of the Spectral Theorem).
Problem: Let $H = (h_{ij})$ be a $2 \times 2$ Hermitian matrix. Use the Minimax Principle to show that if $\lambda_1 \le \lambda_2$ are the eigenvalues of $H$ then $\lambda_1 \le h_{11} \le \lambda_2$.

Linear Independence, Span, and Basis of a Set of Vectors What Is Linear Independence?

LECTURE NOTES · Purdue University · MA 26500 · Kyle Kloster · Linear Algebra · October 22, 2014

What is linear independence? A set of vectors $S = \{v_1, \dots, v_k\}$ is linearly independent if none of the vectors $v_i$ can be written as a linear combination of the other vectors.

Suppose the vector $v_j$ can be written as a linear combination of the other vectors, i.e. there exist scalars $\alpha_i$ such that $v_j = \sum_{i \ne j} \alpha_i v_i$ holds. (This is equivalent to saying that the vectors $v_1, \dots, v_k$ are linearly dependent.) We can subtract $v_j$ to move it over to the other side and get an expression $0 = \alpha_1 v_1 + \cdots + \alpha_k v_k$, where the term $v_j$ now appears on the right-hand side with coefficient $\alpha_j = -1$. In other words, the condition that "the set of vectors $S = \{v_1, \dots, v_k\}$ is linearly dependent" is equivalent to the condition that there exist $\alpha_i$, not all of which are zero, such that

$$0 = \begin{bmatrix} v_1 & v_2 & \cdots & v_k \end{bmatrix} \begin{bmatrix} \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_k \end{bmatrix}.$$

More concisely, form the matrix $V$ whose columns are the vectors $v_i$. Then the set $S$ of vectors $v_i$ is a linearly dependent set if there is a nonzero solution $x$ such that $Vx = 0$. This means that the condition that "the set of vectors $S = \{v_1, \dots, v_k\}$ is linearly independent" is equivalent to the condition that "the only solution $x$ to the equation $Vx = 0$ is the zero vector, i.e. $x = 0$."

How do you determine if a set is lin.

Discover Linear Algebra Incomplete Preliminary Draft

Discover Linear Algebra. Incomplete Preliminary Draft. Date: November 28, 2017
László Babai, in collaboration with Noah Halford
All rights reserved. Approved for instructional use only. Commercial distribution prohibited. © 2016 László Babai. Last updated: November 10, 2016

Preface: TO BE WRITTEN. This chapter last updated August 21, 2016.

Contents
Notation
I Matrix Theory
Introduction to Part I
1 (F, R) Column Vectors
  1.1 (F) Column vector basics
    1.1.1 The domain of scalars
  1.2 (F) Subspaces and span
  1.3 (F) Linear independence and the First Miracle of Linear Algebra
  1.4 (F) Dot product
  1.5 (R) Dot product over R
  1.6 (F) Additional exercises
2 (F) Matrices
  2.1 Matrix basics
  2.2 Matrix multiplication
  2.3 Arithmetic of diagonal and triangular matrices
  2.4 Permutation Matrices
  2.5 Additional exercises
3 (F) Matrix Rank
  3.1 Column and row rank
  3.2 Elementary operations and Gaussian elimination
  3.3 Invariance of column and row rank, the Second Miracle of Linear Algebra
  3.4 Matrix rank and invertibility
  3.5 Codimension (optional)
  3.6 Additional exercises
4 (F) Theory of Systems of Linear Equations I: Qualitative Theory
  4.1 Homogeneous systems of linear equations
  4.2 General systems of linear equations
5 (F, R) Affine and Convex Combinations (optional)
  5.1 (F) Affine combinations

Linear Independence, the Wronskian, and Variation of Parameters

LINEAR INDEPENDENCE, THE WRONSKIAN, AND VARIATION OF PARAMETERS
JAMES KEESLING

In this post we determine when a set of solutions of a linear differential equation are linearly independent. We first discuss the linear space of solutions for a homogeneous differential equation.

1. Homogeneous Linear Differential Equations

We start with homogeneous linear $n$th-order ordinary differential equations with general coefficients. The form for the $n$th-order type of equation is the following.

$$a_n(t)\,\frac{d^n x}{dt^n} + a_{n-1}(t)\,\frac{d^{n-1} x}{dt^{n-1}} + \cdots + a_0(t)\,x = 0 \tag{1}$$

It is straightforward to solve such an equation if the functions $a_i(t)$ are all constants. For general functions as above, it may not be so easy. However, we do have a principle that is useful. Because the equation is linear and homogeneous, if we have a set of solutions $\{x_1(t), \dots, x_n(t)\}$, then any linear combination of the solutions is also a solution. That is,

$$x(t) = C_1 x_1(t) + C_2 x_2(t) + \cdots + C_n x_n(t) \tag{2}$$

is also a solution for any choice of constants $\{C_1, C_2, \dots, C_n\}$. Now if the solutions $\{x_1(t), \dots, x_n(t)\}$ are linearly independent, then (2) is the general solution of the differential equation. We will explain why later.

What does it mean for the functions $\{x_1(t), \dots, x_n(t)\}$ to be linearly independent? The simple straightforward answer is that

$$C_1 x_1(t) + C_2 x_2(t) + \cdots + C_n x_n(t) = 0 \tag{3}$$

implies that $C_1 = 0$, $C_2 = 0$, ..., and $C_n = 0$, where the $C_i$'s are arbitrary constants. This is the definition, but it is not so easy to determine from it just when the condition holds to show that a given set of functions $\{x_1(t), x_2(t), \dots, x_n(t)\}$ is linearly independent.
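As a small illustration of definition (3), here is a worked check for two functions. The equation $x'' - 3x' + 2x = 0$ and its solutions $e^{t}$ and $e^{2t}$ are an assumed example chosen for this sketch; they do not appear in the snippet above.

```latex
% Assumed example (not from the source): x'' - 3x' + 2x = 0,
% with solutions x_1(t) = e^{t} and x_2(t) = e^{2t}.
\begin{align*}
C_1 e^{t} + C_2 e^{2t} &= 0 \quad \text{for all } t, \\
\text{set } t = 0: \qquad C_1 + C_2 &= 0, \\
\text{differentiate, then set } t = 0: \qquad C_1 + 2C_2 &= 0, \\
\text{subtract the two equations:} \qquad C_2 &= 0, \quad \text{hence } C_1 = 0.
\end{align*}
```

Since the only constants that satisfy (3) are $C_1 = C_2 = 0$, the two functions are linearly independent in the sense of the definition.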

Section 5 Summary

Section 5 summary
Brian Krummel, November 4, 2019

1 Terminology

Eigenvalue; Eigenvector; Eigenspace; Characteristic polynomial; Characteristic equation; Similar matrices; Similarity transformation; Diagonalizable; Matrix of a linear transformation relative to a basis; System of differential equations; Solution to a system of differential equations; Solution set of a system of differential equations; Fundamental solutions; Initial value problem; Trajectory; Decoupled system of differential equations; Repeller / source; Attractor / sink; Saddle point; Spiral point; Ellipse trajectory

2 How to find eigenvalues, eigenvectors, and a similar matrix

1. First we find eigenvalues of $A$ by finding the roots of the characteristic equation $\det(A - \lambda I) = 0$. Note that if $A$ is upper triangular (or lower triangular), then the eigenvalues $\lambda$ of $A$ are just the diagonal entries of $A$ and the multiplicity of each eigenvalue $\lambda$ is the number of times $\lambda$ appears as a diagonal entry of $A$.
2. For each eigenvalue $\lambda$, find a basis of eigenvectors corresponding to the eigenvalue $\lambda$ by row reducing $A - \lambda I$ and finding the solution to $(A - \lambda I)x = 0$ in vector parametric form. Note that for a general $n \times n$ matrix $A$ and any eigenvalue $\lambda$ of $A$, dim(Eigenspace corresp. to $\lambda$) = # free variables of $(A - \lambda I)$ ≤ algebraic multiplicity of $\lambda$.
3. Assuming $A$ has only real eigenvalues, check if $A$ is diagonalizable. If $A$ has $n$ distinct eigenvalues, then $A$ is definitely diagonalizable. More generally, if dim(Eigenspace corresp. to $\lambda$) = algebraic multiplicity of $\lambda$ for each eigenvalue $\lambda$ of $A$, then $A$ is diagonalizable and $\mathbb{R}^n$ has a basis consisting of $n$ eigenvectors of $A$.
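The three steps above can be traced on a small matrix. The matrix $A$ below is an assumed example picked for this sketch, and the name $P$ for the matrix of eigenvectors is likewise an assumption; neither comes from the summary itself.

```latex
% Assumed example (not from the source).
\[
A = \begin{pmatrix} 4 & 1 \\ 2 & 3 \end{pmatrix}
\]
% Step 1: roots of the characteristic equation.
\[
\det(A - \lambda I) = (4 - \lambda)(3 - \lambda) - 2
  = \lambda^2 - 7\lambda + 10 = (\lambda - 2)(\lambda - 5),
\qquad \lambda = 2 \ \text{or} \ \lambda = 5.
\]
% Step 2: row reduce A - \lambda I and solve (A - \lambda I)x = 0.
\[
A - 2I = \begin{pmatrix} 2 & 1 \\ 2 & 1 \end{pmatrix}
  \;\Rightarrow\; x = t \begin{pmatrix} 1 \\ -2 \end{pmatrix},
\qquad
A - 5I = \begin{pmatrix} -1 & 1 \\ 2 & -2 \end{pmatrix}
  \;\Rightarrow\; x = t \begin{pmatrix} 1 \\ 1 \end{pmatrix}.
\]
% Step 3: two distinct eigenvalues, so A is diagonalizable.
\[
P = \begin{pmatrix} 1 & 1 \\ -2 & 1 \end{pmatrix},
\qquad
P^{-1} A P = \begin{pmatrix} 2 & 0 \\ 0 & 5 \end{pmatrix}.
\]
```

Each eigenspace here has one free variable, which matches the algebraic multiplicity 1 of each eigenvalue, so the dimension test in step 3 is also satisfied.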

Diagonalizable Matrix - Wikipedia, the Free Encyclopedia

Diagonalizable matrix. From Wikipedia, the free encyclopedia (redirected from Matrix diagonalization). http://en.wikipedia.org/wiki/Matrix_diagonalization

In linear algebra, a square matrix $A$ is called diagonalizable if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix $P$ such that $P^{-1}AP$ is a diagonal matrix. If $V$ is a finite-dimensional vector space, then a linear map $T : V \to V$ is called diagonalizable if there exists a basis of $V$ with respect to which $T$ is represented by a diagonal matrix. Diagonalization is the process of finding a corresponding diagonal matrix for a diagonalizable matrix or linear map.[1] A square matrix which is not diagonalizable is called defective.

Diagonalizable matrices and maps are of interest because diagonal matrices are especially easy to handle: their eigenvalues and eigenvectors are known and one can raise a diagonal matrix to a power by simply raising the diagonal entries to that same power. Geometrically, a diagonalizable matrix is an inhomogeneous dilation (or anisotropic scaling): it scales the space, as does a homogeneous dilation, but by a different factor in each direction, determined by the scale factors on each axis (diagonal entries).

Contents
1 Characterisation
2 Diagonalization
3 Simultaneous diagonalization
4 Examples
  4.1 Diagonalizable matrices
  4.2 Matrices that are not diagonalizable
  4.3 How to diagonalize a matrix
    4.3.1 Alternative Method
5 An application
  5.1 Particular application
6 Quantum mechanical application
7 See also
8 Notes
9 References
10 External links

Characterisation

The fundamental fact about diagonalizable maps and matrices is expressed by the following: An $n$-by-$n$ matrix $A$ over the field $F$ is diagonalizable if and only if the sum of the dimensions of its eigenspaces is equal to $n$, which is the case if and only if there exists a basis of $F^n$ consisting of eigenvectors of $A$.

Span, Linear Independence and Basis Rank and Nullity

Remarks for Exam 2 in Linear Algebra

Span, linear independence and basis

The span of a set of vectors is the set of all linear combinations of the vectors. A set of vectors is linearly independent if the only solution to $c_1 v_1 + \cdots + c_k v_k = 0$ is $c_i = 0$ for all $i$. Given a set of vectors, you can determine if they are linearly independent by writing the vectors as the columns of the matrix $A$, and solving $Ax = 0$. If there are any non-zero solutions, then the vectors are linearly dependent. If the only solution is $x = 0$, then they are linearly independent.

A basis for a subspace $S$ of $\mathbb{R}^n$ is a set of vectors that spans $S$ and is linearly independent. There are many bases, but every basis must have exactly $k = \dim(S)$ vectors. A spanning set in $S$ must contain at least $k$ vectors, and a linearly independent set in $S$ can contain at most $k$ vectors. A spanning set in $S$ with exactly $k$ vectors is a basis. A linearly independent set in $S$ with exactly $k$ vectors is a basis.

Rank and nullity

The span of the rows of matrix $A$ is the row space of $A$. The span of the columns of $A$ is the column space $C(A)$. The row and column spaces always have the same dimension, called the rank of $A$. Let $r = \operatorname{rank}(A)$. Then $r$ is the maximal number of linearly independent row vectors, and the maximal number of linearly independent column vectors. So for an $m \times n$ matrix $A$, if $r < n$ then the columns are linearly dependent; if $r < m$ then the rows are linearly dependent.
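The test described above (write the vectors as columns of a matrix and compare its rank with the number of vectors) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names `rank` and `columns_independent` are hypothetical, and exact `Fraction` arithmetic is used so that no rounding tolerance is needed.

```python
from fractions import Fraction


def rank(rows):
    """Rank of a matrix (given as a list of rows) by Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    n_cols = len(m[0]) if m else 0
    r = 0  # number of pivots found so far
    for c in range(n_cols):
        # Find a row at or below position r with a nonzero entry in column c.
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[r], m[pivot] = m[pivot], m[r]
        # Clear the entries below the pivot.
        for i in range(r + 1, len(m)):
            factor = m[i][c] / m[r][c]
            m[i] = [a - factor * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def columns_independent(vectors):
    """True if the given vectors (used as matrix columns) are independent."""
    # zip(*vectors) turns the list of vectors into the rows of the matrix
    # whose columns are those vectors.
    a = [list(row) for row in zip(*vectors)]
    return rank(a) == len(vectors)


if __name__ == "__main__":
    print(columns_independent([[1, 0, 0], [0, 1, 0]]))
    print(columns_independent([[1, 2], [2, 4]]))
```

The vectors are independent exactly when the rank equals the number of vectors, which is the statement that $Ax = 0$ has only the zero solution.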

Math 1553 Introduction to Linear Algebra

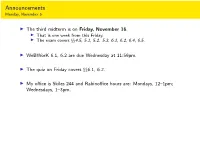

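Announcements, Monday, November 5

- The third midterm is on Friday, November 16. That is one week from this Friday.
- The exam covers §§4.5, 5.1, 5.2, 5.3, 6.1, 6.2, 6.4, 6.5.
- WeBWorK 6.1, 6.2 are due Wednesday at 11:59pm.
- The quiz on Friday covers §§6.1, 6.2.
- My office is Skiles 244 and Rabinoffice hours are: Mondays, 12–1pm; Wednesdays, 1–3pm.

Section 6.4: Diagonalization

Motivation: difference equations. Many real-world linear algebra problems have the form

$$v_1 = Av_0, \quad v_2 = Av_1 = A^2 v_0, \quad v_3 = Av_2 = A^3 v_0, \quad \dots, \quad v_n = Av_{n-1} = A^n v_0.$$

This is called a difference equation. Our toy example about rabbit populations had this form. The question is, what happens to $v_n$ as $n \to \infty$?

- Taking powers of diagonal matrices is easy!
- Taking powers of diagonalizable matrices is still easy!
- Diagonalizing a matrix is an eigenvalue problem.

Powers of Diagonal Matrices

$$D = \begin{pmatrix} 2 & 0 \\ 0 & -1 \end{pmatrix}, \quad D^2 = \begin{pmatrix} 4 & 0 \\ 0 & 1 \end{pmatrix}, \quad D^3 = \begin{pmatrix} 8 & 0 \\ 0 & -1 \end{pmatrix}, \quad \dots, \quad D^n = \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix}.$$

$$D = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac12 & 0 \\ 0 & 0 & \frac13 \end{pmatrix}, \quad D^2 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \frac14 & 0 \\ 0 & 0 & \frac19 \end{pmatrix}, \quad D^3 = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac18 & 0 \\ 0 & 0 & \frac1{27} \end{pmatrix}, \quad \dots, \quad D^n = \begin{pmatrix} (-1)^n & 0 & 0 \\ 0 & \frac1{2^n} & 0 \\ 0 & 0 & \frac1{3^n} \end{pmatrix}.$$

If $D$ is diagonal, then $D^n$ is also diagonal; its diagonal entries are the $n$th powers of the diagonal entries of $D$.

Powers of Matrices that are Similar to Diagonal Ones

What if $A$ is not diagonal? For a matrix of the form $CDC^{-1}$ with $D$ diagonal:

$$(CDC^{-1})(CDC^{-1}) = CD(C^{-1}C)DC^{-1} = CDIDC^{-1} = CD^2C^{-1}$$
$$(CDC^{-1})(CD^2C^{-1}) = CD(C^{-1}C)D^2C^{-1} = CDID^2C^{-1} = CD^3C^{-1}$$
$$\vdots$$
$$(CDC^{-1})^n = CD^nC^{-1}$$

Closed formula in terms of $n$ (easy to compute):

$$\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix} \frac{1}{-2} \begin{pmatrix} -1 & -1 \\ -1 & 1 \end{pmatrix} = \frac12 \begin{pmatrix} 2^n + (-1)^n & 2^n + (-1)^{n+1} \\ 2^n + (-1)^{n+1} & 2^n + (-1)^n \end{pmatrix}.$$

Example. Let $A$ = (first row: $1/2 \;\; 3/2$) ...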
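The identity $(CDC^{-1})^n = CD^nC^{-1}$ can be checked numerically. The sketch below takes $C$ and $D$ from the closed formula in the snippet above and compares the diagonalization route with plain repeated multiplication. The function names are hypothetical, and treating $CDC^{-1}$ as the matrix $A$ of the truncated example is an assumption (its first row, $1/2$ and $3/2$, does agree with the surviving fragment).

```python
from fractions import Fraction


def matmul(x, y):
    """Product of two matrices given as lists of rows."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
        for i in range(len(x))
    ]


# C and its inverse, as they appear in the closed formula of the snippet.
C = [[Fraction(1), Fraction(1)], [Fraction(1), Fraction(-1)]]
C_INV = [[Fraction(1, 2), Fraction(1, 2)], [Fraction(1, 2), Fraction(-1, 2)]]


def power_via_diagonalization(n):
    """Compute C D^n C^{-1} for D = diag(2, -1) and an integer n >= 0."""
    d_n = [[Fraction(2) ** n, Fraction(0)], [Fraction(0), Fraction(-1) ** n]]
    return matmul(matmul(C, d_n), C_INV)


def power_by_repeated_multiplication(a, n):
    """Compute a^n for a 2 x 2 matrix by multiplying n times."""
    result = [[Fraction(1), Fraction(0)], [Fraction(0), Fraction(1)]]
    for _ in range(n):
        result = matmul(result, a)
    return result


# Assumption: A is taken to be C D C^{-1}.
A = power_via_diagonalization(1)

if __name__ == "__main__":
    for n in range(6):
        same = power_via_diagonalization(n) == power_by_repeated_multiplication(A, n)
        print(n, same)
```

The diagonalization route needs only two matrix products for any $n$, whereas repeated multiplication needs $n$ of them; that is the practical point of the closed formula.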

Contents 5 Eigenvalues and Diagonalization

Linear Algebra (part 5): Eigenvalues and Diagonalization (by Evan Dummit, 2017, v. 1.50)

Contents
5 Eigenvalues and Diagonalization
  5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial
    5.1.1 Eigenvalues and Eigenvectors
    5.1.2 Eigenvalues and Eigenvectors of Matrices
    5.1.3 Eigenspaces
  5.2 Diagonalization
  5.3 Applications of Diagonalization
    5.3.1 Transition Matrices and Incidence Matrices
    5.3.2 Systems of Linear Differential Equations
    5.3.3 Non-Diagonalizable Matrices and the Jordan Canonical Form

5 Eigenvalues and Diagonalization

In this chapter, we will discuss eigenvalues and eigenvectors: these are characteristic values (and characteristic vectors) associated to a linear operator $T : V \to V$ that will allow us to study $T$ in a particularly convenient way. Our ultimate goal is to describe methods for finding a basis for $V$ such that the associated matrix for $T$ has an especially simple form. We will first describe diagonalization, the procedure for (trying to) find a basis such that the associated matrix for $T$ is a diagonal matrix, and characterize the linear operators that are diagonalizable. Then we will discuss a few applications of diagonalization, including the Cayley-Hamilton theorem that any matrix satisfies its characteristic polynomial, and close with a brief discussion of non-diagonalizable matrices.

5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial

• Suppose that we have a linear transformation $T : V \to V$ from a (finite-dimensional) vector space $V$ to itself. We would like to determine whether there exists a basis $\beta$ of $V$ such that the associated matrix $[T]_\beta^\beta$ is a diagonal matrix.

Homogeneous Systems (1.5) Linear Independence and Dependence (1.7)

Math 20F, 2015SS1 / TA: Jor-el Briones / Sec: A01 / Handout Page 1 of 3

Homogeneous systems (1.5)

Homogeneous systems are linear systems of the form $Ax = 0$, where $0$ is the zero vector. Given a system $Ax = b$, suppose $x = \alpha + t_1\alpha_1 + t_2\alpha_2 + \cdots + t_k\alpha_k$ is a solution (in parametric form) to the system, for any values of $t_1, t_2, \dots, t_k$. Then $\alpha$ is a solution to the system $Ax = b$ (seen by setting $t_1 = \cdots = t_k = 0$), and $\alpha_1, \alpha_2, \dots, \alpha_k$ are solutions to the homogeneous system $Ax = 0$.

Terms you should know

The zero solution (trivial solution): The zero solution is the zero vector (a vector with all entries being 0), which is always a solution to the homogeneous system.
Particular solution: Given a system $Ax = b$, suppose $x = \alpha + t_1\alpha_1 + t_2\alpha_2 + \cdots + t_k\alpha_k$ is a solution (in parametric form) to the system. Then $\alpha$ is the particular solution to the system. The other terms constitute the solution to the associated homogeneous system $Ax = 0$.

Properties of a Homogeneous System

1. A homogeneous system is ALWAYS consistent, since the zero solution, aka the trivial solution, is always a solution to that system.
2. A homogeneous system with at least one free variable has infinitely many solutions.
3. A homogeneous system with more unknowns than equations has infinitely many solutions.
4. If $\alpha_1, \alpha_2, \dots, \alpha_k$ are solutions to a homogeneous system, then ANY linear combination of $\alpha_1, \alpha_2, \dots, \alpha_k$ is also a solution to the homogeneous system.

Important theorems to know

Theorem. (Chapter 1, Theorem 6) Given a consistent system $Ax = b$, suppose $\alpha$ is a solution to the system.
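Properties 2 to 4 in the list above can be seen concretely by computing the solutions of a small homogeneous system. The sketch below row reduces the coefficient matrix and builds one solution per free variable; the function names (`rref`, `null_space_basis`, `apply`) and the example matrix are assumptions made for illustration, not part of the handout.

```python
from fractions import Fraction


def rref(rows):
    """Reduced row echelon form; returns (matrix, list of pivot columns)."""
    m = [[Fraction(x) for x in row] for row in rows]
    pivots = []
    r = 0  # index of the next pivot row
    for c in range(len(m[0])):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue  # column c corresponds to a free variable
        m[r], m[pivot] = m[pivot], m[r]
        p = m[r][c]
        m[r] = [x / p for x in m[r]]  # scale the pivot entry to 1
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    return m, pivots


def null_space_basis(rows):
    """One solution of Ax = 0 per free variable (parametric vector form)."""
    m, pivots = rref(rows)
    n = len(rows[0])
    free = [c for c in range(n) if c not in pivots]
    basis = []
    for f in free:
        v = [Fraction(0)] * n
        v[f] = Fraction(1)  # set this free variable to 1, the others to 0
        for row_idx, p in enumerate(pivots):
            v[p] = -m[row_idx][f]  # solve for each pivot variable
        basis.append(v)
    return basis


def apply(rows, x):
    """Matrix-vector product Ax."""
    return [sum(a * b for a, b in zip(row, x)) for row in rows]


if __name__ == "__main__":
    a = [[1, 2, 3], [2, 4, 6]]  # 2 equations, 3 unknowns
    for v in null_space_basis(a):
        print(v, apply(a, v))
```

With more unknowns than equations there is at least one free variable, so the basis is nonempty, and every linear combination of the basis vectors again satisfies $Ax = 0$.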

Diagonalization of Matrices

DIAGONALIZATION OF MATRICES

A MAJOR QUALIFYING PROJECT REPORT SUBMITTED TO THE FACULTY OF WORCESTER POLYTECHNIC INSTITUTE IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF BACHELOR OF SCIENCE
WRITTEN BY THEOPHILUS MARKS
APPROVED BY: PADRAIG Ó CATHÁIN
MAY 5, 2021
THIS REPORT REPRESENTS THE WORK OF WPI UNDERGRADUATE STUDENTS SUBMITTED TO THE FACULTY AS EVIDENCE OF COMPLETION OF A DEGREE REQUIREMENT.

Abstract

This thesis aims to study criteria for diagonalizability over finite fields. First we review basic and major results in linear algebra, including the Jordan Canonical Form, the Cayley-Hamilton theorem, and the spectral theorem; the last of which is an important criterion for matrix diagonalizability over fields of characteristic 0, but fails in finite fields. We then calculate the number of diagonalizable and non-diagonalizable $2 \times 2$ matrices and $2 \times 2$ symmetric matrices with repeated and distinct eigenvalues over finite fields. Finally, we look at results by Brouwer, Gow, and Sheekey for enumerating symmetric nilpotent matrices over finite fields.

Summary

(1) In Chapter 1 we review basic linear algebra including eigenvectors and eigenvalues, diagonalizability, generalized eigenvectors and eigenspaces, and the Cayley-Hamilton theorem (we provide an alternative proof in the appendix). We explore the Jordan canonical form and the rational canonical form, and prove some necessary and sufficient conditions for diagonalizability related to the minimal polynomial and the JCF.
(2) In Chapter 2 we review inner products and inner product spaces, adjoints, and isometries. We prove the spectral theorem, which tells us that symmetric, orthogonal, and self-adjoint matrices are all diagonalizable over fields of characteristic zero.

Linear Algebra Review

Linear Algebra Review
Kaiyu Zheng, October 2017

Linear algebra is fundamental for many areas in computer science. This document aims at providing a reference (mostly for myself) when I need to remember some concepts or examples. Rather than a collection of facts like the Matrix Cookbook, this document is gentler, more like a tutorial. Most of the content comes from my notes while taking the undergraduate linear algebra course (Math 308) at the University of Washington. Content on more advanced topics is collected from reading different sources on the Internet.

Contents
1 Linear System of Equations
2 Vectors
  2.1 Linear independence
  2.2 Linear dependence
  2.3 Linear transformation
3 Matrix Algebra
  3.1 Addition
  3.2 Scalar Multiplication
  3.3 Matrix Multiplication
  3.4 Transpose
    3.4.1 Conjugate
  3.8 Exponential and Logarithm
  3.9 Conversion Between Matrix Notation and Summation
4 Vector Spaces
  4.1 Determinant
  4.2 Kernel
  4.3 Basis
  4.4 Change of Basis
  4.5 Dimension, Row & Column Space, and Rank
5 Eigen
  5.1 Multiplicity of
7 Special Matrices
  7.1 Block Matrix
  7.2 Orthogonal
  7.3 Diagonal
  7.4 Diagonalizable
  7.5 Symmetric
  7.6 Positive-Definite
  7.7 Singular Value Decomposition
  7.8 Similar
  7.9 Jordan Normal Form
  7.10 Hermitian
  7.11 Discrete Fourier Transform
8 Matrix Calculus
  8.1 Differentiation
  8.2 Jacobian