Diagonalizable Matrix - Wikipedia, the Free Encyclopedia

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Linear Systems and Control: a First Course (Course Notes for AAE 564)

Linear Systems and Control: A First Course (Course notes for AAE 564). Martin Corless, School of Aeronautics & Astronautics, Purdue University, West Lafayette, Indiana. August 25, 2008. Contents: 1 Introduction; 1.1 Ingredients; 1.2 Some notation; 1.3 MATLAB; 2 State space representation of dynamical systems; 2.1 Linear examples; 2.1.1 A first example; 2.1.2 The unattached mass; 2.1.3 Spring-mass-damper; 2.1.4 A simple structure; 2.2 Nonlinear examples; 2.2.1 A first nonlinear system; 2.2.2 Planar pendulum; 2.2.3 Attitude dynamics of a rigid body; 2.2.4 Body in central force motion; 2.2.5 Ballistics in drag; 2.2.6 Double pendulum on cart; 2.2.7 Two-link robotic manipulator; 2.3 Discrete-time examples; 2.3.1 The discrete unattached mass; 2.4 General representation; 2.4.1 Continuous-time; 2.4.2 Discrete-time; 2.4.3 Exercises; 2.5 Vectors; 2.5.1 Vector spaces and $\mathbb{R}^n$; 2.5.2 $\mathbb{R}^2$ and pictures; 2.5.3 Derivatives

Diagonalizing a Matrix

Diagonalizing a Matrix. Definition: 1. We say that two square matrices A and B are similar provided there exists an invertible matrix P so that […]. 2. We say a matrix A is diagonalizable if it is similar to a diagonal matrix. Example: 1. The matrices […] and […] are similar matrices since […]. We conclude that […] is diagonalizable. 2. The matrices […] and […] are similar matrices since […]. After we have developed some additional theory, we will be able to conclude that the matrices […] and […] are not diagonalizable. Theorem: Suppose A, B and C are square matrices. (1) A is similar to A. (2) If A is similar to B, then B is similar to A. (3) If A is similar to B and if B is similar to C, then A is similar to C. Proof of (3): Since A is similar to B, there exists an invertible matrix P so that […]. Also, since B is similar to C, there exists an invertible matrix R so that […]. Now, […] and so A is similar to C. Thus, "A is similar to B" is an equivalence relation. Theorem: If A is similar to B, then A and B have the same eigenvalues. Proof: Since A is similar to B, there exists an invertible matrix P so that […]. Now, […]. Since A and B have the same characteristic equation, they have the same eigenvalues. Example: Find the eigenvalues for […]. Solution: Since […] is similar to the diagonal matrix […], they have the same eigenvalues. Because the eigenvalues of an upper (or lower) triangular matrix are the entries on the main diagonal, we see that the eigenvalues for […], and, hence, […] are […].
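The theorem that similar matrices share a characteristic equation can be checked numerically. The sketch below is not taken from the excerpt: it assumes the convention $B = P^{-1}AP$ for similarity, and the matrices `A` and `P`, as well as all function names, are hypothetical choices for illustration. For a 2 × 2 matrix the characteristic polynomial is $t^2 - \operatorname{tr}(M)\,t + \det(M)$, so comparing trace and determinant is enough.

```python
from fractions import Fraction


def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def inverse_2x2(P):
    """Invert a 2x2 matrix exactly; raises ZeroDivisionError if singular."""
    (a, b), (c, d) = P
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


def char_poly_2x2(M):
    """Return (trace, determinant), the coefficients of
    det(M - t I) = t^2 - trace * t + determinant."""
    (a, b), (c, d) = M
    return (a + d, a * d - b * c)


# Hypothetical matrices, chosen only for illustration.
A = [[2, 1], [0, 3]]
P = [[1, 1], [1, 2]]

# Assumed convention: B = P^{-1} A P.
B = matmul(matmul(inverse_2x2(P), A), P)

same_char_poly = char_poly_2x2(A) == char_poly_2x2(B)
print(B, same_char_poly)
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the comparison is not affected by floating-point rounding.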

MATH 2370, Practice Problems

MATH 2370, Practice Problems. Kiumars Kaveh. Problem: Prove that an $n \times n$ complex matrix $A$ is diagonalizable if and only if there is a basis consisting of eigenvectors of $A$. Problem: Let $A : V \to W$ be a one-to-one linear map between two finite dimensional vector spaces $V$ and $W$. Show that the dual map $A' : W' \to V'$ is surjective. Problem: Determine if the curve $\{(x, y) \in \mathbb{R}^2 \mid x^2 + y^2 + xy = 10\}$ is an ellipse or hyperbola or union of two lines. Problem: Show that if a nilpotent matrix is diagonalizable then it is the zero matrix. Problem: Let $P$ be a permutation matrix. Show that $P$ is diagonalizable. Show that if $\lambda$ is an eigenvalue of $P$ then for some integer $m > 0$ we have $\lambda^m = 1$ (i.e. $\lambda$ is an $m$-th root of unity). Hint: Note that $P^m = I$ for some integer $m > 0$. Problem: Show that if $\lambda$ is an eigenvalue of an orthogonal matrix $A$ then $|\lambda| = 1$. Problem: Take a vector $v \in \mathbb{R}^n$ and let $H$ be the hyperplane orthogonal to $v$. Let $R : \mathbb{R}^n \to \mathbb{R}^n$ be the reflection with respect to the hyperplane $H$. Prove that $R$ is a diagonalizable linear map. Problem: Prove that if $\lambda_1, \lambda_2$ are distinct eigenvalues of a complex matrix $A$ then the intersection of the generalized eigenspaces $E_{\lambda_1}$ and $E_{\lambda_2}$ is zero (this is part of the Spectral Theorem). Problem: Let $H = (h_{ij})$ be a $2 \times 2$ Hermitian matrix. Use the Minimax Principle to show that if $\lambda_1 \le \lambda_2$ are the eigenvalues of $H$ then $\lambda_1 \le h_{11} \le \lambda_2$.
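The hint in the permutation-matrix problem ($P^m = I$ for some integer $m > 0$) can be explored with a small sketch. The permutation used here and all function names are hypothetical and not part of the problem set. The code finds the smallest such $m$ by repeated multiplication; once $P^m = I$ is known, any eigenpair $Pv = \lambda v$ gives $v = P^m v = \lambda^m v$, hence $\lambda^m = 1$.

```python
def identity(n):
    """n x n identity matrix as a list of rows."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def permutation_matrix(perm):
    """Matrix that sends the standard basis vector e_j to e_{perm[j]}."""
    n = len(perm)
    return [[1 if perm[j] == i else 0 for j in range(n)] for i in range(n)]


def order(P):
    """Smallest m > 0 with P^m = I; terminates for any permutation matrix."""
    I = identity(len(P))
    Q, m = P, 1
    while Q != I:
        Q = matmul(Q, P)
        m += 1
    return m


# Hypothetical example: a 3-cycle on {0, 1, 2} combined with a swap of {3, 4}.
P = permutation_matrix([1, 2, 0, 4, 3])
m = order(P)
print(m)
```

For this example the order is the least common multiple of the cycle lengths 3 and 2.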

Scientific Computing: an Introductory Survey

Scientific Computing: An Introductory Survey. Chapter 4 – Eigenvalue Problems. Prof. Michael T. Heath, Department of Computer Science, University of Illinois at Urbana-Champaign. Copyright © 2002. Reproduction permitted for noncommercial, educational use only. Outline: 1 Eigenvalue Problems; 2 Existence, Uniqueness, and Conditioning; 3 Computing Eigenvalues and Eigenvectors. Eigenvalue Problems: Eigenvalue problems occur in many areas of science and engineering, such as structural analysis. Eigenvalues are also important in analyzing numerical methods. Theory and algorithms apply to complex matrices as well as real matrices. With complex matrices, we use the conjugate transpose, $A^H$, instead of the usual transpose, $A^T$. Eigenvalues and Eigenvectors: Standard eigenvalue problem: Given an $n \times n$ matrix $A$, find a scalar $\lambda$ and a nonzero vector $x$ such that $Ax = \lambda x$. Here $\lambda$ is an eigenvalue, and $x$ is a corresponding eigenvector.

3.3 Diagonalization

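3.3 Diagonalization. Let $A = \begin{pmatrix} -4 & 1 \\ 4 & -4 \end{pmatrix}$. Then $\begin{pmatrix} 1 \\ 2 \end{pmatrix}$ and $\begin{pmatrix} 1 \\ -2 \end{pmatrix}$ are eigenvectors of $A$, with corresponding eigenvalues $-2$ and $-6$ respectively (check). This means $\begin{pmatrix} -4 & 1 \\ 4 & -4 \end{pmatrix} \begin{pmatrix} 1 \\ 2 \end{pmatrix} = -2 \begin{pmatrix} 1 \\ 2 \end{pmatrix}$ and $\begin{pmatrix} -4 & 1 \\ 4 & -4 \end{pmatrix} \begin{pmatrix} 1 \\ -2 \end{pmatrix} = -6 \begin{pmatrix} 1 \\ -2 \end{pmatrix}$. Thus $\begin{pmatrix} -4 & 1 \\ 4 & -4 \end{pmatrix} \begin{pmatrix} 1 & 1 \\ 2 & -2 \end{pmatrix} = \left( -2 \begin{pmatrix} 1 \\ 2 \end{pmatrix} \;\; -6 \begin{pmatrix} 1 \\ -2 \end{pmatrix} \right) = \begin{pmatrix} -2 & -6 \\ -4 & 12 \end{pmatrix}$. We have $\begin{pmatrix} -4 & 1 \\ 4 & -4 \end{pmatrix} \begin{pmatrix} 1 & 1 \\ 2 & -2 \end{pmatrix} = \begin{pmatrix} 1 & 1 \\ 2 & -2 \end{pmatrix} \begin{pmatrix} -2 & 0 \\ 0 & -6 \end{pmatrix}$ (think about this). Thus $AE = ED$, where $E = \begin{pmatrix} 1 & 1 \\ 2 & -2 \end{pmatrix}$ has the eigenvectors of $A$ as columns and $D = \begin{pmatrix} -2 & 0 \\ 0 & -6 \end{pmatrix}$ is the diagonal matrix having the eigenvalues of $A$ on the main diagonal, in the order in which their corresponding eigenvectors appear as columns of $E$. Definition 3.3.1: An $n \times n$ matrix $A$ is diagonal if all of its non-zero entries are located on its main diagonal, i.e. if $A_{ij} = 0$ whenever $i \neq j$. Diagonal matrices are particularly easy to handle computationally. If $A$ and $B$ are diagonal $n \times n$ matrices then the product $AB$ is obtained from $A$ and $B$ by simply multiplying entries in corresponding positions along the diagonal, and $AB = BA$.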
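The "(check)" in this excerpt can be carried out mechanically. The following minimal sketch uses the matrix with rows (−4, 1) and (4, −4), the eigenvectors (1, 2) and (1, −2), and the eigenvalues −2 and −6 stated in the excerpt; the helper name `matmul` is an assumption of this sketch. It confirms that $AE = ED$.

```python
def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


A = [[-4, 1], [4, -4]]   # matrix from the excerpt
E = [[1, 1], [2, -2]]    # eigenvectors (1, 2) and (1, -2) as columns
D = [[-2, 0], [0, -6]]   # eigenvalues in the same order as the columns of E

AE = matmul(A, E)
ED = matmul(E, D)
print(AE, ED, AE == ED)
```

Each column of `AE` is the matching column of `E` scaled by its eigenvalue, which is exactly what right-multiplying `E` by the diagonal matrix `D` produces.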

R'kj.Oti-1). (3) the Object of the Present Article Is to Make This Estimate Effective

TRANSACTIONS OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 259, Number 2, June 1980. EFFECTIVE p-ADIC BOUNDS FOR SOLUTIONS OF HOMOGENEOUS LINEAR DIFFERENTIAL EQUATIONS, by B. Dwork and P. Robba. Dedicated to K. Iwasawa. Abstract. We consider a finite set of power series in one variable with coefficients in a field of characteristic zero having a chosen nonarchimedean valuation. We study the growth of these series near the boundary of their common "open" disk of convergence. Our results are definitive when the wronskian is bounded. The main application involves local solutions of ordinary linear differential equations with analytic coefficients. The effective determination of the common radius of convergence remains open (and is not treated here). Let $K$ be an algebraically closed field of characteristic zero complete under a nonarchimedean valuation with residue class field of characteristic $p$. Let $D = d/dx$ and let $L = D^n + C_{n-1}D^{n-1} + \cdots + C_0$ (1) be a linear differential operator with coefficients meromorphic in some neighborhood of the origin. Let $u = a_0 + a_1 x + \cdots$ (2) be a power series solution of $L$ which converges in an open ($p$-adic) disk of radius $r$. Our object is to describe the asymptotic behavior of $|a_s| r^s$ as $s \to \infty$. In a series of articles we have shown that subject to certain restrictions we may conclude that $|a_s| r^s = O(s^{n-1})$ (3). The object of the present article is to make this estimate effective. At the same time we greatly simplify, and generalize, our best previous results [12] for the noneffective form. Our previous work was based on the notion of a generic disk together with a condition for reducibility of differential operators with unbounded solutions [4, Theorem 4].

Section 5 Summary

Section 5 summary. Brian Krummel, November 4, 2019. 1 Terminology: Eigenvalue; Eigenvector; Eigenspace; Characteristic polynomial; Characteristic equation; Similar matrices; Similarity transformation; Diagonalizable; Matrix of a linear transformation relative to a basis; System of differential equations; Solution to a system of differential equations; Solution set of a system of differential equations; Fundamental solutions; Initial value problem; Trajectory; Decoupled system of differential equations; Repeller / source; Attractor / sink; Saddle point; Spiral point; Ellipse trajectory. 2 How to find eigenvalues, eigenvectors, and a similar matrix. 1. First we find eigenvalues of $A$ by finding the roots of the characteristic equation $\det(A - \lambda I) = 0$. Note that if $A$ is upper triangular (or lower triangular), then the eigenvalues $\lambda$ of $A$ are just the diagonal entries of $A$ and the multiplicity of each eigenvalue $\lambda$ is the number of times $\lambda$ appears as a diagonal entry of $A$. 2. For each eigenvalue $\lambda$, find a basis of eigenvectors corresponding to the eigenvalue $\lambda$ by row reducing $A - \lambda I$ and finding the solution to $(A - \lambda I)x = 0$ in vector parametric form. Note that for a general $n \times n$ matrix $A$ and any eigenvalue $\lambda$ of $A$, dim(Eigenspace corresp. to $\lambda$) = # free variables of $(A - \lambda I)$ ≤ algebraic multiplicity of $\lambda$. 3. Assuming $A$ has only real eigenvalues, check if $A$ is diagonalizable. If $A$ has $n$ distinct eigenvalues, then $A$ is definitely diagonalizable. More generally, if dim(Eigenspace corresp. to $\lambda$) = algebraic multiplicity of $\lambda$ for each eigenvalue $\lambda$ of $A$, then $A$ is diagonalizable and $\mathbb{R}^n$ has a basis consisting of $n$ eigenvectors of $A$.
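The three steps above can be sketched for the 2 × 2 case, where the characteristic equation is the quadratic $\lambda^2 - \operatorname{tr}(A)\lambda + \det(A) = 0$. This is an illustration under stated assumptions rather than the summary's own procedure: the function name `analyze_2x2` is hypothetical, entries are assumed to be integers, and the row reduction of step 2 is replaced by a shortcut that is valid only for 2 × 2 matrices (a repeated eigenvalue has a two-dimensional eigenspace exactly when $A - \lambda I = 0$).

```python
import math
from fractions import Fraction


def analyze_2x2(A):
    """Return (eigenvalues, diagonalizable_over_R) for a 2x2 integer matrix.

    eigenvalues is None when the roots of the characteristic equation
    are not real, which falls outside the real-eigenvalue assumption.
    """
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det          # discriminant of t^2 - tr*t + det
    if disc < 0:
        return None, False            # no real eigenvalues
    if disc > 0:
        r = math.sqrt(disc)
        # Two distinct real eigenvalues: always diagonalizable.
        return ((tr - r) / 2, (tr + r) / 2), True
    lam = Fraction(tr, 2)             # repeated eigenvalue
    # Eigenspace has dimension 2 only if A - lam*I is the zero matrix.
    is_scalar = (b == 0 and c == 0 and a == d)
    return (lam, lam), is_scalar


print(analyze_2x2([[-4, 1], [4, -4]]))   # distinct eigenvalues
print(analyze_2x2([[1, 1], [0, 1]]))     # repeated eigenvalue, one free variable
print(analyze_2x2([[3, 0], [0, 3]]))     # repeated eigenvalue, two free variables
```

The second matrix shows the inequality in step 2 being strict: the eigenvalue 1 has algebraic multiplicity 2 but only a one-dimensional eigenspace, so the matrix is not diagonalizable.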

EIGENVALUES and EIGENVECTORS 1. Diagonalizable Linear Transformations and Matrices Recall, a Matrix, D, Is Diagonal If It Is

EIGENVALUES AND EIGENVECTORS. 1. Diagonalizable linear transformations and matrices. Recall, a matrix, $D$, is diagonal if it is square and the only non-zero entries are on the diagonal. This is equivalent to $D\vec{e}_i = \lambda_i \vec{e}_i$, where the $\vec{e}_i$ are the standard basis vectors and the $\lambda_i$ are the diagonal entries. A linear transformation, $T : \mathbb{R}^n \to \mathbb{R}^n$, is diagonalizable if there is a basis $\mathcal{B}$ of $\mathbb{R}^n$ so that $[T]_{\mathcal{B}}$ is diagonal. This means $[T]$ is similar to the diagonal matrix $[T]_{\mathcal{B}}$. Similarly, a matrix $A \in \mathbb{R}^{n \times n}$ is diagonalizable if it is similar to some diagonal matrix $D$. To diagonalize a linear transformation is to find a basis $\mathcal{B}$ so that $[T]_{\mathcal{B}}$ is diagonal. To diagonalize a square matrix is to find an invertible $S$ so that $S^{-1}AS = D$ is diagonal. Fix a matrix $A \in \mathbb{R}^{n \times n}$. We say a vector $\vec{v} \in \mathbb{R}^n$ is an eigenvector if (1) $\vec{v} \neq 0$ and (2) $A\vec{v} = \lambda\vec{v}$ for some scalar $\lambda \in \mathbb{R}$. The scalar $\lambda$ is the eigenvalue associated to $\vec{v}$, or just an eigenvalue of $A$. Geometrically, $A\vec{v}$ is parallel to $\vec{v}$ and the eigenvalue $\lambda$ gives the stretching factor. Another way to think about this is that the line $L := \operatorname{span}(\vec{v})$ is left invariant by multiplication by $A$. An eigenbasis of $A$ is a basis $\mathcal{B} = (\vec{v}_1, \ldots, \vec{v}_n)$ of $\mathbb{R}^n$ so that each $\vec{v}_i$ is an eigenvector of $A$. Theorem 1.1. The matrix $A$ is diagonalizable if and only if there is an eigenbasis of $A$.

Math 1553 Introduction to Linear Algebra

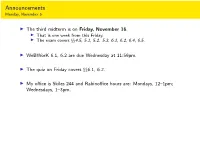

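Announcements, Monday, November 5. The third midterm is on Friday, November 16. That is one week from this Friday. The exam covers §§4.5, 5.1, 5.2, 5.3, 6.1, 6.2, 6.4, 6.5. WeBWorK 6.1, 6.2 are due Wednesday at 11:59pm. The quiz on Friday covers §§6.1, 6.2. My office is Skiles 244 and Rabinoffice hours are: Mondays, 12–1pm; Wednesdays, 1–3pm. Section 6.4 Diagonalization. Motivation: Difference equations. Many real-world linear algebra problems have the form $v_1 = Av_0,\; v_2 = Av_1 = A^2 v_0,\; v_3 = Av_2 = A^3 v_0,\; \ldots,\; v_n = Av_{n-1} = A^n v_0$. This is called a difference equation. Our toy example about rabbit populations had this form. The question is, what happens to $v_n$ as $n \to \infty$? Taking powers of diagonal matrices is easy! Taking powers of diagonalizable matrices is still easy! Diagonalizing a matrix is an eigenvalue problem. Powers of Diagonal Matrices: If $D$ is diagonal, then $D^n$ is also diagonal; its diagonal entries are the $n$th powers of the diagonal entries of $D$. For example, $D = \begin{pmatrix} 2 & 0 \\ 0 & -1 \end{pmatrix},\; D^2 = \begin{pmatrix} 4 & 0 \\ 0 & 1 \end{pmatrix},\; D^3 = \begin{pmatrix} 8 & 0 \\ 0 & -1 \end{pmatrix},\; \ldots,\; D^n = \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix}$, and $D = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac{1}{2} & 0 \\ 0 & 0 & \frac{1}{3} \end{pmatrix},\; D^2 = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \frac{1}{4} & 0 \\ 0 & 0 & \frac{1}{9} \end{pmatrix},\; D^3 = \begin{pmatrix} -1 & 0 & 0 \\ 0 & \frac{1}{8} & 0 \\ 0 & 0 & \frac{1}{27} \end{pmatrix},\; \ldots,\; D^n = \begin{pmatrix} (-1)^n & 0 & 0 \\ 0 & \frac{1}{2^n} & 0 \\ 0 & 0 & \frac{1}{3^n} \end{pmatrix}$. Powers of Matrices that are Similar to Diagonal Ones: What if $A$ is not diagonal? For a matrix of the form $CDC^{-1}$, the inner factors cancel: $(CDC^{-1})(CDC^{-1}) = CD(C^{-1}C)DC^{-1} = CDIDC^{-1} = CD^2C^{-1}$, then $(CDC^{-1})(CD^2C^{-1}) = CD(C^{-1}C)D^2C^{-1} = CDID^2C^{-1} = CD^3C^{-1}$, and in general the $n$th power is $CD^nC^{-1}$. Closed formula in terms of $n$ (easy to compute): $\begin{pmatrix} 1 & 1 \\ 1 & -1 \end{pmatrix} \begin{pmatrix} 2^n & 0 \\ 0 & (-1)^n \end{pmatrix} \frac{1}{-2} \begin{pmatrix} -1 & -1 \\ -1 & 1 \end{pmatrix} = \frac{1}{2} \begin{pmatrix} 2^n + (-1)^n & 2^n + (-1)^{n+1} \\ 2^n + (-1)^{n+1} & 2^n + (-1)^n \end{pmatrix}$. Example: Let $A$ be the matrix with first row $(1/2,\ 3/2)$ …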
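The closed formula for powers can be compared against plain repeated multiplication. The sketch below takes $C$ with rows (1, 1) and (1, −1) and $D = \operatorname{diag}(2, -1)$ from the closed formula in the slides; the function names are assumptions of this sketch, and exact rational arithmetic is used so that equality tests are meaningful.

```python
from fractions import Fraction


def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def power_via_diagonalization(n):
    """Compute C D^n C^{-1} with C = [[1, 1], [1, -1]] and D = diag(2, -1)."""
    half = Fraction(1, 2)
    C = [[1, 1], [1, -1]]
    Dn = [[2 ** n, 0], [0, (-1) ** n]]       # powers act entrywise on the diagonal
    C_inv = [[half, half], [half, -half]]    # inverse of C
    return matmul(matmul(C, Dn), C_inv)


def power_by_repeated_multiplication(A, n):
    """Compute A^n with n successive matrix products."""
    result = [[1, 0], [0, 1]]
    for _ in range(n):
        result = matmul(result, A)
    return result


A = power_via_diagonalization(1)             # A = C D C^{-1}
fast = power_via_diagonalization(5)
slow = power_by_repeated_multiplication(A, 5)
print(A, fast, fast == slow)
```

Only the two diagonal entries of `Dn` depend on `n`, so the cost of the diagonalized form does not grow with the exponent the way repeated multiplication does.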

§9.2 Orthogonal Matrices and Similarity Transformations

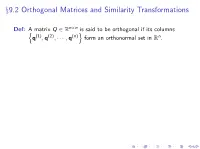

§9.2 Orthogonal Matrices and Similarity Transformations. Def: A matrix $Q \in \mathbb{R}^{n \times n}$ is said to be orthogonal if its columns $\{q^{(1)}, q^{(2)}, \cdots, q^{(n)}\}$ form an orthonormal set in $\mathbb{R}^n$. Ex: $H = \begin{pmatrix} \frac{1}{2} & \frac{1}{2} & \frac{1}{2} & \frac{1}{2} \\ -\frac{1}{2} & \frac{1}{2} & -\frac{1}{2} & \frac{1}{2} \\ -\frac{1}{2} & -\frac{1}{2} & \frac{1}{2} & \frac{1}{2} \\ \frac{1}{2} & -\frac{1}{2} & -\frac{1}{2} & \frac{1}{2} \end{pmatrix}$, $H^T H = I$. Thm: Suppose matrix $Q \in \mathbb{R}^{n \times n}$ is orthogonal. Then $Q$ is invertible with $Q^{-1} = Q^T$. For any $x, y \in \mathbb{R}^n$, $(Qx)^T (Qy) = x^T y$. For any $x \in \mathbb{R}^n$, $\|Qx\|_2 = \|x\|_2$.
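The example matrix $H$ and the three properties in the theorem can be verified directly. In the sketch below, the entries of `H` follow the example in the excerpt (rows (1/2, 1/2, 1/2, 1/2), (−1/2, 1/2, −1/2, 1/2), (−1/2, −1/2, 1/2, 1/2), (1/2, −1/2, −1/2, 1/2)), while the test vectors `x` and `y` and the helper names are hypothetical. Squared norms are compared to avoid square roots.

```python
from fractions import Fraction

h = Fraction(1, 2)
H = [[ h,  h,  h, h],
     [-h,  h, -h, h],
     [-h, -h,  h, h],
     [ h, -h, -h, h]]


def transpose(M):
    """Transpose a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def matmul(X, Y):
    """Multiply two square matrices given as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def matvec(M, x):
    """Matrix-vector product."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def dot(x, y):
    """Euclidean inner product x^T y."""
    return sum(xi * yi for xi, yi in zip(x, y))


I4 = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
HtH = matmul(transpose(H), H)

# Hypothetical test vectors.
x = [1, 2, 3, 4]
y = [0, -1, 5, 2]
Hx, Hy = matvec(H, x), matvec(H, y)

print(HtH == I4)                  # H^T H = I, so H^{-1} = H^T
print(dot(Hx, Hy) == dot(x, y))   # inner products are preserved
print(dot(Hx, Hx) == dot(x, x))   # squared 2-norms are preserved
```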

Mata Glossary of Common Terms

Title: Glossary (stata.com). Description: Commonly used terms are defined here. Mata glossary. arguments: The values a function receives are called the function's arguments. For instance, in lud(A, L, U), A, L, and U are the arguments. array: An array is any indexed object that holds other objects as elements. Vectors are examples of 1-dimensional arrays. Vector v is an array, and v[1] is its first element. Matrices are 2-dimensional arrays. Matrix X is an array, and X[1,1] is its first element. In theory, one can have 3-dimensional, 4-dimensional, and higher arrays, although Mata does not directly provide them. See [M-2] Subscripts for more information on arrays in Mata. Arrays are usually indexed by sequential integers, but in associative arrays, the indices are strings that have no natural ordering. Associative arrays can be 1-dimensional, 2-dimensional, or higher. If A were an associative array, then A["first"] might be one of its elements. See [M-5] asarray( ) for associative arrays in Mata. binary operator: A binary operator is an operator applied to two arguments. In 2-3, the minus sign is a binary operator, as opposed to the minus sign in -9, which is a unary operator. broad type: Two matrices are said to be of the same broad type if the elements in each are numeric, are string, or are pointers. Mata provides two numeric types, real and complex. The term broad type is used to mask the distinction within numeric and is often used when discussing operators or functions.

Contents 5 Eigenvalues and Diagonalization

Linear Algebra (part 5): Eigenvalues and Diagonalization (by Evan Dummit, 2017, v. 1.50). Contents: 5 Eigenvalues and Diagonalization; 5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial; 5.1.1 Eigenvalues and Eigenvectors; 5.1.2 Eigenvalues and Eigenvectors of Matrices; 5.1.3 Eigenspaces; 5.2 Diagonalization; 5.3 Applications of Diagonalization; 5.3.1 Transition Matrices and Incidence Matrices; 5.3.2 Systems of Linear Differential Equations; 5.3.3 Non-Diagonalizable Matrices and the Jordan Canonical Form. 5 Eigenvalues and Diagonalization. In this chapter, we will discuss eigenvalues and eigenvectors: these are characteristic values (and characteristic vectors) associated to a linear operator $T : V \to V$ that will allow us to study $T$ in a particularly convenient way. Our ultimate goal is to describe methods for finding a basis for $V$ such that the associated matrix for $T$ has an especially simple form. We will first describe diagonalization, the procedure for (trying to) find a basis such that the associated matrix for $T$ is a diagonal matrix, and characterize the linear operators that are diagonalizable. Then we will discuss a few applications of diagonalization, including the Cayley-Hamilton theorem that any matrix satisfies its characteristic polynomial, and close with a brief discussion of non-diagonalizable matrices. 5.1 Eigenvalues, Eigenvectors, and The Characteristic Polynomial. Suppose that we have a linear transformation $T : V \to V$ from a (finite-dimensional) vector space $V$ to itself. We would like to determine whether there exists a basis $\beta$ of $V$ such that the associated matrix $[T]_\beta^\beta$ is a diagonal matrix.