Computer Vision, CS766

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Management of Large Sets of Image Data Capture, Databases, Image Processing, Storage, Visualization Karol Kozak

Management of large sets of image data: Capture, Databases, Image Processing, Storage, Visualization. Karol Kozak. 1st edition © 2014 Karol Kozak & bookboon.com, ISBN 978-87-403-0726-9. Contents: 1 Digital image; 2 History of digital imaging; 3 Amount of produced images – is it danger?; 4 Digital image and privacy; 5 Digital cameras; 5.1 Methods of image capture; 6 Image formats; 7 Image Metadata – data about data; 8 Interactive visualization (IV); 9 Basic of image processing; 10 Image Processing software; 11 Image management and image databases; 12 Operating system (os) and images; 13 Graphics processing unit (GPU); 14 Storage and archive; 15 Images in different disciplines; 15.1 Microscopy; 15.2 Medical imaging; 15.3 Astronomical images; 15.4 Industrial imaging; 16 Selection of best digital images; References.

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3 Paul M

Spatial Frequency Response of Color Image Sensors: Bayer Color Filters and Foveon X3. Paul M. Hubel, John Liu and Rudolph J. Guttosch, Foveon, Inc., Santa Clara, California. Abstract: We compared the Spatial Frequency Response (SFR) of image sensors that use the Bayer color filter pattern and Foveon X3 technology for color image capture. Sensors for both consumer and professional cameras were tested. The results show that the SFR for Foveon X3 sensors is up to 2.4x better. In addition to the standard SFR method, we also applied the SFR method using a red/blue edge. In this case, the X3 SFR was 3–5x higher than that for Bayer filter pattern devices. Introduction: In their native state, the image sensors used in digital image capture devices are black-and-white. To enable color capture, small color filters are placed on top of each photodiode. The filter pattern most often used is derived in some way from the Bayer pattern [1], a repeating array of red, green, and blue pixels that lie […] Bayer Background: The Bayer pattern, also known as a Color Filter Array (CFA) or a mosaic pattern, is made up of a repeating array of red, green, and blue filter material deposited on top of each spatial location in the array (figure 1). These tiny filters enable what is normally a black-and-white sensor to create color images. Figure 1: Typical Bayer filter pattern showing the alternate sampling of red, green and blue pixels (rows alternate R G R G and G B G B). By using 2 green filtered pixels for every red or blue, the Bayer pattern is designed to maximize perceived sharpness in the luminance channel, composed mostly of green information.
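The repeating tile described in the excerpt above (rows alternating R G and G B) can be illustrated with a small sketch. This is not code from the paper; the names `BAYER_TILE`, `bayer_channel`, `mosaic` and `channel_counts` are hypothetical, and the image is assumed to be a plain list of rows of (r, g, b) tuples.

```python
# Hypothetical sketch: sample an RGB image through a Bayer color filter array.
# The 2x2 tile below is read off the excerpt's Figure 1 (rows "R G" and "G B").
BAYER_TILE = (("R", "G"), ("G", "B"))
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}


def bayer_channel(row, col):
    """Return which color filter covers the photosite at (row, col)."""
    return BAYER_TILE[row % 2][col % 2]


def mosaic(rgb_image):
    """Keep only the filtered color component at each pixel.

    rgb_image is assumed to be a list of rows, each a list of (r, g, b) tuples.
    The result has one value per pixel, as a single-sensor camera would record.
    """
    return [
        [pixel[CHANNEL_INDEX[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb_image)
    ]


def channel_counts(height, width):
    """Count how many photosites of each color a height x width sensor has."""
    counts = {"R": 0, "G": 0, "B": 0}
    for r in range(height):
        for c in range(width):
            counts[bayer_channel(r, c)] += 1
    return counts
```

Counting the sites on a 4 x 4 sensor with `channel_counts(4, 4)` gives twice as many green sites as red or blue, which matches the "2 green filtered pixels for every red or blue" statement in the excerpt.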

Megaplus Conversion Lenses for Digital Cameras

Section 2: PHOTO - VIDEO - PRO AUDIO Accessories. LCD Accessories; Batteries; Camera Brackets; Flashes; Accessory Lenses; VR Tools; Digital Media & Peripherals; Portable Media Storage; Digital Picture Frames; Imaging Systems; Tripods and Heads; Camera Cases; Underwater Equipment. PHOTOGRAPHIC SOLUTIONS DIGITAL CAMERA CLEANING PRODUCTS. Sensor Swab - Digital Imaging Chip Cleaner: Sensor Swabs are designed for cleaning the imaging sensor (CMOS or CCD) on SLR digital cameras and other delicate or hard to reach optical and imaging surfaces. Clean room manufactured and sealed, these swabs are the ultimate in purity. Recommended by Kodak and Fuji (when used with Eclipse Lens Cleaner) for cleaning the DSC Pro 14n and FinePix S1/S2 Pro. Sensor Swabs for Digital SLR Cameras: 12-Pack (PHSS12), 45.95. ECLIPSE: ECLIPSE lens cleaner is the highest purity lens cleaner available. It dries as quickly as it can be applied leaving absolutely no residue. ECLIPSE is the recommended optical glass cleaner for THK USA, the US distributor for Hoya filters and Tokina lenses. HAKUBA CLEANING PRODUCTS. KMC-05 Lens Cleaning Kit: Includes lens tissue (30 pcs.) and Cleaning Solution 30 cc. #HALCK, 3.95. KA-11 Lens Cleaning Set: Includes a Blower Brush, Cleaning Solution 30cc, Lens Tissue, Cleaning Cloth. #HALCS, 4.95. LCDCK-BL Digital Cleaning Kit: For cleaning LCD screens and other optical surfaces. Includes dual function cleaning tool that has a lens brush on one side and a cleaning chamois on the other, cleaning solution and five replacement chamois with one […]

Cameras • Video Camera

Cameras. Digital Visual Effects, Spring 2007, Yung-Yu Chuang, 2007/3/6, with slides by Fredo Durand, Brian Curless, Steve Seitz and Alexei Efros. Outline: • Pinhole camera • Film camera • Digital camera • Video camera. Camera trial #1: Put a piece of film in front of an object. Pinhole camera: Add a barrier to block off most of the rays. • It reduces blurring • The pinhole is known as the aperture • The image is inverted. Shrinking the aperture: Why not make the aperture as small as possible? • Less light gets through • Diffraction effect. High-end commercial pinhole cameras: $200~$700. Adding a lens: A lens focuses light onto the film. • There is a specific distance at which objects are “in focus” • Other points project to a “circle of confusion” in the image. Lenses: Thin lens equation: • Any object point satisfying this equation is in focus • Thin lens applet: http://www.phy.ntnu.edu.tw/java/Lens/lens_e.html. Exposure = aperture + shutter speed: • Aperture of diameter D restricts the range of rays (aperture may be on either side of the lens) • Shutter speed is the amount of time that light is allowed to pass through the aperture. Exposure: • Two main parameters: – Aperture (in f stop) – Shutter speed (in fraction of a second). Effects of shutter speeds: • Slower shutter speed => more light, but more motion blur • Faster shutter speed freezes motion. Aperture, Depth of field: • Aperture is the diameter of the lens opening, usually specified by f-stop, f/D, a fraction of the focal length.
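The slide excerpt above names the thin lens equation and the f-stop f/D without showing the formula. A minimal sketch, assuming the standard textbook form 1/f = 1/d_o + 1/d_i (this form is an assumption, it does not appear in the excerpt) and hypothetical function names:

```python
# Hypothetical sketch of two relations named in the slides.
# Assumption: the "thin lens equation" is the usual 1/f = 1/d_o + 1/d_i,
# with f the focal length, d_o the object distance, d_i the image distance.


def image_distance(focal_length, object_distance):
    """Distance behind the lens at which an object point comes into focus."""
    if object_distance == focal_length:
        raise ValueError("object at the focal plane focuses at infinity")
    # Rearranged from 1/f = 1/d_o + 1/d_i.
    return focal_length * object_distance / (object_distance - focal_length)


def f_number(focal_length, aperture_diameter):
    """f-stop as given in the slides: focal length divided by aperture diameter D."""
    return focal_length / aperture_diameter
```

With a 50 mm lens, `image_distance(50.0, 100.0)` places the in-focus plane 100 mm behind the lens, and `f_number(50.0, 25.0)` corresponds to f/2. Units only need to be consistent between the arguments.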

About Introduction

ABOUT TRIPOD, HEAD AND VIBRATION MARCH 2005 PAGE 1 OF 24. ABOUT: This article is an update of my previous writing about vibration in camera support. Even with some decades of advancement in camera technologies, stable support with a tripod is the most promising way to get a sharper picture. Even with fancy control algorithms for vibration reduction, using a tripod is the only practical solution that gives us freedom in photography. True understanding is about a different manner of doing something. When you understand how much you lose by not using a tripod, you may not be able to make a single shot without one. It was first published in January 2004 and this is the 4th major revision. [Testing tripod-head vibration at the Markins office using Nikon D2H, laser vibrometer and data acquisition system] March 2005 at Boston, Charlie Kim. INTRODUCTION: I begin this article by showing the two most common and well-known examples of camera-related vibration. One is about MLU (Mirror Lock Up function) and the other is about the need for a higher shutter speed. Mirror Lock Up: The mirror and shutter are the main moving parts inside the camera. If there is no external vibration, they are the only source of camera vibration. The vibration caused by mirror collision is quite big in most cameras [1]. The following graphs suggest how large the vibrations caused by it would be. For this measurement a Hasselblad 205FCC with standard 80mm lens was used [2]. [Figure: two panels of vibration amplitude (um) versus time (seconds) for a 1/8 sec exposure, with the events mirror up, 1st curtain stop, 2nd curtain stop and mirror down marked; labeled amplitudes 35.4um and 5.1um.] You can see the start and stop signs of every event. Footnote 1: John Shaw said that the camera without MLU is not a camera.

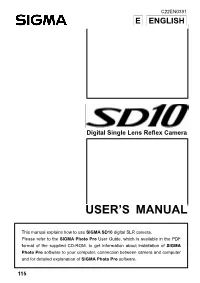

User's Manual

C22EN0391 E ENGLISH. Digital Single Lens Reflex Camera USER’S MANUAL. This manual explains how to use the SIGMA SD10 digital SLR camera. Please refer to the SIGMA Photo Pro User Guide, which is available in PDF format on the supplied CD-ROM, for information about installing SIGMA Photo Pro software on your computer, connecting the camera to the computer, and for a detailed explanation of SIGMA Photo Pro software. Thank you for purchasing the Sigma Digital Autofocus Camera. The Sigma SD10 Digital SLR camera is a technical breakthrough! It is powered by the Foveon® X3™ image sensor, the world’s first image sensor to capture red, green and blue light at each and every pixel. A high-resolution digital single-lens reflex camera, the SD10 delivers superior-quality digital images by combining Sigma’s extensive interchangeable lens line-up with the revolutionary Foveon X3 image sensor. You will get the greatest performance and enjoyment from your new SD10 camera’s features by reading this instruction manual carefully before operating it. Enjoy your new Sigma camera! SPECIAL FEATURES OF THE SD10 ■ Powered by Foveon X3 technology. ■ Uses a lossless compression RAW data format to eliminate image deterioration, giving superior pictures without sacrificing original image quality. ■ "Sports finder" covers action outside the immediate frame. ■ Dust protector keeps dust from adhering to the image sensor. ■ Mirror-up mechanism and depth-of-field preview button support advanced photography techniques. • Please keep this instruction booklet handy for future reference. Doing so will allow you to understand and take advantage of the camera’s unique features at any time.

CS559: Computer Graphics

CS559: Computer Graphics. Lecture 2: Image Formation in Eyes and Cameras. Li Zhang, Spring 2008. Today: • Eyes • Cameras. Why can we see? Light. Visible Light and Beyond: Newton’s prism experiment, 1666. Longer wavelength: infrared, e.g. radio wave. Shorter wavelength: ultraviolet, e.g. X ray. Cones and Rods: Photomicrographs at increasing distances from the fovea. The large cells are cones; the small ones are rods. Color Vision: Rods: rod-shaped, highly sensitive, operate at night, gray-scale vision. Cones: cone-shaped, less sensitive, operate in high light, color vision. Color Vision: Three kinds of cones. Electromagnetic Spectrum. Human Luminance Sensitivity Function: http://www.yorku.ca/eye/photopik.htm. Lightness contrast: • Also known as – Simultaneous contrast – Color contrast (for colors). Why is it important? • This phenomenon helps us maintain a consistent mental image of the world, under dramatic changes in illumination. But it causes illusions as well: • http://www.michaelbach.de/ot/lum_white-illusion/index.html. Noise: • Noise can be thought of as randomness added to the signal • The eyes are relatively insensitive to noise. Vision vs. Graphics: Computer Graphics, Computer Vision. Image Capture: • Let’s design a camera – Idea 1: put a piece of film in front of an object – Do we get a reasonable image? Pinhole Camera: • Add a barrier to block off most of the rays – This reduces blurring – The opening is known as the aperture – How does this transform the image? Camera Obscura: • The first camera – 5th B.C.

Evaluation of the Foveon X3 Sensor for Astronomy

Evaluation of the Foveon X3 sensor for astronomy. Anna-Lea Lesage, Matthias Schwarz [email protected], Hamburger Sternwarte, October 2009. Abstract: Foveon X3 is a new type of CMOS colour sensor. We present here an evaluation of this sensor for the detection of transit planets. Firstly, we determined the gain, the dark current and the read out noise of each layer. Then the sensor was used for observations of Tau Bootes. Finally half of the transit of HD 189733 b could be observed. 1 Introduction: The detection of exo-planet with the transit method relies on the observation of a diminution of the flux of the host star during the passage of the planet. This is visualised in time as a small dip in the light curve of the star. This dip represents usually a decrease of 1% to 3% of the magnitude of the star. Its detection is highly dependent of the stability of the detector, providing a constant flux for the star. However ground based observations are limited by the influence of the atmosphere. The latter induces two effects: seeing which blurs the image of the star, and scintillation producing variation of the apparent magnitude of the star. The seeing can be corrected through the utilisation of an adaptive optic. Yet the effect of scintillation have to be corrected by the observation of reference stars during the observation time. The perturbation induced by the atmosphere are mostly wavelength independent. Thus, recording two identical images but at different wavelengths permit an identification of the wavelength independent effects.

ELEC 7450 - Digital Image Processing Image Acquisition

ELEC 7450 - Digital Image Processing. Image Acquisition. Stanley J. Reeves. Image acquisition: Digital images are acquired by • direct digital acquisition (digital still/video cameras), or • scanning material acquired as analog signals (slides, photographs, etc.). • In both cases, the digital sensing element is one of the following: line array, area array, single sensor. • indirect imaging techniques, e.g., MRI (Fourier), CT (Backprojection) • physical quantities other than intensities are measured • computation leads to 2-D map displayed as intensity. Single sensor acquisition. Linear array acquisition. Array sensor acquisition: • Irradiance incident at each photo-site is integrated over time • Resulting array of intensities is moved out of sensor array and into a buffer • Quantized intensities are stored as a grayscale image. Two types of quantization: • spatial: limited number of pixels • gray-level: limited number of bits to represent intensity at a pixel.
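The two types of quantization listed in the slides above, spatial and gray-level, can be sketched as follows. This is an illustrative sketch rather than anything from the course material: the function names are hypothetical, intensities are assumed to lie in [0, 1], and block averaging is only one possible way to model a limited number of pixels.

```python
# Hypothetical sketch of the two quantization types named in the slides.
# Assumption: an image is a list of rows of floats in the range [0, 1].


def quantize_spatial(image, factor):
    """Spatial quantization: average non-overlapping factor x factor blocks,
    leaving a coarser grid with fewer pixels."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for br in range(rows):
        out_row = []
        for bc in range(cols):
            block = [
                image[br * factor + i][bc * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


def quantize_gray(image, bits):
    """Gray-level quantization: map each intensity to one of 2**bits integer levels."""
    levels = 2 ** bits
    # min() keeps an intensity of exactly 1.0 inside the top level.
    return [[min(int(v * levels), levels - 1) for v in row] for row in image]
```

For example, `quantize_gray([[0.0, 0.25, 0.5, 0.75, 1.0]], 2)` maps the five intensities onto the four levels available with 2 bits, and `quantize_spatial` with `factor=2` reduces a 2 x 2 image to a single pixel.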

Comparison of Color Demosaicing Methods Olivier Losson, Ludovic Macaire, Yanqin Yang

Comparison of color demosaicing methods. Olivier Losson, Ludovic Macaire, Yanqin Yang. To cite this version: Olivier Losson, Ludovic Macaire, Yanqin Yang. Comparison of color demosaicing methods. Advances in Imaging and Electron Physics, Elsevier, 2010, 162, pp.173-265. 10.1016/S1076-5670(10)62005-8. hal-00683233. HAL Id: hal-00683233, https://hal.archives-ouvertes.fr/hal-00683233, submitted on 28 Mar 2012. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. O. Losson*, L. Macaire, Y. Yang, Laboratoire LAGIS UMR CNRS 8146, Bâtiment P2, Université Lille1, Sciences et Technologies, 59655 Villeneuve d’Ascq Cedex, France. Keywords: Demosaicing, Color image, Quality evaluation, Comparison criteria. 1. Introduction: Today, the majority of color cameras are equipped with a single CCD (Charge-Coupled Device) sensor. The surface of such a sensor is covered by a color filter array (CFA), which consists of a mosaic of spectrally selective filters, so that each CCD element samples only one of the three color components Red (R), Green (G) or Blue (B). The Bayer CFA is the most widely used one to provide the CFA image where each pixel is characterized by only one single color component.

Thank You for Purchasing the Sigma Digital Autofocus Camera

Thank you for purchasing the Sigma Digital Autofocus Camera. The Sigma SD14 Digital SLR camera is a technical breakthrough! It is powered by the Foveon® X3™ image sensor, the world’s first image sensor to capture red, green and blue light at each and every pixel. A high-resolution digital single-lens reflex camera, the SD14 delivers superior-quality digital images by combining Sigma’s extensive interchangeable lens line-up with the revolutionary Foveon X3 image sensor. You will get the greatest performance and enjoyment from your new SD14 camera’s features by reading this instruction manual carefully before operating it. Enjoy your new Sigma camera! SPECIAL FEATURES OF THE SD14 ■ Powered by Foveon X3 technology. ■ In addition to the RAW format data recording system, this camera also incorporates an easy to use and high quality JPEG recording format. Super high quality JPEG format recording mode makes the best use of the characteristics of the Foveon® X3™ image sensor. ■ Dust protector keeps dust from entering the camera and adhering to the image sensor. ■ Mirror-up mechanism and depth-of-field preview button support advanced photography techniques. • Please keep this instruction booklet handy for future reference. Doing so will allow you to understand and take advantage of the camera’s unique features at any time. • The warranty of this product is one year from the date of purchase. Warranty terms and warranty card are on a separate sheet, attached. Please refer to these materials for details. Disposal of Electric and Electronic Equipment in Private Households: Disposal of used Electrical & Electronic Equipment (Applicable in the European Union and other European countries with separate collection systems). This symbol indicates that this product shall not be treated as household waste.

2006 SPIE Invited Paper: a Brief History of 'Pixel'

REPRINT, author contact: <[email protected]>. A Brief History of ‘Pixel’. Richard F. Lyon, Foveon Inc., 2820 San Tomas Expressway, Santa Clara CA 95051. ABSTRACT: The term pixel, for picture element, was first published in two different SPIE Proceedings in 1965, in articles by Fred C. Billingsley of Caltech’s Jet Propulsion Laboratory. The alternative pel was published by William F. Schreiber of MIT in the Proceedings of the IEEE in 1967. Both pixel and pel were propagated within the image processing and video coding field for more than a decade before they appeared in textbooks in the late 1970s. Subsequently, pixel has become ubiquitous in the fields of computer graphics, displays, printers, scanners, cameras, and related technologies, with a variety of sometimes conflicting meanings. Reprint: Paper EI 6069-1, Digital Photography II, Invited Paper, IS&T/SPIE Symposium on Electronic Imaging, 15–19 January 2006, San Jose, California, USA. Copyright 2006 SPIE and IS&T. This paper has been published in Digital Photography II, IS&T/SPIE Symposium on Electronic Imaging, 15–19 January 2006, San Jose, California, USA, and is made available as an electronic reprint with permission of SPIE and IS&T. One print or electronic copy may be made for personal use only. Systematic or multiple reproduction, distribution to multiple locations via electronic or other means, duplication of any material in this paper for a fee or for commercial purposes, or modification of the content of the paper are prohibited.