CS559: Computer Graphics

Recommended publications

Cameras • Video Camera

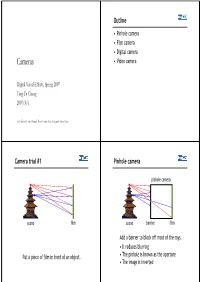

Outline
• Pinhole camera
• Film camera
• Digital camera
• Video camera

Cameras. Digital Visual Effects, Spring 2007, Yung-Yu Chuang, 2007/3/6, with slides by Fredo Durand, Brian Curless, Steve Seitz and Alexei Efros.

Camera trial #1 (scene, film)
Put a piece of film in front of an object.

Pinhole camera (scene, barrier, film)
Add a barrier to block off most of the rays.
• It reduces blurring
• The pinhole is known as the aperture
• The image is inverted

Shrinking the aperture
Why not make the aperture as small as possible?
• Less light gets through
• Diffraction effect

High-end commercial pinhole cameras
$200~$700

Adding a lens (scene, lens, film, “circle of confusion”)
A lens focuses light onto the film
• There is a specific distance at which objects are “in focus”
• Other points project to a “circle of confusion” in the image

Lenses
Thin lens equation:
• Any object point satisfying this equation is in focus
• Thin lens applet: http://www.phy.ntnu.edu.tw/java/Lens/lens_e.html

Exposure = aperture + shutter speed
• Aperture of diameter D restricts the range of rays (aperture may be on either side of the lens)
• Shutter speed is the amount of time that light is allowed to pass through the aperture

Exposure
• Two main parameters:
  – Aperture (in f stop)
  – Shutter speed (in fraction of a second)

Effects of shutter speeds
• Slower shutter speed => more light, but more motion blur
• Faster shutter speed freezes motion

Aperture
• Aperture is the diameter of the lens opening, usually specified by f-stop, f/D, a fraction of the focal length.

Depth of field
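The excerpt names the thin lens equation and the f-stop but the formulas themselves did not survive extraction. As a minimal sketch, assuming the standard textbook forms 1/d_o + 1/d_i = 1/f (object distance d_o, image distance d_i, focal length f) and f-number N = f/D, the relationships can be explored in Python. The function names and the "exposure proportional to t / N²" model are illustrative assumptions, not taken from the slides.

```python
import math


def image_distance(focal_length_m, object_distance_m):
    """Solve the thin lens equation 1/d_o + 1/d_i = 1/f for d_i (metres)."""
    if object_distance_m == focal_length_m:
        raise ValueError("object at the focal plane: the image forms at infinity")
    return 1.0 / (1.0 / focal_length_m - 1.0 / object_distance_m)


def f_number(focal_length_m, aperture_diameter_m):
    """f-stop N = f / D: focal length divided by aperture diameter."""
    return focal_length_m / aperture_diameter_m


def relative_exposure(shutter_time_s, n):
    """Assumed model: light gathered scales with shutter time and with 1 / N^2."""
    return shutter_time_s / (n * n)


# A 50 mm lens focused on an object 1 m away.
d_i = image_distance(0.050, 1.0)
print(f"image distance: {d_i * 1000:.2f} mm")

# Opening the aperture by two stops (f/4 to f/2) allows a 4x shorter shutter time.
print(math.isclose(relative_exposure(1 / 25, 4.0), relative_exposure(1 / 100, 2.0)))
```

Under this model, halving the f-number quadruples the light, which is why a faster shutter speed (less motion blur) has to be paid for with a wider aperture.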

User's Manual

C22EN0391 E ENGLISH
Digital Single Lens Reflex Camera USER’S MANUAL

This manual explains how to use the SIGMA SD10 digital SLR camera. Please refer to the SIGMA Photo Pro User Guide, which is available in PDF format on the supplied CD-ROM, for information about installing the SIGMA Photo Pro software on your computer, connecting the camera to the computer, and a detailed explanation of the SIGMA Photo Pro software.

Thank you for purchasing the Sigma Digital Autofocus Camera

The Sigma SD10 Digital SLR camera is a technical breakthrough! It is powered by the Foveon® X3™ image sensor, the world’s first image sensor to capture red, green and blue light at each and every pixel. A high-resolution digital single-lens reflex camera, the SD10 delivers superior-quality digital images by combining Sigma’s extensive interchangeable lens line-up with the revolutionary Foveon X3 image sensor. You will get the greatest performance and enjoyment from your new SD10 camera’s features by reading this instruction manual carefully before operating it. Enjoy your new Sigma camera!

SPECIAL FEATURES OF THE SD10
■ Powered by Foveon X3 technology.
■ Uses a lossless compression RAW data format to eliminate image deterioration, giving superior pictures without sacrificing original image quality.
■ "Sports finder" covers action outside the immediate frame.
■ Dust protector keeps dust from adhering to the image sensor.
■ Mirror-up mechanism and depth-of-field preview button support advanced photography techniques.
• Please keep this instruction booklet handy for future reference. Doing so will allow you to understand and take advantage of the camera’s unique features at any time.

Computer Vision, CS766

Announcement
• A total of 5 (five) late days are allowed for projects.
• Office hours
  – Me: 3:50-4:50pm Thursday (or by appointment)
  – Jake: 12:30-1:30PM Monday and Wednesday

Image Formation
Digital Camera, Film (Alexei Efros’ slide)
The Eye

Image Formation
• Let’s design a camera
  – Idea 1: put a piece of film in front of an object
  – Do we get a reasonable image?
(Steve Seitz’s slide)

Pinhole Camera
• Add a barrier to block off most of the rays
  – This reduces blurring
  – The opening known as the aperture
  – How does this transform the image?
(Steve Seitz’s slide)

Camera Obscura
• The first camera
  – 5th century B.C.: Aristotle, Mozi (Chinese: 墨子)
  – How does the aperture size affect the image?
http://en.wikipedia.org/wiki/Pinhole_camera

Shrinking the aperture
• Why not make the aperture as small as possible?
  – Less light gets through
  – Diffraction effects...

Shrinking the aperture
Sharpest image is obtained when:
$d = 2\sqrt{f\lambda}$
d is diameter, f is distance from hole to film, λ is the wavelength of light, all given in metres.
Example: If f = 50mm, λ = 600nm (red), d = 0.36mm
(Srinivasa Narasimhan’s slide)

Pinhole cameras are popular
Jerry Vincent's Pinhole Camera
Impressive Images: Jerry Vincent's Pinhole Photos

What’s wrong with Pinhole Cameras?
• Low incoming light => Long exposure time => Tripod

| KODAK Film or Paper | Bright Sun | Cloudy Bright |
| --- | --- | --- |
| TRI-X Pan | 1 or 2 seconds | 4 to 8 seconds |
| T-MAX 100 Film | 2 to 4 seconds | 8 to 16 seconds |
| KODABROMIDE Paper, F2 | 2 minutes | 8 minutes |

http://www.kodak.com/global/en/consumer/education/lessonPlans/pinholeCamera/pinholeCanBox.shtml

What’s wrong with Pinhole Cameras
People are ghosted

What’s wrong with Pinhole Cameras
People become ghosts!

Pinhole Camera Recap
• Pinhole size (aperture) must be “very small” to obtain a clear image.
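The sharpest-image rule in this excerpt can be checked numerically. The sketch below assumes the rule reads d = 2√(fλ), the usual rule of thumb that matches the symbol definitions given (d diameter, f hole-to-film distance, λ wavelength, all in metres). The function name is hypothetical.

```python
import math


def optimal_pinhole_diameter_m(hole_to_film_m, wavelength_m):
    """Rule-of-thumb pinhole diameter d = 2 * sqrt(f * lambda), all in metres."""
    return 2.0 * math.sqrt(hole_to_film_m * wavelength_m)


# Values from the slide example: f = 50 mm, lambda = 600 nm (red).
d = optimal_pinhole_diameter_m(50e-3, 600e-9)
print(f"optimal diameter: {d * 1e3:.2f} mm")
```

With these inputs the rule evaluates to roughly 0.35 mm, in line with the 0.36 mm quoted on the slide. The small gap presumably comes from rounding or a slightly different constant in the original.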

COMING SOON! LIVE INTERNET BIDDING with SPECIAL AUCTION SERVICES We Are Delighted to Announce That You Will Soon Be Able To

COMING SOON! LIVE INTERNET BIDDING WITH SPECIAL AUCTION SERVICES

We are delighted to announce that you will soon be able to bid online directly with SAS. We will be launching the new SAS Live bidding platform from March/April 2019. Visit www.specialauctionservices.com for more details.

Hugo Marsh (Director), Neil Shuttleworth (Director), Thomas Forrester (Director)

Photographica
Tuesday 12th March 2019 at 10.00

Viewing:
Monday 11th March 2019 10.00-16.00
09.00 Morning of Auction
Otherwise by Appointment

Saleroom One, 81 Greenham Business Park, NEWBURY RG19 6HW

For enquiries relating to the auction, please contact:
Paul Mason (Cameras), Mike Spencer (Cameras)
Telephone: 01635 580595
Fax: 0871 714 6905
Email: [email protected]
www.specialauctionservices.com

Bid Here Without Being Here
All you need is your computer and an internet connection and you can make real-time bids in real-world auctions at the-saleroom.com. You don’t have to be a computer whizz. All you have to do is visit www.the-saleroom.com and register to bid. It's just like being in the auction room. A live audio feed means you hear the auctioneer at the same time as other bidders. You see the lots on your computer screen as they appear in the auction room, and the auctioneer is aware of your bids the moment you make them. Just register and click to bid!

1. A Siemens Magazine 16mm Cine Camera, original model made by Siemens
5. A Group of Pentax K and M42 Lenses, including a SMC Pentax-M
9. A Pentax SFXn AF SLR Camera Outfit, serial no 4964345,

Cameras Supporting DNG the Following Cameras Are Automatically Supported by Ufraw Since They Write Their Raw Files in the DNG Format

Cameras supporting DNG The following cameras are automatically supported by UFRaw since they write their raw files in the DNG format • Casio Exilim PRO EX-F1 • Casio Exilim EX-FH20 • Hasselblad H2D • Leica Digital Modul R (DMR) for R8/R9 • Leica M8 • Leica M9 • Pentax K10D • Pentax K20D • Pentax K200D • Pentax K-m • Ricoh Digital GR • Ricoh Caplio GX100 • Ricoh Caplio GX200 • Samsung GX-10 • Samsung GX-20 • Samsung Pro815 • Sea&Sea DX-1G • Seitz D3 digital scan back Other Supported Cameras (RAW) • Adobe Digital Negative (DNG) • AgfaPhoto DC-833m • Alcatel 5035D • Apple QuickTake 100 • Apple QuickTake 150 • Apple QuickTake 200 • ARRIRAW format • AVT F-080C • AVT F-145C • AVT F-201C • AVT F-510C • AVT F-810C • Baumer TXG14 • Blackmagic URSA • Canon PowerShot 600 • Canon PowerShot A5 • Canon PowerShot A5 Zoom • Canon PowerShot A50 • Canon PowerShot A460 (CHDK hack) • Canon PowerShot A470 (CHDK hack) • Canon PowerShot A530 (CHDK hack) • Canon PowerShot A570 (CHDK hack) • Canon PowerShot A590 (CHDK hack) • Canon PowerShot A610 (CHDK hack) • Canon PowerShot A620 (CHDK hack) • Canon PowerShot A630 (CHDK hack) • Canon PowerShot A640 (CHDK hack) • Canon PowerShot A650 (CHDK hack) • Canon PowerShot A710 IS (CHDK hack) • Canon PowerShot A720 IS (CHDK hack) • Canon PowerShot A3300 IS (CHDK hack) • Canon PowerShot Pro70 • Canon PowerShot Pro90 IS • Canon PowerShot Pro1 • Canon PowerShot G1 • Canon PowerShot G1 X • Canon PowerShot G1 X Mark II • Canon PowerShot G2 • Canon PowerShot G3 • Canon PowerShot G5 • Canon PowerShot G6 • Canon PowerShot G7 (CHDK -

34 595776 Bindex.Qxd 11/15/05 8:27 PM Page 431

Index

Numbers and Symbols
- (dash) character, file naming conventions, 152
... (ellipses) characters, dialog box indicator, 154
_(underscore) character, file naming conventions, 153
8-bit files
  bit depth, 127
  color channels, 138–139
  versus 16-bit files, 129–137
16-bit files, versus 8-bit files, 129–137

A
ACR3 (Adobe Camera Raw 3) format
  brightness adjustments, 233
  color casts, 226
  color channels, 234–237
  Color Sampler Tool (S), 218, 228–230
  contrast adjustments, 234
  Crop Tool (C), 218–222
  disabling auto adjustments, 50–51
  exposure correction advantages, 272
  exposure adjustments, 228–234
  filmstrip view, 251
  Hand Tool (H/Spacebar), 217
  JPEG format nonsupport, 251
  marking images for deletion, 225
  opening Adobe Bridge image selections, 251
  Rotate CCW (L), 223–225
  Straighten Tool (A), 222–22
  synchronizing filmstrip settings, 230–233
  White Balance Tool (1), 218, 225–227
  workflow settings, 238–239
  Zoom Tool (Z), 216
actions
  disc proofing, 178–187
  saving, 186
  verifying, 186–187
adjustment layers
  color corrections in Adobe Photoshop CS2, 258–260
  darkening pupils, 377–380
  elements, 287
  layers, versus image menu adjustments, 259
Adobe Bridge
  batch file renaming, 153–158
  cache files, 160–162
  color labels, 249
  digital light box, 243–244
  enabling/disabling ACR3 (Adobe Camera Raw 3) format, 49–51
  exporting cache files, 160–162
  exposure display preferences, 52
  image filtering, 250
  image ranking, 198
  image ratings, 249
  image selections, 197–198
  image sorts, 247–248
  image views, 48–52
  keyword

Announcement • by Wed 2:30Pm Send Your Group Partners for Project 1 to TA Photorealistic Rendering

Announcement
• By Wed 2:30pm send your group partners for project 1 to TA

Photorealistic Rendering
An image created by using POV-Ray 3.6.
http://en.wikipedia.org/wiki/Rendering_(computer_graphics)

Image Formation
Digital Camera, Film (Alexei Efros’ slide)
The Eye

Image Formation (film)
• Let’s design a camera
  – Idea 1: put a piece of film in front of an object
  – Do we get a reasonable image?
(Steve Seitz’s slide)

Pinhole Camera (barrier, film)
• Add a barrier to block off most of the rays
  – This reduces blurring
  – The opening known as the aperture
  – How does this transform the image?
(Steve Seitz’s slide)

Camera Obscura
• The first camera
  – 5th century B.C.: Aristotle, Mozi (Chinese: 墨子)
  – How does the aperture size affect the image?
http://en.wikipedia.org/wiki/Pinhole_camera

Shrinking the aperture
• Why not make the aperture as small as possible?
  – Less light gets through
  – Diffraction effects...

Shrinking the aperture
Sharpest image is obtained when:
$d = 2\sqrt{f\lambda}$
d is diameter, f is distance from hole to film, λ is the wavelength of light, all given in metres.
Example: If f = 50mm, λ = 600nm (red), d = 0.36mm
(Srinivasa Narasimhan’s slide)

Pinhole cameras are popular
Jerry Vincent's Pinhole Camera
Impressive Images: Jerry Vincent's Pinhole Photos

What’s wrong with Pinhole Cameras?
• Low incoming light => Long exposure time => Tripod

| KODAK Film or Paper | Bright Sun | Cloudy Bright |
| --- | --- | --- |
| TRI-X Pan | 1 or 2 seconds | 4 to 8 seconds |
| T-MAX 100 Film | 2 to 4 seconds | 8 to 16 seconds |
| KODABROMIDE Paper, F2 | 2 minutes | 8 minutes |

http://www.kodak.com/global/en/consumer/education/lessonPlans/pinholeCamera/pinholeCanBox.shtml

What’s wrong with Pinhole Cameras
People are ghosted

What’s wrong with Pinhole Cameras
People become ghosts!

Pinhole Camera Recap
• Pinhole size (aperture) must be “very small” to obtain a clear image.
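Why shrinking the aperture stops helping can be illustrated with a deliberately simple blur model. This is an assumption for illustration, not something stated in the slides: a distant point produces a geometric blur spot about as wide as the hole (d), plus a diffraction spot of about 2.44·λ·f/d (the Airy disk diameter). The sketch scans candidate diameters and picks the one with the smallest total blur; all names are hypothetical.

```python
def blur_spot_m(diameter_m, hole_to_film_m, wavelength_m):
    """Assumed model: geometric blur (about d) plus Airy disk diameter (2.44 * lambda * f / d)."""
    geometric = diameter_m
    diffraction = 2.44 * wavelength_m * hole_to_film_m / diameter_m
    return geometric + diffraction


def best_diameter_m(hole_to_film_m, wavelength_m, candidates_m):
    """Return the candidate diameter with the smallest modelled blur spot."""
    return min(candidates_m, key=lambda d: blur_spot_m(d, hole_to_film_m, wavelength_m))


# Candidate diameters from 0.05 mm to 1.00 mm in 0.01 mm steps.
candidates = [i * 1e-5 for i in range(5, 101)]
best = best_diameter_m(50e-3, 600e-9, candidates)
print(f"best diameter in this model: {best * 1e3:.2f} mm")
```

In this model both very small and very large holes blur more than the intermediate optimum, which lands at a few tenths of a millimetre for f = 50 mm and λ = 600 nm. That is the same order of magnitude as the 0.36 mm slide example, although the exact constant depends on the blur criterion chosen.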

Supported Cameras • Adobe Digital Negative (DNG) • Agfaphoto DC

Supported Cameras • Adobe Digital • Canon • Canon Negative (DNG) PowerShot A570 PowerShot G1 • AgfaPhoto DC- (CHDK hack) • Canon 833m • Canon PowerShot G1 X • Alcatel 5035D PowerShot A590 • Canon • Apple QuickTake (CHDK hack) PowerShot G1 X 100 • Canon Mark II • Apple QuickTake PowerShot A610 • Canon 150 (CHDK hack) PowerShot G2 • Apple QuickTake • Canon • Canon 200 PowerShot A620 PowerShot G3 • ARRIRAW (CHDK hack) • Canon format • Canon PowerShot G3 X • AVT F-080C PowerShot A630 • Canon • AVT F-145C (CHDK hack) PowerShot G5 • AVT F-201C • Canon • Canon • AVT F-510C PowerShot A640 PowerShot G5 X • AVT F-810C (CHDK hack) • Canon • Baumer TXG14 • Canon PowerShot G6 • Blackmagic PowerShot A650 • Canon URSA (CHDK hack) PowerShot G7 • Canon • Canon (CHDK hack) PowerShot 600 PowerShot A710 • Canon • Canon IS (CHDK hack) PowerShot G7 X PowerShot A5 • Canon • Canon • Canon PowerShot A720 PowerShot G7 X PowerShot A5 IS (CHDK hack) Mark II Zoom • Canon • Canon • Canon PowerShot PowerShot G9 PowerShot A50 A3300 IS • Canon • Canon (CHDK hack) PowerShot G9 X PowerShot A460 • Canon • Canon (CHDK hack) PowerShot Pro70 PowerShot G10 • Canon • Canon • Canon PowerShot A470 PowerShot Pro90 PowerShot G11 (CHDK hack) IS • Canon • Canon • Canon PowerShot G12 PowerShot A530 PowerShot Pro1 • Canon (CHDK hack) • PowerShot G15 • Canon • Canon • Canon EOS 20D PowerShot G16 PowerShot • Canon EOS 30D • Canon SX110 IS • Canon EOS 40D PowerShot S2 IS (CHDK hack) • Canon EOS 50D (CHDK hack) • Canon • Canon EOS 60D • Canon PowerShot • Canon EOS 70D PowerShot S3 IS SX120 -

Short Preview of Nicholas Hellmuth's

Short Preview of Nicholas Hellmuth’s Course Units on Digital Photography
DP 101: Achieving Quality in Digital Photography
DP 201: Taking Digital Photography to the Next Level
Digital Imaging Resource Center

Introduction

It is not required that you have any camera whatsoever. You can learn from our experience which camera you might wish to consider next. Since FLAAR does not sell cameras, we can be neutral, and honest, in recommending one camera over another.

As you can see, FLAAR has experience with all sizes and shapes of digital cameras. Here Nicholas has an 8-megapixel Sony CyberShot F828, Nikon D100 (a Nikon D70 is in one of the shots too), medium format 22-megapixel Leaf Valeo wireless on a Mamiya 645 AFD, and a large format 48-megapixel Betterlight on Cambo 4x5.

Half of the people who take this course have a 3, 4, 6, or 8 megapixel entry-level digital camera. We can teach you how to get exhibit-quality results from these cameras. Remember, we too started out with zoom-lens point-and-shoot cameras. You do not have to own a fancy expensive camera.

The other half of the people who take this course have a 35mm SLR or medium format camera. Many are getting ready to buy a medium format back or a high-quality 35mm SLR with 11, 13, or 17 megapixels.

Everyone, at every level, is welcome to sign up for this course. If you aspire to do prize-winning nature photography with a digital camera, you can look forward to learning how to produce exhibit-quality results with any make or model of digital camera.

Thank You for Purchasing a Sigma Autofocus Digital SLR Camera

Thank you for purchasing a Sigma autofocus digital SLR camera

Thanks to the Foveon X3™ sensor, the Sigma SD10 digital SLR camera is the first in the world able to capture all three colors of the RGB model (red, blue, green) at every pixel. A high-resolution SLR equipped with the Foveon X3™ sensor, it can capture any kind of subject thanks to Sigma's interchangeable lenses, built specifically to optimize its performance. To get the best possible results and the greatest satisfaction from this camera, read this manual carefully before you start taking photographs. Enjoy taking pictures with your Sigma camera.

KEY FEATURES OF THE SD10
■ Equipped with the revolutionary Foveon X3™ image sensor.
■ The RAW format, with its low file compression, causes no image degradation and produces high-quality photographs without sacrificing any of the original quality.
■ The so-called "sports finder" also lets you monitor what is happening outside the actual frame.
■ The SIGMA SD10's dust protection system prevents dust from entering and settling on the sensor.
■ Supports advanced shooting techniques thanks to the mirror lock-up mechanism and the depth-of-field preview button.
• Keep this instruction booklet in a safe place so that you can consult it at any time. It will allow you to make the most of the camera's unique features.
• The warranty lasts one year from the date of purchase. The warranty terms and the warranty certificate are on separate sheets; read them for details.

Book XI Image Processing

Basic Photography in 180 Days
Book XI - Image Processing
Editor: Ramon F. aeroramon.com

Contents
1 Day 1
  1.1 Digital image processing
    1.1.1 History
    1.1.2 Tasks
    1.1.3 Applications
    1.1.4 See also
    1.1.5 References
    1.1.6 Further reading
    1.1.7 External links
  1.2 Image editing
    1.2.1 Basics of image editing
    1.2.2 Automatic image enhancement
    1.2.3 Digital data compression
    1.2.4 Image editor features
    1.2.5 See also
    1.2.6 References
  1.3 Image processing
    1.3.1 See also
    1.3.2 References
    1.3.3 Further reading
    1.3.4 External links
  1.4 Image analysis
    1.4.1 Computer Image Analysis

The Canon Eos 20D Digital Camera: an Extraordinary Combination of Performance and Value

THE CANON EOS 20D DIGITAL CAMERA: AN EXTRAORDINARY COMBINATION OF PERFORMANCE AND VALUE

Table of Contents
I OVERVIEW
II SUMMARY OF FEATURES
  Performance
  Advanced Components
  Controls and Display
  Compatibility and Support
  Power Management
  Body and Exterior
  Software and Accessories
III NEW AND IMPROVED DESIGN FEATURES
  New Technology CMOS Image Sensor and Microlenses
  New High-Speed Continuous Shooting
  New High-Speed Response
  New DIGIC II Image Processor
  Noise Reduction
  All-New Autofocus System
  New E-TTL II Autoflash Algorithm
  New Built-In Flash
  New Precision Matte Focusing Screen
  Picture Size Auto Zoom
  New High Speed Shutter
  Support of EF-S Lenses
  New Power System Upgrades
  New Multi-controller
  White Balance System
  White Balance Correction
  New White Balance Bracketing
  New Menu Features
  New Superimposition Display in Viewfinder
  New Custom Functions
  Shooting Modes
  New Processing Parameters
  Recording System
  New CR2 Raw Format
  Compliance
  Camera Direct Printing
  Construction
  New Accessories
IV SPECIFICATIONS
V CONCLUSION

I. OVERVIEW

At first glance, the new Canon EOS 20D seems to be an evolutionary product, a thorough redesign of the highly successful EOS 10D. The 20D is, in fact, so remarkable that it completely redefines the mid-price category of digital single lens reflex cameras. At its full 8.2 megapixel resolution, it fires up to 23 frames continuously at 5 frames-per-second. With its quick start-up, fast write speeds, new autofocus unit, sophisticated E-TTL II autoflash algorithm and extensive controls, the EOS 20D performs above the level of most high-end D-SLRs of the recent past.
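The burst figures quoted in the overview (23 frames at 5 frames per second, 8.2 megapixels each) imply a burst length and a pixel volume that are easy to work out. The short sketch below only does that arithmetic; the function names are hypothetical, and it says nothing about actual file sizes, which depend on format and compression.

```python
def burst_duration_s(frames, frames_per_second):
    """Time needed to shoot `frames` images at a constant frame rate."""
    return frames / frames_per_second


def burst_megapixels(frames, megapixels_per_frame):
    """Total number of megapixels captured in one burst."""
    return frames * megapixels_per_frame


duration = burst_duration_s(23, 5)        # figures from the overview
total_mp = burst_megapixels(23, 8.2)
print(f"burst lasts {duration:.1f} s and captures about {total_mp:.1f} megapixels")
```

So a full burst lasts a little under five seconds, during which the camera has to process and buffer close to 190 megapixels of sensor data.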