Computational Reproducibility in Archaeological Research: Basic Principles and a Case

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Radical Solutions and Open Science an Open Approach to Boost Higher Education Lecture Notes in Educational Technology

Lecture Notes in Educational Technology Daniel Burgos Editor Radical Solutions and Open Science An Open Approach to Boost Higher Education Lecture Notes in Educational Technology Series Editors Ronghuai Huang, Smart Learning Institute, Beijing Normal University, Beijing, China Kinshuk, College of Information, University of North Texas, Denton, TX, USA Mohamed Jemni, University of Tunis, Tunis, Tunisia Nian-Shing Chen, National Yunlin University of Science and Technology, Douliu, Taiwan J. Michael Spector, University of North Texas, Denton, TX, USA The series Lecture Notes in Educational Technology (LNET), has established itself as a medium for the publication of new developments in the research and practice of educational policy, pedagogy, learning science, learning environment, learning resources etc. in information and knowledge age, – quickly, informally, and at a high level. Abstracted/Indexed in: Scopus, Web of Science Book Citation Index More information about this series at http://www.springer.com/series/11777 Daniel Burgos Editor Radical Solutions and Open Science An Open Approach to Boost Higher Education Editor Daniel Burgos Research Institute for Innovation & Technology in Education (UNIR iTED) Universidad Internacional de La Rioja (UNIR) Logroño, La Rioja, Spain ISSN 2196-4963 ISSN 2196-4971 (electronic) Lecture Notes in Educational Technology ISBN 978-981-15-4275-6 ISBN 978-981-15-4276-3 (eBook) https://doi.org/10.1007/978-981-15-4276-3 © The Editor(s) (if applicable) and The Author(s) 2020. This book is an open access publication. Open Access This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. -

Open Data Citation Advantage

Briefing Paper The Open Data Citation Advantage Introduction The impact of scientific research continues to be evaluated by mechanisms that rely on the citation rates of published literature, so researchers, funders and research-performing organisations (RPOs) are all understandably keen to increase citations. SPARC Europe ran the Open Access Citation Advantage Service, which tracked literature on whether or not there is a citation advantage for Open Access articles. This is now so well established that they have stopped tracking the evidence. There is now increasing evidence that open practice with data also has a positive effect in a range of domains so widely spread that those who disagree with the effect would need to find conclusive evidence of domains where it does not hold. Several studies have shown that papers where the underlying data is available receive more citations over a longer period, and help generate further research. Open practice with data boosts citations to papers it underlies, providing a clear payoff to researchers for their data sharing effort. Moreover, open data practice increases the likelihood that the data itself will be cited as a scholarly output. The situation is complex; ‘open practice’ with data does not always simply mean ‘open data’, and other factors can also increase citation counts. In some domains, open data and publication linking is the norm and so no comparative studies can be done between those that do and do not make data available. The evidence is strong enough, however, to warrant effort from funders and RPOs to support researchers in open data practice. This briefing reviews a sample of the evidence, and recommends closer attention to potential links between the domains where these benefits have been found, and their prior investment in research data infrastructure. -

The Effects of Open Access on Un-Published Documents: a Case Study of Economics Working Papers Tove Faber Frandsen

The effects of open access on un-published documents: A case study of economics working papers Tove Faber Frandsen To cite this version: Tove Faber Frandsen. The effects of open access on un-published documents: A case study ofeco- nomics working papers. Journal of Informetrics, Elsevier, 2009, In press. hprints-00352359v2 HAL Id: hprints-00352359 https://hal-hprints.archives-ouvertes.fr/hprints-00352359v2 Submitted on 12 Jan 2009 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. The effects of open access on un-published documents: A case study of economics working papers Tove Faber Frandsen Royal School of Library and Information Science Birketinget 6, DK-2300 Copenhagen S, DENMARK Phone: +45 3258 6066 Fax: +45 3284 0201 E-mail: [email protected] Abstract The use of scholarly publications that have not been formally published in e.g. journals is widespread in some fields. In the past they have been disseminated through various channels of informal communication. However, the Internet has enabled dissemination of these unpublished and often unrefereed publications to a much wider audience. This is particularly interesting seen in relation to the highly disputed open access advantage as the potential advantage for low visibility publications has not been given much attention in the literature. -

Altmetrics to Quantify the Impact of Scientific Research Published in Open Full Text Repositories Bertil Dorch

Altmetrics to quantify the impact of scientific research published in open full text repositories Bertil Dorch To cite this version: Bertil Dorch. Altmetrics to quantify the impact of scientific research published in open full text repositories. 2013, pp.6773. 10.5281/zenodo.6773. hprints-00822129 HAL Id: hprints-00822129 https://hal-hprints.archives-ouvertes.fr/hprints-00822129 Submitted on 14 May 2013 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Discussion paper Altmetrics to quantify the impact of scientific research published in open full text repositories S. B. F. Dorch The Royal Library / University of Copenhagen Library, Copenhagen, Denmark First version March 8, 2013 Abstract Measures of the impact of scientific research increasingly become tools for various administrative applications. Bibliometric measures of the impact of research typically rely on citation analyses, which may not be appropriately representing all areas of scholarship, e.g. Arts and humanities. Metrics alternative to citation analyses are referred to as altmetrics, and include parameters derived from usage data, e.g. the number of times a paper is downloaded. In this paper I consider usage data from the open full text repository hprints.org for Nordic arts and humanities, and on the basis of an analysis of a small dataset from hprints.org, suggest new altmetrics based on the normalized squared number of downloads d downloads that could prove to be a valuable tool to quantify the impact of research published in open full text repositories. -

Oration ''Audivi'' of Enea Silvio Piccolomini (16 November 1436

Oration ”Audivi” of Enea Silvio Piccolomini (16 November 1436, Basel). Edited and translated by Michael v. Cotta-Schönberg. Final edition, 16th version. (Orations of Enea Silvio Piccolomini / Pope Pius II; 1) Michael Cotta-Schønberg To cite this version: Michael Cotta-Schønberg. Oration ”Audivi” of Enea Silvio Piccolomini (16 November 1436, Basel). Edited and translated by Michael v. Cotta-Schönberg. Final edition, 16th version. (Orations of Enea Silvio Piccolomini / Pope Pius II; 1). 2019. hprints-00683151 HAL Id: hprints-00683151 https://hal-hprints.archives-ouvertes.fr/hprints-00683151 Submitted on 8 Jul 2019 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. (Orations of Enea Silvio Piccolomini / Pope Pius II; 1) 0 Oration “Audivi” of Enea Silvio Piccolomini (16 November 1436, Basel). Edited and translated by Michael von Cotta- Schönberg Final edition, 2nd version July 2019 Copenhagen 1 Abstract On 16 November 1436, Enea Silvio Piccolomini delivered the oration Audivi to the fathers of the Council of Basel, concerning the venue for the Union Council between the Latin Church and the Greek Church. He argued for the City of Pavia in the territory of the Duke of Milan. -

3/7 2008 Open Access to Scientific Works of Nobel Prize Winners

3/7 2008 Open Access to Scientific Works of Nobel Prize Winners Sweden's OpenAcess.se, the National Library of Sweden, Lund University Libraries, the Royal Swedish Academy of Sciences, and the Nobel Assembly at Karolinska Institutet are developing a plan for retroactive OA to the major papers of Nobel laureates in physics, chemistry, and physiology or medicine. Read more: http://www.kb.se/english/about/projects/openacess/projects/#Open and: http://www.kb.se/english/about/news/openaccess/ Contact: Jörgen Eriksson (Lund University Libraries, Head Office), Project co-ordinator: [email protected] -------------------------------------------------------------------------------------------------------------------- 29/4 2008 Nordic Countries Hprints -The Nordic arts and humanities e-print archive – has been launched Hprints is an Open Access repository aiming at making scholarly documents from the Arts and Humanities publicly available to the widest possible audience. It is the first of its kind in the Nordic Countries for the Humanities. Hprints is supported by the Nordbib research funding programme Denmark, Norway and Sweden have signed Expressions of Interest for joining the SCOAP3 SCOAP3 (Sponsoring Consortium for Open Access Publishing in Particle Physics Sponsoring Consortium for Open Access Publishing in Particle Physics Denmark The Danish Research Council for Culture and Communication has decided to change its journal support policy. To receive support journals are obliged to offer Open Access after a certain time period. Sweden -

Teaching & Researching Big History: Exploring a New Scholarly Field

Teaching & Researching Big History: Exploring a New Scholarly Field Leonid Grinin, David Baker, Esther Quaedackers, Andrey Korotayev To cite this version: Leonid Grinin, David Baker, Esther Quaedackers, Andrey Korotayev. Teaching & Researching Big History: Exploring a New Scholarly Field. France. Uchitel, pp.368, 2014, 978-5-7057-4027-7. hprints- 01861224 HAL Id: hprints-01861224 https://hal-hprints.archives-ouvertes.fr/hprints-01861224 Submitted on 24 Aug 2018 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Public Domain INTERNATIONAL BIG HISTORY ASSOCIATION RUSSIAN ACADEMY OF SCIENCES INSTITUTE OF ORIENTAL STUDIES The Eurasian Center for Big History and System Forecasting TEACHING & RESEARCHING BIG HISTORY: EXPLORING A NEW SCHOLARLY FIELD Edited by Leonid Grinin, David Baker, Esther Quaedackers, and Andrey Korotayev ‘Uchitel’ Publishing House Volgograd ББК 28.02 87.21 Editorial Council: Cynthia Stokes Brown Ji-Hyung Cho David Christian Barry Rodrigue Teaching & Researching Big History: Exploring a New Scholarly Field / Edited by Leonid E. Grinin, David Baker, Esther Quaedackers, and Andrey V. Korotayev. – Volgograd: ‘Uchitel’ Publishing House, 2014. – 368 pp. According to the working definition of the International Big History Association, ‘Big History seeks to understand the integrated history of the Cosmos, Earth, Life and Humanity, using the best available empirical evidence and scholarly methods’. -

Packaging Data Analytical Work Reproducibly Using R (And Friends)

University of Wollongong Research Online Faculty of Science, Medicine and Health - Papers: part A Faculty of Science, Medicine and Health 1-1-2018 Packaging Data Analytical Work Reproducibly Using R (and Friends) Ben Marwick University of Washington, [email protected] Carl Boettiger University of Wollongong Lincoln Mullen George Mason University Follow this and additional works at: https://ro.uow.edu.au/smhpapers Part of the Medicine and Health Sciences Commons, and the Social and Behavioral Sciences Commons Recommended Citation Marwick, Ben; Boettiger, Carl; and Mullen, Lincoln, "Packaging Data Analytical Work Reproducibly Using R (and Friends)" (2018). Faculty of Science, Medicine and Health - Papers: part A. 5389. https://ro.uow.edu.au/smhpapers/5389 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Packaging Data Analytical Work Reproducibly Using R (and Friends) Abstract Computers are a central tool in the research process, enabling complex and large-scale data analysis. As computer-based research has increased in c omplexity, so have the challenges of ensuring that this research is reproducible. To address this challenge, we review the concept of the research compendium as a solution for providing a standard and easily recognizable way for organizing the digital materials of a research project to enable other researchers to inspect, reproduce, and extend the research. We investigate how the structure and tooling of software packages of the R programming language are being used to produce research compendia in a variety of disciplines. We also describe how software engineering tools and services are being used by researchers to streamline working with research compendia. -

Half Term Report: Licence to Publish – Promoting Open Access And

Half term report: Licence to publish – promoting Open Access and authors’ rights in the Nordic social sciences and humanities Simone Schipp von Branitz Nielsen, Thea Marie Drachen, Bertil Dorch To cite this version: Simone Schipp von Branitz Nielsen, Thea Marie Drachen, Bertil Dorch. Half term report: Licence to publish – promoting Open Access and authors’ rights in the Nordic social sciences and humanities. 2009. hprints-00437866 HAL Id: hprints-00437866 https://hal-hprints.archives-ouvertes.fr/hprints-00437866 Preprint submitted on 1 Dec 2009 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. hprints.org Half term report to Nordbib – August 2009 “Licence to publish – promoting Open Access and authors’ rights in the Nordic social sciences and humanities” Simone Schipp von Branitz Nielsen (Project Manager) et al. CULIS Knowledge Center for Scholarly Communication The Royal Library / Copenhagen University Library and Information Service Project period: April 1st 2009 – July 31st 2009 Grant: 225.000 DKK (total budget frame) and 90.000 DKK (from Nordbib) Summary of project results As stated in the original application to Nordbib’s Focus Area “Policy and visibility” (Work Package 1), the purpose of the project was to increase the awareness and understanding of the principles of Open Access by providing a dialogue among stakeholders on authors’ rights and Open Access principles. -

The 'State-Of-The-Art' in Providing Open Access to Scholarly Literature

This is a repository copy of Making open access work: The ‘state-of-the-art’ in providing open access to scholarly literature. White Rose Research Online URL for this paper: http://eprints.whiterose.ac.uk/87407/ Version: Accepted Version Article: Pinfield, S. (2015) Making open access work: The ‘state-of-the-art’ in providing open access to scholarly literature. Online Information Review, 39 (5). pp. 604-636. ISSN 1468-4535 https://doi.org/10.1108/OIR-05-2015-0167 Reuse Unless indicated otherwise, fulltext items are protected by copyright with all rights reserved. The copyright exception in section 29 of the Copyright, Designs and Patents Act 1988 allows the making of a single copy solely for the purpose of non-commercial research or private study within the limits of fair dealing. The publisher or other rights-holder may allow further reproduction and re-use of this version - refer to the White Rose Research Online record for this item. Where records identify the publisher as the copyright holder, users can verify any specific terms of use on the publisher’s website. Takedown If you consider content in White Rose Research Online to be in breach of UK law, please notify us by emailing [email protected] including the URL of the record and the reason for the withdrawal request. [email protected] https://eprints.whiterose.ac.uk/ Making open access work: The ‘state-of-the-art’ in providing open access to scholarly literature Abstract Purpose: This paper is designed to provide an overview of one of the most important and controversial areas of scholarly communication: open-access publishing and dissemination of research outputs. -

Open Research Data: from Vision to Practice

Open Research Data: From Vision to Practice Heinz Pampel and Sünje Dallmeier-Tiessen To make progress in science, we need to be open and share. —Neelie Kroes (2012) Abstract ‘‘To make progress in science, we need to be open and share.’’ This quote from Neelie Kroes (2012), vice president of the European Commission describes the growing public demand for an Open Science. Part of Open Science is, next to Open Access to peer-reviewed publications, the Open Access to research data, the basis of scholarly knowledge. The opportunities and challenges of Data Sharing are discussed widely in the scholarly sector. The cultures of Data Sharing differ within the scholarly disciplines. Well advanced are for example disciplines like biomedicine and earth sciences. Today, more and more funding agencies require a proper Research Data Management and the possibility of data re-use. Many researchers often see the potential of Data Sharing, but they act cautiously. This situation shows a clear ambivalence between the demand for Data Sharing and the current practice of Data Sharing. Starting from a baseline study on current discussions, practices and developments the article describe the challenges of Open Research Data. The authors briefly discuss the barriers and drivers to Data Sharing. Furthermore, the article analyses strategies and approaches to promote and implement Data Sharing. This comprises an analysis of the current landscape of data repositories, enhanced publications and data papers. In this context the authors also shed light on incentive mechanisms, data citation practises and the interaction between data repositories and journals. In the conclusions the authors outline requirements of a future Data Sharing culture. -

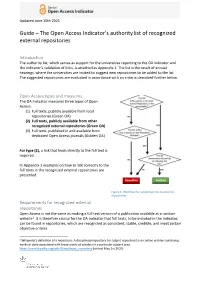

Guide – the Open Access Indicator's Authoritylist of Recognized External

Updated June 15th 2021 Guide – The Open Access Indicator’s authority list of recognized external repositories Introduction The authority list, which serves as support for the universities reporting to the OA Indicator and the indicator’s validation of links, is attached as Appendix 1. The list is the result of annual hearings, where the universities are invited to suggest new repositories to be added to the list. The suggested repositories are evaluated in accordance with six criteria described further below. Open Access types and measures The OA Indicator measures three types of Open Access: (1) Full texts, publicly available from local repositories (Green OA) (2) Full texts, publicly available from other recognized external repositories (Green OA) (3) Full texts, published in and available from dedicated Open Access journals (Golden OA) For type (2), a link that leads directly to the full text is required. In Appendix 1 examples on how to link correctly to the full texts in the recognized external repositories are presented. Figure 1: Workflow for validating links to external repositories Requirements for recognized external repositories Open Access is not the same as making a full text version of a publication available at a random website1. It is therefore crucial for the OA Indicator that full texts, to be included in the indicator, can be found in repositories, which are recognized as consistent, stable, credible, and meet certain objective criteria. 1 Wikipedia’s definition of a repository: A disciplinary repository (or subject repository) is an online archive containing works or data associated with these works of scholars in a particular subject area.