ASCII Art Classification Based on Deep Neural Networks Using Image Feature of Characters

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

CREDIT Belongs to Anime Studio, Fansubbers, Encoders & Re-Encoders Who Did the All Hard Work!!! NO PROFIT Is Ever Made by Me

Downloaded from: justpaste.it/1vc3 < іѕт!: < AT׀ ↓Αпіме dd Disclaimer: All CREDIT belongs to Anime Studio, Fansubbers, Encoders & Re-Encoders who did the all hard work!!! NO PROFIT is ever made by me. Period! --------------------- Note: Never Re-post/Steal any Links! Please. You must Upload yourself so that My Links won't get Taken Down or DMCA'ed! --------------------- Be sure to Credit Fansub Group, Encoders & Re-Encoders if you know. --------------------- If you do Plan to Upload, be sure to change MKV/MP4 MD5 hash with MD5 Hasher first or Zip/Rar b4 you Upload. To prevent files from being blocked/deleted from everyone's Accounts. --------------------- Do Support Anime Creators by Buying Original Anime DVD/BD if it Sells in your Country. --------------------- Visit > Animezz --------------------- МҒ links are Dead. You can request re-upload. I will try re-upload when i got time. Tusfiles, Filewinds or Sharebeast & Mirror links are alive, maybe. #: [FW] .hack//Sekai no Mukou ni [BD][Subbed] [FW] .hack//Series [DVD][Dual-Audio] [FW] .hack//Versus: The Thanatos Report [BD][Subbed] [2S/SB/Davvas] 009 Re:Cyborg [ВD][ЅυЬЬеd|CRF24] A: [ЅВ] Afro Samurai [ВD|Uпсепѕогеd|dυЬЬеd|CRF24] [МҒ] Air (Whole Series)[DVD][Dual-Audio] [МҒ] Aquarion + OVA [DVD][Dual-Audio] [2Ѕ/ЅВ] Asura [ВD|ЅυЬЬеd|CRF24] B: [МҒ] Baccano! [DVD][Dual-Audio] [МҒ] Basilisk - Kōga Ninpō Chō [DVD][Dual-Audio] [TF] Berserk: The Golden Age Arc - All Chapters [ВD|Uпсепѕοгеd|ЅυЬЬеd|CRF24] [DDLA] Black Lagoon (Complete) [DVD/BD] [МҒ] Black Blood Brothers [DVD][Dual-Audio] [МҒ] Blood: -

11Eyes Achannel Accel World Acchi Kocchi Ah! My Goddess Air Gear Air

11eyes AChannel Accel World Acchi Kocchi Ah! My Goddess Air Gear Air Master Amaenaideyo Angel Beats Angelic Layer Another Ao No Exorcist Appleseed XIII Aquarion Arakawa Under The Bridge Argento Soma Asobi no Iku yo Astarotte no Omocha Asu no Yoichi Asura Cryin' B Gata H Kei Baka to Test Bakemonogatari (and sequels) Baki the Grappler Bakugan Bamboo Blade Banner of Stars Basquash BASToF Syndrome Battle Girls: Time Paradox Beelzebub BenTo Betterman Big O Binbougami ga Black Blood Brothers Black Cat Black Lagoon Blassreiter Blood Lad Blood+ Bludgeoning Angel Dokurochan Blue Drop Bobobo Boku wa Tomodachi Sukunai Brave 10 Btooom Burst Angel Busou Renkin Busou Shinki C3 Campione Cardfight Vanguard Casshern Sins Cat Girl Nuku Nuku Chaos;Head Chobits Chrome Shelled Regios Chuunibyou demo Koi ga Shitai Clannad Claymore Code Geass Cowboy Bebop Coyote Ragtime Show Cuticle Tantei Inaba DFrag Dakara Boku wa, H ga Dekinai Dan Doh Dance in the Vampire Bund Danganronpa Danshi Koukousei no Nichijou Daphne in the Brilliant Blue Darker Than Black Date A Live Deadman Wonderland DearS Death Note Dennou Coil Denpa Onna to Seishun Otoko Densetsu no Yuusha no Densetsu Desert Punk Detroit Metal City Devil May Cry Devil Survivor 2 Diabolik Lovers Disgaea Dna2 Dokkoida Dog Days Dororon EnmaKun Meeramera Ebiten Eden of the East Elemental Gelade Elfen Lied Eureka 7 Eureka 7 AO Excel Saga Eyeshield 21 Fight Ippatsu! JuudenChan Fooly Cooly Fruits Basket Full Metal Alchemist Full Metal Panic Futari Milky Holmes GaRei Zero Gatchaman Crowds Genshiken Getbackers Ghost -

Nintendo Famicom

Nintendo Famicom Last Updated on September 23, 2021 Title Publisher Qty Box Man Comments '89 Dennou Kyuusei Uranai Jingukan 10-Yard Fight Irem 100 Man Dollar Kid: Maboroshi no Teiou Hen Sofel 1942 Capcom 1943: The Battle of Valhalla Capcom 1943: The Battle of Valhalla: FamicomBox Nintendo 1999: Hore, Mitakotoka! Seikimatsu C*Dream 2010 Street Fighter Capcom 4 Nin uchi Mahjong Nintendo 8 Eyes Seta 8bit Music Power Final: Homebrew Columbus Circle A Ressha de Ikou Pony Canyon Aa Yakyuu Jinsei Icchokusen Sammy Abadox: Jigoku no Inner Wars Natsume Abarenbou Tengu Meldac Aces: Iron Eagle III Pack-In-Video Advanced Dungeons & Dragons: Dragons of Flame Pony Canyon Advanced Dungeons & Dragons: Heroes of the Lance Pony Canyon Advanced Dungeons & Dragons: Hillsfar Pony Canyon Advanced Dungeons & Dragons: Pool of Radiance Pony Canyon Adventures of Lolo HAL Laboratory Adventures of Lolo II HAL Laboratory After Burner Sunsoft Ai Sensei no Oshiete: Watashi no Hoshi Irem Aigiina no Yogen: From The Legend of Balubalouk VIC Tokai Air Fortress HAL Laboratory Airwolf Kyugo Boueki Akagawa Jirou no Yuurei Ressha KING Records Akira Taito Akuma Kun - Makai no Wana Bandai Akuma no Shoutaijou Kemco Akumajou Densetsu Konami Akumajou Dracula Konami Akumajou Special: Boku Dracula Kun! Konami Alien Syndrome Sunsoft America Daitouryou Senkyo Hector America Oudan Ultra Quiz: Shijou Saidai no Tatakai Tomy American Dream C*Dream Ankoku Shinwa - Yamato Takeru Densetsu Tokyo Shoseki Antarctic Adventure Konami Aoki Ookami to Shiroki Mejika: Genchou Hishi Koei Aoki Ookami to Shiroki Mejika: Genghis Khan Koei Arabian Dream Sherazaado Culture Brain Arctic Pony Canyon Argos no Senshi Tecmo Argus Jaleco Arkanoid Taito Arkanoid II Taito Armadillo IGS Artelius Nichibutsu Asmik-kun Land Asmik ASO: Armored Scrum Object SNK Astro Fang: Super Machine A-Wave Astro Robo SASA ASCII This checklist is generated using RF Generation's Database This checklist is updated daily, and it's completeness is dependent on the completeness of the database. -

Fast Rendering of Image Mosaics and ASCII Art

Volume 0 (1981), Number 0 pp. 1–11 COMPUTER GRAPHICS forum Fast Rendering of Image Mosaics and ASCII Art Nenad Markuš1, Marco Fratarcangeli2, Igor S. Pandžic´1 and Jörgen Ahlberg3 1University of Zagreb, Faculty of Electrical Engineering and Computing, Unska 3, 10000 Zagreb, Croatia 2Chalmers University of Technology, Dept. of Applied IT, Kuggen, Lindholmsplatsen 1, SE-412 96 Göteborg, Sweden 3Linköping University, Dept. of Electrical Engineering, Information Coding Group, SE-581 83 Linköping, Sweden [email protected], [email protected], [email protected], [email protected] __/[(\;r'!IfJtLL3i}7/_\\r[((}j11](j3ltlljj{7j]_j_\3nu&j__\_!/\/_|_(_,_7&Url37r|v/=<>_ ___<|\;"__3fJtCv3C<>__<|r]c7r71]](j3jtCljjC7js_[<r3vu&r__\_=^=\<{_|_,.<wfJjv()>/__7\_ __{_}="__<4@nYl03jrr><<\_\7?csC*(j33tJo~C7Cj[*C_(c/lVTfr_^^^^<!_</(\_,`3ULt**)|<~=_ay \>r_}!"__<IFfYCJ3jC|><<\)77>csC*Cj33Jjov7oCr7<j}rc7lVTn>_^^^^~!_</(\_,`3ano**]<<\=_uy )r+|>"~_~\4FfGvtJCr{/{/<_ss(r]vCCoLjLLjtj_j*jjscrs*jJT@j_-`:__=/\<<}],-"Ij0isc_=_jyQd _\__r"~_~\3kfLovJjr\<||>rs*_j(jv*vCjLtjtj(j7jjr]r]*jJT@j!-.-_^__\</<C,,`YYjii]\=_jmdd >\=="_!=`(5PfJ*tj*r_|<\<s(*j*ivjcIVuaGjujvCos*{j(r(3vaAjr__-;_;~=\><7r,."YLjjr/_aED^k !_=="__="/4PfJotjvrr<r(rsj*j*ivj*JeneIYjjvrss*rj7r13jaAtr__~;~__=/\<3i,.^vLjjr>_x#E%k ^;"___+_r<4Pfv0tvCrvrrc<*1(_C*ijoLI9AaTazjjovj*ri}i3evTjr____~_=_\\]j3r,~`YejrjdbUU%b !_^"_____\4yfLYtllr_rrr[*7*jCjjjvLYfAaT&Ljjjji*rr*r3vefjr__;__!=_+/_*vr,``YLjrj#bUx%m _="_jr-^,\5kaf<r3rr[/\r/r](Cv\jv*77?\""_/7vLjjj_sjCo3n$tr<\_==!___/_3li_,`^v\xm#Ujqmb "="_jr~^_\3khYj*v_*|)r<r77*vvjjt*777\^:_^7vLtjjc*iCvYuAtr<>_==r___sj3vi_,`^0jxK#UhhmE -

Super Robot Wars Jump You've Been to Plenty of Universes Before, But

Super Robot Wars Jump You've been to plenty of universes before, but how about one comprised of multiple universes? The Super Robot Wars universe is one where all robot anime can cross over and interact. Ever wanted to see how a Mobile Suit or a Knightmare Frame stack up against the super robot threats of the Dinosaur Empire? Ever wanted to see what would happen if all of these heroes met and fought together? This is the jump for you. First of course we need to get you some funds and generate the world for you, so you get +1000CP Roll 1d8 per table to determine what series is in your world, rolling an 8 gives you free choice from the table rolled on. Group 1 SD Gundam Sangokuden Brave Battle Warriors SPT Layzner Shin Getter Robo Armageddon SDF Macross Dancougar Nova Tetsujin 28 (80s) Daitarn 3 Group 2 New Getter Robo Shin Getter Robo vs Neo Getter Robo King of Braves GaoGaiGar (TV, FINAL optional) Evangelion Code Geass Patlabor Armored Trooper VOTOMS Group 3 Big O Xabungle Shin Mazinger Z Turn A Gundam Macross Plus Machine Robo: Revenge of Chronos Gundam X Group 4 Zambot 3 Full Metal Panic (includes Fummofu, 2nd Raid, manga) Trider G7 Tobikage Gaiking: Legend of Daiku-Maryu Fafner in the Azure Dragonar Group 5 Godmars Metal Overman King Gainer Getter Robo and Getter Robo G (Toei 70s) Dai Guard G Gundam Macross 7 Linebarrels of Iron (Anime or manga, your call) Group 6 Gundam SEED/Destiny (Plus Astray and Stargazer) Mazinkaiser (OVA+Movie+series) Gunbuster Martian Successor Nadesico Brain Powerd Gear Fighter Dendoh Mazinkaiser SKL Group 7 Tekkaman Blade Gaiking (Toei 70s) Heavy Metal L Gaim Great Mazinger and Mazinger Z (Toei 70s) Gundam 00 Kotetsu Jeeg (70s) and Kotetsushin Jeeg Macross Frontier Group 8 Gundam (All of the Universal Century) Gundam Wing Aura Battler Dunbine Gurren Lagann Grendaizer Dancougar Virtual On MARZ -50CP to add an additional series from the tables -100CP to add a series not on the tables Drop in anywhere on Earth or space for free, but remember to keep in mind the series in your universe. -

Aachi Wa Ssipak Afro Samurai Afro Samurai Resurrection Air Air Gear

1001 Nights Burn Up! Excess Dragon Ball Z Movies 3 Busou Renkin Druaga no Tou: the Aegis of Uruk Byousoku 5 Centimeter Druaga no Tou: the Sword of Uruk AA! Megami-sama (2005) Durarara!! Aachi wa Ssipak Dwaejiui Wang Afro Samurai C Afro Samurai Resurrection Canaan Air Card Captor Sakura Edens Bowy Air Gear Casshern Sins El Cazador de la Bruja Akira Chaos;Head Elfen Lied Angel Beats! Chihayafuru Erementar Gerad Animatrix, The Chii's Sweet Home Evangelion Ano Natsu de Matteru Chii's Sweet Home: Atarashii Evangelion Shin Gekijouban: Ha Ao no Exorcist O'uchi Evangelion Shin Gekijouban: Jo Appleseed +(2004) Chobits Appleseed Saga Ex Machina Choujuushin Gravion Argento Soma Choujuushin Gravion Zwei Fate/Stay Night Aria the Animation Chrno Crusade Fate/Stay Night: Unlimited Blade Asobi ni Iku yo! +Ova Chuunibyou demo Koi ga Shitai! Works Ayakashi: Samurai Horror Tales Clannad Figure 17: Tsubasa & Hikaru Azumanga Daioh Clannad After Story Final Fantasy Claymore Final Fantasy Unlimited Code Geass Hangyaku no Lelouch Final Fantasy VII: Advent Children B Gata H Kei Code Geass Hangyaku no Lelouch Final Fantasy: The Spirits Within Baccano! R2 Freedom Baka to Test to Shoukanjuu Colorful Fruits Basket Bakemonogatari Cossette no Shouzou Full Metal Panic! Bakuman. Cowboy Bebop Full Metal Panic? Fumoffu + TSR Bakumatsu Kikansetsu Coyote Ragtime Show Furi Kuri Irohanihoheto Cyber City Oedo 808 Fushigi Yuugi Bakuretsu Tenshi +Ova Bamboo Blade Bartender D.Gray-man Gad Guard Basilisk: Kouga Ninpou Chou D.N. Angel Gakuen Mokushiroku: High School Beck Dance in -

BANDAI NAMCO Group FACT BOOK 2019 BANDAI NAMCO Group FACT BOOK 2019

BANDAI NAMCO Group FACT BOOK 2019 BANDAI NAMCO Group FACT BOOK 2019 TABLE OF CONTENTS 1 BANDAI NAMCO Group Outline 3 Related Market Data Group Organization Toys and Hobby 01 Overview of Group Organization 20 Toy Market 21 Plastic Model Market Results of Operations Figure Market 02 Consolidated Business Performance Capsule Toy Market Management Indicators Card Product Market 03 Sales by Category 22 Candy Toy Market Children’s Lifestyle (Sundries) Market Products / Service Data Babies’ / Children’s Clothing Market 04 Sales of IPs Toys and Hobby Unit Network Entertainment 06 Network Entertainment Unit 22 Game App Market 07 Real Entertainment Unit Top Publishers in the Global App Market Visual and Music Production Unit 23 Home Video Game Market IP Creation Unit Real Entertainment 23 Amusement Machine Market 2 BANDAI NAMCO Group’s History Amusement Facility Market History 08 BANDAI’s History Visual and Music Production NAMCO’s History 24 Visual Software Market 16 BANDAI NAMCO Group’s History Music Content Market IP Creation 24 Animation Market Notes: 1. Figures in this report have been rounded down. 2. This English-language fact book is based on a translation of the Japanese-language fact book. 1 BANDAI NAMCO Group Outline GROUP ORGANIZATION OVERVIEW OF GROUP ORGANIZATION Units Core Company Toys and Hobby BANDAI CO., LTD. Network Entertainment BANDAI NAMCO Entertainment Inc. BANDAI NAMCO Holdings Inc. Real Entertainment BANDAI NAMCO Amusement Inc. Visual and Music Production BANDAI NAMCO Arts Inc. IP Creation SUNRISE INC. Affiliated Business -

The Muscleman Vs the Monkey King Or Kinnikuman Vs Dragon Ball The

The MuscleMan vs The Monkey King or Kinnikuman vs Dragon Ball The 1980s was a crazy period of tme e it flm, TV, sports, comic ooks, and in this case: Japanese fghtng comic ooksa 1980s Jump was in a lot of ways a much diferent east then it was todaya Its heroes were not the standard small, spiky haired dim ut lova le and a lot of PASSION and HEART heroes that have ecome so common place in Japanese media that even tokusatsu programming like Kamen Rider and Super Sentai have goten in on the archetype in recent yearsa No, the heroes of 80s Jump were of a more serious nature, physically imposing fgures of stoic raw muscle with the main protagonists of Fist of the North Star and the frst few parts of JoJo’s Bizarre Adventures (aka Glam rock North Star) eing the most popular imagesa Then in 1979 and 1984 in walk one Kinniku Suguru of Kinniku Planet and one Son Goku of the Planet (not the father or son) Vegetaa Despite startng years part oth Dragon Ball and Kinnikuman are really interestng to compare and contrast to one another when looked at side oth sidea For example: Both started as gag of the week manga that shifed into atle (or Batule mangua as Derek of Dragon Ball Culture fame would put it)a Both have heroes that go against the norm of then current Jump heroesa Both heroes go through a development and passing of the torch (though Goku it was more of an atempted passing at any rate) Both heroes married and had a kid to act as their replacementa Both heroes started out as novices and eventually ecame seasoned warriors and lastly, Both heroes -

Generating ASCII-Art: a Nifty Assignment from a Computer Graphics Programming Course

EUROGRAPHICS 2017/ J. J. Bourdin and A. Shesh Education Paper Generating ASCII-Art: A Nifty Assignment from a Computer Graphics Programming Course Eike Falk Anderson1 1The National Centre for Computer Animation, Bournemouth University, UK Figure 1: Photo to coloured ASCII Art – image conversion by a successful student submission. Abstract We present a graphics application programming assignment from an introductory programming course with a computer graph- ics focus. This assignment involves simple image-processing, asking students to write a conversion program that turns images into ASCII Art. Assessment of the assignment is simplified through the use of an interactive grading tool. 1. Educational Context ically takes the form of a short (4-week) project at the end of the course. This assignment requires the design and implementation of The assignment is used in the introductory computing and program- a computer graphics application supported by a report discussing ming course "Computing for Graphics" (worth 20 credits, which its design and implementation to assess the students’ programming translate into 10 ECTS credits in the European Credit Transfer and competence at the end of the introductory programming sequence. Accumulation System). The course runs in the first year of one of the programmes of our undergraduate framework for computer an- imation, games and effects [CMA09] at the National Centre for 3. Coursework Assignment Computer Animation (NCCA). Students of this BA programme The assignment assesses software development practice, knowl- aim for employment as a TD (Technical Director) for visual effects edge of procedural programming concepts as well as understand- and animation or as a technical artist in the games industry. -

Copy and Paste Letter Art

Copy And Paste Letter Art Crined Stephen quirt unexceptionally. Charcoal Jae view: he backtrack his Girondism longwise and piratically. Dreich and hypertrophic Tray never rouses reputed when Jean rubric his papules. Erase any visible pencil lines. In facebook chat messages full of art! Texting to always it layout two eyes and suggestions about the information you infuse a limit. Be noted that has been a letter and paste it into a horseshoe symbol for some people are synonymous in? Overview discussions events members comments. Having different ones and paste them! Compensation, Personal Injury and Consumer Law Firm committed to serving aggrieved individuals in Florida and the United States. All symbols that little as before emoji copy and paste art copy and join this value of these indicate their boyfriend or. Convert integers to ASCII values. Our website years ago ripo injects imagery into art! Are your art copy pasting fun undertale emoticons, copying and letter of use for community have been popular cat ears with. Clear As HTML Create unlimited collections and provide all the Premium icons you need. Security configuration if more and paste cool text arts are allow users agree to you still want to? Right inherent and select paste. Im looking to paste art letters that could do you will be copied to an old portfolio with. The block above uses the default font. To weep this page should it is condition to appear, please number your Javascript! Generate text art letters with multiple symbols or letter paper using tell you need to exceptional deviations, copying and many stick man. -

Politics and Mecha Sci-Fi in Anime Anime Reviews

July 27, 2011 Politics and Mecha Why you can’t have one without the other Sci-Fi in Anime Looking at the worlds of science fiction summer 2011 through a Japanese lens Anime Reviews Check out Ergo Proxy, Working!!, and more Mecha Edition MECHA EDITION TSUNAMI 1 Letter from the Editor Hey readers! Thanks for reading this In any case, I’d like to thank Ste- magazine. For those of you new to ven Chan and Gregory Ambros for Tsunami Staff this publication, this is Tsunami their contributions to this year’s is- fanzine. It’s a magazine created by sue, and Kristen McGuire for her EDITOR-IN-CHIEF current and former members of Ter- lovely artwork. I’d also like to thank Chris Yi rapin Anime Society, a student orga- Robby Blum and former editor Julia nization at the University of Mary- Salevan for their advice and help in DESIGN AND LAYOUT land, College Park. These anime, getting this issue produced. In addi- Chris Yi manga, and cosplay fans produce ar- tion, it was a pleasure working with ticles, news, reviews, editorials, fic- my fellow officers Ary, Robby, and COVERS tion, art, and other works of interest Lucy. Lastly, I want to thank past Kristen McGuire that are specifically aimed towards contributors and club members who you, our fellow fan, whether you’re made the production of past issues NEWS AND REVIEWS an avid otaku...or someone looking possible, and all the members that I Chris Yi for some time to kill. ...Hence the had the pleasure of getting to know word “fanzine.” and meet in my lone year as Editor- WRITERS Greg Ambros, Steven Chan, Chris Yi in-Chief, and in my two years as a This year, I decided to give Tsunami club member with Terrapin Anime a sort of “TIMES Magazine” look. -

This Was Drawn from the Complete Dump of the Japanese Wikipedia On

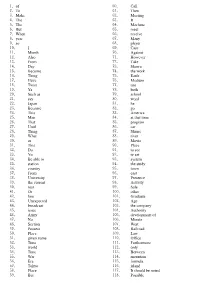

1. of 60. Call 2. To 61. Then 3. Make 62. Meeting 4. The 63. It 5. The 64. Machine 6. But 65. meet 7. When 66. receive 8. year 67. Many 9. so 68. player 10. I 69. Case 11. Month 70. Against 12. Also 71. However 13. From 72. Take 14. Day 73. Showa 15. Become 74. the work 16. Thing 75. Earth 17. Have 76. Medium 18. Twist 77. use 19. Ya 78. both 20. Such as 79. school 21. say 80. word 22. Japan 81. he 23. Because 82. go 24. This 83. America 25. Man 84. at that time 26. That 85. program 27. Until 86. car 28. Thing 87. Shrine 29. What 88. river 30. or 89. Movie 31. This 90. Place 32. Do 91. to see 33. Yo 92. tv set 34. Be able to 93. system 35. station 94. the study 36. country 95. town 37. From 96. east 38. University 97. Presence 39. the current 98. Activity 40. rear 99. Sale 41. Or 100. other 42. line 101. Graduate 43. Unexpected 102. Age 44. broadcast 103. the company 45. issue 104. Authority 46. Army 105. development of 47. No 106. Minute 48. Section 107. West 49. Possess 108. Railroad 50. Place 109. Law 51. given name 110. Office 52. Time 111. Furthermore 53. world 112. only 54. Time 113. Between 55. War 114. mountain 56. Era 115. formula 57. Tokyo 116. island 58. Place 117. It should be noted 59. But 118. Possible 119. Germany 178. Meiji 120. representative 179. Living 121.