15213 Lecture 16: System-Level I/O

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

System Calls and I/O

System Calls and I/O, CS 241, January 27, 2012. Copyright ©: University of Illinois CS 241 Staff. This lecture. Goals: get you familiar with the basic system and I/O calls needed to do programming. Things covered in this lecture: basic file system calls, I/O calls, signals. Note: we will come back later to discuss the above things at the concept level. System calls versus function calls. Function call: a process invokes fnCall(); the caller and callee are in the same process, with the same user and the same “domain of trust”. System call: a process invokes sysCall() and control passes to the OS; the OS is trusted and the user is not, the OS has super-privileges and the user does not, and the OS must take measures to prevent abuse. System calls: a system call is a request to the operating system to perform some activity. System calls are expensive, because the system needs to perform many things to carry one out: the computer (hardware) saves its state; the OS code takes control of the CPU and privileges are updated; the OS examines the call parameters; the OS performs the requested function; the OS saves its state (and call results); the OS returns control of the CPU to the caller. Steps for Making a System Call
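The contrast drawn in the excerpt above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `add` and `write_via_syscall` are hypothetical, and `os.write` is used because it is a thin wrapper around the operating system's write call, so each invocation crosses from the process into the OS, while `add` never leaves the process.

```python
import os


def add(a, b):
    # Ordinary function call: caller and callee run in the same process
    # and share the same "domain of trust".
    return a + b


def write_via_syscall(fd, data):
    # os.write asks the operating system to write bytes to a file
    # descriptor; the OS checks the parameters, does the work, and
    # returns the number of bytes actually written.
    return os.write(fd, data)


# A pipe keeps the example self-contained: no files or terminal needed.
read_fd, write_fd = os.pipe()
written = write_via_syscall(write_fd, b"hello\n")
os.close(write_fd)
received = os.read(read_fd, 100)  # another request to the OS
os.close(read_fd)

total = add(2, 3)  # stays entirely inside the process
```

The return value of `os.write` matters: the OS reports how much it wrote, and a caller that ignores it cannot detect a short write.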

Newbrain Handbook for Disk Users

ADDITIONAL INFORMATION: Any information on the system which could not be included in the Handbook for Disk Users will be found in a file on the system disk called `INFO'. To read the information, enter CP/M, type TYPE INFO and press NEWLINE. As the text is shown on the screen, hold down CONTROL and press S to stop and restart the display. How to use this Manual: If you just want to use prepared programs on your NewBrain, and don't want to know about how computers work ... READ SECTIONS 1, 2, 3; LOOK THROUGH SECTIONS 10-12. If you know some BASIC already and want to develop your ... WORK THROUGH THE WHOLE OF THIS MANUAL AS A BASIS ... CONTENTS: 1 Introduction; 2 Connecting up; 3 Getting started; 4 NewBrain BASIC with disk-Programs; 5 NewBrain BASIC with disk-Data Files; 6 NewBrain BASIC with disk-Random Access Files; 7 Cataloguing files through BASIC; 8 Using cassettes; 9 What is CP/M?; 10 Working with disks; 11 Using CP/M with NewBrain ...

RDS) Distribution

2001/10/30 Data Formats of the NSRL Reference Data Set (RDS) Distribution. Change Record: 1. 2001/01/25 – Editorial changes, corrections to record layouts, modification of distribution naming and versioning. 2. 2001/01/29 – Addition of Virus data element to File record, modification of data records to use ASCII 10 character instead of ASCII 13 to end a record, addition of information on physical distribution to indicate additional records or replacement database. 3. 2001/03/08 – Added section on fifth file, SHA-1.txt. 4. 2001/07/06 – Modified file structures, file names, distribution information. 5. 2001/09/05 – Editorial changes and corrections. 6. 2001/10/11 – Modified manufacturer file structure, added SpecialCode field to NSRLFILE.TXT structure, and added clarifications to physical file descriptions. 7. 2001/10/23 – Modified NSRLFILE structure to remove PathHash. 8. 2001/10/30 – Modified NSRLFILE structure to remove FileExt. Introduction: This report describes the format of data included in the distribution of the National Software Reference Library (NSRL) Reference Data Set (RDS). The NSRL is a repository of commercial-off-the-shelf (COTS) software. The RDS consists of file profiles made up of file identifying information and hash values from the individual files provided in a software package’s installation media. An extract of the NSRL database is produced periodically and made available through the U.S. Department of Commerce’s (DoC) National Institute of Standards and Technology (NIST) Special Database program as NIST Special Database #28. (See “Physical Distribution Media.”) As software is received in the NSRL, it is processed on a production machine that runs programs to compute a file signature, or hash value, for each file within the installation media.

Newline Characters Should Map to a Completely Ignorable Collation Element in the DUCET Marc Lodewijck (Brussels, Belgium) October 28, 2016

Proposal for consideration by UTC. Newline characters should map to a completely ignorable collation element in the DUCET. Marc Lodewijck (Brussels, Belgium), October 28, 2016.

Assume the following records, which compare tertiary-equal: "xy#", "xy", "x#y"

With the UCA “Shifted” option for handling variable collation elements, the sorting result is as follows:

[3] x#y [1EFF 1F0B | 0020 0020 | 0002 0002 | FFFF 038F FFFF |]
[2] xy [1EFF 1F0B | 0020 0020 | 0002 0002 | FFFF FFFF |]
[1] xy# [1EFF 1F0B | 0020 0020 | 0002 0002 | FFFF FFFF 038F |]

Inserting a variable character makes a string sort before the string without it; and appending a variable character makes a string sort after the string without it.

With the “Shift-trimmed” option, this is the outcome:

[2] xy [1EFF 1F0B | 0020 0020 | 0002 0002 | |]
[3] x#y [1EFF 1F0B | 0020 0020 | 0002 0002 | FFFF 038F |]
[1] xy# [1EFF 1F0B | 0020 0020 | 0002 0002 | FFFF FFFF 038F |]

Inserting a variable character anywhere makes a string sort after the string without it; and the string having a variable character in the lowest position makes it sort before the other string. The “Shift-trimmed” option is the same as “Shifted”, except that trailing high-quaternary weights (from regular characters) are removed from the sort key. This means that, compared with “Shifted”, the “Shift-trimmed” option sorts strings without variable characters before ones with variable characters added: the string without variable characters has an empty fourth level.

Now suppose we wish to sort the following text strings stored in a file: It is obvious that we won’t strip off the newline character(s) at the end of each line, before it is processed further.

Connect. Create. Collaborate

Connect. Create. Collaborate. Fall/Winter 2020. WHY NEWLINE? Easy for anyone to walk up and use: If a solution isn’t easy to use, we won’t bring it to the market. All of our products are designed with you in mind and it is the key to our success. Completely Non-Proprietary: We work in your world. No matter what software or hardware you want to use, our solutions give you the freedom to collaborate how you choose. Distance Learning Flexibility: Whether teaching students remotely, in-person, or in a blended learning environment, Newline’s solutions help keep your teachers connected to their students. WE HELP YOU MAKE AN IMPACT IN YOUR CLASSROOM: Newline creates the classroom tools of the future. From interactive flat panels that keep students engaged through the lesson, to digital tools that enhance your favorite software, Newline reinvents student engagement and classroom communication in a time of remote and blended learning. Newline Interactive is an award-winning manufacturer of interactive displays, intuitive software, and other interactive innovations that are easy to use and increase student engagement in the classroom. We are a leading provider of software and hardware that helps schools find more ways to engage their students, train their teachers, and inspire more active learning. It is your passion to teach. Newline Interactive gets students excited to learn. NEWLINE INTERACTIVE & NON-TOUCH DISPLAYS: SUPERIOR QUALITY FOR THE BEST EXPERIENCE. The driving power behind the Newline interactive ecosystem is a full range of superior interactive displays and large format displays. NEWLINE DISPLAY SPECIFICATION (RS+, NT, X, IP): Non-Proprietary √ √ √ √

AVR244 AVR UART As ANSI Terminal Interface

AVR244: AVR UART as ANSI Terminal Interface. 8-bit Microcontroller Application Note. Features: • Make use of standard terminal software as user interface to your application. • Enables use of a PC keyboard as input and ASCII graphics to display status and control information. • Drivers for ANSI/VT100 Terminal Control included. • Interactive menu interface included. Introduction: This application note describes some basic routines to interface the AVR to a terminal window using the UART (hardware or software). The routines use a subset of the ANSI Color Standard to position the cursor and choose text modes and colors. Routines for simple menu handling are also implemented. The routines can be used to implement a human interface through an ordinary terminal window, using interactive menus and selections. This is particularly useful for debugging and diagnostics purposes. The routines can be used as a basic interface for implementing more complex terminal user interfaces. To better understand the code, an introduction to ‘escape sequences’ is given below. Escape Sequences: The special terminal functions mentioned (e.g. text modes and colors) are selected using ANSI escape sequences. The AVR sends these sequences to the connected terminal, which in turn executes the associated commands. The escape sequences are strings of bytes starting with an escape character (ASCII code 27) followed by a left bracket ('['). The rest of the string decides the specific operation. For instance, the command '1m' selects bold text, and the full escape sequence thus becomes 'ESC[1m'. There must be no spaces between the characters, and the commands are case sensitive. The various operations used in this application note are described below.
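The escape-sequence layout described in the excerpt above (escape character, left bracket, then a command) can be sketched as follows. This is not the application note's AVR code; the helper names `csi` and `bold` are hypothetical, and the reset command `0m` is the standard ANSI "all attributes off" command rather than something quoted in the excerpt.

```python
ESC = "\x1b"  # the escape character, ASCII code 27


def csi(command):
    # Build a full sequence: ESC, a left bracket, then the command,
    # with no spaces in between.
    return f"{ESC}[{command}"


BOLD = csi("1m")   # '1m' selects bold text, as in the excerpt
RESET = csi("0m")  # assumption: standard ANSI reset, not from the excerpt


def bold(text):
    # Wrap text so a terminal renders it bold, then restore defaults.
    return f"{BOLD}{text}{RESET}"


if __name__ == "__main__":
    # On an ANSI/VT100-compatible terminal this should appear in bold.
    print(bold("status: OK"))
```

Ending with a reset keeps the bold attribute from leaking into whatever the terminal prints next.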

8. Character Strings

8. Character Strings. 8.1. Introduction. Until now we’ve avoided reading or writing text in our programs, and have worked exclusively with numbers. Even though we use a lot of numbers in science and engineering, we still need to work with words sometimes. Wouldn’t it be convenient to have a header at the top of each column of numbers in a data file, saying what that column means? We might also want to use text for data values themselves, like “on” or “off” instead of zero or one, to make our data easier for humans to read. Even if we have a glossary of numerical values and their meanings, like “32 = Virginia, 33 = Maryland”, it’s handy to be able to just look at the data in a file and see its meaning directly, without having to go look up the meaning of each number. Early writing systems used written symbols to represent the sounds of speech. Learning to read requires that you learn a sort of glossary of these symbols and their speech equivalents. Computers can only store data in the form of binary numbers, so somewhere there’s going to need to be a glossary that matches up text with numerical equivalents. Figure 8.1: Some very early text: The Epic of Gilgamesh, first written down around 2,000 BCE. It tells the story of King Gilgamesh and his friend Enkidu and their epic journey to visit the wise man Utnapishtim, who was a survivor of the Deluge. New fragments of the Epic were discovered in an Iraqi museum in 2015. Source: Wikimedia Commons.

Learning the Vi Editor

Learning the vi Editor. en.wikibooks.org, December 29, 2013. On the 28th of April 2012 the contents of the English as well as German Wikibooks and Wikipedia projects were licensed under Creative Commons Attribution-ShareAlike 3.0 Unported license. A URI to this license is given in the list of figures on page 103. If this document is a derived work from the contents of one of these projects and the content was still licensed by the project under this license at the time of derivation this document has to be licensed under the same, a similar or a compatible license, as stated in section 4b of the license. The list of contributors is included in chapter Contributors on page 101. The licenses GPL, LGPL and GFDL are included in chapter Licenses on page 107, since this book and/or parts of it may or may not be licensed under one or more of these licenses, and thus require inclusion of these licenses. The licenses of the figures are given in the list of figures on page 103. This PDF was generated by the LaTeX typesetting software. The LaTeX source code is included as an attachment (source.7z.txt) in this PDF file. To extract the source from the PDF file, you can use the pdfdetach tool included in the poppler suite, or the http://www.pdflabs.com/tools/pdftk-the-pdf-toolkit/ utility. Some PDF viewers may also let you save the attachment to a file. After extracting it from the PDF file you have to rename it to source.7z.

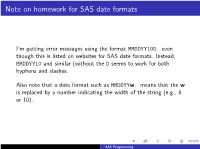

STAT579: SAS Programming

Note on homework for SAS date formats: I'm getting error messages using the format MMDDYY10D. even though this is listed on websites for SAS date formats. Instead, MMDDYY10 and similar (without the D) seem to work for both hyphens and slashes. Also note that a date format such as MMDDYYw. means that the w is replaced by a number indicating the width of the string (e.g., 8 or 10). SAS Programming: SAS data sets (Chapter 4 of Cody book). SAS creates data sets internally once they are read in from a Data Step. The data sets can be stored in different locations and accessed later on. The default is to store them in WORK, so if you create a data set using data address; the log file will say that it created a SAS dataset called WORK.ADDRESS. You can navigate to the newly created SAS dataset. In SAS Studio, go to the Libraries tab on the left (it usually appears toward the bottom until you click on it). Then WORK.ADDRESS should appear. Making datasets permanent: You can also make SAS datasets permanent. This is done using the libname statement. Permanent SAS datasets: The new dataset should be available to be accessed directly from other SAS programs without reading in the original data. This can save a lot of time for large datasets. If the SAS dataset is called mydata, the SAS dataset file will be called mydata.sas7bdat, where the 7 refers to the data structures used in version 7 (which haven't changed up to version 9).

Operating System Lab Manual

OPERATING SYSTEM LAB MANUAL. BASICS OF UNIX COMMANDS. Ex. No: 1.a INTRODUCTION TO UNIX. AIM: To study the basics of UNIX. UNIX: It is a multi-user operating system, developed at AT&T Bell Laboratories, USA, in 1969. Ken Thompson along with Dennis Ritchie developed it from the MULTICS (Multiplexed Information and Computing Service) OS. By 1980, UNIX had been completely rewritten using the C language. LINUX: It is similar to UNIX and was created by Linus Torvalds. All UNIX commands work in Linux. Linux is open source software. The main feature of Linux is that it coexists with other OSes such as Windows and UNIX. STRUCTURE OF A LINUX SYSTEM: It consists of three parts: a) UNIX kernel, b) Shells, c) Tools and Applications. UNIX KERNEL: The kernel is the core of the UNIX OS. It controls all tasks, schedules all processes and carries out all the functions of the OS. It decides when one program stops and another starts. SHELL: The shell is the command interpreter in the UNIX OS. It accepts commands from the user, then analyses and interprets them. BASICS OF UNIX COMMANDS. Ex. No: 1.b BASIC UNIX COMMANDS. AIM: To study basic UNIX commands and various UNIX editors such as vi, ed, ex and EMACS. CONTENT: Note: Syn -> Syntax.

a) date: used to check the date and time. Syn: $ date

Format   Purpose                                 Example       Result
+%m      To display only month                   $ date +%m    06
+%h      To display month name (abbreviated)     $ date +%h    Jun
+%d      To display day of month                 $ date +%d    01
+%y      To display last two digits of the year  $ date +%y    09
+%H      To display hours                        $ date +%H    10
+%M      To display minutes                      $ date +%M    45
+%S      To display seconds                      $ date +%S    55

b) cal: used to display the calendar. Syn: $ cal 2 2009

c) echo: used to print the message on the screen.
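The format codes listed for `date` in the excerpt above are the usual strftime-style codes, so they can be tried without a shell. The sketch below is only an illustration: the timestamp is a hypothetical value chosen to match the sample results in the excerpt, and the month-name code is left out because its output depends on the locale.

```python
from datetime import datetime

# Hypothetical timestamp chosen to line up with the sample results:
# 1 June 2009, 10:45:55.
sample = datetime(2009, 6, 1, 10, 45, 55)


def field(fmt):
    # Format one field of the sample timestamp with a strftime code.
    return sample.strftime(fmt)


# The same numeric codes as in the excerpt, without the leading '+'
# that the date command expects on its command line.
fields = {fmt: field(fmt) for fmt in ("%m", "%d", "%y", "%H", "%M", "%S")}

if __name__ == "__main__":
    for fmt, value in fields.items():
        print(f"date +{fmt} -> {value}")
```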

Command Line Interface Overview

Command Line Interface Overview. Note: The ASR 5000 hardware platform has reached end of life and is not supported in this release. Any references to the ASR 5000 (specific or implied) or its components in this document are coincidental. Full details on the ASR 5000 hardware platform end of life are available at: https://www.cisco.com/c/en/us/products/collateral/wireless/asr-5000-series/eos-eol-notice-c51-735573.html. This chapter describes the numerous features in the command line interface (CLI). It includes information about the architecture of the CLI, its command modes and user privileges, how to obtain help within the CLI, and other key items. The operating system (StarOS™) controls the overall system logic, control processes, and the CLI. The CLI is a multi-threaded user interface that allows you to manipulate, configure, control and query the hardware and software components that make up the system and its hosted services. In addition, the CLI can host multiple instances of management and service configuration sessions. This allows multiple users to simultaneously access and manage multiple hosted services. This section provides the following information about the CLI: • CLI Structure • CLI Command Modes • CLI Administrative Users • CLI Contexts • Understanding the CLI Command Prompt • CLI Command Syntax • Entering and Viewing CLI Commands • Obtaining CLI Help • Exiting the CLI and CLI Command Modes • IP Address Notation • Alphanumeric Strings. CLI Structure: CLI commands are strings of commands or keywords and user-specified arguments that set or modify specific parameters of the system.

CENG328 Operating Systems Laboratory Chapter 7

CENG328 Operating Systems Laboratory, Chapter 7. 1 File System. Unix and Linux based operating systems use specific filesystems (ext2 / ext3 / ext4, xfs, btrfs, etc.) for storing files, directories and other filesystem objects such as links, pipes and sockets. These filesystems usually store information such as filename, size, inode number, owning user / group info, access modes, timestamps, etc. When listing files with the ls -l command, these different file types can be recognized by the first character, next to the permissions:

• Regular File: Stores data (text, image, audio...) that can be read/written by programs. Denoted by the "-" sign.
• Directory: A data structure that stores other files, directories, etc. Denoted by the "d" sign.
• Link: A softlink (symlink) is a file that acts as a pointer to another file. Denoted by the "l" sign.
• Socket: A filesystem-level IPC method to establish communication between processes. Denoted by the "s" sign.
• Pipe: A filesystem-level IPC method to establish communication between processes. Denoted by the "p" sign.
• Character device: A device with which the driver communicates in single characters. Denoted by the "c" sign.
• Block device: A device with which the driver communicates in blocks of data. Denoted by the "b" sign.

$ ls -l
drwxrwxr-x 2 user user 4096 May 3 18:29 backups
lrwxrwxrwx 1 user user 6 May 3 18:29 list -> list.1
-rw-rw-r-- 1 user user 132 May 3 18:29 list.0
-rw-rw-r-- 1 user user 218 May 3 18:29 list.1
-rw-rw-r-- 1 user user 63 May 3 18:29 list.2
brw-rw---- 1 root disk 7, 6 Apr 24 10:11 sda1
prw-rw-r-- 1 user user 0 May 3 18:29 somepipe
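The type characters in the listing above can also be derived programmatically from a file's mode bits. The following is a minimal sketch using Python's standard `stat` module; the helper names `type_char` and `path_type_char` are hypothetical, and "?" is an assumed fallback for modes that match none of the seven types.

```python
import os
import stat

# (predicate, type character) pairs, in the order the excerpt lists them.
_TYPE_CHECKS = [
    (stat.S_ISREG, "-"),   # regular file
    (stat.S_ISDIR, "d"),   # directory
    (stat.S_ISLNK, "l"),   # symbolic link
    (stat.S_ISSOCK, "s"),  # socket
    (stat.S_ISFIFO, "p"),  # named pipe
    (stat.S_ISCHR, "c"),   # character device
    (stat.S_ISBLK, "b"),   # block device
]


def type_char(mode):
    # Map a raw st_mode value to the character shown first by ls -l.
    for predicate, char in _TYPE_CHECKS:
        if predicate(mode):
            return char
    return "?"  # assumed fallback for unrecognised modes


def path_type_char(path):
    # lstat does not follow symlinks, so a link is reported as "l"
    # rather than as the type of the file it points to.
    return type_char(os.lstat(path).st_mode)


if __name__ == "__main__":
    for name in sorted(os.listdir(".")):
        print(path_type_char(name), name)
```

Using `os.lstat` rather than `os.stat` is the design choice that matters here: `os.stat` would follow `list -> list.1` and report a regular file.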