Automatic Category Label Coarsening for Syntax-Based MT

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Disk Clone Industrial

Disk Clone Industrial USER MANUAL Ver. 1.0.0 Updated: 9 June 2020 | Contents | ii Contents Legal Statement............................................................................... 4 Introduction......................................................................................4 Cloning Data.................................................................................................................................... 4 Erasing Confidential Data..................................................................................................................5 Disk Clone Overview.......................................................................6 System Requirements....................................................................................................................... 7 Software Licensing........................................................................................................................... 7 Software Updates............................................................................................................................. 8 Getting Started.................................................................................9 Disk Clone Installation and Distribution.......................................................................................... 12 Launching and initial Configuration..................................................................................................12 Navigating Disk Clone.....................................................................................................................14 -

Forest Quickstart Guide for Linguists

Forest Quickstart Guide for Linguists Guido Vanden Wyngaerd [email protected] June 28, 2020 Contents 1 Introduction 1 2 Loading Forest 2 3 Basic Usage 2 4 Adjusting node spacing 4 5 Triangles 7 6 Unlabelled nodes 9 7 Horizontal alignment of terminals 10 8 Arrows 11 9 Highlighting 14 1 Introduction Forest is a package for drawing linguistic (and other) tree diagrams de- veloped by Sašo Živanović. This manual provides a quickstart guide for linguists with just the essential things that you need to get started. More 1 extensive documentation is available from the CTAN-archive. Forest is based on the TikZ package; more information about its commands, in par- ticular those controlling the appearance of the nodes, the arrows, and the highlighting can be found in the TikZ documentation. 2 Loading Forest In your preamble, put \usepackage[linguistics]{forest} The linguistics option makes for nice trees, in which the branches meet above the two nodes that they join; it will also align the example number (provided by linguex) with the top of the tree: (1) CP C IP I VP V NP 3 Basic Usage Forest uses a familiar labelled brackets syntax. The code below will out- put the tree in (1) above (\ex. requires the linguex package and provides the example number): \ex. \begin{forest} [CP[C][IP[I][VP[V][NP]]]] \end{forest} Forest will parse the above code without problem, but you are likely to soon get lost in your labelled brackets with more complicated trees if you write the code this way. The better alternative is to arrange the nodes over multiple lines: 2 \ex. -

Metadata for Everyone a Simple, Low-Cost Methodology Timothy D

SAS Global Forum 2008 Data Integration Paper 138-2008 Metadata for Everyone A Simple, Low-Cost Methodology Timothy D. Brown, Altoona, IA ABSTRACT In the context of Base SAS® programming, this paper uses “hardcoded” values as an introduction to “metadata” and the reasons for using it. It then describes a low cost and simple methodology for maintaining any kind of metadata. INTRODUCTION This discussion will take an indirect approach to defining metadata. It’ll describe the metadata which might be included, or hard-coded, in a Base SAS program and propose alternatives to storing and using the metadata. Outside of programs “data” and “code” are distinct. However within programs, the distinction gets blurred when data values, called “hardcoded” data, are included within the code. Hardcoded values include, but are not limited to: • Text constants, literals • Names of companies, people, organizations and places • Directory paths and file names • Parameters on SAS procedures such as WHERE, KEEP, DROP, RENAME, VARS, BY etc • Numeric constants including dates* • Statistical constants • Period begin and end dates • Mixed text and numeric values • Expressions in IF and WHERE clauses • What-if scenarios (* excluding dates which are derived logically using a SAS functions such as TODAY(), DATETIME(), INTNX and NXTPD) In addition, many small conversion, cross-reference and look-up tables, which might be hardcoded as SAS formats or read into a program from many different sources, work well as metadata and fit into this framework. Obviously, some hardcoded values might never change in the life of a program. So it might be prudent to leave some hardcoded values in the code. -

Four Ways to Reorder Your Variables, Ranked by Elegance and Efficiency Louise S

Order, Order! Four Ways To Reorder Your Variables, Ranked by Elegance and Efficiency Louise S. Hadden, Abt Associates Inc. ABSTRACT SAS® practitioners are frequently required to present variables in an output data file in a particular order, or standards may require variables in a production data file to be in a particular order. This paper and presentation offer several methods for reordering variables in a data file, encompassing both DATA step and procedural methods. Relative efficiency and elegance of the solutions will be discussed. INTRODUCTION SAS provides us with numerous methods to control all types of SAS output, including SAS data files, data tables in other formats, and ODS output. This paper focuses solely on output SAS data files (which may then be used to generate SAS data files and other types of output from SAS processes), and specifically on DATA step and PROC SQL methods. This short paper and presentation is suitable for all SAS practitioners at all levels of expertise. Attendees will gain a greater understanding of the processes by which SAS assigns variable attributes, including variable/column order within a data file, and how to obtain information on variable attributes – and in the process, learn how to reorder variables within a SAS data file. KNOW THY DATA It is always important to understand fully and explore the inputs to SAS-created output. SAS has provided users with a variety of possibilities in terms of determining locations of variables or columns (and other important details comprising metadata). These possibilities include, but are not limited to: 1. The CONTENTS Procedure 2. -

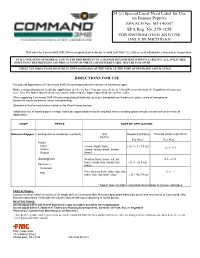

Command 3ME Banana Pepper Label

24 (c) Special Local Need Label for Use on Banana Peppers EPA SLN No. MI-140007 EPA Reg. No. 279-3158 FOR DISTRIBUTION AND USE ONLY IN MICHIGAN This label for Command® 3ME Microencapsulated Herbicide is valid until May 13, 2024 or until withdrawn, canceled or suspended. IT IS A VIOLATION OF FEDERAL LAW TO USE THIS PRODUCT IN A MANNER INCONSISTENT WITH ITS LABELING. ALL APPLICABLE DIRECTIONS, RESTRICTIONS AND PRECAUTIONS ON THE EPA REGISTERED LABEL MUST BE FOLLOWED. THESE USE DIRECTIONS MUST BE IN THE POSSESSION OF THE USER AT THE TIME OF PESTICIDE APPLICATION. DIRECTIONS FOR USE For ground application of Command 3ME Microencapsulated Herbicide on banana pepper. Make a single broadcast herbicide application at 10.7 to 42.7 fl oz per acre (0.25 to 1 lb ai/A) in a minimum of 10 gallons of water per acre. Use the lower specified rate on course soils and the higher specified rate on fine soils. When applying Command 3ME Microencapsulated Herbicide as a pre-transplant soil treatment, place roots of transplants below the chemical barrier when transplanting. Observe all buffer restrictions noted in the Restrictions section. Additional use of labeled post-emerge herbicide applications may be required where existing grass weeds are present at the time of application. CROP PESTS RATE OF APPLICATION Banana Pepper Lambsquarters (herbicide resistant) Soil Broadcast Rates Pounds Active Ingredient Texture Per Acre* Per Acre Foxtail- Giant, Coarse (light) Soils: (10.7 – 21.3 fl oz) 0.25 - 0.5 Green (sand, loamy sand, sandy Robust loam) Goosegrass Medium Soils: (loam, silt, silt 0.5 – 0.75 loam, sandy clay, sandy clay (21.3 - 32 fl oz) Panicum – loam) Common Fine (heavy) Soils: (silty clay, clay 0.75 - 1 Fall loam, silty clay loam, clay) (32 – 42.7 fl oz) * Select lower to higher rates based on lighter to heavier soil types. -

External Commands

5/22/2018 External commands External commands Previous | Content | Next External commands are known as Disk residence commands. Because they can be store with DOS directory or any disk which is used for getting these commands. Theses commands help to perform some specific task. These are stored in a secondary storage device. Some important external commands are given below- MORE MOVE FIND DOSKEY MEM FC DISKCOPY FORMAT SYS CHKDSK ATTRIB XCOPY SORT LABEL 1. MORE:-Using TYPE command we can see the content of any file. But if length of file is greater than 25 lines then remaining lines will scroll up. To overcome through this problem we uses MORE command. Using this command we can pause the display after each 25 lines. Syntax:- C:\> TYPE <File name> | MORE C:\> TYPE ROSE.TXT | MORE or C: \> DIR | MORE 2. MEM:-This command displays free and used amount of memory in the computer. Syntax:- C:\> MEM the computer will display the amount of memory. 3. SYS:- This command is used for copy system files to any disk. The disk having system files are known as Bootable Disk, which are used for booting the computer. Syntax:- C:\> SYS [Drive name] C:\> SYS A: System files transferred This command will transfer the three main system files COMMAND.COM, IO.SYS, MSDOS.SYS to the floppy disk. 4. XCOPY:- When we need to copy a directory instant of a file from one location to another the we uses xcopy command. This command is much faster than copy command. Syntax:- C:\> XCOPY < Source dirname > <Target dirname> C:\> XCOPY TC TURBOC 5. -

SAS Programming Tips

TUESDAY TIPS – SAS PROGRAMMING Weekly Tip for Nov. 3, 2020 Your data dictionary contains some variables that can iterate - for example, the unknown # of COVID tests for an unknown # of infants. How do you manage documentation? A data dictionary for a file based on Electronic Medical Records (EMR) contains variables which represent an unknown number of COVID tests for an unknown number of infants – there is no way to know in advance how many iterations of this variable will exist in the actual data file. In addition, variables in this file may exist for three different groups (pregnant women, postpartum women, and infants), with PR, PP and IN prefixes, respectively. This Tuesday Tip demonstrates how to process such variables in a data dictionary to drive label (and value label) description creation for iterated (and other) labels using SAS functions, as well as other utilities. Using PROC CONTENTS and ODS OUTPUT on an imported data dictionary (example shown above) and a data file from a health care entity, the position ODS OUTPUT object is created, and the column variable is standardized using the UPCASE function. ************************************************************; *** Import Personal Data Dictionary one tab at a time ***; ************************************************************; %macro imptabs(tabn=1, tabnm=identifiers, intab=Identifiers, startrow=10, endcol=H); proc import dbms=xlsx out = temp datafile = " \file.xlsx" replace; RANGE="&intab.$A&startrow.:&endcol.999"; getnames=YES; run; . data labels&tabn.; length label -

Partition.Pdf

Linux Partition HOWTO Anthony Lissot Revision History Revision 3.5 26 Dec 2005 reorganized document page ordering. added page on setting up swap space. added page of partition labels. updated max swap size values in section 4. added instructions on making ext2/3 file systems. broken links identified by Richard Calmbach are fixed. created an XML version. Revision 3.4.4 08 March 2004 synchronized SGML version with HTML version. Updated lilo placement and swap size discussion. Revision 3.3 04 April 2003 synchronized SGML and HTML versions Revision 3.3 10 July 2001 Corrected Section 6, calculation of cylinder numbers Revision 3.2 1 September 2000 Dan Scott provides sgml conversion 2 Oct. 2000. Rewrote Introduction. Rewrote discussion on device names in Logical Devices. Reorganized Partition Types. Edited Partition Requirements. Added Recovering a deleted partition table. Revision 3.1 12 June 2000 Corrected swap size limitation in Partition Requirements, updated various links in Introduction, added submitted example in How to Partition with fdisk, added file system discussion in Partition Requirements. Revision 3.0 1 May 2000 First revision by Anthony Lissot based on Linux Partition HOWTO by Kristian Koehntopp. Revision 2.4 3 November 1997 Last revision by Kristian Koehntopp. This Linux Mini−HOWTO teaches you how to plan and create partitions on IDE and SCSI hard drives. It discusses partitioning terminology and considers size and location issues. Use of the fdisk partitioning utility for creating and recovering of partition tables is covered. The most recent version of this document is here. The Turkish translation is here. Linux Partition HOWTO Table of Contents 1. -

Ch 7 Using ATTRIB, SUBST, XCOPY, DOSKEY, and the Text Editor

Using ATTRIB, SUBST, XCOPY, DOSKEY, and the Text Editor Ch 7 1 Overview The purpose and function of file attributes will be explained. Ch 7 2 Overview Utility commands and programs will be used to manipulate files and subdirectories to make tasks at the command line easier to do. Ch 7 3 Overview This chapter will focus on the following commands and programs: ATTRIB XCOPY DOSKEY EDIT Ch 7 4 File Attributes and the ATTRIB Command Root directory keeps track of information about every file on a disk. Ch 7 5 File Attributes and the ATTRIB Command Each file in the directory has attributes. Ch 7 6 File Attributes and the ATTRIB Command Attributes represented by single letter: S - System attribute H - Hidden attribute R - Read-only attribute A - Archive attribute Ch 7 7 File Attributes and the ATTRIB Command NTFS file system: Has other attributes At command line only attributes can change with ATTRIB command are S, H, R, and A Ch 7 8 File Attributes and the ATTRIB Command ATTRIB command: Used to manipulate file attributes Ch 7 9 File Attributes and the ATTRIB Command ATTRIB command syntax: ATTRIB [+R | -R] [+A | -A] [+S | -S] [+H | -H] [[drive:] [path] filename] [/S [/D]] Ch 7 10 File Attributes and the ATTRIB Command Attributes most useful to set and unset: R - Read-only H - Hidden Ch 7 11 File Attributes and the ATTRIB Command The A attribute (archive bit) signals file has not been backed up. Ch 7 12 File Attributes and the ATTRIB Command XCOPY command can read the archive bit. -

Enhanced K-Blue Subst, ..., Safety Data Sheet, English

SAFETY DATA SHEET according to HSNO Act 1996, as amended Page 1/11 Enhanced K-Blue® Substrate Revision 6 Revision date 2021-02-11 SECTION 1: Identification of the substance/mixture and of the company/undertaking 1.1. Product identifier Product name Enhanced K-Blue® Substrate Product code 308171, 308175, 308176, 308177, 308181, Product code 21007, 308170-W, 308174-W, 308177-U, 308187, 308187-L, 308189, 308189-L, 308189-WH-L, 308193, 308194-W, 308194-WL, 308199, 308202, 308203, 308203-L, 308205, 308205-W, 308206, 308208, 308209, 308212, 308240, 308240-W, 308243, 308249-L, 308249-WL, 308251, 308254, 308254-W, 308254-WL, 308255-W, 308256, 308256-L, 308257, 308258, 308261, 308xxx (generic), 501822, 501823. 1.2. Relevant identified uses of the substance or mixture and uses advised against Product Use [SU3] Industrial uses: Uses of substances as such or in preparations at industrial sites; [PC21] Laboratory chemicals; Description Substrate Solution. Intended for laboratory use only. Do not use components from one kit with any other kit. 1.3. Details of the supplier of the safety data sheet Company Neogen Corporation Address 620 Lesher Place Lansing MI 48912 USA Web www.neogen.com Telephone 517-372-9200/800-234-5333 Email [email protected] 1.4. Emergency telephone number 24 hours: Medical: 1-800-498-5743 (U.S. and Canada) or 1-651-523-0318 (international) Spill/CHEMTREC: 1-800-424-9300 (U.S. and Canada) or 1-703-527-3887 (international) Further information Manufactured By:. Neogen Corporation 944 Nandino Blvd. Lexington, KY 40511-1205 U.S.A. SECTION 2: Hazards identification 2.1. -

Label Formatting Commands

TSPL/TSPL2 Programming Language TSC BAR CODE PRINTER SERIES PROGRAMMING MANUAL TABLE OF CONTENTS How to Read .......................................................................................................................................... VI Document Conventions......................................................................................................................... VII Object Position Calculation .................................................................................................................. VIII Printer Model List ................................................................................................................................... X Setup and System Commands ................................................................................................................. 1 SIZE ....................................................................................................................................................... 1 GAP ...................................................................................................................................................... 2 GAPDETECT .......................................................................................................................................... 4 BLINEDETECT ........................................................................................................................................ 5 AUTODETECT ....................................................................................................................................... -

Introduction: DOS (Disk Operating System) Is an Oldest Type of Operating System

DOS (Disk Operating System) 1 Introduction: DOS (Disk Operating System) is an oldest type of Operating System. Disk Operating System is abbreviated as DOS. DOS is a CUI type of Operating System. In computer science, a generic term describing any operating system is system software which is loaded from disk devices when the system is started or rebooted. DOS is a single-tasking, single-user operating system with a command-line interface. DOS acts on commands. Because DOS is ready to perform when given proper command hence, it is also known as Command Prompt. Commands are certain words of English language or short form of English words. The meaning of these word or short form is already known to DOS. Since, DOS recognized these words and hence acts accordingly. These words and short forms of the English words are better known as commands. Internal Command:-Those commands which are already stored in the “Command.Com” file of DOS are known as internal commands. For example, CLS, VOL, TIME, DATE, COPY etc External Command:-Those commands which are not included in the command.com file of DOS rather included in other files of DOS are known as external commands. It is formatted according to programme. For example, TREE, FORMAT, MODE etc Some Internal Commands:- 1. CLS To clear the screen. \>cls 2. DIR To view the directory and files C:\>Dir 3. DATE To View and change the date C:\>Date Current date is: 01-01-2008 Enter new date (mm/dd/yy):21-03-2009 4. TIME To view and change the time.