Defining the Hundred Year Flood

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Impacts of Storms

It is important to think more about storms, how they are formed, their potentially devastating impacts and whether you are resilient to them. Planning in advance is essential, especially if you live in areas that may be more vulnerable than others, for example, those located next to rivers or on the coast. Winter storms can bring some of the most severe and extreme weather events, including lower temperatures, gale-force winds and heavy rain, which can lead to flooding in some areas or sleet and snow if the temperature is cold enough. Storms occur at mid-latitudes where cold polar air meets warmer tropical air and the point where these two meet is known as the jet stream. Rising air from the Atlantic is removed and replaced by the strong winds of the jet stream much more quickly than the air at lower levels and this reduction in pressure produces the strong winds of winter storms. Storms tend to form in the winter months when the temperature difference between the air masses is at its greatest. It is important to keep as safe and resilient as you can against the potential impact of storms. On the 5th and 6th December 2015, Storm Desmond brought strong winds of up to 81 mph and heavy rainfall, with 341.4mm of rain falling in Cumbria by 6pm on the 5th (24 hours) – a new UK record! Many people across Cumbria and Lancashire were severely affected: 5,200 homes were flooded. 61,000 homes in Lancaster lost power when an electrical substation was flooded.

Kielder Reservoir, Northumberland

Rainwise: Working with communities to manage rainwater. Kielder Water is the largest man-made lake in northern Europe and is capable of holding 200 billion litres of water. It is located on the River North Tyne in north-west Northumberland. (Figure 1: Location of Kielder. Figure 2: Kielder Area. Figure 3: Kielder Water.) The Kielder Water Scheme was completed in 1982 and was one of the largest and most forward-looking projects of its time. It was the first example in the UK of a regional water grid, designed to meet the demands of the north east well into the future. The scheme is a regional transfer system designed to allow water from Kielder Reservoir to be released into the Rivers Tyne, Derwent, Wear and Tees. This water is used to maintain minimum flow levels at times of low natural rainfall and allows additional ... to provide additional flood storage capacity at Kielder Reservoir. At the same time the Environment Agency (EA) were keen to pursue the idea of variable releases to the river and the hydropower operator at Kielder (Innogy) wished to review operations in order to maximise generation ahead of plans to refurbish the main turbine in 2017. CHALLENGES: Kielder Reservoir has many important roles including river regulation for water supply, hydropower generation and as a tourist attraction. As such, any amendments to the operation of the reservoir could not impact on Kielder's ability to support these activities. Operating the reservoir at 85 percent of its capacity would make up to 30 billion litres of storage available.
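
The 30 billion litre figure is consistent with the capacity quoted in the excerpt: holding a 200 billion litre reservoir at 85 percent leaves 15 percent of it empty. A minimal Python sketch of that arithmetic follows; the function name and the whole-number percentage input are illustrative assumptions, not anything taken from the Kielder study.

```python
def freed_storage_litres(capacity_litres: int, operating_percent: int) -> int:
    """Return the volume left empty when a reservoir is held at
    operating_percent of its full capacity (hypothetical helper)."""
    if not 0 <= operating_percent <= 100:
        raise ValueError("operating_percent must be between 0 and 100")
    # Integer arithmetic avoids floating-point rounding on large volumes.
    return capacity_litres * (100 - operating_percent) // 100


# Capacity as quoted in the excerpt: 200 billion litres.
KIELDER_CAPACITY_LITRES = 200_000_000_000

freed = freed_storage_litres(KIELDER_CAPACITY_LITRES, 85)
print(f"Storage made available: {freed // 1_000_000_000} billion litres")
```

The excerpt says "up to" 30 billion litres, so this should be read as an upper bound on the flood storage gained, not a guaranteed volume.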

Flooding After Storm Desmond: Flooding in Cumbria After Storm Desmond (PERC UK 2015)

The storms that battered the north of England and parts of Scotland at the end of 2015 and early 2016 caused significant damage and disruption to families and businesses across tight-knit rural communities and larger towns and cities. This came just two years after Storm Xaver inflicted significant damage to the east coast of England. Flooding is not a new threat to the residents of the Lake District, but the severity of the events in December 2015 certainly appears to have been regarded as surprising. While the immediate priority is always to ensure that these communities and businesses are back up on their feet as quickly and effectively as possible, it is also important that all those involved in the response take the opportunity to review their own procedures and actions. It is often the case that when our response is put to the test in a ‘real world’ scenario that we discover things that could have been done better, or differently, and can make changes to ensure continuous improvement. This is true of insurers as much as it is of central and local government and the emergency services, because events like these demand a truly ... defence measures are overwhelmed. We have also looked at the role of community flood action groups in the response and recovery from severe flooding. Our main recommendations revolve around three key themes. The first is around flood risk communication, including the need for better communication of hazard, risk and what actions to take when providing early warning services to communities. The second centres around residual risk when the first line of flood defences, typically the large, constructed schemes protecting entire cities or areas, are either breached or over-topped.

How LV= Delivered a Human Response to the Floods in Cumbria

A case study: How LV= delivered a human response to the floods in Cumbria. Contents: LV= in Kendal; Storm Desmond; LV= attended the scene; Storm Eva; Case Studies; Storm Frank; What next? And the rain came... When Storm Desmond hit on 4 December 2015, nobody anticipated another two storms would follow. Storm Desmond was the start of intense rainfall and high winds concentrated over Cumbria in the last part of 2015. Storm Desmond was quickly followed by Storm Eva, providing more misery over the festive period before Storm Frank put an end to the wet and windy weather on 31 December. Whilst many of the areas had been affected by flooding in the past, the immediate reaction was that this event was the worst in living memory. Many areas already had some form of flood defence in place and others were in the process of improving existing defences. Unfortunately the defences were unable to cope with the record river levels and in many instances were overtopped. Background of Storm Desmond, 4–6 December: Storm Desmond brought extreme rainfall and storm force winds, with a few rain gauges reported to have recorded in excess of 300mm in a 24 hour period. (Figure panels: Thursday 3 December, Friday 4 December, Saturday 5 December, Sunday 6 December.) LV= popped-up in Kendal: Kendal was the first badly hit area and within hours of the news breaking on 7 December, we sent two senior members of our claims team 300 miles up to Kendal (1).

MAS8306 Topics in Statistics: Environmental Extremes

Dr. Lee Fawcett, Semester 2 2017/18. 1 Background and motivation. 1.1 Introduction. Finally, there is almost a global consensus amongst scientists that our planet’s climate is changing. Evidence for climatic change has been collected from a variety of sources, some of which can be used to reconstruct the earth’s changing climates over tens of thousands of years. Reasonably complete global records of the earth’s surface temperature since the early 1800s indicate a positive trend in the average annual temperature, and maximum annual temperature, most noticeable at the earth’s poles. Glaciers are considered amongst the most sensitive indicators of climate change. As the earth warms, glaciers retreat and ice sheets melt, which – over the last 30 years or so – has resulted in a gradual increase in sea and ocean levels. Apart from the consequences on ocean ecosystems, rising sea levels pose a direct threat to low-lying inhabited areas of land. Less direct, but certainly noticeable in the last fifteen years or so, is the effect of rising sea levels on the earth’s weather systems. A larger amount of warmer water in the Atlantic Ocean, for example, has certainly resulted in stronger, and more frequent, tropical storms and hurricanes; unless you’ve been living under a rock over the last few years, you would have noticed this in the media (e.g. Hurricane Katrina in 2005, Superstorm Sandy in 2012). Most recently, and as reported in the New York Times in January 2018, the 2017 hurricane season was “..

Community Sustainability in the Dales • a Tale of Four Bridges • Saving a Dales Icon • an Unbroken Dales Record • Upland Hay Meadows

Spring 2018 : Issue 142. CAMPAIGN • PROTECT • ENJOY. Cover photo: Spring Life in the Dales. Courtesy of Mark Corner. Photo, this page: Spring Flowers along the Ribble. Courtesy of Mark Corner. Contents: Editor’s Letter; Jerry Pearlman MBE; The Challenge of Community Sustainability in the Dales; A Tale of Four Bridges; Saving a Dales Icon; The Family of National Park Societies; DalesBus Update; An Unbroken Record in the Dales; New Business Members; Book Review; Upland Hay Meadows in the Dales; Policy Committee Planning Update; Members’ Letters; Something for Everyone?; The Dales in Spring; News; Events; Dales Haverbread. Editor: Sasha Heseltine. It’s feeling a lot like Spring in the Dales: It’s been a long winter but finally we are seeing glimmers of spring cheering our majestic, wild Dales. Over the centuries, they’ve been shaped by a remarkable combination of nature and human hand, and Dales communities play a vital role in preserving these landscapes. When local residents are forced to leave the Dales to seek employment or affordable housing, those ... With Our Deepest Condolences: It is with great sadness that we make our members aware of the death of our trustee and friend Jerry Pearlman MBE, who passed away peacefully at home on Friday, 9th March, aged 84. Jerry was a founding member of the Yorkshire Dales Society, its solicitor, a very active trustee and a wonderful person.

Living Without Electricity Transcript

Date: Tuesday, 28 February 2017 - 6:00PM. Location: Museum of London. Professor Roger Kemp. Abstract: Storm Desmond brought unprecedented floods to Lancaster in December 2015 and electricity supplies were cut off and not fully restored for six days. The disruption revealed how dependent on electricity modern city life has become. What happens when power, communications, and transport are all disrupted, when shops cannot function, and when most people cannot find out what is happening? What lessons have been learnt? Society’s Dependence on Electricity: To most people under 40 the World Wide Web and GSM phones are part of life but, in reality, the WWW is only 22 years old. In the 1980s it was considered revolutionary to send electronic messages outside one organisation but, by the mid-1990s, international businesses were using programmes like Lotus Notes to communicate between sites on different continents. Individual email access was through the telephone network and it was usual for people involved in international business to carry round a bag of adaptors so they could unplug the phone in their hotel room and connect a laptop to a remote server, a complicated, slow and expensive connection. It was only in the late 1990s that Wi-Fi started to become widely available. Mobile phones first became available in 1985 but their widespread use only took off a decade later and, as it was not unusual to pay £700 for a handset, most purchasers were businesses. It was not until the new millennium that mobile phones and internet coalesced to form the seamless, ubiquitous information network we know today.

The Winter Floods of 2015/2016 in the UK - a Review

National Hydrological Monitoring Programme. The winter floods of 2015/2016 in the UK - a review, by Terry Marsh, Celia Kirby, Katie Muchan, Lucy Barker, Ed Henderson & Jamie Hannaford. CENTRE FOR ECOLOGY & HYDROLOGY, www.ceh.ac.uk. This report should be cited as Marsh, T.J., Kirby, C., Muchan, K., Barker, L., Henderson, E. and Hannaford, J. 2016. The winter floods of 2015/2016 in the UK - a review. Centre for Ecology & Hydrology, Wallingford, UK. 37 pages. Affiliations: Centre for Ecology & Hydrology (Marsh, Muchan, Barker, Hannaford); British Hydrological Society (Kirby, Henderson). ISBN: 978-1-906698-61-4. Publication address: Centre for Ecology & Hydrology, Maclean Building, Benson Lane, Crowmarsh Gifford, Wallingford, Oxfordshire OX10 8BB, UK. General and business enquiries: +44 (0)1491 838800. This report was produced by the Centre for Ecology & Hydrology (CEH), the UK’s centre for excellence for research in land and freshwater environmental sciences, in collaboration with the British Hydrological Society (BHS) which promotes all aspects of the inter-disciplinary subject of hydrology, the scientific study and practical applications of the movement, distribution and quality of freshwater in the environment. Funding support was provided by the Natural Environment Research Council. CEH and BHS are extremely grateful to the many individuals and organisations that provided data and background information for this publication.

Flood Risk Management Plan

LIT 10200. Flood risk management plan: Northumbria river basin district summary, March 2016. What are flood risk management plans? Flood risk management plans (FRMPs) explain the risk of flooding from rivers, the sea, surface water, groundwater and reservoirs. FRMPs set out how risk management authorities will work with communities to manage flood and coastal risk over the period 2015 - 2021. Risk management authorities include the Environment Agency, local councils, internal drainage boards, Highways England and lead local flood authorities (LLFAs). Each EU member country must produce FRMPs as set out in the EU Floods Directive 2007. Each FRMP covers a specific river basin district. There are 11 river basin districts in England and Wales, as defined in the legislation. A river basin district is an area of land covering one or more river catchments. A river catchment is the area of land from which rainfall drains to a specific river. Each river basin district also has a river basin management plan, which looks at how to protect and improve water quality, and use water in a sustainable way. FRMPs and river basin management plans work to a 6-year planning cycle. The current cycle is from 2015 to 2021. We have developed the Northumbria FRMP alongside the Northumbria river basin management plan so that flood defence schemes can provide wider environmental benefits. Both flood risk management and river basin planning form an important part of a collaborative and integrated approach to catchment planning for water. Building on this essential work, and in the context of the Government’s 25-year environment plan, over the next cycle we aim to move towards single plans for the environment on a catchment basis which will draw together and integrate objectives for flood risk management, water management, and biodiversity, with the aim of maximising the multiple benefits that can be achieved.

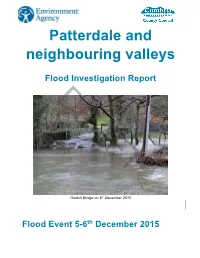

Patterdale and Neighbouring Valleys Flood Investigation Report

Patterdale and neighbouring valleys Flood Investigation Report (DRAFT). Goldrill Bridge on 6th December 2015. Flood Event 5-6th December 2015. Environment Agency. This flood investigation report has been produced by the Environment Agency as a key Risk Management Authority under Section 19 of the Flood and Water Management Act 2010 in partnership with Eden District Council as Lead Local Flood Authority. Creating a better place. Version: Working Draft for discussion with EA (Sejal Shah, Ian McCall). Contents: Executive Summary; Introduction; Scope of this report; Flooding History; Event background; Flooding Incident.

Healthy Ecosystems Cumbria a Landscape Enterprise Networks Opportunity Analysis

Healthy Ecosystems Cumbria: A Landscape Enterprise Networks opportunity analysis. Making Landscapes work for Business and Society. Message 1, LENs: Making landscapes work for business and society. This document sets out a new way in which businesses can work together to influence the assets in their local landscape that matter to their bottom line. It’s called the Landscape Enterprise Networks or ‘LENs’ Approach, and has been developed in partnership by BITC, Nestlé and 3Keel. Underpinning the LENs approach is a systematic understanding of businesses’ landscape dependencies. This is based on identifying three things. LANDSCAPE BENEFICIARIES: Organisations that are dependent on the landscape. This is the ‘market’. LANDSCAPE FUNCTIONS: The outcomes that beneficiaries depend on from the landscape in order to be able to operate their businesses. These are a subset of ecosystem services, in that they are limited to functions in which beneficiaries have sufficient commercial interest to make financial investments in order to secure them. LANDSCAPE ASSETS: The features and characteristics in a landscape that underpin the delivery of those functions. These are like natural capital, only no value is assigned to them beyond the price beneficiaries are willing to pay to secure the landscape functions that the Natural Asset underpins. Benefits of LENs: (1) It provides a mechanism for businesses to start intervening to landscape-derived risk in their ‘backyards’; (2) It moves on from theoretical natural capital valuations, to identify real-world value propositions and transactions; (3) It pulls together coalitions of common interest, pooling resources to share the cost of land management interventions; (4) It provides a mechanism for ‘next generation’ diversification in the rural economy - especially relevant post-Brexit.

Cumbria Business Survey 2015/16 – Flood Impact Report Prepared For: Cumbria Local Enterprise Partnership

Research Report. Cumbria Business Survey 2015/16 – Flood Impact Report. Prepared for: Cumbria Local Enterprise Partnership. Prepared by: June Wiseman, Director and Emma Parry, Associate Director, BMG Research. Date: August 2016. Produced by BMG Research © Bostock Marketing Group Ltd, 2016. www.bmgresearch.co.uk. Project: 9808. Registered in England No. 2841970. Registered office: BMG Research, Beech House, Greenfield Crescent, Edgbaston, Birmingham B15 3BE, UK. Tel: +44 (0) 121 3336006. UK VAT Registration No. 580 6606 32. Birmingham Chamber of Commerce Member No. B4626. Market Research Society Company Partner. British Quality Foundation Member. The provision of Market Research Services in accordance with ISO 20252:2012. The provision of Market Research Services in accordance with ISO 9001:2008. The International Standard for Information Security Management ISO 27001:2013. Investors in People Standard - Certificate No. WMQC 0614. Interviewer Quality Control Scheme (IQCS) Member Company. Registered under the Data Protection Act - Registration No. Z5081943. The BMG Research logo is a trade mark of Bostock Marketing Group Ltd. Table of Contents: 1 Impact of 2015 floods; 1.1 Key points; 1.2 Experience of storm and floods.