Power Optimizations for Graphics Processors

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

High End Visualization with Scalable Display System

HIGH END VISUALIZATION WITH SCALABLE DISPLAY SYSTEM. Dinesh M. Sarode, Bose S.K., Dhekne P.S., Venkata P.P.K., Computer Division, Bhabha Atomic Research Centre, Mumbai, India.

Abstract: Today we can have huge datasets resulting from computer simulations (CFD, physics, chemistry etc) and sensor measurements (medical, seismic and satellite). There is exponential growth in computational requirements in scientific research. Modern parallel computers and Grid are providing the required computational power for the simulation runs. The rich visualization is essential in interpreting the large, dynamic data generated from these simulation runs. The visualization process maps these datasets onto graphical representations and then generates the pixel representation. The large number of pixels shows the picture in greater details and interaction with it enables the greater insight on the part of user in understanding the data more quickly, picking out small anomalies that could ...

... display, then the large number of pixels shows the picture in greater details and interaction with it enables the greater insight in understanding the data. However, the memory constraints, lack of the rendering power and the display resolution offered by even the most powerful graphics workstation makes the visualization of this magnitude difficult or impossible. While the cost-performance ratio for the component based on semiconductor technologies doubling in every 18 months or beyond that for graphics accelerator cards, the display resolution is lagging far behind. The resolutions of the displays have been increasing at an annual rate of 5% for the last two decades. The ability to scale the components: graphics accelerator and display by combining them is the most cost-effective way to meet the ever-increasing demands for high resolution.

Order Independent Transparency in OpenGL 4.x (Christoph Kubisch)

Order Independent Transparency in OpenGL 4.x. Christoph Kubisch.

TRANSPARENT EFFECTS. Photorealism: glass and transmissive materials, participating media (smoke...), simplification of hair rendering. Scientific visualization: reveal obscured objects, show data in layers.

THE CHALLENGE. The blending operator is not commutative (front to back versus back to front). Sorting objects is not sufficient, and sorting triangles is not sufficient. Sorting is very costly and also causes many state changes. We need to sort "fragments".

RENDERING APPROACHES. OpenGL 4.x allows various one- or two-pass variants. Previous high quality approaches: Stochastic Transparency [Enderton et al.] and Depth Peeling [Everitt]. Caveat: multiple scene passes required. (Figure labels: 3 peel layers; peak ~84 layers; model courtesy of PTC.)

RECORD & SORT. Render opaque: the depth buffer rejects occluded fragments. Render transparent: record color + depth. Resolve transparent: fullscreen sort and blend per pixel. Shader snippets shown on the slide: `layout (early_fragment_tests) in;` and `uvec2(packUnorm4x8 (color), floatBitsToUint (gl_FragCoord.z) );`.

RESOLVE. A fullscreen pass. It is not efficient to globally sort all fragments per pixel, so sort the K nearest correctly via a register array, then blend fullscreen on top of the framebuffer. Slide pseudocode: `uvec2 fragments[K]; // encodes color and depth`, `n = load (fragments); sort (fragments,n);`, `vec4 color = vec4(0); for (i < n) { blend (color, fragments[i]); } gl_FragColor = color;`.

TAIL HANDLING. Discard fragments > K, or blend below sorted and hope the error is not obvious [Salvi et al.]. Many close low alpha values are problematic. May not be frame-coherent (flicker) if the blend is not primitive-ordered. (Figure labels: K = 4, K = 4, K = 16, Tailblend.)

RECORD TECHNIQUES. Unbounded: record all fragments that fit in the scratch buffer, then find and sort the K closest later. Pro: fast record. Cons: slow resolve, out of memory issues.

HOW TO STORE.
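The resolve step in the excerpt above (keep the K nearest recorded fragments of a pixel, sort them by depth, blend them) can be illustrated on the CPU. The following is a minimal Python sketch, not the slides' shader: the names `over` and `resolve_pixel`, the non-premultiplied RGBA tuples, the back-to-front "over" blend, and the opaque background are all assumptions made for illustration. Fragments beyond the K nearest are simply discarded, which is one of the two tail-handling options the excerpt lists.

```python
def over(src, dst):
    """Blend a non-premultiplied RGBA fragment `src` over an RGB color `dst`."""
    r, g, b, a = src
    return (a * r + (1 - a) * dst[0],
            a * g + (1 - a) * dst[1],
            a * b + (1 - a) * dst[2])


def resolve_pixel(fragments, background, k=4):
    """Resolve one pixel from unordered (rgba, depth) fragments.

    Assumption: smaller depth means closer to the viewer.
    """
    # Keep only the K nearest fragments, ordered front to back.
    nearest = sorted(fragments, key=lambda frag: frag[1])[:k]
    color = background
    # Blend from the farthest kept fragment to the nearest one.
    for rgba, _depth in reversed(nearest):
        color = over(rgba, color)
    return color


# Two half-transparent fragments, recorded in arbitrary order.
fragments = [((0.0, 0.0, 1.0, 0.5), 0.8),   # blue, far
             ((1.0, 0.0, 0.0, 0.5), 0.2)]   # red, near
print(resolve_pixel(fragments, (0.0, 0.0, 0.0)))
```

Because the fragments are sorted per pixel before blending, the result does not depend on the order in which they were recorded, which is the point of the technique.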

9. Texture Mapping

OpenGL_PG.book Page 359 Thursday, October 23, 2003 3:23 PM

Chapter 9. Texture Mapping

Chapter Objectives. After reading this chapter, you'll be able to do the following:

• Understand what texture mapping can add to your scene
• Specify texture images in compressed and uncompressed formats
• Control how a texture image is filtered as it's applied to a fragment
• Create and manage texture images in texture objects and, if available, control a high-performance working set of those texture objects
• Specify how the color values in the image combine with those of the fragment to which it's being applied
• Supply texture coordinates to indicate how the texture image should be aligned with the objects in your scene
• Generate texture coordinates automatically to produce effects such as contour maps and environment maps
• Perform complex texture operations in a single pass with multitexturing (sequential texture units)
• Use texture combiner functions to mathematically operate on texture, fragment, and constant color values
• After texturing, process fragments with secondary colors
• Perform transformations on texture coordinates using the texture matrix
• Render shadowed objects, using depth textures

So far, every geometric primitive has been drawn as either a solid color or smoothly shaded between the colors at its vertices; that is, they've been drawn without texture mapping. If you want to draw a large brick wall without texture mapping, for example, each brick must be drawn as a separate polygon. Without texturing, a large flat wall (which is really a single rectangle) might require thousands of individual bricks, and even then the bricks may appear too smooth and regular to be realistic.

Best Practice for Mobile

If this doesn't look familiar, you're in the wrong conference.

This section of the course is about the ways that mobile graphics hardware works, and how to work with this hardware to get the best performance. The focus here is on the Vulkan API because the explicit nature exposes the hardware, but the principles apply to other programming models. There are ways of getting better mobile efficiency by reducing what you're drawing: running at lower frame rate or resolution, only redrawing what and when you need to, etc.; we've addressed those in previous years and you can find some content on the course web site, but this time the focus is on high-performance 3D rendering. Those other techniques still apply, but this talk is about how to keep the graphics moving and assuming you're already doing what you can to reduce your workload.

Mobile graphics processing units are usually fundamentally different from classical desktop designs.

* Mobile GPUs mostly (but not exclusively) use tiling, desktop GPUs tend to use immediate-mode renderers.
* They use system RAM rather than dedicated local memory and a bus connection to the GPU.
* They have multiple cores (but not so much hyperthreading), some of which may be optimised for low power rather than performance.
* Vulkan and similar APIs make these features more visible to the developer. Here, we're mostly using Vulkan for examples, but the architectural behaviour applies to other APIs.

Let's start with the tiled renderer. There are many approaches to tiling, even within a company.

Programming Guide: Revision 1.4, June 14, 1999. Copyright 1998 3Dfx Interactive, Inc.

Voodoo3 High-Performance Graphics Engine for 3D Game Acceleration. Programming Guide: Revision 1.4, June 14, 1999. Copyright 1998 3Dfx Interactive, Inc. All Rights Reserved. Proprietary and Preliminary. Confidential.

3Dfx Interactive, Inc. 4435 Fortran Drive San Jose CA 95134 Phone: (408) 935-4400 Fax: (408) 935-4424

Notice: 3Dfx Interactive, Inc. has made best efforts to ensure that the information contained in this document is accurate and reliable. The information is subject to change without notice. No responsibility is assumed by 3Dfx Interactive, Inc. for the use of this information, nor for infringements of patents or the rights of third parties. This document is the property of 3Dfx Interactive, Inc. and implies no license under patents, copyrights, or trade secrets.

Trademarks: All trademarks are the property of their respective owners.

Copyright Notice: No part of this publication may be copied, reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photographic, or otherwise, or used as the basis for manufacture or sale of any items without the prior written consent of 3Dfx Interactive, Inc. If this document is downloaded from the 3Dfx Interactive, Inc. world wide web site, the user may view or print it, but may not transmit copies to any other party and may not post it on any other site or location.

Antialiasing

Antialiasing. CSE 781, Han-Wei Shen.

What is alias? Alias: a false signal in telecommunication links from beats between signal frequency and sampling frequency (from dictionary.com). Aliasing is not limited to telecommunication; it is everywhere in computer graphics because rendering is essentially a sampling process. Examples: jagged edges, false texture patterns.

Alias caused by under-sampling. 1D signal sampling example: actual signal versus sampled signal. 2D texture display example: minor aliasing versus worse aliasing.

How often is enough? What is the right sampling frequency? Sampling theorem (or Nyquist limit): the sampling frequency has to be at least twice the maximum frequency of the signal to be sampled, so two samples are needed per cycle.

Reconstruction. After the (ideal) sampling is done, we need to reconstruct the original continuous signal. The reconstruction is done by a reconstruction filter. Common reconstruction filters: box filter, tent filter, sinc filter = sin(πx)/(πx).

Anti-aliased texture mapping. Two problems to address: magnification and minification. Both resample the signal to a different resolution.

Magnification. Simpler to handle: just resample the reconstructed signal. Common methods: nearest neighbor (box filter) or linear interpolation (tent filter). (Figure labels: nearest neighbor, bilinear interpolation.)

Minification. Harder to handle: the signal's frequency is too high to avoid aliasing. A possible solution: increase the low-pass filter width of the ideal sinc filter, which effectively blurs the image. That is, blur the image first (using any method), and then sample it. Several texels cover one pixel (under-sampling happens). Solution: either increase the sampling rate or reduce the texture frequency. (Figure label: under-sample artifact.) We will discuss: 1.
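The reconstruction filters named in the excerpt above (box, tent, sinc) can be compared with a small Python sketch. It assumes a 1D signal whose sample i was taken at position i (unit spacing), and the function names `box`, `tent`, `sinc`, and `reconstruct` are illustrative rather than taken from the slides. Reconstruction is written as a sum of samples weighted by the filter centered on each sample.

```python
import math


def box(t):
    """Box filter: nearest-neighbor reconstruction."""
    return 1.0 if -0.5 <= t < 0.5 else 0.0


def tent(t):
    """Tent filter: linear interpolation between neighboring samples."""
    return max(0.0, 1.0 - abs(t))


def sinc(t):
    """Sinc filter sin(pi t) / (pi t), using the limit value 1 at t = 0."""
    if t == 0.0:
        return 1.0
    return math.sin(math.pi * t) / (math.pi * t)


def reconstruct(samples, x, kernel):
    """Evaluate the reconstructed signal at position x.

    Assumption: sample i sits at position i (unit spacing).
    """
    return sum(s * kernel(x - i) for i, s in enumerate(samples))


samples = [0.0, 10.0, 20.0]
print(reconstruct(samples, 0.4, box))    # picks the nearest sample
print(reconstruct(samples, 0.5, tent))   # halfway between samples 0 and 1
print(reconstruct(samples, 1.0, sinc))   # approximately sample 1 itself
```

With only a finite list of samples the sinc sum is truncated, so between sample positions it only approximates the ideal reconstruction.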

Texture Filtering Jim Van Verth Some Definitions

Texture Filtering. Jim Van Verth.

Some definitions. Pixel: picture element. On screen. Texel: texture element. In image (AKA texture).

Suppose we have a texture (represented here in blue). We can draw the mapping from a pixel to this space, and represent it as a red square. If our scale from pixel space to texel space is 1:1, with no translation, we just cover one texel, so rendering is easy. If we translate, the pixel can cover more than one texel, with different areas. If we rotate, then the pixel can cover even more texels, with odd shaped areas. And with scaling up the texture (which scales down the pixel area in texture space) or scaling down the texture (which scales up the pixel area in texture space), we also get different types of coverage.

So what are we trying to do? We often think of a texture like this: blocks of "color" (we'll just assume the texture is grayscale in these diagrams to keep it simple). But really, those blocks represent a sample at the texel center. So what we really have is this. Those samples are snapshots of some function, at a uniform distance apart. We don't really know what the function is, but assuming it's fairly smooth it could look something like this. So our goal is to reconstruct this function from the samples, so we can compute in-between values. We can draw our pixel (in 1D form) on this graph. Here the red lines represent the boundary of the pixel in texture space.

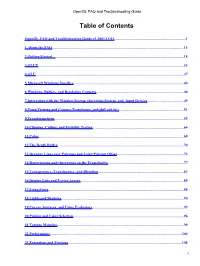

OpenGL FAQ and Troubleshooting Guide

OpenGL FAQ and Troubleshooting Guide v1.2001.11.01

Table of Contents

1 About the FAQ
2 Getting Started
3 GLUT
4 GLU
5 Microsoft Windows Specifics
6 Windows, Buffers, and Rendering Contexts
7 Interacting with the Window System, Operating System, and Input Devices
8 Using Viewing and Camera Transforms, and gluLookAt()
9 Transformations
10 Clipping, Culling,

Ray Tracing on Programmable Graphics Hardware

Ray Tracing on Programmable Graphics Hardware. Timothy J. Purcell, Ian Buck, William R. Mark, Pat Hanrahan. Stanford University.

Abstract: Recently a breakthrough has occurred in graphics hardware: fixed function pipelines have been replaced with programmable vertex and fragment processors. In the near future, the graphics pipeline is likely to evolve into a general programmable stream processor capable of more than simply feed-forward triangle rendering. In this paper, we evaluate these trends in programmability of the graphics pipeline and explain how ray tracing can be mapped to graphics hardware. Using our simulator, we analyze the performance of a ray casting implementation on next generation programmable graphics hardware. In addition, we compare the performance difference between non-branching programmable hardware using a multipass implementation and an architecture that supports branching.

In this paper, we describe an alternative approach to real-time ray tracing that has the potential to out perform CPU-based algorithms without requiring fundamentally new hardware: using commodity programmable graphics hardware to implement ray tracing. Graphics hardware has recently evolved from a fixed-function graphics pipeline optimized for rendering texture-mapped triangles to a graphics pipeline with programmable vertex and fragment stages. In the near-term (next year or two) the graphics processor (GPU) fragment program stage will likely be generalized to include floating point computation and a complete, orthogonal instruction set. These capabilities are being demanded by programmers using the current hardware. As we will show, these capabilities are also sufficient for us to write a complete ray tracer for this hardware. As the programmable stages become more general, the hardware can

Voodoo Graphics Specification

SST-1 (a.k.a. Voodoo Graphics™) HIGH PERFORMANCE GRAPHICS ENGINE FOR 3D GAME ACCELERATION. Revision 1.61, December 1, 1999. Copyright © 1995 3Dfx Interactive, Inc. All Rights Reserved.

3dfx Interactive, Inc. 4435 Fortran Drive San Jose, CA 95134 Phone: (408) 935-4400 Fax: (408) 262-8602 www.3dfx.com

Proprietary Information. SST-1 Graphics Engine for 3D Game Acceleration.

Copyright Notice: [English translations from legalese in brackets] ©1996-1999, 3Dfx Interactive, Inc. All rights reserved. This document may be reproduced in written, electronic or any other form of expression only in its entirety. [If you want to give someone a copy, you are hereby bound to give him or her a complete copy.] This document may not be reproduced in any manner whatsoever for profit. [If you want to copy this document, you must not charge for the copies other than a modest amount sufficient to cover the cost of the copy.]

No Warranty: THESE SPECIFICATIONS ARE PROVIDED BY 3DFX "AS IS" WITHOUT ANY REPRESENTATION OR WARRANTY, EXPRESS OR IMPLIED, INCLUDING ANY WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, NONINFRINGEMENT OF THIRD-PARTY INTELLECTUAL PROPERTY RIGHTS, OR ARISING FROM THE COURSE OF DEALING BETWEEN THE PARTIES OR USAGE OF TRADE. IN NO EVENT SHALL 3DFX BE LIABLE FOR ANY DAMAGES WHATSOEVER INCLUDING, WITHOUT LIMITATION, DIRECT OR INDIRECT DAMAGES, DAMAGES FOR LOSS OF PROFITS, BUSINESS INTERRUPTION, OR LOSS OF INFORMATION) ARISING OUT OF THE USE OF OR INABILITY TO USE THE SPECIFICATIONS, EVEN IF 3DFX HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. [You're getting it for free.

Lazy Occlusion Grid Culling

Institut für Computergraphik, Technische Universität Wien (Institute of Computer Graphics, Vienna University of Technology), Karlsplatz 13/186/2, A-1040 Wien, AUSTRIA. Tel: +43 (1) 58801-18675. Fax: +43 (1) 5874932. Other services: http://www.cg.tuwien.ac.at/ and ftp://ftp.cg.tuwien.ac.at/

Lazy Occlusion Grid Culling. Heinrich Hey, Robert F. Tobler ({hey,rft}@cg.tuwien.ac.at). TR-186-2-99-09, March 1999.

Abstract. We present Lazy Occlusion Grid Culling, a new image-based occlusion culling technique for rendering of very large scenes which can be of general type. It is based on a low-resolution grid that is updated in a lazy manner and that allows fast decisions if an object is occluded or visible together with a hierarchical scene-representation to cull large parts of the scene at once. It is hardware-accelerateable and it works efficiently even on systems where pixel-based occlusion testing is implemented in software.

A Digital Rights Enabled Graphics Processing System

Graphics Hardware (2006), M. Olano, P. Slusallek (Editors). A Digital Rights Enabled Graphics Processing System. Weidong Shi (1), Hsien-Hsin S. Lee (2), Richard M. Yoo (2), and Alexandra Boldyreva (3). (1) Motorola Application Research Lab, Motorola, Schaumburg, IL; (2) School of Electrical and Computer Engineering and (3) College of Computing, Georgia Institute of Technology, Atlanta, GA 30332.

Abstract: With the emergence of 3D graphics/arts assets commerce on the Internet, to protect their intellectual property and to restrict their usage have become a new design challenge. This paper presents a novel protection model for commercial graphics data by integrating digital rights management into the graphics processing unit and creating a digital rights enabled graphics processing system to defend against piracy of entertainment software and copyrighted graphics arts. In accordance with the presented model, graphics content providers distribute encrypted 3D graphics data along with their certified licenses. During rendering, when encrypted graphics data, e.g. geometry or textures, are fetched by a digital rights enabled graphics processing system, it will be decrypted. The graphics processing system also ensures that graphics data such as geometry, textures or shaders are bound only in accordance with the binding constraints designated in the licenses. Special API extensions for media/software developers are also proposed to enable our protection model. We evaluated the proposed hardware system based on cycle-based GPU simulator with configuration in line with realistic implementation and open source video game Quake 3D.

Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Digital Rights, Graphics Processor

1. Introduction ... video players, mobile devices, etc.