Powering Ahead in Uncertain Times

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Interconnection

Interconnection 101

As cloud usage takes off, data production grows exponentially, content pushes closer to the edge, and end users demand data and applications at all hours from all locations, the ability to connect with a wide variety of players becomes ever more important. This report introduces interconnection, its key players and business models, and trends that could affect interconnection going forward.

KEY FINDINGS

Network-dense, interconnection-oriented facilities are not easy to replicate and are typically able to charge higher prices for colocation, as well as charging for cross-connects and, in some cases, access to public Internet exchange platforms and cloud platforms. Competition is increasing, however, and competitors are starting the long process of creating network-dense sites. At the same time, these sites are valuable and are being acquired, so the sector is consolidating.

Having facilities in multiple markets does seem to provide some competitive advantage, particularly if the facilities are similar in look and feel and customers can monitor them all from a single portal and have them on the same contract.

Mobility, the Internet of Things, services such as SaaS and IaaS (cloud), and content delivery all depend on network performance. In many cases, a key way to improve network performance is to push content, processing and peering closer to the edge of the Internet. This is likely to drive demand for facilities in smaller markets that offer interconnection options. We also see these trends continuing to drive demand for interconnection facilities in the larger markets.

© 2015 451 RESEARCH, LLC AND/OR ITS AFFILIATES.

Van+ May 2014 Aw Layout 1

ISSN 1745-1736
April / May 2014, Volume 16 Issue 2

Will CSPs maximise their potential in the cloud? How to win by playing to your strengths

BIG DATA ANALYTICS: Can CSP data transform performance? (VanillaPlus Insight out June 2014)
CEM: Does quality awareness set CSPs apart? (VanillaPlus Insight out July 2014)
POLICY: Does it deliver business model flexibility? (VanillaPlus Insight out October 2014)
BILL & CHARGE: Why CSPs alone can keep control of billing. (VanillaPlus Insight out December 2014)
5G: Why it's here and what it means for CSPs. (VanillaPlus Insight out February 2015)

PLUS: Astellia buys Ingenia Telecom • Cerillion survey heralds cloud billing • Openet and OpenCloud launch joint service definition system • Amdocs announces policy control for LTE • Hadoop can cut licencing costs by 70%, says cVidya • TeliaSonera chooses Ericsson for customer experience assurance • CSG International launches convergent billing into space with Inmarsat • Inside Vodacom's Digital Route deployment • Read the latest news at www.vanillaplus.com

technological enabler OF DEUTSCHE BÖRSE CLOUD EXCHANGE

CONTENTS
IN THIS ISSUE

TALKING HEADS: Will CSPs maximise their potential in the cloud? Thomas Vasen explains why software in the cloud, for the cloud and enabling the cloud opens up opportunities for CSPs
EDITOR'S COMMENT: George Malim wonders when moving too slowly means you've reached the end of the line
INDUSTRY NEWS: Astellia acquires Ingenia Telecom, Bytesphere element polling assets bought by Exfo
MARKET NEWS: Cerillion survey heralds cloud billing, Syniverse

Middle East 2019 Post Event Report .Pdf

MIDDLE EAST 2019
POST-EVENT REPORT

Save the date: Capacity Middle East will return on 3-5 March 2020!

THANK YOU TO THE SPONSORS
Capacity Middle East sponsors: Host sponsor, Diamond sponsor, Platinum sponsors, Gold sponsors, Silver sponsors, Associate sponsors
Subsea Middle East sponsors: Host sponsor, Associate sponsors

THANK YOU
Capacity Middle East welcomed 1,683 industry leaders and decision makers from over 500 companies for four days packed with networking, market discussions and lively debates. Our exhibition showcased companies from across the global telecoms community and our conference engaged thought leaders and showcased key market developments. It was the perfect opportunity to reinforce partnerships and explore new business opportunities. The Capacity Media team would like to thank all of our sponsors, speakers and delegates for making this event possible, and look forward to seeing you next year for Capacity Middle East 2020.

CONTENTS
View the highlights from Capacity Middle East 2019
Who attended?
Who spoke at Capacity Middle East 2019
Agenda highlights
Key market developments from the Middle East
Subsea Middle East
Who spoke at Subsea Middle East
Testimonials
Social media highlights
All the networking that happened

1,683 attendees, 519 companies, 80 countries

VIEW THE HIGHLIGHTS FROM CAPACITY MIDDLE EAST 2019

"We are delighted to be the Host Sponsor of Capacity Middle East. The event brings together all the stakeholders and it is the ideal platform for us to meet all of our customers, suppliers and members of our entire ecosystem."
Ananda Bose, Chief Wholesale & Corporate Affairs Officer, DATAMENA

"We are a regular participant in Capacity Middle East.

Winter 2020 Colocation Services

2020 WINTER CUSTOMER SUCCESS REPORT
COLOCATION SERVICES CATEGORY

COLOCATION SERVICES OVERVIEW
Colocation services allow businesses to host their data in a third-party data center that has privately-owned networking equipment and servers. Instead of maintaining servers in-house, at a private data center, or in offices, enterprises can elect to co-locate their infrastructure by renting space in a colocation center. Unlike other types of hosting where clients can rent server space owned by a hosting vendor, with colocation, the client owns the server and rents the physical space needed to house it in a data center. The colocation service rents out data center space where clients can install their equipment. It also provides the cooling, IP address, bandwidth, and power systems that the customer needs to deploy their servers.

Customer Success Report Ranking Methodology
The FeaturedCustomers Customer Success ranking is based on data from our customer reference platform, market presence, web presence, & social presence as well as additional data aggregated from online sources and media properties. Our ranking engine applies an algorithm to all data collected to calculate the final Customer Success Report rankings. The overall Customer Success ranking is a weighted average based on 3 parts:

Customer Success Report Award Levels
Market Leader: Vendor on FeaturedCustomers.com with substantial customer base & market share. Leaders have the highest ratio of customer success content, content quality score, and social media presence relative to company size.

Content Score is affected by:
1. Total # of vendor generated customer references (case studies, success stories, testimonials, and customer videos)
2. Customer reference rating score
3.

TRADE EUROPE NOW! the Why and How of Electronic Execution in the EU and Beyond

TRADE EUROPE NOW!
The Why and How of Electronic Execution in the EU and Beyond

Commissioned by: Interxion
Researched and Written by: A-Team Group

EXECUTIVE SUMMARY

01 European financial markets are poised for major change as institutions prepare for the EU's Markets in Financial Instruments Directive II (MiFID II) regulation, which comes into effect in January 2017.

02 In the absence of a centralised pan-European order book, trading firms will need to consolidate orders from multiple trading venues to construct their own views of European Best Bid/Offer (EBBO).

03 The emerging picture of the European market structure presents US traders with opportunities to source significant liquidity and diversify their trading activities.

04 Practitioners also need access to independent service providers for key capabilities required under MiFID II, including time-stamping, time synchronisation and risk management gateways.

05 MiFID II's focus on transparency across a broad range of asset types brings Europe more closely into line with US markets, making it relatively straightforward for US participants to take advantage of Europe's sophisticated market centres.

06 To succeed, it's essential that new entrants and current firms secure fast access to key trading venues in the London area, including the London Stock Exchange, BATS Chi-X, the Euronext markets, ICE Futures Europe (the former Liffe), and Frankfurt-based Eurex, a key leading indicator of European market direction.

07 London is the gateway to Europe, and selecting the right colocation data centre from which to execute is an important first step towards successful trading in Europe. Interxion's City of London data centre campus is situated equidistant from key liquidity venues, Slough in the West, Basildon in the East and right next to the London Stock Exchange.

"TICs para la mejora de la competitividad energética" (ICT for Improving Energy Competitiveness)

Reference guide: "TICs para la mejora de la competitividad energética" (ICT for improving energy competitiveness)
Trends, solutions and 100 key providers

Plataforma de empresas TIC para la mejora de la Eficiencia Energética (Platform of ICT companies for improving energy efficiency)

Contents
Foreword
Overview: Energy Efficiency
Promotion by Public Administrations
10 main trends in SmartEnergy
Technology solutions in 4 areas
Member companies
Other key providers
Institutional collaboration network
Reference organisations and links
10 reference documents
Mission, objectives and initiatives of enerTIC

Foreword
At a time of great change and opportunity, the enerTIC Platform has emerged as a project to generate synergies, collaborate with other national and international organisations, and contribute to developing the transformative potential of Information and Communication Technologies in the field of energy efficiency in Spain.

Next-Gen Private/Hybrid Cloud – Data Center Services & Solutions

Next-Gen Private/Hybrid Cloud – Data Center Services & Solutions 2021

Copyright © 2019, Information Services Group, Inc. All Rights Reserved.

ISG (Information Services Group) (NASDAQ: III) is a leading global technology research and advisory firm. A trusted business partner to more than 700 clients, including 75 of the top 100 enterprises in the world, ISG is committed to helping corporations, public sector organizations, and service and technology providers achieve operational excellence and faster growth. The firm specializes in digital transformation services, including automation, cloud and data analytics; sourcing advisory; managed governance and risk services; network carrier services; technology strategy and operations design; change management; market intelligence and technology research and analysis. Founded in 2006 and based in Stamford, Conn., ISG employs more than 1,300 professionals operating in more than 20 countries, a global team known for its innovative thinking, market influence, deep industry and technology expertise, and world-class research and analytical capabilities based on the industry's most comprehensive marketplace data. For more information, visit www.isg-one.com.

© 2021, Information Services Group, Inc. All Rights Reserved.

Table of Contents
Definition
Quadrants Research

Interxion Ams5

Fact sheet

INTERXION AMS5
AMSTERDAM SCHIPHOL CAMPUS

LOCATION
Tupolevlaan 101, 1119 PA Schiphol-Rijk

Amsterdam's leading interconnection hub
Interxion's AMS5 carrier-neutral data centre provides the latest secure, scalable infrastructure for mission-critical IT systems, with a wide range of connectivity solutions. Located in Schiphol-Rijk, part of our Amsterdam Schiphol Campus, just ten minutes from Schiphol International Airport. The facility offers 2N UPS power and N+0.5 cooling, as well as the most advanced alarm and monitoring systems. AMS5 supports the latest high-density power configurations and has been designed using Interxion's energy-efficient modular architecture, with 100% renewable and maximum-efficiency components as standard.

At a glance
■ Over 4,000 m² of conveniently located equipped data centre space
■ Trained security staff on site 24x7
■ Located in Interxion's Amsterdam Schiphol Campus with four other Interxion data centres: AMS3, AMS7, AMS8 and AMS10.
■ Direct access to 65+ carriers/ISPs and NL-IX exchange
■ ASHRAE-standard temperature and humidity SLAs
■ 100% renewable energy is used in our data centres

Metro Connect
Interxion has connected its data centers with MetroConnect on the Amsterdam Schiphol Campus with an ultra-fast, 100 Gbit/s connection to its data center on the Amsterdam Science Park.

Power
■ N+0.5 generator backup with minimum 24 hours of fuel stored on site, with SLA on on-time refueling
■ Redundant UPS Power configuration
■ SLAs up to 99.999% availability on power and cooling

Sustainability
■ 100% of purchased energy is from renewable sources
■ Optimized power usage effectiveness (PUE) and energy efficient cooling systems
■ Data centres operated to the highest

About Interxion
Interxion (NYSE: INXN) is a leading provider of carrier and cloud-neutral colocation data centre services in Europe, serving a wide range of customers through over 50 data centres in 11 European countries.

Network Services for Enterprises and Carriers Neutral Interconnection

Network Services for Enterprises and Carriers

NETWORK
We are a leading ISP focused on providing network connectivity throughout Europe, the U.S. and Asia-Pacific. Our IP backbone spans Europe's largest PoPs (Frankfurt, Amsterdam, London, Paris, Madrid, Stockholm and Brussels), as well as the U.S. (New York, Los Angeles, Miami) and far East (Hong Kong) to serve companies in Europe, North America and APAC.

Services
• Ethernet / L2
• VPN / MPLS / SD-WAN
• Internet Access / DIA
• Cloud Interconnection

POP LIST
London: Telehouse North
Paris: Telehouse 2 Voltaire, Equinix PA2/3, Data4, Interxion PAR 1/2
Amsterdam: Equinix AM1/3/5/7, IPTP Matrix 4, EvoSwitch, NIKHEF, Interxion Science Park
Frankfurt: Equinix FR2/5, Interxion FRA, NewTelco, e-shelter
Brussels: Interxion BRU1
Milan: Enter DC1/DC2, Caldera, Equinix ML2/3, BT/INET, Infracom Avalon
Turin: Corso Svizzera
Rome: Caspur/Namex
Palermo: TISparkle Sicily HUB
Madrid: Interxion MAD1
Stockholm: Equinix SK1
Vienna: Interxion VIE1
Prague: CEColo
Budapest: Dataplex
New York: Digital Realty (60th Hudson st.)
Hong Kong: Equinix HK1, MEGA-i
Los Angeles: Coresite LA1
Miami: Equinix MI1 (NOTA)
Marseilles: Interxion MRS1

[Network maps: POP, MAN, MAN + Data Center, Partner Network, Metro Ethernet Network and Radio Coverage legend; remote peering; access networks in Italy and Spain; local MANs in Amsterdam and Milan]

DATA CENTER
Our data centers are located in the most strategic and interconnected locations in Italy:
• 2 DCs in Milan Caldera
• 1 DC in Turin, Corso Svizzera

Neutral Interconnection Facility
We are carrier neutral, meaning we work with several networking providers to deliver connectivity that never compromises on performance, speed or availability.

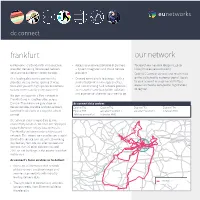

Frankfurt Our Network

dc connect frankfurt
our network

euNetworks is a bandwidth infrastructure provider, delivering fibre-based network solutions to customers across Europe. As a leading data centre connectivity provider, we are always looking at ways to enable you with high speed connections to data centres and key sites you need.

We own and operate a fibre network in Frankfurt and in 13 other cities across Europe. This means we guarantee to deliver reliable, scalable and cost-efficient bandwidth solutions in a way that others cannot.

DC Connect is our unique data centre connectivity solution. We have pre-deployed capacity between 10 key data centres in Frankfurt by utilising our deep fibre based network. This means we can offer you a rapid bandwidth service turn up, with 10 working day delivery SLA. We can offer connectivity to more than 20 other data centres and 200+ on-net buildings in the greater Frankfurt metro area.

• Access to an on-net portfolio of partners: System Integrators and Cloud Service providers
• Onward connectivity to Europe: with a similar footprint in 14 European cities, a diverse long haul network provides access to the same bandwidth solutions and experience wherever you need to be.

To search our network footprint, go to http://map.eunetworks.com/

Order DC Connect services and much more on the euNetworks customer portal. Speak to your account manager or visit http://www.eunetworks.com/portal-registration/ to register.

dc connect data centres
Equinix FR1, Equinix FR2, Equinix FR3, Equinix FR4, Equinix FR5, eShelter Frankfurt 1, eShelter Frankfurt 3, Interxion FRA1, Interxion FRA3, Telehouse Frankfurt

[Map of the greater Frankfurt metro area]

Interxion Holding N.V. Annual Report

InterXion Holding N.V. Annual Report 2018
For the year ended December 31, 2018

Carrier and cloud-neutral data centers at the heart of the European economy

Carrier and cloud-neutral data center services
Interxion is a leading provider of carrier- and cloud-neutral colocation data center services with 52 state-of-the-art facilities across 13 cities in 11 European countries. Twenty years of experience allows us to deliver operational excellence across our footprint, while consistent designs facilitate our customers' ability to easily scale their deployments across Europe. Our data center campuses are gateways to global connectivity networks and many submarine cables terminate in our data centers, increasingly from Asia, Africa and the Middle East. Our substantial reach across Europe provides our customers with access to approximately 80% of EU GDP.

www.interxion.com

Forward-Looking Statements
This annual report contains forward-looking statements within the meaning of Section 27A of the Securities Act of 1933, as amended, and Section 21E of the Securities Exchange Act of 1934, as amended, with respect to all statements other than statements of historical fact regarding our business, financial condition, results of operations and certain of our plans, objectives, assumptions, projections, expectations or beliefs with respect to these items and statements regarding other future events or prospects. These statements include, without limitation, those concerning: our strategy and our ability to achieve it; expectations regarding sales, profitability and growth; plans for the construction of new data centers; our ability to integrate new acquisitions; our possible or assumed [...]

These factors include, among other things:
■ operating expenses cannot be easily reduced in the short term;
■ inability to utilize the capacity of newly planned or acquired data centers and data center expansions;
■ significant competition;
■ cost and supply of electrical power;
■ overcapacity in the data center industry; and
■ performance under service level agreements.

Cloud Connect

INTERXION SCIENCE PARK
Mike Hollands, Director Market Development & Strategy
Copyright © 2016 Interxion - CONFIDENTIAL

Our Locations
11 countries, 13 cities, 45 data centres
Austria, Belgium, Denmark, France, Germany, Ireland, Netherlands, Spain, Sweden, Switzerland, UK

PeeringDB

INTERXION SCIENCE PARK
The largest interconnectivity hub in Amsterdam
[Diagram "One Virtual Building": Interxion Science Park Campus, NIKHEF and DRT Amsterdam Data Tower (areas shown: 1,500 m², 1,825 m², AM1: 5,225 m²); Equinix AM3 and AM4 (AM3: 12,800 m², AM4: 11,500 m²); Interxion Schiphol Rijk Campus (5 data centres, 27,200 m²); sites joined by Cross Connect and Metro Connect links]

INTERXION AMSTERDAM
Metro Connect solution between Schiphol Rijk and Science Park
• Direct distance is 17 km
• North Route (primary): fibre distance 33 km
• South Route (back-up): fibre distance 34 km
• Latency is 0.85 ms RTD.
• Metro Connects are protected as standard with a 10 ms cutover in the event the primary route fails.
• 1Gb, 10Gb and 100Gb services available.
• 3 day SLA for provisioning a new circuit

INTERXION SCIENCE PARK | 170+ CONNECTIVITY PROVIDERS LOCATED WITHIN ISP AND NIKHEF COMBINED – ALL AVAILABLE VIA AN INTERXION CROSS CONNECT
1&1 Internet, 2AT, A1 Telekom Austria, A2B Facilities, ADES, Datadigest Service Provider, Datafiber Telecom, Datahop, DataWeb, DELTA Kabelcomfort, Init7 (Switzerland), Interconnect Services, Internap, Interoute Managed Services, Prolocation, Proxility, PTCL (Pakistan Telecom Company Ltd.), Raffel Internet, T-Systems Nederland, Turk Telekom, UNET, Unilogic