UNIVERSITY of CALIFORNIA RIVERSIDE Detecting and Verifying

Total pages: 16; file type: PDF; size: 1020 Kb


Recommended publications

-

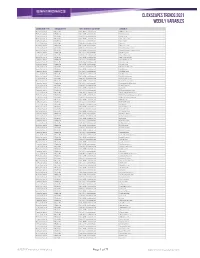

ClickScapes Trends 2021 Weekly Variables

ClickScapes Trends 2021 Weekly Variables

| Connection Type | Variable Type | Tier 1 Interest Category | Variable |
|---|---|---|---|
| Home Internet | Website | Arts & Entertainment | 1075koolfm.com |
| Home Internet | Website | Arts & Entertainment | 8tracks.com |
| Home Internet | Website | Arts & Entertainment | 9gag.com |
| Home Internet | Website | Arts & Entertainment | abs-cbn.com |
| Home Internet | Website | Arts & Entertainment | aetv.com |
| Home Internet | Website | Arts & Entertainment | ago.ca |
| Home Internet | Website | Arts & Entertainment | allmusic.com |
| Home Internet | Website | Arts & Entertainment | amazonvideo.com |
| Home Internet | Website | Arts & Entertainment | amphitheatrecogeco.com |
| Home Internet | Website | Arts & Entertainment | ancestry.ca |
| Home Internet | Website | Arts & Entertainment | ancestry.com |
| Home Internet | Website | Arts & Entertainment | applemusic.com |
| Home Internet | Website | Arts & Entertainment | archambault.ca |
| Home Internet | Website | Arts & Entertainment | archive.org |
| Home Internet | Website | Arts & Entertainment | artnet.com |
| Home Internet | Website | Arts & Entertainment | atomtickets.com |
| Home Internet | Website | Arts & Entertainment | audible.ca |
| Home Internet | Website | Arts & Entertainment | audible.com |
| Home Internet | Website | Arts & Entertainment | audiobooks.com |
| Home Internet | Website | Arts & Entertainment | audioboom.com |
| Home Internet | Website | Arts & Entertainment | bandcamp.com |
| Home Internet | Website | Arts & Entertainment | bandsintown.com |
| Home Internet | Website | Arts & Entertainment | barnesandnoble.com |
| Home Internet | Website | Arts & Entertainment | bellmedia.ca |
| Home Internet | Website | Arts & Entertainment | bgr.com |
| Home Internet | Website | Arts & Entertainment | bibliocommons.com |

Free Photo Editing Apps for Phones, Tablets & Computers

FREE PHOTO EDITING APPS FOR PHONES, TABLETS & COMPUTERS

Here is a compilation of popular, good-quality free photo editing apps. You can download most of these onto your phone, computer, or tablet, and most are available for both Android and Apple devices. They are all free to use and all include a wide range of tools in their basic package, but some offer in-app purchases for add-ons and extra functionality, so be careful. I have only used a few personally, so you will have to explore and see what works best for you. However, I highly recommend Pixlr for quickly adding filters, effects, and frames, and BeFunky for great collage making. These websites/apps will enable you to creatively experiment and develop your own photographs at home.

Instagram - It should go without saying: If you’re taking photos, Instagram is the place to share them. The site is the third-largest social network in the world after Facebook and YouTube, and as of June 2018, the app has over 1 billion monthly active users. PicsArt Photo editor - PicsArt is an image editing, collage and drawing application and a social network. PicsArt enables users to take and edit pictures, draw with layers, and share their images with the PicsArt community and on other networks like Facebook and Instagram. The app is available on iOS, Android, and Windows mobile devices. Pixlr - Pixlr is a Photoshop clone that offers a generous treasure trove of image-editing features along with the ability to import photos from Facebook.

Data from Sensortower

Q4 2019 Store Intelligence Data Digest © 2020 Sensor Tower Inc. - All Rights Reserved

Executive Summary: Highlights. Worldwide app downloads totaled 28.7 billion in 4Q19, a 4.7% year-over-year increase. 2019 full-year downloads grew 9.1% to 114.9B, including 30.6B on the App Store and 84.3B from Google Play. For the first time in more than five years, Google passed Facebook to become the top mobile publisher by worldwide downloads. It had 841M first-time downloads in 4Q19, up 27.6% Y/Y. The biggest story of Q4 2019 was the launch of Disney+, which quickly became the top downloaded app in the U.S. It had more than 30 million U.S. downloads in the quarter. TikTok ended the year as the No. 2 app by global downloads behind WhatsApp. India was responsible for nearly 45% of TikTok’s first-time downloads in 2019.

Table of Contents: Topics Covered. The Q4 2019 Store Intelligence Data Digest offers analysis on the latest mobile trends. Top Charts for the Quarter: Market Overview (worldwide year-over-year download growth for the App Store and Google Play); Top Apps (top non-game apps globally, in the U.S., and in Europe in the quarter); Top Categories (a look at year-over-year growth for the top categories on both app stores); Top Countries (the countries that had the most installs in the quarter and a look at Y/Y growth). 2019 Year in Review: 2019 Year in Review (top apps, games, publishers, new apps, and new games globally in 2019); Disney+ (a look at where Disney+’s launch places it among top SVOD apps in the U.S.).
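The full-year figures can be checked for consistency. The sketch below is a minimal Python example that assumes the rounded values quoted above. It adds the two store totals and backs out the 2018 total implied by the 9.1% growth rate. The variable names are illustrative, and the 2018 value is derived here rather than taken from the report.

```python
# Full-year 2019 figures quoted in the digest, in billions of downloads.
app_store_2019 = 30.6
google_play_2019 = 84.3
reported_total_2019 = 114.9
reported_growth = 0.091  # 9.1% year-over-year, as reported (rounded)

# The two store totals should add up to the reported worldwide figure
# (rounded to one decimal to absorb floating-point noise).
combined = round(app_store_2019 + google_play_2019, 1)
print(f"App Store + Google Play = {combined}B (reported {reported_total_2019}B)")

# Back out the 2018 total implied by the rounded growth rate.
implied_2018 = reported_total_2019 / (1 + reported_growth)
print(f"Implied 2018 downloads: about {implied_2018:.1f}B")
```

Because the growth rate is rounded, the implied 2018 total of roughly 105.3B is only approximate.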

How Safe Are Popular Apps?

HOW SAFE ARE POPULAR APPS? A Study of Critical Vulnerabilities and Why Consumers Should Care

EXECUTIVE SUMMARY

Should consumers be concerned about their personal information, including credit card and location information, the next time they order a pizza online or use their ridesharing app? This report explores the security of the most popular Android apps and highlights the threats posed by the growing reliance of companies and American consumers on software that increasingly contains out-of-date, unsecured versions of open source components. With the annual cost of cybercrime expected to reach $2 trillion by 2019, this problem has not attracted the attention it deserves.[1] The use of open source code has grown across all industries in recent years, allowing companies to lower development costs, bring their products to market faster, and accelerate innovation. Despite these advantages, open source code has certain characteristics that make it particularly attractive to hackers. To gain a deeper understanding of open source vulnerabilities, this report scrutinizes 330 of the most popular Android apps in the U.S., drawn from 33 different categories. Using Insignary’s Clarity tool, these apps were scanned for known, preventable security vulnerabilities. Of the sample, 105 apps (32% of the total) were found to contain vulnerabilities across several severity levels (critical, high, medium, and low risk), totaling 1,978 vulnerabilities. In effect, the results found an average of 6 vulnerabilities per app over the entire sample, or an alarming 19 vulnerabilities per app for the 105 affected apps. Among the detected vulnerabilities, 43% are considered critical or high risk by the National Vulnerability Database.
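The two headline averages differ only in their denominator. The "6 per app" figure divides by all 330 scanned apps, and the "19 per app" figure divides by the 105 affected apps. The minimal Python sketch below reproduces both from the reported counts. The variable names are illustrative. The critical/high count is an estimate, because the 43% share is itself rounded.

```python
# Counts reported in the study.
apps_scanned = 330
apps_with_vulns = 105
total_vulns = 1978
critical_or_high_share = 0.43  # rounded share from the report

share_vulnerable = apps_with_vulns / apps_scanned         # fraction of apps affected
per_app_all = total_vulns / apps_scanned                  # average over every scanned app
per_app_affected = total_vulns / apps_with_vulns          # average over affected apps only
critical_or_high = critical_or_high_share * total_vulns   # approximate count

print(f"{share_vulnerable:.0%} of apps affected")
print(f"{per_app_all:.0f} vulnerabilities per app (all apps)")
print(f"{per_app_affected:.0f} vulnerabilities per app (affected apps)")
print(f"about {critical_or_high:.0f} critical/high findings")
```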

Snapchat Free Download APK

Snapchat free download APK. Snapchat is a quick way to send photos and video chat on the go. Because photos and videos self-destruct, your friends can't share the photos you send through Snapchat. Enjoy a quick and fun mobile conversation! Attach a photo or video, add a caption, and send it to a friend. They will view it, laugh, and then the Snap disappears from the screen. You can also add a Snap to your Story, much like on Instagram, at the touch of a button to share your day with all your friends. When you live in the moment, life is more fun.

To log in and send your first Snap after downloading and installing Snapchat, you must first create an account with a valid email address. There are no options for logging in with Facebook or Google. You don't have to add people as friends on Snapchat to share items. In fact, you can send a Snap to anyone, whether it's someone you know or a stranger you found through Snapchat's search tool. However, many users adjust their privacy settings to receive Snaps only from friends, so Snaps you send to strangers may not reach them.

Navigation: When you open the app, it starts on the camera screen, where you can take a photo or record a video with your main or front-facing camera. At the bottom of the screen are two icons, a simple square and a friends tab. Tap the square to see the action feed, with the list of contacts you sent pictures to or received photos from.

Q1 2021: Store Intelligence Data Digest — Explore the Quarter’s Top Apps, Games, Publishers, and More © 2021 Sensor Tower Inc

Q1 2021: Store Intelligence Data Digest — Explore the Quarter’s Top Apps, Games, Publishers, and More © 2021 Sensor Tower Inc. - All Rights Reserved

Executive Summary: Highlights. Worldwide app downloads totaled 36.6 billion in 1Q21, an 8.7% year-over-year increase. App Store downloads fell 8.6% to 8.4B (due to an outsized total in 1Q20 during the initial spread of COVID-19), while Google Play downloads increased 15.3% to 28.2B. Crash Bandicoot: On the Run from King showed the power of popular console and PC properties on mobile, releasing worldwide on March 25. The game was a massive success in its first week, becoming the most downloaded title in Q1 2021. Q1 2021 was a dynamic quarter for finance apps, with increased demand for stock trading apps caused by the WallStreetBets subreddit and soaring cryptocurrency prices. As a result, stock trading and cryptocurrency apps saw installs skyrocket. Secure messaging apps continued to gain popularity in Q1 2021. Apps such as Telegram and Signal experienced rapid growth last quarter and gained market share versus the top established messaging apps.

About This Data: Methodology. Sensor Tower’s Mobile Insights team compiled the download estimates provided in this report using the Sensor Tower Store Intelligence platform. Figures cited in this report reflect App Store and Google Play download estimates for January 1, 2016 through March 31, 2021. Could your business benefit from access to Store Intelligence insights and the highly accurate data used to build this report?
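The per-store changes should reconcile with the headline growth rate. The minimal Python sketch below assumes the rounded figures above. It backs out each store's 1Q20 downloads from its Y/Y change and recomputes total growth. The implied 1Q20 values are derived here and are not figures from the report. The dictionary layout is purely illustrative.

```python
# Q1 2021 store figures from the digest: (downloads in billions, Y/Y change).
stores = {"App Store": (8.4, -0.086), "Google Play": (28.2, 0.153)}

# Back out each store's 1Q20 downloads implied by its Y/Y change.
prior = {name: dl / (1 + change) for name, (dl, change) in stores.items()}

current_total = sum(dl for dl, _ in stores.values())
prior_total = sum(prior.values())
growth = current_total / prior_total - 1

for name, value in prior.items():
    print(f"{name} 1Q20 (implied): {value:.1f}B")
print(f"Total 1Q21: {current_total:.1f}B, growth vs 1Q20: {growth:.1%}")
```

The recomputed growth comes out at about 8.8%. This agrees with the reported 8.7% to within the rounding of the inputs.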

The Value of Technology Releases in the Mobile App Ecosystem

The Value of Technology Releases in the Mobile App Ecosystem: THE ECONOMIC IMPACT OF SOFTWARE DEVELOPER KITS. Jin-Hyuk Kim, University of Colorado Boulder; Yidan Sun, Illinois Institute of Technology; Liad Wagman, Illinois Institute of Technology. January 2021.

1. EXECUTIVE SUMMARY The smartphone digital ecosystem comprises many layers. Platforms release new hardware, operating systems, and functionalities, accompanied by Software Developer Kits (SDKs), which enable app developers to take advantage of the new hardware and software features. Developers, engineers, and designers come up with innovative product ideas, and these ideas can result in new apps offered to consumers. The academic literature offers a theoretical understanding of some layers of platform markets, and using SDKs is well-established practice in the app ecosystem. Even so, how much economic value or consumer surplus such technology releases generate has remained elusive. Using an expansive dataset of iOS and Android app market activities from an app intelligence service provider, we derive results that identify robust effects of technology (SDK) releases on app development, which in turn affect smartphone sales. In addition, we link technology releases to new job creation and provide a measure that estimates the consumer surplus associated with apps. An implication of SDK adoption is that more apps leverage new platform technologies. Put simply, SDK adoption reduces the time it takes to develop apps and enables developers to introduce and take advantage of new hardware functionalities. To that effect, we present preliminary evidence that SDK releases may increase early-stage startup funding as well as the size of employment in a relevant high-tech sector (e.g., software developers).

KS3/KS4/KS5 Photography Online Resources Experimentation

KS3/KS4/KS5 PHOTOGRAPHY ONLINE RESOURCES EXPERIMENTATION

Hi everyone, I have been researching and compiling information on free photo editing apps, as most of you won’t have access to Photoshop/Adobe Creative Suite at home. You can download most of these onto your phone, computer, or tablet; some programs are web-based, e.g. Pixlr. These websites/apps will enable you to creatively experiment and develop your own photographs at home. EXPERIMENT (AO2) with your own photographs/chosen themes. **Add your original photographs & edits to your SWAY, under the heading “Experimentation with Photo Apps”. You could use a comparison card if you want to show the DEVELOPMENT (AO3) of an idea.** Remember to save your photographs in JPEG format before uploading. Have fun! DJM

Instagram - It should go without saying: If you’re taking photos, Instagram is the place to share them. The site is the third-largest social network in the world after Facebook and YouTube, and as of June 2018, the app has over 1 billion monthly active users. PicsArt Photo editor - PicsArt is an image editing, collage and drawing application and a social network. PicsArt enables users to take and edit pictures, draw with layers, and share their images with the PicsArt community and on other networks like Facebook and Instagram. The app is available on iOS, Android, and Windows mobile devices. Pixlr - Pixlr is a Photoshop clone that offers a generous treasure trove of image-editing features along with the ability to import photos from Facebook. Pixlr has also been compared to GIMP in terms of functionality and user interface.

Hello JWA Photographers, I Have Been Researching and Compiling

Hello JWA Photographers, I have been researching and compiling information on free photo editing apps. You can download most of these onto your phone, computer, or tablet. I’ve only used a few personally, so you’ll have to explore and see what works best for you. However, I highly recommend Snapseed and Photoshop Express. These websites/apps will enable you to creatively experiment and develop your own photographs at home. Please email me if you have any recommendations to share.

Instagram - It should go without saying: If you’re taking photos, Instagram is the place to share them. The site is the third-largest social network in the world after Facebook and YouTube, and as of June 2018, the app has over 1 billion monthly active users. PicsArt Photo editor - PicsArt is an image editing, collage and drawing application and a social network. PicsArt enables users to take and edit pictures, draw with layers, and share their images with the PicsArt community and on other networks like Facebook and Instagram. The app is available on iOS, Android, and Windows mobile devices. Pixlr - Pixlr is a Photoshop clone that offers a generous treasure trove of image-editing features along with the ability to import photos from Facebook. Pixlr has also been compared to GIMP in terms of functionality and user interface. Although it may be overkill for some, it’s just the right balance of form and functionality for others. Foodie - Everyone has been guilty of taking pictures of their food at some point. Foodie embraces this impulse and helps you take your food photos to an entirely new level.

Measuring and Mitigating Security and Privacy Issues on Android Applications

Measuring and Mitigating Security and Privacy Issues on Android Applications. Candidate: Lucky Onwuzurike. Supervisor: Dr. Emiliano De Cristofaro. Examiners: Professor Lorenzo Cavallaro, King's College London; Dr. Steven Murdoch, UCL Computer Science. A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy, Information Security Research Group, Department of Computer Science, University College London.

Declaration: I, Lucky Onwuzurike, confirm that the work presented in this thesis is my own. Where information has been derived from other sources, I confirm that this has been appropriately indicated in the thesis.

Abstract: Over time, the increasing popularity of the Android operating system (OS) has resulted in its user base surging past 1 billion unique devices. As a result, cybercriminals and other non-criminal actors are attracted to the OS due to the amount of user information they can access. Previous work investigating security and privacy issues in the Android ecosystem has shown that malevolent actors can steal users' sensitive personal information over the network, via malicious applications, or through vulnerability exploits, presenting proofs of concept or evidence of exploits. Due to the ever-changing nature of the Android ecosystem and the arms race involved in detecting and mitigating malicious applications, it is important to continuously examine the ecosystem for security and privacy issues. This thesis presents research contributions in this space and is divided into two parts. The first part focuses on measuring and mitigating vulnerabilities in applications due to poor implementation of security and privacy protocols. In particular, we investigate the implementation of the SSL/TLS protocol validation logic, and properties such as ephemerality, anonymity, and end-to-end encryption.
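The thesis studies how apps implement SSL/TLS validation logic. The minimal sketch below shows what that logic has to enforce. It uses Python's standard `ssl` module, which is an assumption for illustration only: the thesis concerns Android apps, whose Java/Kotlin TLS APIs differ. A correctly configured client context does two things. It verifies the server's certificate chain, and it checks that the certificate matches the hostname. The second context shows the pattern of switching both checks off, which accepts any certificate.

```python
import ssl

# A default client context: verifies the server's certificate chain against
# the system trust store and checks that the certificate matches the hostname.
secure_ctx = ssl.create_default_context()
print(secure_ctx.verify_mode == ssl.CERT_REQUIRED)  # True
print(secure_ctx.check_hostname)                    # True

# Anti-pattern, for illustration only: with both checks switched off, any
# certificate is accepted, so a man-in-the-middle can impersonate the server.
insecure_ctx = ssl.create_default_context()
insecure_ctx.check_hostname = False       # must be disabled before verify_mode
insecure_ctx.verify_mode = ssl.CERT_NONE  # skip certificate-chain verification
print(insecure_ctx.verify_mode == ssl.CERT_NONE)    # True
```

Python refuses to set `CERT_NONE` while `check_hostname` is still enabled. The two assignments in the insecure context therefore have to come in this order.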

Art 2020 - 2021 Hi My Name Is Ms

Oh Snap!!! Art 2020-2021. Hi, my name is Ms. Dagum, and let me tell you... When the world took a pause... The classes went to a virtual format… March 13, 2020: we went on spring break and never came back...

The challenge: The arts are essential, but enrichment classes often involve physical materials, group activities, or even audiences, requirements that aren't easily met in digital environments. Additionally, teachers typically serve multiple grades and classes that require differentiated lesson plans...

No access to art materials… what to do...
• Instead of focusing on skill-based assignments, some teachers use basic household materials or found objects (many students have no access to the typical art supplies).
• Social media apps: Instagram, Snapchat/Bitmojis, etc.
• Digital collaging with apps...

FACE 2 FACE: Face-to-face instruction means that students and instructors interact in real time during face-to-face lectures. Students in the classroom adhering to social distancing protocol… Teachers present lesson that will

BLENDED: Blended courses (also known as hybrid or mixed-mode courses) are classes where a portion of the traditional face-to-face instruction is replaced by web-based online learning. Blended learning uses technology to combine in-class and out-of-class learning.

DIGITAL/VIRTUAL: Education that takes place over the Internet. It is often referred to as “e-learning,” among other terms.
• Online Learning: Internet-based courses offered synchronously and/or asynchronously.
• Mobile Learning: by means of devices such