Measuring and Mitigating Security and Privacy Issues on Android Applications

Total pages: 16

File type: PDF, Size: 1020 Kb

Recommended publications

App for Creating a Songs to Download 5 Best Free Happy Birthday Video Maker Apps

Precious moments in life fade from memory quickly, and remembering birthday parties becomes more difficult as time goes on. Luckily, we no longer have to rely on memory to remember the most amazing moments of a birthday party because our smartphones can capture high-resolution videos of those moments. Raw footage captured by any type of camera is rarely presentable, since it may contain scenes that you simply don't want to include in your videos. If you are looking for a video maker app that lets you wish a happy birthday to your loved ones or create a stunning video of their birthday party, you are in the right place, because in this article we are going to take you through the best happy birthday video maker apps. For those who want to make a happy birthday video with online tools, check our pick of the top 10 best online tools to make happy birthday videos. Top 5 Best Free Happy Birthday Video Maker Apps. You don't have to be a skilled video editor to create happy birthday videos with any of the apps featured in this article, because each video maker app offers simple and creative tools that enable you to create a video in a few simple steps. Let's have a look at some of the best options on the market. Make Happy Birthday Slideshow Video with Filter/Effect/Element in Filmora. To give your birthday video more effects, emojis, elements, filters and titles, I highly recommend trying the Wondershare Filmora9 video editor.

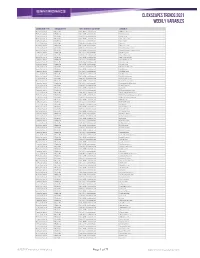

Clickscapes Trends 2021 Weekly Variables

| Connection Type | Variable Type | Tier 1 Interest Category | Variable |
| --- | --- | --- | --- |
| Home Internet | Website | Arts & Entertainment | 1075koolfm.com |
| Home Internet | Website | Arts & Entertainment | 8tracks.com |
| Home Internet | Website | Arts & Entertainment | 9gag.com |
| Home Internet | Website | Arts & Entertainment | abs-cbn.com |
| Home Internet | Website | Arts & Entertainment | aetv.com |
| Home Internet | Website | Arts & Entertainment | ago.ca |
| Home Internet | Website | Arts & Entertainment | allmusic.com |
| Home Internet | Website | Arts & Entertainment | amazonvideo.com |
| Home Internet | Website | Arts & Entertainment | amphitheatrecogeco.com |
| Home Internet | Website | Arts & Entertainment | ancestry.ca |
| Home Internet | Website | Arts & Entertainment | ancestry.com |
| Home Internet | Website | Arts & Entertainment | applemusic.com |
| Home Internet | Website | Arts & Entertainment | archambault.ca |
| Home Internet | Website | Arts & Entertainment | archive.org |
| Home Internet | Website | Arts & Entertainment | artnet.com |
| Home Internet | Website | Arts & Entertainment | atomtickets.com |
| Home Internet | Website | Arts & Entertainment | audible.ca |
| Home Internet | Website | Arts & Entertainment | audible.com |
| Home Internet | Website | Arts & Entertainment | audiobooks.com |
| Home Internet | Website | Arts & Entertainment | audioboom.com |
| Home Internet | Website | Arts & Entertainment | bandcamp.com |
| Home Internet | Website | Arts & Entertainment | bandsintown.com |
| Home Internet | Website | Arts & Entertainment | barnesandnoble.com |
| Home Internet | Website | Arts & Entertainment | bellmedia.ca |
| Home Internet | Website | Arts & Entertainment | bgr.com |
| Home Internet | Website | Arts & Entertainment | bibliocommons.com |

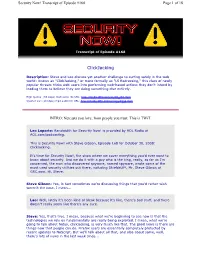

Clickjacking

Security Now! Transcript of Episode #168: ClickJacking. Description: Steve and Leo discuss yet another challenge to surfing safely in the web world: Known as "ClickJacking," or more formally as "UI Redressing," this class of newly popular threats tricks web users into performing web-based actions they don't intend by leading them to believe they are doing something else entirely. High quality (64 kbps) mp3 audio file URL: http://media.GRC.com/sn/SN-168.mp3 Quarter size (16 kbps) mp3 audio file URL: http://media.GRC.com/sn/sn-168-lq.mp3 INTRO: Netcasts you love, from people you trust. This is TWiT. Leo Laporte: Bandwidth for Security Now! is provided by AOL Radio at AOL.com/podcasting. This is Security Now! with Steve Gibson, Episode 168 for October 30, 2008: Clickjacking. It's time for Security Now!, the show where we cover everything you'd ever want to know about security. And we do it with a guy who is the king, really, as far as I'm concerned, the man who discovered spyware, named spyware, wrote some of the most used security utilities out there, including ShieldsUP!, Mr. Steve Gibson of GRC.com. Hi, Steve. Steve Gibson: Yes, in fact sometimes we're discussing things that you'd rather wish weren't the case. I mean... Leo: Well, lately it's been kind of bleak because it's like, there's bad stuff, and there doesn't really seem like there's any cure. Steve: Yes, that's true.

Metro Trains 4

Contents: Dumb Ways to Die, by Metro Trains; Volvo Gets Flexible; Have Michelin Guide, Will Travel; Betty Crocker’s Sweet Ambitions; Blendtec Crushes It at Content Marketing; Jell-O Shapes its Future; HSBC Makes an Elevator Pitch to Small Businesses; LinkedIn Marketing Solutions Creates an Owned Media Empire. Intro will go here. Astonishing Tales of Content Marketing. Dumb Ways to Die, by Metro Trains. There’s a movement underway to add humor and personality to marketing. I’m a major supporter of that movement. There’s no substitute for human emotion when you’re trying to make a connection with your audience. Surely, though, there are times when humor is strictly inappropriate. For example, say you work for a staid, buttoned-down industry like public transportation. Now imagine you’re in that industry and need to get across a serious, life-or-death public safety message. You should absolutely avoid humor and personality in this context. Unless, that is, you want to create a worldwide multimedia phenomenon, like Australia’s Metro Trains Melbourne did with their video Dumb Ways to Die. Those adorable, disaster-prone animated beans became a runaway viral hit in 2012. The video currently sits at over 100 million views on YouTube. The soundtrack tune cracked the Top 100 in the Netherlands and hit #38 on the UK Indie charts. Two spinoff games racked up millions of downloads. So how did a public safety announcement become such a smashing success? Read on. The Message People Need (But Don’t Want) to Hear. Metro Trains Melbourne had an important message to get across: Be safe around trains, whether you’re driving near tracks or waiting on a platform. Instead of starting with the message and crafting dire visuals around it, they wanted to create something people would enjoy

Security Guide for Cisco Unified Communications Manager, Release 11.0(1) First Published: 2015-06-08

Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED "AS IS" WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

A Semi-Automated Security Advisory System to Resist Cyber-Attack in Social Networks

Samar Muslah Albladi and George R S Weir, University of Strathclyde, Glasgow G1 1XH, UK, {samar.albladi; george.weir}@strath.ac.uk. Abstract. Social networking sites often witness various types of social engineering (SE) attacks. Yet, limited research has addressed the most severe types of social engineering in social networks (SNs). The present study investigates the extent to which people respond differently to different types of attack in a social network context and how we can segment users based on their vulnerability. In turn, this leads to the prospect of a personalised security advisory system. 316 participants have completed an online-questionnaire that includes a scenario-based experiment. The study result reveals that people respond to cyber-attacks differently based on their demographics. Furthermore, people’s competence, social network experience, and their limited connections with strangers in social networks can decrease their likelihood of falling victim to some types of attacks more than others. Keywords: Advisory System, Social Engineering, Social Networks. 1 Introduction. Individuals and organisations are becoming increasingly dependent on working with computers, accessing the World Wide Web and, more importantly, sharing data through virtual communication. This makes cyber-security one of today’s greatest issues. Protecting people and organisations from being targeted by cybercriminals is becoming a priority for industry and academia [1]. This is due to the huge potential damage that could be associated with losing valuable data and documents in such attacks. Previous research focuses on identifying factors that influence people’s vulnerability to cyber-attack [2] as the human has been characterised as the weakest link in information security research.

Prospectus for the Initial Public Offering of up to 14,634,146 Ordinary Shares in the Company at an Offer Price of $0.41 Per Share Prospectus to Raise $6.0 Million

Atomos Limited (ACN 139 730 500). Underwriters and Joint Lead Managers: Morgans Corporate Limited (AFSL 235407) and Henslow Pty Limited (AFSL 483168). Australian Legal Adviser: Maddocks Lawyers. Important Notices. Offer. The Offer contained in this Prospectus is an invitation to apply for fully paid ordinary shares in Atomos Limited (ACN 139 730 500) (Company). This Prospectus is issued by the Company for the purposes of Chapter 6D of the Corporations Act. Lodgement and Listing. This Prospectus is dated 30 November 2018 (Prospectus Date) and a copy of this Prospectus was lodged with ASIC on that date. The Company will apply to ASX for admission of the Company to the official list of ASX and for quotation of its Shares on ASX within seven days after the date of this Prospectus. Historical Financial Information is presented on both a statutory and pro forma basis (refer Section 4.2) and has been prepared and presented in accordance with the recognition and measurement principles of Australian Accounting Standards (AAS) (including the Australian Accounting Interpretations) issued by the Australian Accounting Standards Board (AASB), which are consistent with International Financial Reporting Standards (IFRS) and interpretations issued by the International Accounting Standards Board (IASB). This Prospectus also includes Forecast Financial Information based on the best estimate assumptions of the Directors. The basis of preparation and presentation of the Forecast Financial Information is consistent with the

Free Photo Editing Apps for Phones, Tablets & Computers

Here is a compilation of popular and good-quality free photo editing apps. You can download most of these onto your phone, computer, or tablet device and most are available for both Android and Apple devices too. They are all free to use and all have a wide range of applications and tools in their basic package, but some do have in-app purchases for add-ons and increased functionality – so be careful. I have only used a few personally, so you will have to explore and see what works best for you. However, I highly recommend Pixlr for the quick, easy addition of filters, effects, and frames and beFunky for great collage making. These websites/apps will enable you to creatively experiment and develop your own photographs at home. Instagram - It should go without saying: If you’re taking photos, Instagram is the place to share them. The site is the third-largest social network in the world after Facebook and YouTube, and as of June 2018, the app has over 1 billion monthly active users. PicsArt Photo editor - PicsArt is an image editing, collage and drawing application and a social network. PicsArt enables users to take and edit pictures, draw with layers, and share their images with the PicsArt community and on other networks like Facebook and Instagram. The app is available on iOS, Android, and Windows mobile devices. Pixlr - Pixlr is a Photoshop clone that offers a generous treasure trove of image-editing features along with the ability to import photos from Facebook.

Financial Fraud and Internet Banking: Threats and Countermeasures

Report by François Paget, McAfee® Avert® Labs. Table of Contents: Some Figures; U.S. Federal Trade Commission Statistics; CyberSource; Internet Crime Complaint Center; In Europe; The Many Faces of Fraud; Small- and large-scale identity theft; Carding and skimming; Phishing and pharming; Crimeware; Money laundering; Mules; Virtual casinos; Pump and dump; Nigerian advance fee fraud (419 fraud); Auctions; Online shopping; Anonymous payment methods; Protective Measures; Scoring; Europay, MasterCard, and Visa (EMV) standard; PCI-DSS; Secure Sockets Layer (SSL) and Transport Secured Layer (TLS) protocols; SSL extended validation; 3-D Secure technology; Strong authentication and one-time password devices; Knowledge-based authentication; Email authentication; Conclusion; About McAfee, Inc. Financial fraud has many faces. Whether it involves swindling, debit or credit card fraud, real estate fraud, drug trafficking, identity theft, deceptive telemarketing, or money laundering, the goal of cybercriminals is to make as much money as possible within a short time and to do so inconspicuously. This paper will introduce you to an array of threats facing banks and their customers. It includes some statistics and descriptions of solutions that should give readers—whether they are responsible for security in a financial organization or a customer—an overview of the current situation. Some Figures U.S.

Glossary of Tools and Terms

This appendix includes a list of terms and resources we introduced and used throughout the book. Apps, programs, and websites are listed, as well as digital and academic terms that will aid you in lesson planning both NOW and in the future. 1:1 or one to one: Describes the number of technology devices (iPads, laptops, Chromebooks) given to each student in an academic setting; a 1:1 school has one device per each student. 1:2 or one to two: Describes the number of technology devices (iPads, laptops, Chromebooks) given to each student in an academic setting; a 1:2 school means that one technology device is available for every two students in an academic setting. Two classes may share one class set, or students may partner up to use devices. Animoto (https://animoto.com): A video-creation website and app with limited free features and options for educator accounts (see https://animoto.com/education/classroom). Annotable (www.moke.com/annotable): A full-featured image-annotation tool. Appy Pie (www.appypie.com): A free do-it-yourself software tool for building apps in three easy steps. Audacity (www.audacityteam.org): A free macOS and Windows software tool for editing complex audio clips. AutoRap (www.smule.com/listen/autorap/79): An iOS and Android app for mixing audio tracks to create a rap; the free version allows users to choose from two beats to make a song, and the paid version allows users to choose from a large selection of beats, including new and popular songs.

Forensic Investigations of Popular Ephemeral Messaging Applications on Android and iOS Platforms

Forensic Investigations of Popular Ephemeral Messaging Applications on Android and iOS Platforms. M A Hannan Bin Azhar, Rhys Cox and Aimee Chamberlain, School of Engineering, Technology and Design, Canterbury Christ Church University, Canterbury, United Kingdom. Abstract: Ephemeral messaging applications are growing increasingly popular on the digital mobile market. However, they are not always used with good intentions. Criminals may see a gateway into private communication with each other through this transient application data. This could negatively impact criminal court cases for evidence, or civil matters. To find out if messages from such applications can indeed be recovered or not, a forensic examination of the device would be required by the law enforcement authority. This paper reports mobile forensic investigations of ephemeral data from a wide range of applications using both proprietary and freeware forensic tools. Both Android and iOS platforms were used in the investigation. It is not just criminals using EMAs. Mobile phones are an essential part of modern-day life. According to the Global System for Mobile Communications [6], there were five billion mobile users in the world by the second quarter of 2017, with a prediction that another 620 million people will become mobile phone users by 2020, together that would account for almost three quarters of the world population. Due to the increasing popularity in mobile phones, there is naturally an increasing concern over mobile security and how safe communication between individuals or groups is. It is known that EMAs can be used in civil concerns such as

Clickjacking: Attacks and Defenses

Lin-Shung Huang (Carnegie Mellon University), Alex Moshchuk (Microsoft Research), Helen J. Wang (Microsoft Research), Stuart Schechter (Microsoft Research), Collin Jackson (Carnegie Mellon University). Abstract. Clickjacking attacks are an emerging threat on the web. In this paper, we design new clickjacking attack variants using existing techniques and demonstrate that existing clickjacking defenses are insufficient. Our attacks show that clickjacking can cause severe damages, including compromising a user’s private webcam, email or other private data, and web surfing anonymity. We observe the root cause of clickjacking is that an attacker application presents a sensitive UI element of a target application out of context to a user (such as hiding the sensitive UI by making it transparent), and hence the user is tricked to act out of context. To address this root [...] such as a “claim your free iPad” button. Hence, when the user “claims” a free iPad, a story appears in the user’s Facebook friends’ news feed stating that she “likes” the attacker web site. For ease of exposition, our description will be in the context of web browsers. Nevertheless, the concepts and techniques described are generally applicable to all client operating systems where display is shared by mutually distrusting principals. Several clickjacking defenses have been proposed and deployed for web browsers, but all have shortcomings. Today’s most widely deployed defenses rely on frame-busting [21, 37], which disallows a sensitive page from being framed (i.e., embedded within another web page).