Improving External Validity of Machine Learning, Reduced Form, And



Recommended publications

-

Translated and Edited Publications

Translated and edited publications There are nearly 250 items in this list, including co-translations and revised editions. Editing and translations from German and French are indicated, otherwise the listed publications are translations from Dutch. Katrijn Van Bragt and Sven Van Dorst, Study of a Young Woman: An exceptional glimpse into Michaelina Wautier’s studio (1604–1689) (Phoebus Focus 19) (Antwerp: The Phoebus Foundation, 2020) Leen Kelchtermans, Portrait of Elisabeth Jordaens: Jacob Jordaens’ (1593–1678) tribute to his eldest daughter and country life (Phoebus Focus 18) (Antwerp: The Phoebus Foundation, 2020) Nils Büttner, A Sailor and a Woman Embracing: Peter Paul Rubens (1577–1640) and modern painting (Phoebus Focus 16) (Antwerp: The Phoebus Foundation, 2020) Dina Aristodemo, Descrittione di tutti i Paesi Bassi: Lodovico Guicciardini and the Low Countries (Phoebus Focus 15) (Antwerp: The Phoebus Foundation, 2020) Timothy De Paepe, Elegant Company in a Garden: A musical painting full of sixteenth-century wisdom (Phoebus Focus 9) (Antwerp: The Phoebus Foundation, 2020) Chris Stolwijk and Renske Cohen Tervaert, Masterpieces in the Kröller- Müller Museum (Otterlo: Kröller-Müller Museum, 2020) Maximiliaan Martens et al., Van Eyck: An Optical Revolution (Antwerp: Hannibal, 2020). All the translations from Dutch. Nienke Bakker and Lisa Smit (eds.), In the Picture: Portraying the Artist (Amsterdam: Van Gogh Museum, 2020) Hans Vlieghe, Apollo on His Sun Chariot (Phoebus Focus 8) (Antwerp: The Phoebus Foundation, 2019) Joris Van Grieken, Maarten Bassens, et al., Bruegel in Black & White: The World of Bruegel (Antwerp: Hannibal, 2019). Translation of all but one of the texts. Bert van Beneden (ed.) From Titian to Rubens: Masterpieces from Antwerp and other Flemish Cities (Ghent: Snoeck, 2019); exhibition catalogue Venice, Palazzo Ducale. -

Ii. Disposiciones Y Anuncios Del Estado Sumario

Suplemento al número 81, correspondiente al día 6 de abril de 2009 Fascículo I SUMARIO II. DISPOSICIONES Y ANUNCIOS DEL ESTADO — Coslada (Régimen económico) .................... 183 — Estremera (Régimen económico) .................. 209 — Ministerios .................................... 1 — Getafe (Régimen económico) ..................... 210 — Humanes de Madrid (Régimen económico) .......... 215 III. ADMINISTRACIÓN LOCAL — Leganés (Régimen económico) .................... 217 — Pozuelo de Alarcón (Régimen económico) ........... 221 AYUNTAMIENTOS — San Fernando de Henares (Régimen económico) ...... 236 — Madrid (Régimen económico) ..................... 95 — San Martín de la Vega (Régimen económico) ......... 238 — Madrid (Contratación) ........................... 150 — San Sebastián de los Reyes (Régimen económico) ..... 249 — Ajalvir (Régimen económico) ..................... 152 — Valdemorillo (Régimen económico) ................ 250 — Alcalá de Henares (Régimen económico) ............ 152 — Alcobendas (Régimen económico) ................. 152 — Aranjuez (Régimen económico) ................... 155 IV. ADMINISTRACIÓN DE JUSTICIA — Boadilla del Monte (Régimen económico) ........... 182 — Juzgados de Primera Instancia e Instrucción .......... 252 — Brunete (Régimen economico) .................... 183 — JuzgadosdeloSocial............................ 252 II. DISPOSICIONES Y ANUNCIOS DEL ESTADO MINISTERIO DE TRABAJO E INMIGRACIÓN ción del presente anuncio en el tablón de edictos del Ayuntamiento del último domicilio co- nocido del deudor y en -

MREB Cleared Protocols

MREB Cleared Protocols MREB# Date Cleared Title Faculty Faculty Dept Student Type Level of Project 2003 014 Feb-10-2003 I. Bourgeault Health Studies R. Walji Faculty 2004 064 May-11-2004 Canadian Professors as Public Intellectuals N. McLaughlin Sociology K. Turcotte Faculty 2004 157 Oct-24-2004 M. Cadieux Psychology Faculty 2005 061 Aug-10-2005 S. Mestelman Economics M. Rogers Faculty 2005 168 Mar-01-2006 An exploration of Self-Actualization (the mind-body- W. Shaffir Sociology Y. Le Blanc PhD energy connection) as a Holistic Health Practice: participant observation 2005 186 Feb-05-2006 B. Milliken Psychology Hannah Teja Faculty 2006 006 Feb-06-2006 Automaticity and Focus of Attention in Handwriting J. Starkes Kinesiology J. Cullen Faculty 2006 007 Feb-06-2006 Actively Building Capacity in Primary Care Research C. Levitt Family Medicine Faculty 2006 008 Feb-09-2006 Parents: The unacknowledged victims of childhood T. Vaillancourt Psychology J. Johnson PostDoc bullying 2006 009 Feb-09-2006 Community medicine focus groups I. Tyler Family Medicine M. Hau Medical Student 2006 011 Feb-21-2006 Code-switching and prosody in sentence comprehension C. Anderson Linguistics Faculty 2006 012 Feb-28-2006 Elicited Writing Errors K. Humphreys Psychology D. Pollock MA 2006 013 Apr-05-2006 Correlation Between Student's High-School Mathematics M. Lovric Mathematics M. Fioroni MA Backgrounds and Performance in First Year Mathematics at McMaster University 2006 014 Mar-08-2006 The Impossible Policy Problem? An Exploration of the V. Satzewich Sociology A. Walker MA Policy Roadblocks to Foreign Credential Recognition in Canada 2006 015 Feb-05-2006 Inclusive Occupational Therapy Education: A Pilot Survey B. -

Barriers to Clinical Trial Enrollment in Racial and Ethnic Minority Patients with Cancer

cancercontroljournal.org Vol. 23, No. 4, October 2016 H. LEE MOFFITT CANCER CENTER & RESEARCH INSTITUTE, AN NCI COMPREHENSIVE CANCER CENTER Barriers to Clinical Trial Enrollment in Racial and Ethnic Minority Patients With Cancer Racial and Ethnic Differences in the Epidemiology and Genomics of Lung Cancer Black Heterogeneity in Cancer Mortality: US-Blacks, Haitians, and Jamaicans Genomic Disparities in Breast Cancer Among Latinas Clinical Considerations of Risk, Incidence, and Outcomes of Breast Cancer in Sexual Minorities Construction and Validation of a Multi-Institutional Tissue Microarray of Invasive Ductal Carcinoma From Racially and Ethnically Diverse Populations Determinants of Treatment Delays Among Underserved Hispanics With Lung and Head and Neck Cancers Geographical Factors Associated With Health Disparities in Prostate Cancer Disparities in Penile Cancer Chemoprevention in African American Men With Prostate Cancer Disparities in Adolescents and Young Adults With Cancer Tobacco-Related Health Disparities Across the Cancer Care Continuum Cancer Control is included in Index Medicus/MEDLINE Editorial Board Members Editor: Conor C. Lynch, PhD Production Team: Assistant Member Lodovico Balducci, MD Veronica Nemeth Tumor Biology Senior Member Editorial Coordinator Program Leader, Senior Adult Oncology Program Kristen J. Otto, MD Sherri Damlo Moffitt Cancer Center Associate Member Medical Copy Editor Head and Neck and Endocrine Oncology Diane McEnaney Deputy Editor: Graphic Design Consultant Julio M. Pow-Sang, MD Michael A. Poch, MD Senior Member Assistant Member Associate Editor of Chair, Department of Genitourinary Oncology Genitourinary Oncology Educational Projects Director of Moffitt Robotics Program Jeffery S. Russell, MD, PhD John M. York, PharmD Moffitt Cancer Center Assistant Member Akita Biomedical Consulting Endocrine Tumor Oncology 1111 Bailey Drive Editor Emeritus: Elizabeth M. -

Delegate List

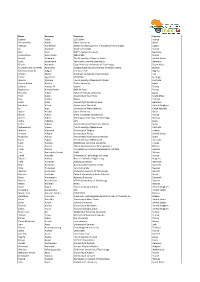

Name Surname Company Country Gabriel Abba LCFC‐ENIM France Hossameldin Abbas Qatar University Qatar Hentout Abdelfetah Centre for Development of Advanced Technologies Algeria Ali Abdullah Kuwait University Kuwait Dirk Abel RWTH Aachen University Germany Cesar Arturo Aceves Lara INRA LISBP France Behcet Acikmese The University of Texas at Austin United States Carlo Ackermann Technical University Darmstadt Germany Charles Adewole Cape Peninsula University of Technology South Africa OLUWATOSIN LUKMAN ADEYEMO postgraduate school,university of ibadan,nigeria Nigeria Olushola Olayinka Adigun Leafwater Ltd Nigeria Ahmad Afshar Amirkabir University of Technology Iran Carlos Agostinho UNINOVA Portugal Ricardo Aguilera The University of New South Wales Australia Yaseen Adnan Ahmed Osaka University Japan Sofiane Ahmed Ali Irseem France Meghnous Ahmed Rédha INSA de Lyon France Shin Ichi Aihara Tokyo University of Science Japan Priye Ainah University of Cape Town South Africa Pasi Airikka Metso Finland Tarek Aissa University of Applied Science Germany Jonathan Aitken University of Sheffield United Kingdom Jiri Ajgl University of West Bohemia Czech Republic Ilyasse Aksikas Qatar University Qatar Mazen Alamir CNRS, University of Grenoble France Anders Albert Norwegian University of Technology Norway Pedro Albertos UPV Spain Carlos Aldana Universidad Politécnica de Cataluña Spain Mohammad Aldeen The University of Melbourne Australia Ibrahim Aljamaan University of Calgary Canada Andrew Alleyne University of Illinois United States Alejandro Alonso Universidad -

Sharing the Journey to a Brighter Future

Sharing the Journey to a Brighter Future 2017 ANNUAL REPORT Dear Friends in Christ: As we celebrate the gift of another year, we remember the many blessings God bestowed upon us and our families. We are also grateful for the countless lives that were bettered in 2017 thanks to the work of Catholic Charities, its vital supporters and from so many others in our Archdiocese. This year’s annual report, whose theme is “Sharing the Journey to a Brighter Future,” helps us to recall the ways in which Catholic Charities accompanied our sisters and brothers in need over the past year. And how appropriate that the theme reflects the Our Mission: Inspired by the Gospel mandates to love, serve and invitation of our Holy Father to be present with and for those in our midst who are teach, Catholic Charities provides care and services to improve newly-arrived, fleeing difficult circumstances in their homelands, or who are simply the lives of Marylanders in need. in need of basic necessities to overcome life’s obstacles. The programs of Catholic Charities provide a welcome hand to the newly-arrived. Our Vision: Cherishing the Divine within, we are committed to The Esperanza Center offers much-needed services to immigrants seeking help with issues a Maryland where each person has the opportunity to reach his ranging from legal support to English classes and access to healthcare. And the adoptions or her God-given potential. program of Catholic Charities helps bring together children in need of love and security with parents seeking to share God’s unending love. -

Manchester Forum in Linguistics 2018

Manchester Forum in Linguistics A conference for early career researchers in linguistics University of Manchester 26-27 April 2018 www.mfilconf.co.uk [email protected] The 2018 Manchester Forum in Linguistics is supported by the University of Manchester’s Department of Linguistics and English Language, artsmethods@manchester, the Linguistics Association of Great Britain and the Arts and Humanities Research Council. i Contents General information ..................................... 1 Programme ......................................... 2 Plenary speakers ....................................... 4 The principle of no synonymy in language change: The facts and the fiction, Lauren Fonteyn . 5 The highs and lows of social life: What studies of intonation can teach sociolinguists, Anna Jespersen ....................................... 7 The emergence of word order: From improvisation to conventions in the manual and vocal modality, Marieke Schouwstra ............................ 8 Non-adult questions in child language: Manipulating bias and Questions Under Discussion, Rebecca Woods ................................... 9 Talks ............................................. 11 Emerging self-identities and emotions: A qualitative case study of ten Saudi students in the United Kingdom, Oun Almesaar ........................... 12 The expression of attributive possession in Najdi Arabic and English, Eisa Alrasheedi . 13 Of mice and men: Harvesting the fields of animal communication and cognition for cognitive linguistics, Jenny Amphaeris ............................ -

De Nakomelingen Van Elsken Joosten Aerts

een genealogieonline publicatie De nakomelingen van Elsken Joosten Aerts door Louk Box 6 augustus 2021 De nakomelingen van Elsken Joosten Aerts Louk Box De nakomelingen van Elsken Joosten Aerts Generatie 1 1. Elsken Joosten Aerts, dochter van Joost Aerts, is geboren rond 1605 in Strijp, Noord-Brabant, Nederland. Zij is voor een levensbeschrijving en haar afstamming zie noot van beroep. Zij is getrouwd voor 1635 met Aert Jansen Smits Fabri, zoon van Jan de Smit. Hij is geboren rond 1600 in Hapert, Noord-Brabant, Nederland. Hij is smid van beroep. Aert Jansen Smits is overleden en is begraven op 16 oktober 1687 in Hapert, Noord-Brabant, Nederland. Zij kregen 9 kinderen: Joost Aertsen Smets Fabri, volg 2. Catalijn Aertsdr Jansen Fabri, volg 3. Abraham Aerts Fabri, volg 4. Jacob Aertsen Fabri, volg 5. Maria Aerts Fabri, volg 6. Isaak Aerts Fabri, volg 7. Jenneken Aertsdr Fabri, volg 8. Henrixken Aertsdr Fabri, volg 9. Aleken Aertsdr Fabri, volg 10. Elsken Joosten is overleden op 6 maart 1696 in Hapert, Noord-Brabant, Nederland. Generatie 2 2. Joost Aertsen Smets Fabri, zoon van Aert Jansen Smits Fabri en Elsken Joosten Aerts, is geboren rond 1630. Hij is smid van beroep. Hij is getrouwd (1) rond 1658 met Mayke Jans Bullens, dochter van Jan Claessen Bullens. Zij is geboren voor 1640 in Oostelbeers, Noord-Brabant, Nederland. Mayke Jans is overleden op 1 april 1676 in Hoogeloon, Noord-Brabant, Nederland. Zij kregen 6 kinderen: Maria Joosten Fabri, volg 11. Jenneke Joosten Fabri, volg 12. Jan Joosten Fabri, volg 13. Hendrik Joosten Fabri, volg 14. Catharina Joosten Fabri, volg 15. -

Belgian Refugees, 1914-1920

Belgian Refugees, 1914‐1920 Govern Register Municip ment Relationshi Marital No. al No. No. Surname Forename p Occupation Gender Age Status Belgian Address Arrival date Address on arrival Removal Date Address removed to Remarks 1 1 Embrechts Karl F Farmer M 26 M Wespelaer Great Eastern Hotel, 100 Duke St, Glasgow Crieff 2 1 Embrechts Elizabeth W F 25 M Wespelaer Great Eastern Hotel, 100 Duke St, Glasgow Crieff 3 1 Embrechts Larent Alfons, Joseph S M 4 S Wespelaer Great Eastern Hotel, 100 Duke St, Glasgow Crieff 4 2 Van der Heyden Henri F Stoker M 50 M Lindenweg, Blaubit, Louvain Great Eastern Hotel, 100 Duke St, Glasgow SalvationArmy, High Street, Glasgow 5 2 Van der Heyden Rosalie Antonie W F 49 M Lindenweg, Blaubit, Louvain Great Eastern Hotel, 100 Duke St, Glasgow SalvationArmy, High Street, Glasgow 6 2 Van der Heyden Sidonia Marie D F 18 S Lindenweg, Blaubit, Louvain Great Eastern Hotel, 100 Duke St, Glasgow SalvationArmy, High Street, Glasgow 7 2 Van der Heyden Clementina Catherina D F 16 S Lindenweg, Blaubit, Louvain Great Eastern Hotel, 100 Duke St, Glasgow SalvationArmy, High Street, Glasgow 8 2 Van der Heyden Stephenia Ludovica D F 13 S Lindenweg, Blaubit, Louvain Great Eastern Hotel, 100 Duke St, Glasgow SalvationArmy, High Street, Glasgow 9 3 Jacobs Pierre Francois Journalist M 39 S 33 Montague Solur Noir, Malines Great Eastern Hotel, 100 Duke St, Glasgow Left for London for Military 10 4 Heesteers Paul Labourer M 26 S 16 Selis Rue, Malines Great Eastern Hotel, 100 Duke St, Glasgow Service 11 5 Van der Plas Emile F Foreman -

2011 EUI Publications Directory (October 2009-December 2010)

QM-AH-11-001-EN-C European University Institute Via dei Roccettini, 9 I-50014 San Domenico di Fiesole - Italy www.eui.eu [email protected] December 2010 December ‒ October 2009 The European Commission supports the EUI through the European Publications: Academic Union budget. This publication reflects the views only of the author(s), and the Commission cannot be held responsible for any use which may be made of the information contained therein. Academic © European University Institute, 2011 Publications doi:10.2870/25594 October 2009 - December 2010 ISBN-13: 978-92-9084-060-2 ISSN: 1977-1193 EUI Academic Publications: October 2009 – December 2010 Published in April 2011 by the European University Institute The Directory is a non-commercial publication prepared for the purpose of highlighting the academic output of the European University Institute. It is distributed free of charge. All cover-art and abstracts are the property of the copyright holders. Front cover photo, © European University Institute, 2011 CONTENTS Foreword 5 Introduction 6 Books 7 Theses 143 Articles 161 Contributions to Books 207 Working Papers 241 Research Reports 277 Other Series 289 Index of Authors and Editors 295 Academic Publications at the EUI and Cadmus 313 FOREWORD I am proud and pleased to introduce the work of all those who have contributed by their publications to this third directory of the academic publications of the EUI and its members. Its size does not pay full justice to the 133 books, to the 192 doctoral theses, and to the hundreds of articles published in the best national and international journals in the past fifteen months (October 2009‒December 2010). -

Comprehensive Modeling of Agrochemicals Biodegradation in Soil a Multidisciplinary Approach to Make Informed Choices to Protect Human Health and the Environment

Comprehensive modeling of agrochemicals biodegradation in soil A multidisciplinary approach to make informed choices to protect human health and the environment Daniele la Cecilia School of Civil Engineering Faculty of Engineering The University of Sydney 2019 A thesis submitted in fulfilment of the requirements for the degree of Doctor of Philosophy Supervisor: Ass. Prof. Federico Maggi Auxiliary Supervisor: Prof. Chengwang Lei Abstract Numerical models are relied upon by risk assessors to predict the dynamics of potentially haz- ardous pesticides in soil. Those models may account for fundamental processes affecting pes- ticide dynamics, such as environmental and edaphic conditions, water flow, degradation, and sorption. However, those models lack the ability to account for complex biogeochemical and ecological feedbacks, and thus create challenges in achieving robust predictions. In particular, no attention has been paid on the coupled mechanistic description of microbial dynamics and soil organic matter cycling and the implications on agrochemicals biodegradation and soil and groundwater quality. This thesis aims to provide this description by developing a comprehen- sive framework through a multidisciplinary approach. Microbiological regulation of pesticide dynamics was investigated by coupling theoretical and numerical approaches with experiments carried out in our environmental laboratory or sourced from the literature. We propose the use of reaction networks to highlight the possibly multiple pesticide degradation pathways and the feedbacks with macronutrient cycles. Biochemically-similar pathways are mediated by a spe- cific microbial functional group, which represents the microbial community carrying out par- ticular functions; these functions are biodegradation of pesticides and metabolism of carbon-, nitrogen-, and phosphorus-containing molecules. We describe biochemical reactions by means of Michaelis-Menten-Monod (MMM) kinetics. -

Growth Related Material Properties of Hydrogenated Amorphous Silicon

Growth related material properties of hydrogenated amorphous silicon Citation for published version (APA): Smets, A. H. M. (2002). Growth related material properties of hydrogenated amorphous silicon. Technische Universiteit Eindhoven. https://doi.org/10.6100/IR556557 DOI: 10.6100/IR556557 Document status and date: Published: 01/01/2002 Document Version: Publisher’s PDF, also known as Version of Record (includes final page, issue and volume numbers) Please check the document version of this publication: • A submitted manuscript is the version of the article upon submission and before peer-review. There can be important differences between the submitted version and the official published version of record. People interested in the research are advised to contact the author for the final version of the publication, or visit the DOI to the publisher's website. • The final author version and the galley proof are versions of the publication after peer review. • The final published version features the final layout of the paper including the volume, issue and page numbers. Link to publication General rights Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain • You may freely distribute the URL identifying the publication in the public portal.