Predictive Performance of Tree-Based Models

Recommended publications

Hitlers GP in England.Pdf

HITLER’S GRAND PRIX IN ENGLAND: Donington 1937 and 1938. Christopher Hilton. Foreword by Tom Wheatcroft. Haynes Publishing. Contents: Introduction and acknowledgements; Foreword by Tom Wheatcroft; 1. From a distance; 2. Friends - and enemies; 3. The master’s last win; 4. Life - and death; 5. Each dangerous day; 6. Crisis; 7. High noon; 8. The day before yesterday; Notes; Images. Introduction and acknowledgements: POLITICS AND SPORT are by definition incompatible, and they're combustible when mixed. The 1930s proved that: the Winter Olympics in Germany in 1936, when the President of the International Olympic Committee threatened to cancel the Games unless the anti-semitic posters were all taken down now, whatever Adolf Hitler decrees; the 1936 Summer Games in Berlin and Hitler's look of utter disgust when Jesse Owens, a negro, won the 100 metres; the World Heavyweight title fight in 1938 between Joe Louis, a negro, and Germany's Max Schmeling which carried racial undertones and overtones. The fight lasted 2 minutes 4 seconds, and in that time Louis knocked Schmeling down four times. They say that some of Schmeling's teeth were found embedded in Louis's glove... Motor racing, a dangerous but genteel activity in the 1920s and early 1930s, was touched by this, too, and touched hard. The combustible mixture produced two Grand Prix races at Donington Park, in 1937 and 1938, which were just as dramatic, just as sinister and just as full of foreboding. This is the full story of those races.

When the Engines Echoed Around Bremgarten

Bern, 22 August 2018. PRESS RELEASE. Temporary exhibition, Grand Prix Suisse 1934–54 – racing fever in Bern, 23 August 2018 to 22 April 2019. Bern and the need for speed: when the engines echoed around Bremgarten. Between 1934 and 1954, the Swiss Grand Prix, at the time Switzerland’s biggest sporting event, turned Bern into a showcase for world motorsport. The captivating travelling circus that is international motor racing arrived in the Swiss capital every summer, leading to the city’s first traffic jams as enthusiasts from Switzerland and abroad – over 120,000 of them at the event’s peak in 1948 – flocked to the Bremgartenwald circuit. The Bernisches Historisches Museum’s new exhibition, Grand Prix Suisse 1934–54 – racing fever in Bern, opens on 23 August 2018, and will place this historic event in the context of its technological, social and economic impact on Bern and Switzerland as a whole. For a few days each summer between 1934 and 1939, then again from 1947 to 1954, Bern became the centre of world motorsport. The race, run on the Bremgartenwald circuit, was considered one of motorsport’s great classic events, along with those at Monte Carlo, Silverstone and the Nürburgring. Car races were held in a range of different categories, and from 1950 onwards, the main race was part of the newly created world championship series, known today as the Formula 1 World Championship. The circuit also hosted various classes of motorcycle racing. The Swiss Grand Prix – Swiss racing history. “The exhibition will examine the complex significance of the Swiss Grand Prix for Bern and for Switzerland.

The Chequered Flag

THE CHEQUERED March 2016 Issue 1 FLAG F101 MR322G £100 MR191 £295 1985 British Lewis Hamilton Truck Grand signed Formula 1 Prix Silverstone photo, our choice programme. Signed inside by two-time Moto GP World Champion Barry Sheene who later turned to Truck Racing, plus tickets MR225 £295 Pedro Rodriguez De La Vega signed ticket MR273 £100 Patrick Head, Adrian Newey, and Ross Brawn signed 2010 Sixty Years of Formula One Silverstone cover, they were all engineers MR322F £150 1987 Truck Prix signed official MR238 £350 Brands Hatch Graham Hill signed 4 x 6 photo programme. mounted onto card Signed inside by Rod Chapman (7x European Truck Champ) Barry Sheene (2x Moto GP Champ) Divina Galica (F1), Barry Lee (4x Truck World Champ), plus tickets MR117A £175 01303 278137 Michael EMAIL: [email protected] Schumacher signed photo, our choice Buckingham Covers, Warren House, Shearway Road, Folkestone, Kent CT19 4BF 1 Tel 01303 278137 Fax 01303 279429 Email [email protected] SIGNED SILVERSTONE 2010 - 60 YEARS OF F1 Occasionally going round fairs you would find an odd Silverstone Motor Racing cover with a great signature on, but never more than one or two and always hard to find. They were only ever on sale at the circuit, and were sold to raise funds for things going on in Silverstone Village. Being sold on the circuit gave them access to some very hard to find signatures, as you can see from this initial selection. MR261 £30 MR262 £25 MR77C £45 Father and son drivers Sir Jackie Jody Scheckter, South African Damon Hill, British Racing Driver, and Paul Stewart.

Video Name Track Track Location Date Year DVD # Classics #4001

Columns: Video Name, Track, Track Location, Date, Year, DVD #
Classics #4001 Watkins Glen Watkins Glen, NY D-0001
Victory Circle #4012, WG 1951 Watkins Glen Watkins Glen, NY D-0002
1959 Sports Car Grand Prix Weekend 1959 D-0003
A Gullwing at Twilight 1959 D-0004
At the IMRRC The Legacy of Briggs Cunningham Jr. 1959 D-0005
Legendary Bill Milliken talks about "Butterball" Nov 6, 2004 1959 D-0006
50 Years of Formula 1 On-Board 1959 D-0007
WG: The Street Years Watkins Glen Watkins Glen, NY 1948 D-0008
25 Years at Speed: The Watkins Glen Story Watkins Glen Watkins Glen, NY 1972 D-0009
Saratoga Automobile Museum An Evening with Carroll Shelby D-0010
WG 50th Anniversary, Allard Reunion Watkins Glen, NY D-0011
Saturday Afternoon at IMRRC w/ Denise McCluggage Watkins Glen Watkins Glen October 1, 2005 2005 D-0012
Watkins Glen Grand Prix Festival Watkins Glen 2005 D-0013
1952 Watkins Glen Grand Prix Weekend Watkins Glen 1952 D-0014
1951-54 Watkins Glen Grand Prix Weekend Watkins Glen Watkins Glen 1951-54 D-0015
Watkins Glen Grand Prix Weekend 1952 Watkins Glen Watkins Glen 1952 D-0016
Ralph E. Miller Collection Watkins Glen Grand Prix 1949 Watkins Glen 1949 D-0017
Saturday Afternoon at the IMRRC, Lost Race Circuits Watkins Glen Watkins Glen 2006 D-0018
2005 The Legends Speak Formula One past present & future 2005 D-0019
2005 Concours d'Elegance 2005 D-0020
2005 Watkins Glen Grand Prix Festival, Smalleys Garage 2005 D-0021
2005 US Vintage Grand Prix of Watkins Glen Q&A w/ Vic Elford 2005 D-0022
IMRRC proudly recognizes James Scaptura Watkins Glen 2005 D-0023
Saturday

Press Release

Press Release, OCTOBER 06, 2013. Podium lock out for Renault power in Korean Grand Prix. Sebastian Vettel wins Korean Grand Prix for Infiniti Red Bull Racing-Renault, four seconds ahead of Kimi Raikkonen. Lotus F1 Team’s Kimi Raikkonen and Romain Grosjean finish second and third to make podium 100% Renault powered. Third all-Renault-powered podium this season. Vettel has led 209 of the past 213 racing laps. Vettel’s win gives him a mathematical chance to seal championship in Japan. Renault-powered drivers locked out the podium in today’s Korean Grand Prix, with Sebastian Vettel (Infiniti Red Bull Racing) winning the chaotic race from Lotus F1 Team’s Kimi Raikkonen and Romain Grosjean. The perfect podium result is the third time this season that the podium has been 100% Renault-powered.* Vettel – whose pole position yesterday took Renault’s total of F1 poles to 208, equal with Ferrari’s record – opened up a comfortable lead at the start ahead of Grosjean, who had jumped ahead of Hamilton on the first corner. The pair built up a cushion of several seconds over the battles behind and were able to pit and rejoin in formation. Vettel remained in front despite two different safety cars but Raikkonen got the jump on Grosjean before the second safety car period. The Finn had a standout race from P9 on the grid, overtaking Rosberg, Hamilton, Alonso, Hulkenberg and finally his teammate to score his second consecutive podium. Mark Webber started from P13 as a result of a grid penalty but quickly found himself in the top ten racing with Alonso and Raikkonen.

Spin Off Feb 11

NOTTINGHAM SPORTS CAR CLUB, February 2011. CLUB WEBSITE: www.gosprinting.co.uk. Don’t forget to visit the Club Web Site. It’s full of useful information, from Club events and dates to results, Championship positions, downloadable regs and membership forms and details. If you’ve got something that could be useful to other NSCC Club members, then why not advertise it on the Web site. For further details contact Cliff Mould on 0114 2864135 or email [email protected]. SPIN OFF ARTICLES: Breaking news, adverts, for sale items, letters, views and race & event write ups should be sent to the Editor. Copy date for the next Spin Off: 14th March 2010. Send to: Paul Marvin, 4 Marriott Drive, Kibworth Harcourt, Leicester LE8 0JX. Mobile: 07715 353440 or Email: [email protected]. IN THIS ISSUE: Chairmans Chatter; Editors Mutterings; Classic Corner incorporating Memorable moments; Club Website; Proposed 2011 NSCC Speed Championship Calendar; Invited Event dates for 2011; Major Motor Sport Event dates for 2011; Event Contacts List; Trailer FOR SALE; Membership for 2011; Curborough Lives Again!; Winter Paddock dates; Marshals Update; Bernard Hunter (in memory of); Advert FOR SALE; Historic pages from “The Bulletin” Winter 1966; MSA News; Official & Committee contacts. Acknowledgement: The artwork on the front cover is re-printed with the kind permission of the well known motorsport cartoonist Jim Bamber. Jim has very kindly allowed me to use his illustrations. For a sneak preview why not visit his web site www.jimbamber.co.uk. Chairman's Chatter: A couple of apologies to start with.

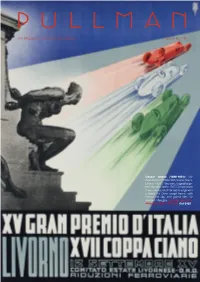

The Magazine of the Pullman Gallery Issue No. 60

The Magazine of the Pullman Gallery, Issue No. 60. Cesare Gobbo (1899-1981): ‘XV Gran Premio D’Italia XVII Coppa Ciano, Livorno 1937’. Very rare, original large-format poster dated 1937. Conservation linen mounted and framed to edge with a black Art Deco swept frame, with copper-leaf slip, and glazed with UV resistant Plexiglas. Overall size: 60 x 44 inches (153 x 112 cm). Ref 6462. Playing Ketchup: An extremely desirable mid-century novelty ice bucket in the form of a tomato, the nickel plated body with removable lid complete with realistic leaves and stalk, revealing the original rose-gold ‘mercury’ glass bowl, which keeps the ice from melting too quickly. Stamped THERMID PARIS, MADE IN FRANCE. French, circa 1950s. Ref 6503. Height: 9 inches (23 cm), diameter: 8 inches (20 cm). The Pullman Gallery specializes in objets de luxe dating from 1880-1950. Our gallery in King Street, St. James’s next to Christie’s and our appointment-only studios near Chelsea Bridge, houses London’s finest collection of rare Art Deco cocktail shakers and luxury period accessories, sculpture, original posters and paintings relating to powered transport, as well as automobile bronzes, trophies, fine scale racing car models, early tinplate toys, vintage car mascots, Art Deco furniture, winter sports-related art and objects and an extensive collection of antique Louis Vuitton and Hermès luggage and accessories. 14 King Street, St. James’s, London SW1Y 6QU. Tel: +44 (0)20 7930 9595. [email protected] www.pullmangallery.com

Racing Factbook Circuits

Racing Circuits Factbook. Rob Semmeling. CONTENTS: Introduction; First; Oldest; Newest; Ovals & Bankings; Fastest; Longest; Shortest; Width; Corners; Elevation Change; Most; Location; Eight-Shaped Circuits; Street Circuits; Airfield Circuits; Dedicated Circuits; Longest Straightaways; Formula 1 Circuits; Formula 1 Circuits Fast Facts; MotoGP Circuits; IndyCar Series Circuits; IMSA SportsCar Championship Circuits; World Circuits Survey. Copyright © Rob Semmeling 2010-2016 / all rights reserved. www.wegcircuits.nl. Cover Photography © Raphaël Belly. Introduction: The Racing Circuits Factbook is a collection of various facts and figures about motor racing circuits worldwide. I believe it is the most comprehensive and accurate you will find anywhere. However, although I have tried to make sure the information presented here is as correct and accurate as possible, some reservation is always necessary. Research is continuously progressing and may lead to new findings. Website: In addition to the Racing Circuits Factbook file you are viewing, my website www.wegcircuits.nl offers several further downloadable pdf-files: the Rennen! Races! Vitesse! pdf details over 700 racing circuits in the Netherlands, Belgium, Germany and Austria, and also contains notes on Luxembourg and Switzerland. The American Road Courses pdf-document lists nearly 160 road courses of past and present in the United States and Canada. These files are the most comprehensive and accurate sources for racing circuits in said countries. My website also lists nearly 5000 dates of motorcycle road races in the Netherlands, Belgium, Germany, Austria, Luxembourg and Switzerland, allowing you to see exactly when many of the motorcycle circuits listed in the Rennen! Races! Vitesse! document were used.

ACES WILD ACES WILD the Story of the British Grand Prix the STORY of the Peter Miller

ACES WILD: The Story of the British Grand Prix. Peter Miller. Motor racing is one of the most exacting and dangerous sports in the world today. And Grand Prix racing for Formula 1 single-seater cars is the toughest of them all. The ultimate ambition of every racing driver since 1950, when the competition was first introduced, has been to be crowned as 'World Champion'. In this, his fourth book, author Peter Miller looks into the background of just one of the annual qualifying rounds, the British Grand Prix, which go to make up the elusive title. Although by no means the oldest motor race on the English sporting calendar, the British Grand Prix has become recognised as an epic and invariably dramatic event, since its inception at Silverstone, Northants, on October 2nd, 1948. Since gaining World Championship status in May, 1950 (it was in fact the very first event in the Drivers' Championships of the World), this race has captured the interest not only of racing enthusiasts, but also of the man in the street. It has been said that the supreme test of the courage, skill and virtuosity of a Grand Prix driver is to win the Monaco Grand Prix through the narrow streets of Monte Carlo and the German Grand Prix at the notorious Nürburgring. Both of these gruelling circuits certainly stretch a driver's reflexes to the limit and the winner of these classic events is assured of his rightful place in racing history.

Audi Win Le Mans

Classic and Competition Car, July 2013, Issue 34. Cholmondeley Pageant of Power; Blancpain Silverstone; La Vie en Bleu; British GT Snetterton. Contents: News; Sir Chris Hoy's Radical racing debut; Pietro Fittipaldi F4 Snetterton; British Hill climb Championship Shelsley Walsh; GT Cup Brands Hatch; La Vie en Bleu Prescott; Archive Photo of the month; Blancpain Endurance Silverstone; Four Ashes Car meeting; British GT Championship Snetterton; BRDC Formula 4 Snetterton; Shelsley Walsh Breakfast club; Cholmondeley Pageant of Power; VSCC Cadwell Park; Classic car of the month - Austin A90 Atlantic convertible; Corvette Club UK 60th celebration Coventry Transport museum. Front Cover: Allan Rennie Flies at Cholmondeley Pageant of Power in his F1 Lotus - Martin 35 © Simon Wright; Blanchemain/Beaubelique/Goueslard Ferrari 458 Italia Blancpain Silverstone © Janet Wright; Mike Ward Bugatti T13 La Vie en Bleu Prescott © Simon Wright; Mark Poole starts to slide his Aston Martin British GT Snetterton © Mick Herring. All content is copyright classicandcompetitioncar.com unless otherwise stated. Our Team: Simon Wright - Editor. Simon has been photographing and reporting on motor races for many years. Served an engineering apprenticeship many years ago. Big fan of the Porsche 917. Pete Austin. Pete is the man for Historic racing, with an extensive archive of black and white images covering the last few decades of motorsport in Britain. Very keen on BRM. Mick Herring. Mick's first love is GT racing, including Historics, especially the Lola T70. Has an extensive

Correnti Della Storia

CORRENTI DELLA STORIA: ALBERTO ASCARI (1918–1955). Last month’s essay (February, 2017), dealt with the life and work of Enzo Ferrari, who was both a race-car driver and owner/builder of high performance automobiles. He also founded and ran the racing team of Scuderia Ferrari. This month’s essay is about one of that team’s most successful and famous drivers, Alberto Ascari. He became a very close friend of Ferrari, so much so that after his tragic death in a racing accident, Ferrari was terribly distraught and decided from then on to avoid making close personal friendships with his drivers. Alberto was the son of one of Italy’s great pre-war drivers, Antonio Ascari. He went on to become one of Formula One racing’s most dominant and best-loved champions. He was known for his careful precision and finely-judged accuracy that made him one of the safest drivers in a very dangerous era of auto racing. He was also notoriously superstitious and took great pains to avoid tempting fate. But his unexplained fatal accident, at exactly the same age as his father’s (36), on the same day of the month (the 26th) and in eerily similar circumstances, remains one of Formula One racing’s great unsolved mysteries (see below). During his short nine-year racing career, Alberto Ascari won 47 international races in 56 starts. His two Formula One World Championships attest to his ranking as one of the greatest drivers in European history. Alberto was born in Milan, Italy on July 13, 1918.

Spanish GP Race Stewards Biographies

Spanish GP Race Stewards Biographies. PAUL GUTJAHR, PRESIDENT OF THE FIA HILL CLIMB COMMISSION, BOARD MEMBER AND PRESIDENT OF AUTO SPORT SUISSE SARL: Paul Gutjahr started racing in the late 1960s with Alfa Romeo, Lancia, Lotus and Porsche, then March in Formula 3. In the early ‘70s he became President of the Automobile Club Berne and organised numerous events. He acted as President of the organising committee of the Swiss GP at Dijon from 1980 to 1982. From 1980 to 2005 he acted as President of the Commission Sportive Nationale de l’Automobile Club de Suisse, and in 2005 he became President and board member of the Auto Sport Suisse motor sports club. Gutjahr is President of the Alliance of European Hill Climb Organisers and has been steward at various high-level international competitions. He was the Formula 3000 Sporting Commissioner and has been a Formula One steward since 1995. ROGER PEART, PRESIDENT, FIA CIRCUITS COMMISSION; PRESIDENT OF AUTORITE SPORTIVE NATIONALE DU CANADA (ASN): Roger Peart is a civil engineer by training and designed the Gilles Villeneuve circuit, home of the Canadian Grand Prix since 1978. In the years 1949-1953 he gained his first experience of motor sport, working as a racing mechanic while still at school in the UK. By 1960 he had become a competitor. Until 1963 he drove in the Canadian National Rally Championship, before switching to racing from 1964 to 1976. In 1967 Peart became involved in the organisation of Canadian motor sport and was instrumental in getting the Circuit Gilles Villeneuve onto the F1 calendar. Since 1991 Peart has been President of ASN Canada FIA and, since 1999, President of the FIA Circuits Commission.