A Keyword-Based Query Processing Method for Datasets with Schemas

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

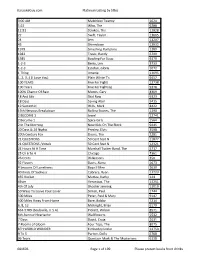

Song Title Artist Disc

Song Title Artist Disc # # 1 CRUSH GARBAGE 6639 # 9 DREAM LENNON, JOHN 6013 #1 NELLY 9406 (LOVE IS LIKE A) HEATWAVE MARTHA & THE VANDELLAS 7235 (YOU WANT TO) MAKE A MEMORY BON JOVI 14106 (YOU'VE GOT) THE MAGIC TOUCH PLATTERS, THE 1255 '03 BONNIE & CLYDE JAY-Z & BEYONCE 10971 1 THING AMERIE 13133 1, 2, 3 RED LIGHT 1910 FRUITGUM CO. 10237 1,2 STEP CIARA & MISSY ELLIOTT 12989 10 OUT OF 10 LOUCHIE LOU 7399 10 SECONDS DOWN SUGAR RAY 6418 10,000 PROMISES BACKSTREET BOYS 3350 100 YEARS FIVE FOR FIGHTING 12772 100% PURE LOVE WATERS, CRYSTAL 9306 1000 OCEANS AMOS, TORRIE 6711 10TH AVE. FREEZEOUT SPRINGSTEEN, BRUCE 11813 10TH AVENUE FREEZE OUT SPRINGSTEEN, BRUCE 2632 12:51 STROKES, THE 12520 1-2-3 BARRY, LEN 8210 ESTEFAN, GLORIA 13 18 & LIFE SKID ROW 2633 1969 STEGALL, KEITH 6712 1979 SMASHING PUMPKINS 6713 1982 TRAVIS, RANDY 3386 1985 BOWLING FOR SOUP 12880 1999 PRINCE 6714 19TH NERVOUS BREAKDOWN ROLLING STONES, THE 1032 2 BECOME 1 SPICE GIRLS 1427 2 STEP DJ UNK 14155 20 GOOD REASONS THIRSTY MERC 14107 2001 INTRO ELVIS 1604 20TH CENTURY FOX DOORS, THE 6715 21 QUESTIONS 50 CENT & NATE DOGG 11730 24 JEM 13169 24 HOURS AT A TIME TUCKER, MARSHALL, B 2634 24/7 EDMONDS, KEVON 6820 25 MILES STARR, EDWIN 9488 25 OR 6 TO 4 CHICAGO 4735 26 MILES FOUR PREPS, THE 10102 26 CENTS WILKINSONS, THE 6821 29 NIGHTS LEIGH, DANNI 6822 3 LIBRAS PERFECT CIRCLE, A 6824 3 LITTLE PIGS GREEN JELLY 2429 3:00 A.M. -

Songs by Title

Karaoke Song Book Songs by Title Title Artist Title Artist #1 Nelly 18 And Life Skid Row #1 Crush Garbage 18 'til I Die Adams, Bryan #Dream Lennon, John 18 Yellow Roses Darin, Bobby (doo Wop) That Thing Parody 19 2000 Gorillaz (I Hate) Everything About You Three Days Grace 19 2000 Gorrilaz (I Would Do) Anything For Love Meatloaf 19 Somethin' Mark Wills (If You're Not In It For Love) I'm Outta Here Twain, Shania 19 Somethin' Wills, Mark (I'm Not Your) Steppin' Stone Monkees, The 19 SOMETHING WILLS,MARK (Now & Then) There's A Fool Such As I Presley, Elvis 192000 Gorillaz (Our Love) Don't Throw It All Away Andy Gibb 1969 Stegall, Keith (Sitting On The) Dock Of The Bay Redding, Otis 1979 Smashing Pumpkins (Theme From) The Monkees Monkees, The 1982 Randy Travis (you Drive Me) Crazy Britney Spears 1982 Travis, Randy (Your Love Has Lifted Me) Higher And Higher Coolidge, Rita 1985 BOWLING FOR SOUP 03 Bonnie & Clyde Jay Z & Beyonce 1985 Bowling For Soup 03 Bonnie & Clyde Jay Z & Beyonce Knowles 1985 BOWLING FOR SOUP '03 Bonnie & Clyde Jay Z & Beyonce Knowles 1985 Bowling For Soup 03 Bonnie And Clyde Jay Z & Beyonce 1999 Prince 1 2 3 Estefan, Gloria 1999 Prince & Revolution 1 Thing Amerie 1999 Wilkinsons, The 1, 2, 3, 4, Sumpin' New Coolio 19Th Nervous Breakdown Rolling Stones, The 1,2 STEP CIARA & M. ELLIOTT 2 Become 1 Jewel 10 Days Late Third Eye Blind 2 Become 1 Spice Girls 10 Min Sorry We've Stopped Taking Requests 2 Become 1 Spice Girls, The 10 Min The Karaoke Show Is Over 2 Become One SPICE GIRLS 10 Min Welcome To Karaoke Show 2 Faced Louise 10 Out Of 10 Louchie Lou 2 Find U Jewel 10 Rounds With Jose Cuervo Byrd, Tracy 2 For The Show Trooper 10 Seconds Down Sugar Ray 2 Legit 2 Quit Hammer, M.C. -

My Screentest, Or, I Coulda Been Debra Winger

MY SCREENTEST, OR, I COULD’VE BEEN DEBRA WINGER On a hot September afternoon in 1969, four months past my college graduation, I found myself dozing on a hand-me-down convertible sofa in pre-gay West Hollywood, Sweetzer Avenue, to be exact, a street on which many have begun their pursuit of the Hollywood dream. It was here, in a drab fifties apartment complex which looked more like Army base housing – no charming Spanish courtyard bungalow dripping in bougainvillea – that I found myself wasting away yet another afternoon. I had been an English major which meant I had given no particular thought to a “career.” I had spent the summer writing a series of unfinished short stories in varying states of inebriation. My ongoing fantasy was to become a latter-day Anais Nin crossed with Katherine Mansfield and a dash of Virginia Woolf. After all, I kept a journal. In truth, I had no idea what kind of a writer I really was. I had been a student all my life and the fact that I wasn’t going back to school in the ninth month of the year had thrown me into a vague state of confusion I was loath to call an identity crisis, let alone a depression. When the wall phone rang, I half-expected Lindsay, my roommate, to answer it until I remembered she was at UCLA in her senior seminar on Thackeray, so I dragged my lazy ass over to answer it. “Hey Lizzie. It’s Charlie.” Charlie didn’t wait for me to return the salutation. -

8123 Songs, 21 Days, 63.83 GB

Page 1 of 247 Music 8123 songs, 21 days, 63.83 GB Name Artist The A Team Ed Sheeran A-List (Radio Edit) XMIXR Sisqo feat. Waka Flocka Flame A.D.I.D.A.S. (Clean Edit) Killer Mike ft Big Boi Aaroma (Bonus Version) Pru About A Girl The Academy Is... About The Money (Radio Edit) XMIXR T.I. feat. Young Thug About The Money (Remix) (Radio Edit) XMIXR T.I. feat. Young Thug, Lil Wayne & Jeezy About Us [Pop Edit] Brooke Hogan ft. Paul Wall Absolute Zero (Radio Edit) XMIXR Stone Sour Absolutely (Story Of A Girl) Ninedays Absolution Calling (Radio Edit) XMIXR Incubus Acapella Karmin Acapella Kelis Acapella (Radio Edit) XMIXR Karmin Accidentally in Love Counting Crows According To You (Top 40 Edit) Orianthi Act Right (Promo Only Clean Edit) Yo Gotti Feat. Young Jeezy & YG Act Right (Radio Edit) XMIXR Yo Gotti ft Jeezy & YG Actin Crazy (Radio Edit) XMIXR Action Bronson Actin' Up (Clean) Wale & Meek Mill f./French Montana Actin' Up (Radio Edit) XMIXR Wale & Meek Mill ft French Montana Action Man Hafdís Huld Addicted Ace Young Addicted Enrique Iglsias Addicted Saving abel Addicted Simple Plan Addicted To Bass Puretone Addicted To Pain (Radio Edit) XMIXR Alter Bridge Addicted To You (Radio Edit) XMIXR Avicii Addiction Ryan Leslie Feat. Cassie & Fabolous Music Page 2 of 247 Name Artist Addresses (Radio Edit) XMIXR T.I. Adore You (Radio Edit) XMIXR Miley Cyrus Adorn Miguel Adorn Miguel Adorn (Radio Edit) XMIXR Miguel Adorn (Remix) Miguel f./Wiz Khalifa Adorn (Remix) (Radio Edit) XMIXR Miguel ft Wiz Khalifa Adrenaline (Radio Edit) XMIXR Shinedown Adrienne Calling, The Adult Swim (Radio Edit) XMIXR DJ Spinking feat. -

Ken Mcdonald Music

Compendium to ‘All the Best’: Lyrics and Stories of 20 songs. Ken McDonald. Songwriting is a curious thing. It happens in lots of different ways. Some songs just pop out quickly. Some start with a lyric or trigger words while others are built off a rhythm section or melody. If you are lucky the words and the melody come together. In most cases, for me, a good song requires a lot of thought to the structure, lyrics and melody. The arrangements in a recording are another challenge. There are lots of examples of great songs that did not ‘take off’ until the arrangements and singer got it right. Performing on stage or in a video is another dimension again. Some songs can take a very long time to work through. It seems that great recorded popular songs have a number of things: 1. Strong and interesting melody 2. Interesting lyrics 3. Catchy rhythm or beat 4. Excellent arrangements. The lead vocalist of course makes a big difference. Good harmonies are like gold. Sounds easy. It’s been an interesting ride. My son Fraser has done a great job building up our music web site www.kenmcdonaldmusic.com There are some excellent videos. If you want to contact me, my mobile is 0419664258 and email [email protected] Kyra, Leo, Ken at Coolum about 2007. Interesting people doing interesting things in interesting places. My family all loved songs, music and sang. Being the 6th son and having a younger sister was a luxury. Mum got worn out trying to get all the older boys to play the piano so we discussed the issue and she bought me a cheap guitar. -

Episode Transcript

The Hamilcast: A Hamilton Podcast Episode #97: I wanna talk about what I have learned, the hard-won wisdom I have earned // Part One Host: Gillian Pensavalle Guest: Chris Jackson, OG George Washington, Hamilton on Broadway Description: Ladies and gentlemen, Chris Jackson coulda been anywhere in the world tonight, but he’s here with me in New York City. Are you ready for Part One of my chat with him?? In this episode, CJack gets real about some of his past bad behavior during the early days of In The Heights, his experience being in the original Broadway company of Hamilton, the moment he thought he chose the wrong career path, and the mutual love, respect, and admiration flowing between the members of the Lin’s Cabinet. We go in on those #TequilaThoughts, with a torrential downpour as our soundtrack. Transcribed by: Cheryl Sebrell, Proofed by: Kathy Wille and Joan Crofton The Hamilcast‘s Transcribing Army Ok, so we are doing this . ___________________________________________________ [Intro: Hi, I’m Stage and Stage’s Lin-Manuel Miranda and you’re listening to The Hamilcast.] [Boots/Cut, laughter] [Intro music: Hamilton instrumental] G.PEN: Hello everyone, welcome back to The Hamilcast. It is me, Gillian. I am joined today by … Christopher Jackson? C.JACK: The unicorn. G.PEN: Is that true? CJack, you know, you could have been anywhere in the world tonight but you're here with me... C.JACK: We’re here… G.PEN: ... in New York City in the room where the podcast happens. C.JACK: That’s it. G.PEN: I appreciate that. -

NY Unofficial Archive V5.2 22062018 TW.Pdf

THE UNOFFICIAL ARCHIVE: NEIL YOUNG’S “UNRELEASED” SONGS ©Robert Broadfoot 2018 • [email protected] Version 5.2 -YT: 22 June 2018
CONTENTS
FOREWORD
A NOTE ON SOURCES
KEY
I. NEIL YOUNG SONGS NOT RELEASED ON OFFICIAL MEDIA
PART ONE THE CANADIAN YEARS
PART TWO THE AMERICAN YEARS
PART THREE EARLY COVERS AND INFLUENCES
II. NEIL YOUNG PERFORMING ON THE RELEASED MEDIA AND AT CONCERT APPEARANCES, OF OTHER ARTISTS
III. UNRELEASED NEIL YOUNG ALBUM PROJECTS
PART ONE DOCUMENTED ALBUM PROJECTS
PART TWO SPECULATION -

Karaoke with a Message – August 16, 2019 – 8:30PM

Another Protest Song: Karaoke with a Message – August 16, 2019 – 8:30PM a project of Angel Nevarez and Valerie Tevere for SOMA Summer 2019 at La Morenita Canta Bar (Puente de la Morena 50, 11870 Ciudad de México, MX) karaoke provided by La Morenita Canta Bar songbook edited by Angel Nevarez and Valerie Tevere ( ) 18840 (Ghost) Riders In The Sky Johnny Cash 10274 (I Am Not A) Robot Marina & Diamonds 00005 (I Can't Get No) Satisfaction Rolling Stones 17636 (I Hate) Everything About You Three Days Grace 15910 (I Want To) Thank You Freddie Jackson 05545 (I'm Not Your) Steppin' Stone Monkees 06305 (It's) A Beautiful Mornin' Rascals 19116 (Just Like) Starting Over John Lennon 15128 (Keep Feeling) Fascination Human League 04132 (Reach Up For The) Sunrise Duran Duran 05241 (Sittin' On) The Dock Of The Bay Otis Redding 17305 (Taking My) Life Away Default 15437 (Who Says) You Can't Have It All Alan Jackson # 07630 18 'til I Die Bryan Adams 20759 1994 Jason Aldean 03370 1999 Prince 07147 2 Legit 2 Quit MC Hammer 18961 21 Guns Green Day 004-m 21st Century Digital Boy Bad Religion 08057 21 Questions 50 Cent & Nate Dogg 00714 24 Hours At A Time Marshall Tucker Band 01379 25 Or 6 To 4 Chicago 14375 3 Strange Days School Of Fish 08711 4 Minutes Madonna 08867 4 Minutes Madonna & Justin Timberlake 09981 4 Minutes Avant 18883 5 Miles To Empty Brownstone 13317 500 Miles Peter Paul & Mary 00082 59th Street Bridge Song Simon & Garfunkel 00384 9 To 5 Dolly Parton 08937 99 Luftballons Nena 03637 99 Problems Jay-Z 03855 99 Red Balloons Nena 22405 1-800-273-8255 -

© 2021 Andrew Gregory Page 1 of 13 RECORDINGS Hard Rock / Metal

Report covers the period of January 1st to Penny Knight Band - "Cost of Love" March 31st, 2021. The inadvertently (single) [fusion hard rock] Albany missed few before that time period, which were brought to my attention by fans, Remains Of Rage - "Remains Of Rage" bands & others, are listed at the [hardcore metal] Troy end,along with an End Note. Senior Living - "The Paintbox Lace" (2- track) [alternative grunge rock shoegaze] Albany Scavengers - "Anthropocene" [hardcore metal crust punk] Albany Thank you to Nippertown.com for being a partner with WEXT Radio in getting this report out to the people! Scum Couch - "Scum Couch | Tree Walker Split" [experimental noise rock] Albany RECORDINGS Somewhere In The Dark - "Headstone" (single track) Hard Rock / Metal / Punk [hard rock] Glenville BattleaXXX - "ADEQUATE" [clitter rock post-punk sasscore] Albany The Frozen Heads - "III" [psychedelic black doom metal post-punk] Albany Bendt - "January" (single) [alternative modern hard rock] Albany The Hauntings - "Reptile Dysfunction" [punk rock] Glens Falls Captain Vampire - "February Demos" [acoustic alternative metalcore emo post-hardcore punk] Albany The One They Fear - "Perservere" - "Metamorphosis" - "Is This Who We Are?" - "Ignite" (single tracks) Christopher Peifer - "Meet Me at the Bar" - "Something [hardcore metalcore hard rock] Albany to Believe In" (singles) [garage power pop punk rock] Albany/NYC The VaVa Voodoos - "Smash The Sun" (single track) [garage punk rock] Albany Dave Graham & The Disaster Plan - "Make A Scene" (single) [garage -

“My Logo Is Branded on Your Skin”: the Wu-Tang Clan, Authenticity, Black Masculinity, and the Rap Music Industry

“MY LOGO IS BRANDED ON YOUR SKIN”: THE WU-TANG CLAN, AUTHENTICITY, BLACK MASCULINITY, AND THE RAP MUSIC INDUSTRY By Ryan Alexander Huey A THESIS Submitted to Michigan State University in partial fulfillment of the requirements for the degree of History – Master of Arts 2014 ABSTRACT “MY LOGO IS BRANDED ON YOUR SKIN”: THE WU-TANG CLAN, AUTHENTICITY, BLACK MASCULINITY, AND THE RAP MUSIC INDUSTRY By Ryan Alexander Huey The rap group, the Wu-Tang Clan came out of a turbulent period of time in both the rap music industry and American society in the early 1990s. Lawsuits over sampling in rap music forced producers to rethink the ways they made music while crack cocaine and the War on Drugs wreaked havoc in urban communities across the nation, as it did in the Clan’s home borough of Staten Island, New York. Before the formation of the group, Gary “GZA” Grice, had managed to land a recording contract as a solo artist, but his marketing was mismanaged and his career stagnated. He returned in 1992 as one of the nine member collective, who billed themselves as kung fu movie buffs melding low-fi, eerie productions with realistic raps about ghetto life. Drawing from the vibrant underground rap scene of New York City in the 1970s and 1980s, Brooklyn’s rich African American chess tradition, the teachings of the Five Percenters, and the cult following for Hong Kong action cinema, the Clan became a huge hit across the country. Each member fashioned a unique masculine identity for himself, bolstering their hardcore underground image while pushing the boundaries of acceptable expressions of manhood in rap music. -

Las Vegas PRIDE in Support of the It Gets Better Project and Film Your Story Between 10Am and 3Pm Friday Or Saturday Near the Registration Desk

TO Fabulous LAS VEGAS NEVADA OFFICIAL 2012 CAPI CONFERENCE GUIDE SCHEDULE | WORKSHOPS | EVENTS | ENTERTAINMENT | LASVEGASPRIDE.ORG 4 Contents 6 Welcome 8 Conference Schedule of Events 10 Conference Center Map 12 Workshop Schedule & Room Assignments (Groups A-D) 14 Workshop Schedule & Room Assignments (Groups E-I) Meet the Presenters 17 Kathy Alday 23 James Healey 17 Brenda Herman 23 Brandon Johnson 18 Anti-Defamation League 24 Jake Naylor 18 Jerime Black 24 Brian Rogers 18 Sylvain Bruni 24 Dori Shields 20 Dr. Becki Cohn-Vargas 26 Kelly Smith 20 Nick DiMartino 26 Ernie Yuen 23 Anna Dubrowski Workshop Details 29 21st Century Technology for 21st Century PRIDEs 29 In-Kind Sponsorships Q&A 29 It’s All About the Contract 30 Festival Logistics Management 30 Mix it UP! 30 NO PLACE FOR HATE® 30 Social Media – Tips for Marketing PRIDE 32 Not In Our School 32 Not Just Another Pretty Face 32 Planning a Conference 34 PRIDE Festivals from the Vendor’s Eye 34 Partner for Success 34 Thinking Outside the Box 36 Turning Volunteers into Catalyst for Change 36 Waivers, Releases & Cyberliability 36 Using Surveys to Grow Your Event 38 Using Technology for PRIDE LAS VEGAS PROUDLY SUPPORTS THE CONSOLIDATED ASSOCIATION OF PRIDE, INC. (CAPI) CONFERENCE Open to everything. And everyone. VisitLasVegas.com/GayTravel "077048.01_L46_LGBT_CAPI_Conference_Prog Trim: 5.5"x 8.5" Bleed: 5.75"x8.75" 6 Welcome Aloha! Welcome to beautiful Las Vegas, Nevada! On behalf of the Board of Directors of Southern Nevada Association of PRIDE, Inc. (SNAPI) I would like to welcome you to the 2012 CAPI Conference, “This is it”. -

Karaokeguy.Com Platinum Listing by Titles 3:00 AM Matchbox Twenty

KaraokeGuy.com Platinum Listing by titles 3:00 AM Matchbox Twenty 1624 5:15 Who, The 6288 12:51 Strokes, The 12828 22 Swift, Taylor 13665 24 Jem 13207 45 Shinedown 12832 1979 Smashing Pumpkins 1289 1982 Travis, Randy 6799 1985 Bowling For Soup 9379 1-2-3 Berry, Len 3733 1-2-3 Estefan, Gloria 1072 1 Thing Amerie 13095 1, 2, 3, 4 (I Love You) Plain White T's 9457 100 YEARS Five For Fighti 12738 100 Years Five For Fighting 9378 100% Chance Of Rain Morris, Gary 8309 18 And Life Skid Row 9323 18 Days Saving Abel 9415 19 Somethin' Wills, Mark 8422 19th Nervous Breakdown Rolling Stones, The 1390 2 BECOME 1 Jewel 12741 2 Become 1 Spice Girls 1564 2 In The Morning New Kids On The Block 9445 20 Days & 20 Nights Presley, Elvis 3598 20th Century Fox Doors, The 2281 21 QUESTIONS 50 Cent feat N 11877 21 QUESTIONS /Vocals 50 Cent feat N 12321 24 Hours At A Time Marshall Tucker Band, The 5717 25 Or 6 To 4 Chicago 7361 26 Cents Wilkinsons 350 32 Flavors Davis, Alana 1673 4 Seasons Of Loneliness Boyz II Men 2639 40 Kinds Of Sadness Cabrera, Ryan 13223 455 Rocket Mattea, Kathy 144 4Ever Veronicas, The 13251 4th Of July Shooter Jenning 13010 50 Ways To Leave Your Lover Simon, Paul 1744 500 Miles Peter, Paul & Mary 9147 500 Miles Away From Home Bare, Bobby 7214 6, 8, 12 McKnight, Brian 6179 634-5789 (Soulsville, U.S.A) Pickett, Wilson 8571 6th Avenue Heartache Wallflowers 1532 7 Days David, Craig 2031 7-Rooms of Gloom Four Tops, The 8075 8TH WORLD WONDER Kimberley Locke 12753 9 To 5 Parton, Dolly 6768 96 Tears Question Mark & The Mysterians 6338 081606 Page