Synergising Woodwind Improvisation with Bespoke Software Technology

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hermann NAEHRING: Wlodzimierz NAHORNY: NAIMA: Mari

This discography is automatically generated by The JazzOmat Database System written by Thomas Wagner For private use only! ------------------------------------------ Hermann NAEHRING: "Großstadtkinder" Hermann Naehring -perc,marimba,vib; Dietrich Petzold -v; Jens Naumilkat -c; Wolfgang Musick -b; Jannis Sotos -g,bouzouki; Stefan Dohanetz -d; Henry Osterloh -tymp; recorded 1985 in Berlin 24817 SCHLAGZEILEN 6.37 Amiga 856138 Hermann Naehring -perc,marimba,vib; Dietrich Petzold -v; Jens Naumilkat -c; Wolfgang Musick -b; Jannis Sotos -g,bouzouki; Stefan Dohanetz -d; recorded 1985 in Berlin 24818 SOUJA 7.02 --- Hermann Naehring -perc,marimba,vib; Dietrich Petzold -v; Jens Naumilkat -c; Wolfgang Musick -b; Jannis Sotos -g,bouzouki; Volker Schlott -fl; recorded 1985 in Berlin A) Orangenflip B) Pink-Punk Frosch ist krank C) Crash 24819 GROSSSTADTKINDER ((Orangenflip / Pink-Punk, Frosch ist krank / Crash)) 11.34 --- Hermann Naehring -perc,marimba,vib; Dietrich Petzold -v; Jens Naumilkat -c; Wolfgang Musick -b; Jannis Sotos -g,bouzouki; recorded 1985 in Berlin 24820 PHRYGIA 7.35 --- 24821 RIMBANA 4.05 --- 24822 CLIFFORD 2.53 --- ------------------------------------------ Wlodzimierz NAHORNY: "Heart" Wlodzimierz Nahorny -as,p; Jacek Ostaszewski -b; Sergiusz Perkowski -d; recorded November 1967 in Warsaw 34847 BALLAD OF TWO HEARTS 2.45 Muza XL-0452 34848 A MONTH OF GOODWILL 7.03 --- 34849 MUNIAK'S HEART 5.48 --- 34850 LEAKS 4.30 --- 34851 AT THE CASHIER 4.55 --- 34852 IT DEPENDS FOR WHOM 4.57 --- 34853 A PEDANT'S LETTER 5.00 --- 34854 ON A HIGH PEAK -

Svensk Jazzhistoria - En Översikt Norstedts 1985

Svensk jazzhistoria - en översikt Norstedts 1985. Av Erik Kjellberg Förord till den reviderade nätupplagan 2009 Denna bok är slutsåld sedan många år. Men intresset för jazzhi storia har ökat och litteraturen är numera mycket omfattande. Jazzens historia i Sverige har under de senaste 25 åren doku menterats på skivor och sysselsatt flera forskare, författare och musiker. Förutsättningen då jag skrev boken var min deltidsan ställning vid Svenskt visarkiv (1978-83) i den verksamhet som resulterade i arkivets Jazzavdelning. Jag hade förmånen att ta del av de intervjuer som vi gjorde i Gruppen för svensk jazzhisto ria och de noter, tidskrifter och skivor som vi samlade in till den nya avdelningen vid Svenskt visarkiv. Boken författades på ledi ga stunder. Varje tid ställer nya förväntningar på det som skildras. Det är det med viss tvekan som jag nu gör min text tillgänglig igen. Jag har justerat en del formuleringar och felaktigheter, och (den för åldrade) diskografin har utelämnats liksom även personregistret som ju hänvisar till boksidor. I övrigt är boken intakt. En diskus sion av jazzens öden och äventyr i Sverige fr.o.m. 1980-talet till idag finns alltså inte med. En sådan komplettering skulle kräva en ny bok! Stockholm i september 2009 Erik Kjellberg INLEDNING 1900-talets musik och musikkulturer skulle kunna liknas vid ett brokigt lapptäcke, sammansytt av olikstora bitar i de mest varie rade former, färger och mönster. Vi finner en rad musiksorter och musiktraditioner sida vid sida, men där finns också över-gångar och samspel mellan olika riktningar och tendenser. Det som bru kar kallas konstmusik, populärmusik, folkmusik och jazzmusik, uppvisar var och en sin speciella färgskala och sina skiftningar, även om gränserna mellan dem inte alltid är så skarpa och enty diga som man kanske förleds att tro. -

Ib Glindemann 1934-1959

Biografi Ib Glindemann 1934-59 Kapitel 1: Denne beretning dækker hovedsageligt Ib Glindemanns indledende jazzbetonede karriere i 1950’erne, idet hans senere orkesteraktivitet er beskrevet i booklets til adskillige CD-udgivelser. I en alder, hvor de fleste som folkepensionister læner sig tilbage i en nydende og beskuende rolle, dannede Ib Glindemann i 1994 atter eget permanent big band og har holdt sig inde i rampelyset med utrolig iver og uformindsket musikglæde - indtil manglen på engagementer i efteråret 2012 tvang IG til at opløse orkestret. Glindemann var allerede årtier tilbage kendt for sine markante standpunkter, mange vil kalde dem kontroversielle, og disse fremstår ikke mere moderat efter de mange år – uanset at tillægsordene og de skarpe meninger ofte i højere grad forårsager modsætningsforhold end de åbner døre. Grov, stædig, enspænder, firkantet, naiv og ulidelig perfektionist – lignende karakteristik finder man stort set i alle IG-karrierens interviews og avisomtaler, men Ib Glindemann viger stadig ikke fra sin helt egen opfattelse, og hellere end at renoncere en tøddel derpå, deler han veloplagt ud af sine synspunkter. Lejlighedsvis ’ledes Ib videre i programmet’, når hans søster kort og godt gør ende på den rige argumentation: ”Hold nu mund, Ib, og spil!” – og det gør han så. I det efterfølgende undgår man imidlertid ikke at bemærke, hvilken helt usædvanlig jernvilje, indlevelse og vedholdenhed, som Ib Glindemann, selv som ganske ung mand, har lagt i sine bestræbelser for jazzen under de første big band år frem til 1959 – en alsidig og stor forkæmper oppe mod besværligheder, som man dårligt kan forestille sig i dag, når datidens ringe eller ikke eksisterende hjælpemidler, tilskudsordninger og formidlingsveje tages i betragtning. -

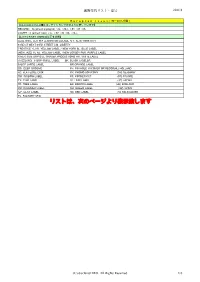

Anagram Records, Rusthållarevägen 15, 192 78 Sollentuna, Sweden 2 Tel: +46 8 754 37 84 E-Mail: [email protected] ALBUM LIST

TABLE OF CONTENTS 1. Album List 2. Series Notes – Swedish Jazz in the Sixties 3. Album Notes – The Music of Norman Howard arr. Mats Gustafsson Anagram Records, Rusthållarevägen 15, 192 78 Sollentuna, Sweden 2 Tel: +46 8 754 37 84 E-mail: [email protected] ALBUM LIST Scandinavian Jazz Lars Gullin – Alma Mater-Alma Almah (cd) ANACD10 Rolf Billberg – Altosupremo (cd) ANACD7 Harry Bäcklund – Remembering Harry (cd) ANACD6 Lars Gullin – In Germany 1955 and 1956 Vol. 1 (cd) ANACD2 Lars Gullin – In Germany 1955, 1956 and 1959 Vol. 2 (cd) ANACD5 Free Jazz / Avant Garde Enar Jonsson Quintet & Sextet – Gothenberg 1965-69 (cd) ANACD9 Forthcoming release Don Cherry et al – Movement Incorporated (cd) ANACD8 Mats Gustafsson – The Music of Norman Howard (vinyl lp) ANALP1 Classical Opera Legends - Early Singers in Swedish Opera (cd) ANACD3 Other Music Dalila - Soberana (cd) ANACD4 Anagram Records, Rusthållarevägen 15, 192 78 Sollentuna, Sweden 3 Tel: +46 8 754 37 84 E-mail: [email protected] Scandinavian Jazz Lars Gullin - Alma Mater-Alma Almah (cd) ANACD10 Lars Gullin - baritone sax featuring Harry Bäcklund and others 1. Billie's Bounce 2. Four 3. Darn That Dream 4. Darn That Dream 5. I Remember You 6. I've Seen 7. What's New Previously Unreleased Material Rolf Billberg – Altosupremo (cd) ANACD7 Rolf Billberg - alto sax featuring Lars Gullin and others 1. Chircorennes Tracks 2. Bluezette 3. Billbäck 4. Nature Boy 5. Ablution 6. Detour Ahear 7. Nigerian Walk 8. Sweet Lovely 9. My Old Flame Previously Unreleased Material Harry Bäcklund – Remembering Harry (cd) ANACD6 Harry Bäcklund - tenor sax featuring Lars Gullin and others 1. -

Redesigning a Performance Practice: Synergising Woodwind Improvisation with Bespoke Software Technology

Technological University Dublin ARROW@TU Dublin Doctoral Applied Arts 2013-8 Redesigning a Performance Practice: Synergising Woodwind Improvisation with Bespoke Software Technology. Seán Mac Erlaine Technological University Dublin, [email protected] Follow this and additional works at: https://arrow.tudublin.ie/appadoc Part of the Music Education Commons, Musicology Commons, and the Music Performance Commons Recommended Citation MacErlaine, S. (2013) Redesigning a Performance Practice: Synergising Woodwind Improvisation with Bespoke Software Technology. Doctoral Thesis, Technological University Dublin. doi:10.21427/D7PS3G This Theses, Ph.D is brought to you for free and open access by the Applied Arts at ARROW@TU Dublin. It has been accepted for inclusion in Doctoral by an authorized administrator of ARROW@TU Dublin. For more information, please contact [email protected], [email protected]. This work is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 License Redesigning a Performance Practice: Synergising Woodwind Improvisation with Bespoke Software Technology. Submitted to the Dublin Institute of Technology in candidature for the Degree of Doctor of Philosophy Dublin Institute of Technology Conservatory of Music and Drama Supervisor: Dr. Mark Fitzgerald August 2013 Seán Mac Erlaine I hereby certify that this material, which I now submit for assessment on the programme of study leading to the award of PhD, is entirely my own work and has not been submitted for assessment for any academic -

4 European Issue.Xlsx

通販専門リスト・夏号 2021/8 !"#$%&'())*++"&,-./0123 D40354'C35604EFGHIJKLHMNOPQRSTUVWXY 40354'Z)&)![\]^_`)abc\[def()g&+)g&+C+)g0h)g0)g0+ 35604Z#)![\]^_`)bei()g#+)g,h)g,)g,+)g,+C+ D%8*:jO10;)<54'FklmnoY ,%=0)&5:0)%0>)?@?)%0>8&-:5&)#60&=0)&2;2)&0<);541)38:; @A4')B?)<0*:)@A4')*:400:)%8,2)%8,04:; .40*:8-0);%)&;2);0%%5<)%#,0%)C)&0<);541),%2),%=0)%#,0% !&0<)"#$$();%)&"2);0%%5<)%#,0%)C)&0<)"04*0;).=42).=4.%0)%#,0% 48604)*8'0),-.),8%%)-4#=04).45'=3:85&*)<72)<78:0)%#,0% !"#$$%#&'())*+,-.)*/#%%)%#,0% ,12),%#31)%#,0%,4 ,+,-.)%#4-0)%#,0% 54254#&-0)%#,0% '-2)'00.)-45560 .72).8&)75%0)8&)35604)54)40354!7%()75%%#&' 9'2)9%#:)0'-0)'8*1 .42).45/5:85&)35.;))))))))))))))))))))))!-4()-04/#&; 4<2)4#8,5<)%#,0% 332)354&04)3=:)))))))))))))))))))))))))))))))!94()94#&30 .12).8&1)%#,0% %92)%0#9)%#,0%)))))))))))))))))))))))))))))))))!".()"#.#& :42):400)%#,0% ,42),45<&)%#,0%)))))))))))))))))))))))))))!=1()0&-%#&' '/2)'4=//04)%#,0% -42)-400&)%#,0%))))))))))))))))))))))))))))))!*.()*.#8& -;2)-%#;)%#,0% 4'2)40')%#,0%))))))))))))))))))))))))))))))))))95)95%')35604 9*2)9#3:54;)*0#% !"#$%&'()*+,-./012 (C) disclandJARO. All Rights Reserved. 1/6 通販専門リスト・夏号 2021/8 !"#$%&'())*++"&,-./0123 wxtq))y 456*76 6*68! 849!8 849!8): ;<6! !"#$!% pqrst utvps E 13890 PHIL WOODS QUINTET INTEGRITY RED VPA 177 2LP. FO. N A- 4800 E 13972 CEDAR WALTON QUARTET THIRD SET STEEPLE CHASE SCS 1179 N A-/- 2800 E 13984 CEDAR WALTON SEXTET TIMELESS LL-STARS:HEART TIMELESS SJP 182 N A 1500 E 14116 PAUL BLEY, J. LUNDGAARD LIVE STEEPLE CHASE SCS 1223 N A 1800 E 14340 STEVE KUHN TRIO WATCH WHAT HAPPENS ! MPS MPS 15 193 PK. -

Lars Gullin Discography

LARS GULLIN DISCOGRAPHY LARS GULLIN RECORDINGS, CONCERTS AND WHEREABOUTS Lars Gunnar Victor Gullin was born in Sanda (Visby), Gotland, Sweden, May 4, 1928 He passed away, due to a heart attack, at home in Slott (Vissefjärda), Småland, Sweden, May 17, 1976 by Kenneth Hallqvist, Sweden January 2019 Lars Gullin DISCOGRAPHY - Recordings, Concerts and Whereabouts by Kenneth Hallqvist - page No. 1 INTERNAL INFORMATION Colour markings for physical position: IKEA-boxes for Lars Gullin = YELLOW IKEA-boxes for Gerry Mulligan = BLUE Shelves = ORANGE Cupboard= RED Lars Gullin DISCOGRAPHY - Recordings, Concerts and Whereabouts by Kenneth Hallqvist - page No. 2 Abbreviations used in this discography acc accordion mda mandola arr arranger mdln mandolin as alto saxophone mel melodica b bass (contrabass or double bass) mgs Moog synthesizer b-cl bass clarinet oboe oboe b-tb bass trombone oca ocarina b-tp bass trumpet org organ bars baritone saxophone p piano bgs bongos panfl pan flute bjo banjo perc percussion bnd bandoneon saxes saxophones bs bass saxophone sop soprano saxophone bsn bassoon st-d steel drums cgs congas st-g steel guitar cl clarinet synth synthesizer clv clavinet tam tambourine cnt cornet tb trombone comp composer th tenor horn cond conductor tim timbales dm drums tmb tamboura el-b electric bass tmp timpani el-g electric guitar tp trumpet el-org electric organ ts tenor saxophone el-p electric piano tu tuba fl flute vcl vocalist flh fluegelhorn vib vibraphone frh French horn vla viola g guitar vlc violoncello hmc harmonica vln violin keyb keyboard vtb valve trombone mar maraccas ww woodwinds mba marimba xyl xylophone Design of the Disc/Song-information NAME OF THE ARTIST/BAND/ORCHESTRA Personnel - Name of artist (instrument acc. -

Dragon Records Arkiv

DRAGON RECORDS ARKIV Svenskt visarkiv Mars 2017 Leif Larsson INLEDNING Dragon Records är ett svenskt grammofonbolag som bildades 1975 av Lars Westin och Jan Wallgren. Produktionen är koncentrerad till samtida svensk jazz, men även historiska utgivningar förekommer med svenska och utländska musiker och artister. Sedan 1982 har Dragon Records mottagit 14 stycken Gyllene skivan utmärkelser för årets bästa jazzalbum. Dragon Records totala utgivningar på CD och LP uppgår till ca. 400 titlar. Material rörande Dragon Records hittar man också i Lars Westins arkiv som finns på Svensk visarkiv. Samlingen donerades till SVA i oktober 2016, där den är fritt tillgängligt för forskning. INNEHÅLL SERIER F1 Anteckningsböcker L1 Pressklipp L2 Kataloger Z1 Rullband Z2 U-matic Z3 Hi 8 Z4 DAT-band Z5 Betacam / Betamax Z6 Kassettband Z7 VHS-band Z8 CD-skivor Ö1 Föremål F1 ANTECKNINGSBÖCKER Sex stycken anteckningsböcker med information runt verksamheten åren 1983 – 1999. Innehåller bl.a. uppgifter om medverkande musiker, musikval, inspelningsdata, adresser m.m. Häri finns också på lösa blad sparade förteckningar över data på inspelningar som finns i arkivet. L1 PRESSKLIPP Tidningsurklipp som mestadels rör Dragon Records utgivning, såsom recensioner, artiklar, intervjuer m.m. Vol 1 1979 – 1985 Vol 2 1986 – 1997 / Odaterade klipp L2 KATALOGER Tre stycken tryckta kataloger över Dragon Records CD och LP produktion. [Serien placerad i volym F1] Z1 RULLBAND Innehåller ett stort antal masterband ur Dragon Records produktion men även andra band med inspelningar från radio, konserter m.m. Serien är detaljförtecknad och numrerad. Se bilaga 1. Z2 U-MATIK Innehåller nästan uteslutande masterband till Dragon Records katalog. Serien är detaljförtecknad och numrerad. -

29 Jul-2 Aug 2015 Konsert / Concerts Konsert / Concerts

29 jul-2 aug 2015 KONSERT / CONCERTS KONSERT / CONCERTS Tid Plats Artist Ordinarie Jazzkort Lustkort Ungdom Sida Tid Plats Artist Ordinarie Jazzkort Lustkort Ungdom Sida Time Venue Standard Jazz Card Youth Page Time Venue Standard Jazz Card Youth Page Onsdag 29 juli / Wednesday 29 July Lördag 1 augusti / Saturday 1 August 14.00 Per Helsas gård The Rad Trads 220 165 175 110 6 09.00 Hos Morten Café Frukostjazz / Breakfast jazz 180 135 145 90 14 Jonathan Dafgård Trio ”Guitar Jazz” 15.30 Per Helsas gård-N. Promenaden YAzzparad / YAzz parade, The Rad Trads 6 11.00 Per Helsas gård Bobby Medina’s Latin American Jazz Experience 280 210 225 140 21 16.30 Norra Promenaden Norrbotten Big Band 6 ”Between Worlds” 18.30 Ystads Teater The Rad Trads, Jan Lundgren & Nicole Johänntgen, 340 255 170 7 13.00 Ystad Saltsjöbad Bossa Negra featuring Hamilton de Holanda & 340 255 270 170 23 Johanna Jarl Kvartett Diogo Nogueira 22.00 S:ta Maria kyrka Inblåsning av festivalen / 7 14.30 MarsvinsholmsTeatern JazzKidz Rymdjazz med Pax och Monday Night Big 200 100 9 A fanfare from the church tower Band Performed by Bobby Medina 15.00 Continental du Sud Nicole Johänntgen “Sofia Project” 220 165 175 110 21 22.30 S:ta Maria kyrka Michel Reis “Pavane of Jazz” 180 135 145 90 7 17.00 Ystads Teater Marius Neset ”Pinball” 260 195 210 130 22 Torsdag 30 juli / Thursday 30 July 18.30 Scala Sharón Clark meets Mattias Nilsson 240 180 190 120 22 11.00 Per Helsas gård Jan Allan – 80 with Norrbotten Big Band 260 195 210 130 10 20.00 Ystads Teater Jan Lundgren invites Karin Krog, Harry -

Bjarne Arnulf Nerem

1 The TENORSAX of BJARNE ARNULF NEREM Solographer: Jan Evensmo Last update: Dec. 3, 2019 2 Born: Oslo, July 31, 1923 Died: Oslo, April 1, 1991 Introduction: Bjarne Nerem was Oslo Jazz Circle’s greatest hero, and we were so happy when he returned to Norway after his self-imposed exile in Sweden for almost twenty years. He was not an easy person to deal with, not at all competitive, with a highly variable mood. It is said he was waiting for telephone calls to give him jobs, instead of actively go out and sell his great talents. He could have been an internationally recognized tenorsax star, but he has left us, not only Norway and Sweden but the jazz world as such, quite a lot of magnificent tenorsax music. Early history: Sporadically member of Oslo Swingklubb's band, summer engagement a t Havna, Tjøme, 1941, in Hein Paulsen's Rytmeorkester 1941-42, Fred Lange-Nielsen's Rytmesekstett at Bygdønes summer 1942, altosax in own band at Lillestrøm autumn 1942. Recording debut with Syv Muntre 1943. Member of Stein Lorentzen's large orchestra (also a member of the orchestra's vocal quintet!!). Active in Oslo Hot Club 1945, with Rowland Greenberg's large orchestra, Hein Paulsen's quintet in Åsgårdstrand summer 1945, concerts with Kjeld Bonfils and Per Nyhaug's radio band later same year, in Will Arild's orchestra at Valencia 1945- 46, with Pete Brown's orchestra spring 1946. In Willy Andresen's orchestra at Humlen 1946-47. From then professional musician, left for Sweden to join Thore Jederby's quartet at Maxim, Stockholm autumn 1947. -

Jazz Och Blues

JAZZ på CD & LP Kulturbibliotekets vinylsamling: Jazz och Blues A JAZZ PIANO ANTHOLOGY A 608 From ragtime to free jazz (insp. 1921-71) ..........................................................1921-71 ABELEEN, Staffan A 177 Svit cachasa. Staffan Abeleen, Lars Färnlöf & Radiojazzgruppen .................... 1973 A 772 Sweet Alva ........................................................................................................... 1974 ABERCROMBIE, John A 1238 Timeless ........................................................................................................... 1974 ADAMS, George A 1593 Earth beams. Med Don Pullen Quartet .............................................................. 1981 A 1950 Lifeline. Med Don Pullen Quartet ....................................................................... 1981 A 2119 Live at Village Vanguard. George Adams - Don Pullen Quartet ........................ 1983 ADAMS, Pepper A 785 Ephemera ........................................................................................................... 1973 A 966 Julian ........................................................................................................... 1975 A 1697 Reflectory ........................................................................................................... 1978 ADDERLEY, Cannonball A 260 Somethin' else ..................................................................................................... 1958 A 1176 Coast to coast .................................................................................................... -

Selective Listing of Basic Original Issued Albums

DANISH JAZZ DISCOGRAPHY 1945-2000 Edited by Erik Raben · Available at www.jazzdiscography.dk DETAILED LISTING OF ISSUES Acid Is Native Music Denmark – Compilation CD Vol.2 1998/99 (var. artists) (c. 1998) Native Music(D)NaMuCD9802(CD) Acoustic Guitars Acoustic Guitars (1986) Stunt(D)STULP8703/STUCD18703(CD) Gajos In Disguise (1989/90) Stunt(D)STULP9001/STUCD19001(CD) All That Jazz From Denmark 1991 (var. artists) (1989/90) Stunt(D)STUCD19101(CD) Out Of The Blue (1995) Stunt(D)STUCD19507(CD)/Elap(D)46714(CD) Advokatens New Orleans Jazzband Frokostjazz på ”Futten” (1999) Unnumbered(VID) Grethe Agatz Danse, spille og synge med Grethe Agatz (c. 1981) Mascot(D)MALP103/MKA103(MC) Børnerytmer for hele familien af Grethe Agatz (c. 1983) Harlekin(D)HMLP3524/HMMC3524(MC) Kom og dans en tigerdans (c. 1998) Sundance(S)SCD105-2(CD) Thomas Agergaard Rounds (1992) Olufsen(D)DOCD5177(CD) Changes – Five Young Danish Groups Play Jazz (var. artists) (1992) Stunt(D)STUCD19305(CD) Thomas Agergaard Quartet: Testing 1-2-3-4 (1998) ManRec(D)No.10(CD) All That Jazz – Music From Denmark 2000 (var. artists) (1998) Danish Music Export(D)MXPCD0300(CD) Ginman – Blachman – Dahl feat. Th. Agergaard: Testing 1-2-3-4 (1998, 2005) Verve UMD987.302.2(CD+DVD) Agergaard – Ginman – Blackman – Dahl: Face The Demon (2000) Time Span (download) Flemming Agerskov Face To Face (1999) Dacapo(D)DCCD9445(CD) Dansk musik i 1000 år – Jazz (var. artists) (1999) Dacapo(D)8.224196(CD) More Jazz Visits 1997–99 (var. artists) (1999) Stunt(D)STUCD01122(2xCD) Alarm 112 Alarm 112 (1997) Av-Art(D)AACD1005(CD) Freddy Albeck Freddy Albeck – Kjeld Bonfils Trio: In Town Tonight (1957) Metronome(D)MEP1151(EP) Freddy Albeck – Arne Astrup: Poor Little Miss Otis – One More Time (1987) Olufsen(D)DOC5033 A Night At Hotel Evergreen (var.