IEEE Paper Template in A4 (V1)

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Medi-Cal Dental EDI How-To Guide

Medi-Cal Dental Electronic Data Interchange How-To Guide. EDI Support Group Phone: (916) 853-7373 Email: [email protected] Revised November 2019. Welcome to the Medi-Cal Dental Program's Electronic Data Interchange Program! This How-To Guide is designed to answer questions providers may have about submitting claims electronically. The Medi-Cal Dental Program's Electronic Data Interchange (EDI) program is an efficient alternative to sending paper claims. It will provide more efficient tracking of the Medi-Cal Dental Program claims with faster responses to requests for authorization and payment. Before submitting claims electronically, providers must be enrolled as an EDI provider to avoid rejection of claims. To enroll, providers must complete the Medi-Cal Dental Telecommunications Provider and Biller Application/Agreement (For electronic claim submission), the Provider Service Office Electronic Data Interchange Option Selection Form and Electronic Remittance Advice (ERA) Enrollment Form and return them to the address indicated on those forms. Providers should advise their software vendor that they would like to submit Medi-Cal Dental Program claims electronically, and if they are not yet enrolled in the EDI program, an Enrollment Packet should be requested from the EDI Support department. Enrollment forms are also available on the Medi-Cal Dental Program Web site (www.denti-cal.ca.gov) under EDI, located on the Providers tab. Providers may also submit digitized images of documentation to the Medi-Cal Dental Program. If providers choose to submit conventional radiographs and attachments through the mail, an order for EDI labels and envelopes will need to be placed using the Forms Reorder Request included in the Enrollment Packet and at the end of this How-To Guide.

A Brief Introduction to Unix-2019-AMS

A Brief Introduction to Linux/Unix, AMS 2019. Pete Pokrandt, UW-Madison AOS Systems Administrator, [email protected], Twitter @PTH1. Brief Intro to Linux/Unix: Brief History of Unix; Basics of a Unix session; The Unix File System; Working with Files and Directories; Your Environment; Common Commands. Brief Intro to Unix (contd): Compilers, Email, Text processing; Image Processing; The vi editor. History of Unix: Created in 1969 by Kenneth Thompson and Dennis Ritchie at AT&T; revised in-house until first public release 1977; 1977: UC-Berkeley, Berkeley Software Distribution (BSD); 1983: Sun Workstations produced a Unix Workstation; AT&T unix -> System V. History of Unix: Today there are two main variants, but blended: System V (Sun Solaris, SGI, Dec OSF1, AIX, linux) and BSD (Old SunOS, linux, Mac OSX/MacOS). History of Unix: It's been around for a long time; it was written by computer programmers for computer programmers; case sensitive, mostly lowercase abbreviations. Basics of a Unix Login Session: The Shell is the command line interface, where you enter commands, etc. Some common shells: Bourne Shell (sh), C Shell (csh). Features provided by the shell: create an environment that meets your needs; write shell scripts (batch files); define command aliases; manipulate command history; automatically complete the command. Logging in to a unix session: login: username; password: tImpAw$ (this Is my password At work $) OR IHateHaving2changeMypasswordevery3weeks!!!

Unix/Linux Command Reference

Unix/Linux Command Reference. File Commands: ls - directory listing; ls -al - formatted listing with hidden files; cd dir - change directory to dir; cd - change to home; pwd - show current directory; mkdir dir - create a directory dir; rm file - delete file; rm -r dir - delete directory dir; rm -f file - force remove file; rm -rf dir - force remove directory dir *; cp file1 file2 - copy file1 to file2; cp -r dir1 dir2 - copy dir1 to dir2 (create dir2 if it doesn't exist); mv file1 file2 - rename or move file1 to file2 (if file2 is an existing directory, moves file1 into directory file2); ln -s file link - create symbolic link link to file; touch file - create or update file; cat > file - places standard input into file; more file - output the contents of file; head file - output the first 10 lines of file; tail file - output the last 10 lines. System Info: date - show the current date and time; cal - show this month's calendar; uptime - show current uptime; w - display who is online; whoami - who you are logged in as; finger user - display information about user; uname -a - show kernel information; cat /proc/cpuinfo - cpu information; cat /proc/meminfo - memory information; man command - show the manual for command; df - show disk usage; du - show directory space usage; free - show memory and swap usage; whereis app - show possible locations of app; which app - show which app will be run by default. Compression: tar cf file.tar files - create a tar named file.tar containing files; tar xf file.tar - extract the files from file.tar; tar czf file.tar.gz files - create a tar with

Medical Center of the Rockies

Medical Center of the Rockies Location: Loveland, CO Client: Poudre Valley Health System Design Firm(s): BHA Design (LA), Martin/Martin (Civil) Landscape architect/Project contact: Angela Milewski, ASLA Email: [email protected] ASLA Chapter: Colorado Photo: BHA Design Incorporated Case No. 273 Project Specifications Project Description: As a new major heart and trauma hospital, Medical Center of the Rockies was designed as a facility to provide world-class healthcare in a unique design that would join site and building in a single composition. The stormwater conveyance and detention facilities were designed as an integral part of a zoned, naturalistic landscape concept which embraced the natural Colorado landscape and helped achieve site credits toward achieving LEED Gold project certification. The resulting benefits included reduced irrigation water use, improved wildlife habitat and stormwater quality, and a restorative natural setting for users. Project Type: Institutional/education Part of a new development Design features: Stormwater conveyance and detention facilities. This project was designed to meet the following specific requirements or mandates: Local ordinance, NPDES Impervious area managed: greater than 5 acres Amount of existing green space/open space conserved or preserved for managing stormwater on site: greater than 5 acres The regulatory environment and regulator were supportive of the project. Did the client request that other factors be considered, such as energy savings, usable green space, or property value enhancements? The stormwater improvements were part of an overall design for this new hospital facility. The project as a whole sought and received LEED Gold certification, so the improvements were part of a comprehensive design focused on sustainability.

A DENOTATIONAL ENGINEERING of PROGRAMMING LANGUAGES to Make Software Systems Reliable and User Manuals Clear, Complete and Unambiguous

A DENOTATIONAL ENGINEERING OF PROGRAMMING LANGUAGES to make software systems reliable and user manuals clear, complete and unambiguous. A book in statu nascendi. Andrzej Jacek Blikle in cooperation with Piotr Chrząstowski-Wachtel. "It always seems impossible until it's done." Nelson Mandela. Warsaw, March 22nd, 2019. "A Denotational Engineering of Programming Languages" by Andrzej Blikle in cooperation with Piotr Chrząstowski-Wachtel has been licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International license. For details see: https://creativecommons.org/licenses/by-nc-nd/4.0/legalcode About the current versions of the book: Both versions, Polish and English, are in statu nascendi, which means that they are both in the process of correction due to my readers' remarks. Since December 2018 both versions, and currently also two related papers, are available in PDF format and can be downloaded from my website: http://www.moznainaczej.com.pl/what-has-been-done/the-book as well as from my accounts on ResearchGate, academia.edu and arXiv.org. I very warmly invite all my readers to send their remarks and questions about all aspects of the book. I am certainly aware of the fact that my English requires a lot of improvements and therefore I shall very much appreciate all linguistic corrections and suggestions as well. You may write to me at [email protected]. All interested persons are also invited to join the project Denotational Engineering. For more details see: http://www.moznainaczej.com.pl/an-invitation-to-the-project Acknowledgements to the Polish version: Since June 2018 a preliminary version of the Polish version has been made available to selected readers, which resulted in a flow of remarks.

System Analysis and Tuning Guide: SUSE Linux Enterprise Server 15 SP1

System Analysis and Tuning Guide, SUSE Linux Enterprise Server 15 SP1. An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled "GNU Free Documentation License". For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide; 1 Available Documentation

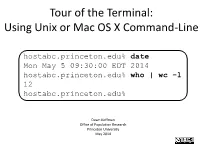

Tour of the Terminal: Using Unix Or Mac OS X Command-Line

Tour of the Terminal: Using Unix or Mac OS X Command-Line. hostabc.princeton.edu% date Mon May 5 09:30:00 EDT 2014 hostabc.princeton.edu% who | wc -l 12 hostabc.princeton.edu% Dawn Koffman, Office of Population Research, Princeton University, May 2014. Contents: Introduction; Files; Directories; Commands; Shell Programs; Stream Editor: sed. Introduction: Operating Systems; Command-Line Interface; Shell; Unix Philosophy; Command Execution Cycle; Command History. Command-Line Interface [diagram: user (human); operating system (software): command-line interface, point-and-click (gui) interface, programs (text editors, compilers, commands for working with file system, many other utilities), kernel (manages computing resources: memory, hard-drive, time); computer (hardware): cpu, memory, hard-drive]. Comparison: the command-line interface may have steeper learning curve, BUT provides constructs that can make many tasks very easy, and scales up very well when have lots of data, programs, tasks to accomplish; the point-and-click interface may be more intuitive, BUT can also be much more human-manual-labor intensive, and often does not scale up well when have lots of data, programs, tasks to accomplish. Shell: the command-line interface provided by Unix and Mac OS X is called a shell. A shell prompts user for commands, interprets user commands, and passes them onto the rest of the operating system which is hidden from the user. How do you access a shell? If you have an account on a machine running Unix or Linux, just log in. A default shell will be running. If you are using a Mac, run the Terminal app.

Datasheet Avalight-CAL

AvaLight-CAL Spectral Calibration Source. The AvaLight-CAL-xxx is a spectral calibration lamp. It's available in Mercury-Argon (253.6-922.5 nm), Neon (337-1084.5 nm), Argon (696.5-1704 nm), Zinc (202.5-636.2 nm) and Cadmium (214.4-643.8 nm) versions. The major lines including their relative intensity and structures are shown below. The standard SMA-905 connector supplies an easy connection between the lamp and optical fibers, making the AvaLight-CAL-xxx a low cost wavelength calibration system for any fiber-optic spectrometer. AvaSoft-Full spectroscopy software includes an automatic recalibration procedure. AvaLight-CAL-Mini: The AvaLight-CAL-Mini, AvaLight-CAL-AR-Mini, AvaLight-CAL-Neon-Mini all come in the Mini-housing. They are equipped with a connector at the rear that allows the unit to be switched on/off remotely with a TTL signal. The AvaLight-CAL can also be delivered in a rack-mountable version, to be integrated in the Avantes 19" Rack-mount or the 9.5" desktop housing. The PS-12V/1.0A power supply should be ordered separately. • Calibration light source • Available in a variety of wavelength ranges (UV to NIR). Figure 14: Spectral lines AvaLight-CAL-Mini (relative intensity versus wavelength in nm), with lines at 253.65, 265.20, 265.37, 275.28, 289.36, 296.73, 302.15, 312.57, 334.15, 365.01, 404.66, 407.78, 434.75, 435.83, 546.08, 576.96, 579.07, 696.54, 706.72, 727.29, 738.40, 763.51, 772.40, 794.82, 800.62, 811.53, 842.45, 852.14, 912.30 and 922.45 nm.

K–12 Computer Science Curriculum Guide

MASSACHUSETTS K-12 Computer Science Curriculum Guide. MassCAN: Massachusetts Computing Attainment Network. The Commonwealth of Massachusetts Executive Office of Education, under James Peyser, Secretary of Education, funded the development of this guide. Anne DeMallie, Computer Science and STEM Integration Specialist at Massachusetts Department of Elementary and Secondary Education, provided help as a partner, writer, and coordinator of crosswalks to the Massachusetts Digital Literacy and Computer Science Standards. Steve Vinter, Tech Leadership Advisor and Coach, Google, wrote the section titled "What Are Computer Science and Digital Literacy?" Padmaja Bandaru and David Petty, Co-Presidents of the Greater Boston Computer Science Teachers Association (CSTA), supported the engagement of CSTA members as writers and reviewers of this guide. Jim Stanton and Farzeen Harunani, EDC and MassCAN, Editors. Editing and design services provided by Digital Design Group, EDC. An electronic version of this guide is available on the EDC website (http://edc.org). This version includes hyperlinks to many resources. TABLE OF CONTENTS: ABBREVIATIONS USED IN THIS GUIDE; INTRODUCTION; WHAT ARE COMPUTER SCIENCE AND DIGITAL LITERACY?; ELEMENTARY SCHOOL CURRICULA AND TOOLS

The Historical Path from Research and Development to Operational

The Historical Path from Research and Development to Operational Use of CAI. Patrick Suppes and Elizabeth Macken. In this article we will trace the development of present-day uses of CAI beginning with the early stages in the late 1950s. Because of the brevity of the article, we will limit ourselves to CAI as it has been developed and used in public schools and universities; we will not include the use of CAI by the military. Even so, we can do little more than mention some of the most important projects; we will, however, give an extensive list of references in which more detailed information can be found. [...] elementary mathematics program was also tested. Prior to 1965, the children were transported from their schools to the Stanford campus to receive their CAI lessons. The first use of CAI in an elementary school was in the spring of 1965, when 41 fourth-grade children were given daily arithmetic drill-and-practice lessons in their classroom on a teletype machine that was connected to the Institute's computer by telephone lines (Suppes, 1972). CAI from 1965 to 1970: In this section we will first continue the story of the development of CAI at Stanford, and then discuss the development of CAI at other institutions. During the 1965-66 school year the Stanford CAI program expanded to three schools, all containing teletypes linked to the IMSSS computer. About 270 elementary students and 60 high school students received drill-and-practice CAI lessons in mathematics. During the same time about 50

Summary of UNIX Commands

Summary of UNIX Commands, version 3.2. 1994,1995,1996 Budi Rahardjo <[email protected]>. This is a summary of UNIX commands available on most UNIX systems. Depending on the configuration, some of the commands may be unavailable on your site. These commands may be a commercial program, freeware or public domain program that must be installed separately, or probably just not in your search path. Check your local documentation or manual pages for more details (e.g. man programname). This reference card, obviously, cannot describe all UNIX commands in details, but instead I picked commands that are useful and interesting from a user's point of view. Disclaimer: The author and publisher make no warranty of any kind, expressed or implied, including the warranties of merchantability or fitness for a particular purpose, with regard to the use of commands contained in this reference card. This reference card is provided "as is". The author and publisher shall not be liable for damage in connection with, or arising out of the furnishing, performance, or the use of these commands or the associated descriptions. Conventions: bold represents program name; dirname represents directory name as an argument; filename represents file name as an argument. Sections: 8. Usenet news; 9. File transfer and remote access; 10. X window; 11. Graph, Plot, Image processing tools; 12. Information systems; 13. Networking programs; 14. Programming tools; 15. Text processors, typesetters, and previewers; 16. Wordprocessors; 17. Spreadsheets; 18. Databases. 1. Directory and file commands: bdf - display disk space (HP-UX), see also df; cat filename - display the content of file filename; cd [dirname]

Compiler Construction

Compiler construction. PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 10 Dec 2011 02:23:02 UTC. Contents. Articles. Introduction: Compiler construction; Compiler; Interpreter; History of compiler writing. Lexical analysis: Lexical analysis; Regular expression; Regular expression examples; Finite-state machine; Preprocessor. Syntactic analysis: Parsing; Lookahead; Symbol table; Abstract syntax; Abstract syntax tree; Context-free grammar; Terminal and nonterminal symbols; Left recursion; Backus–Naur Form; Extended Backus–Naur Form; TBNF; Top-down parsing; Recursive descent parser; Tail recursive parser; Parsing expression grammar; LL parser; LR parser; Parsing table; Simple LR parser; Canonical LR parser; GLR parser; LALR parser; Recursive ascent parser; Parser combinator; Bottom-up parsing; Chomsky normal form; CYK algorithm; Simple precedence grammar; Simple precedence parser; Operator-precedence grammar; Operator-precedence parser; Shunting-yard algorithm; Chart parser; Earley parser; The lexer hack; Scannerless parsing. Semantic analysis: Attribute grammar; L-attributed grammar; LR-attributed grammar; S-attributed grammar; ECLR-attributed grammar; Intermediate language; Control flow graph; Basic block; Call graph; Data-flow analysis; Use-define chain; Live variable analysis; Reaching definition; Three address