A Denotational Engineering of Programming Languages to Make Software Systems Reliable and User Manuals Clear, Complete and Unambiguous

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

AEDIT Text Editor Iii Notational Conventions This Manual Uses the Following Conventions: • Computer Input and Output Appear in This Font

Quick Contents Chapter 1. Introduction and Tutorial Chapter 2. The Editor Basics Chapter 3. Editing Commands Chapter 4. AEDIT Invocation Chapter 5. Macro Commands Chapter 6. AEDIT Variables Chapter 7. Calc Command Chapter 8. Advanced AEDIT Usage Chapter 9. Configuration Commands Appendix A. AEDIT Command Summary Appendix B. AEDIT Error Messages Appendix C. Summary of AEDIT Variables Appendix D. Configuring AEDIT for Other Terminals Appendix E. ASCII Codes Index AEDIT Text Editor iii Notational Conventions This manual uses the following conventions: • Computer input and output appear in this font. • Command names appear in this font. ✏ Note Notes indicate important information. iv Contents 1 Introduction and Tutorial AEDIT Tutorial ............................................................................................... 2 Activating the Editor ................................................................................ 2 Entering, Changing, and Deleting Text .................................................... 3 Copying Text............................................................................................ 5 Using the Other Command....................................................................... 5 Exiting the Editor ..................................................................................... 6 2 The Editor Basics Keyboard ......................................................................................................... 8 AEDIT Display Format .................................................................................. -

COMMAND LINE CHEAT SHEET Presented by TOWER — the Most Powerful Git Client for Mac

COMMAND LINE CHEAT SHEET presented by TOWER — the most powerful Git client for Mac DIRECTORIES FILES SEARCH $ pwd $ rm <file> $ find <dir> -name "<file>" Display path of current working directory Delete <file> Find all files named <file> inside <dir> (use wildcards [*] to search for parts of $ cd <directory> $ rm -r <directory> filenames, e.g. "file.*") Change directory to <directory> Delete <directory> $ grep "<text>" <file> $ cd .. $ rm -f <file> Output all occurrences of <text> inside <file> (add -i for case-insensitivity) Navigate to parent directory Force-delete <file> (add -r to force- delete a directory) $ grep -rl "<text>" <dir> $ ls Search for all files containing <text> List directory contents $ mv <file-old> <file-new> inside <dir> Rename <file-old> to <file-new> $ ls -la List detailed directory contents, including $ mv <file> <directory> NETWORK hidden files Move <file> to <directory> (possibly overwriting an existing file) $ ping <host> $ mkdir <directory> Ping <host> and display status Create new directory named <directory> $ cp <file> <directory> Copy <file> to <directory> (possibly $ whois <domain> overwriting an existing file) OUTPUT Output whois information for <domain> $ cp -r <directory1> <directory2> $ curl -O <url/to/file> $ cat <file> Download (via HTTP[S] or FTP) Copy <directory1> and its contents to <file> Output the contents of <file> <directory2> (possibly overwriting files in an existing directory) $ ssh <username>@<host> $ less <file> Establish an SSH connection to <host> Output the contents of <file> using -

Types and Programming Languages by Benjamin C

< Free Open Study > . .Types and Programming Languages by Benjamin C. Pierce ISBN:0262162091 The MIT Press © 2002 (623 pages) This thorough type-systems reference examines theory, pragmatics, implementation, and more Table of Contents Types and Programming Languages Preface Chapter 1 - Introduction Chapter 2 - Mathematical Preliminaries Part I - Untyped Systems Chapter 3 - Untyped Arithmetic Expressions Chapter 4 - An ML Implementation of Arithmetic Expressions Chapter 5 - The Untyped Lambda-Calculus Chapter 6 - Nameless Representation of Terms Chapter 7 - An ML Implementation of the Lambda-Calculus Part II - Simple Types Chapter 8 - Typed Arithmetic Expressions Chapter 9 - Simply Typed Lambda-Calculus Chapter 10 - An ML Implementation of Simple Types Chapter 11 - Simple Extensions Chapter 12 - Normalization Chapter 13 - References Chapter 14 - Exceptions Part III - Subtyping Chapter 15 - Subtyping Chapter 16 - Metatheory of Subtyping Chapter 17 - An ML Implementation of Subtyping Chapter 18 - Case Study: Imperative Objects Chapter 19 - Case Study: Featherweight Java Part IV - Recursive Types Chapter 20 - Recursive Types Chapter 21 - Metatheory of Recursive Types Part V - Polymorphism Chapter 22 - Type Reconstruction Chapter 23 - Universal Types Chapter 24 - Existential Types Chapter 25 - An ML Implementation of System F Chapter 26 - Bounded Quantification Chapter 27 - Case Study: Imperative Objects, Redux Chapter 28 - Metatheory of Bounded Quantification Part VI - Higher-Order Systems Chapter 29 - Type Operators and Kinding Chapter 30 - Higher-Order Polymorphism Chapter 31 - Higher-Order Subtyping Chapter 32 - Case Study: Purely Functional Objects Part VII - Appendices Appendix A - Solutions to Selected Exercises Appendix B - Notational Conventions References Index List of Figures < Free Open Study > < Free Open Study > Back Cover A type system is a syntactic method for automatically checking the absence of certain erroneous behaviors by classifying program 
phrases according to the kinds of values they compute. -

The Gnu Binary Utilities

The gnu Binary Utilities Version cygnus-2.7.1-96q4 May 1993 Roland H. Pesch Jeffrey M. Osier Cygnus Support Cygnus Support TEXinfo 2.122 (Cygnus+WRS) Copyright c 1991, 92, 93, 94, 95, 1996 Free Software Foundation, Inc. Permission is granted to make and distribute verbatim copies of this manual provided the copyright notice and this permission notice are preserved on all copies. Permission is granted to copy and distribute modi®ed versions of this manual under the conditions for verbatim copying, provided also that the entire resulting derived work is distributed under the terms of a permission notice identical to this one. Permission is granted to copy and distribute translations of this manual into another language, under the above conditions for modi®ed versions. The GNU Binary Utilities Introduction ..................................... 467 1ar.............................................. 469 1.1 Controlling ar on the command line ................... 470 1.2 Controlling ar with a script ............................ 472 2ld.............................................. 477 3nm............................................ 479 4 objcopy ....................................... 483 5 objdump ...................................... 489 6 ranlib ......................................... 493 7 size............................................ 495 8 strings ........................................ 497 9 strip........................................... 499 Utilities 10 c++®lt ........................................ 501 11 nlmconv .................................... -

Medi-Cal Dental EDI How-To Guide

' I edi Cal Dental _ Electronic Data Interchange HOW-TO GUIDE EDI EDI edi EDI Support Group Phone: (916) 853-7373 Email: [email protected] Revised November 2019 , ] 1 ,edi .. Cal Dental Medi-Cal Dental Program EDI How-To Guide 11/2019 Welcome to Medical Dental Program’s Electronic Data Interchange Program! This How-To Guide is designed to answer questions providers may have about submitting claims electronically. The Medi-Cal Dental Program's Electronic Data Interchange (EDI) program is an efficient alternative to sending paper claims. It will provide more efficient tracking of the Medi-Cal Dental Program claims with faster responses to requests for authorization and payment. Before submitting claims electronically, providers must be enrolled as an EDI provider to avoid rejection of claims. To enroll, providers must complete the Medi-Cal Dental Telecommunications Provider and Biller Application/Agreement (For electronic claim submission), the Provider Service Office Electronic Data Interchange Option Selection Form and Electronic Remittance Advice (ERA) Enrollment Form and return them to the address indicated on those forms. Providers should advise their software vendor that they would like to submit Medi-Cal Dental Program claims electronically, and if they are not yet enrolled in the EDI program, an Enrollment Packet should be requested from the EDI Support department. Enrollment forms are also available on the Medi-Cal Dental Program Web site (www.denti- cal.ca.gov) under EDI, located on the Providers tab. Providers may also submit digitized images of documentation to the Medi-Cal Dental Program. If providers choose to submit conventional radiographs and attachments through the mail, an order for EDI labels and envelopes will need to be placed using the Forms Reorder Request included in the Enrollment Packet and at the end of this How-To Guide. -

A Brief Introduction to Unix-2019-AMS

Brief Intro to Linux/Unix Brief Intro to Unix (contd) A Brief Introduction to o Brief History of Unix o Compilers, Email, Text processing o Basics of a Unix session o Image Processing Linux/Unix – AMS 2019 o The Unix File System Pete Pokrandt o Working with Files and Directories o The vi editor UW-Madison AOS Systems Administrator o Your Environment [email protected] o Common Commands Twitter @PTH1 History of Unix History of Unix History of Unix o Created in 1969 by Kenneth Thompson and Dennis o Today – two main variants, but blended o It’s been around for a long time Ritchie at AT&T o Revised in-house until first public release 1977 o System V (Sun Solaris, SGI, Dec OSF1, AIX, o It was written by computer programmers for o 1977 – UC-Berkeley – Berkeley Software Distribution (BSD) linux) computer programmers o 1983 – Sun Workstations produced a Unix Workstation o BSD (Old SunOS, linux, Mac OSX/MacOS) o Case sensitive, mostly lowercase o AT&T unix -> System V abbreviations 1 Basics of a Unix Login Session Basics of a Unix Login Session Basics of a Unix Login Session o The Shell – the command line interface, o Features provided by the shell o Logging in to a unix session where you enter commands, etc n Create an environment that meets your needs n login: username n Some common shells n Write shell scripts (batch files) n password: tImpAw$ n Define command aliases (this Is my password At work $) Bourne Shell (sh) OR n Manipulate command history IHateHaving2changeMypasswordevery3weeks!!! C Shell (csh) n Automatically complete the command -

Unix/Linux Command Reference

Unix/Linux Command Reference .com File Commands System Info ls – directory listing date – show the current date and time ls -al – formatted listing with hidden files cal – show this month's calendar cd dir - change directory to dir uptime – show current uptime cd – change to home w – display who is online pwd – show current directory whoami – who you are logged in as mkdir dir – create a directory dir finger user – display information about user rm file – delete file uname -a – show kernel information rm -r dir – delete directory dir cat /proc/cpuinfo – cpu information rm -f file – force remove file cat /proc/meminfo – memory information rm -rf dir – force remove directory dir * man command – show the manual for command cp file1 file2 – copy file1 to file2 df – show disk usage cp -r dir1 dir2 – copy dir1 to dir2; create dir2 if it du – show directory space usage doesn't exist free – show memory and swap usage mv file1 file2 – rename or move file1 to file2 whereis app – show possible locations of app if file2 is an existing directory, moves file1 into which app – show which app will be run by default directory file2 ln -s file link – create symbolic link link to file Compression touch file – create or update file tar cf file.tar files – create a tar named cat > file – places standard input into file file.tar containing files more file – output the contents of file tar xf file.tar – extract the files from file.tar head file – output the first 10 lines of file tar czf file.tar.gz files – create a tar with tail file – output the last 10 lines -

Clostridium Difficile (C. Diff)

Living with C. diff Learning how to control the spread of Clostridium difficile (C. diff) This can be serious, I need to do something about this now! IMPORTANT C. diff can be a serious condition. If you or someone in your family has been diagnosed with C. diff, there are steps you can take now to avoid spreading it to your family and friends. This booklet was developed to help you understand and manage C. diff. Follow the recommendations and practice good hygiene to take care of yourself. C. diff may cause physical pain and emotional stress, but keep in mind that it can be treated. For more information on your C.diff infection, please contact your healthcare provider. i CONTENTS Learning about C. diff What is Clostridium difficile (C. diff)? ........................................................ 1 There are two types of C.diff conditions .................................................... 2 What causes a C. diff infection? ............................................................... 2 Who is most at risk to get C. diff? ............................................................ 3 How do I know if I have C. diff infection? .................................................. 3 How does C. diff spread from one person to another? ............................... 4 What if I have C. diff while I am in a healthcare facility? ............................. 5 If I get C. diff, will I always have it? ........................................................... 6 Treatment How is C. diff treated? ............................................................................. 7 Prevention How can the spread of C. diff be prevented in healthcare facilities? ............ 8 How can I prevent spreading C. diff (and other germs) to others at home? .. 9 What is good hand hygiene? .................................................................... 9 What is the proper way to wash my hands? ............................................ 10 What is the proper way to clean? 
......................................................... -

Computer Matching Agreement

COMPUTER MATCHING AGREEMENT BETWEEN NEW tvIEXICO HUMAN SERVICES DEPARTMENT AND UNIVERSAL SERVICE ADMINISTRATIVE COMPANY AND THE FEDERAL COMMUNICATIONS COMMISSION INTRODUCTION This document constitutes an agreement between the Universal Service Administrative Company (USAC), the Federal Communications Commission (FCC or Commission), and the New Mexico Human Services Department (Department) (collectively, Parties). The purpose of this Agreement is to improve the efficiency and effectiveness of the Universal Service Fund (U SF’) Lifeline program, which provides support for discounted broadband and voice services to low-income consumers. Because the Parties intend to share information about individuals who are applying for or currently receive Lifeline benefits in order to verify the individuals’ eligibility for the program, the Parties have determined that they must execute a written agreement that complies with the Computer Matching and Privacy Protection Act of 1988 (CMPPA), Public Law 100-503, 102 Stat. 2507 (1988), which was enacted as an amendment to the Privacy Act of 1974 (Privacy Act), 5 U.S.C. § 552a. By agreeing to the terms and conditions of this Agreement, the Parties intend to comply with the requirements of the CMPPA that are codified in subsection (o) of the Privacy Act. 5 U.S.C. § 552a(o). As discussed in section 11.B. below, U$AC has been designated by the FCC as the permanent federal Administrator of the Universal Service funds programs, including the Lifeline program that is the subject of this Agreement. A. Title of Matching Program The title of this matching program as it will be reported by the FCC and the Office of Management and Budget (0MB) is as follows: “Computer Matching Agreement with the New Mexico Human Services Department.” B. -

Powerview Command Reference

PowerView Command Reference TRACE32 Online Help TRACE32 Directory TRACE32 Index TRACE32 Documents ...................................................................................................................... PowerView User Interface ............................................................................................................ PowerView Command Reference .............................................................................................1 History ...................................................................................................................................... 12 ABORT ...................................................................................................................................... 13 ABORT Abort driver program 13 AREA ........................................................................................................................................ 14 AREA Message windows 14 AREA.CLEAR Clear area 15 AREA.CLOSE Close output file 15 AREA.Create Create or modify message area 16 AREA.Delete Delete message area 17 AREA.List Display a detailed list off all message areas 18 AREA.OPEN Open output file 20 AREA.PIPE Redirect area to stdout 21 AREA.RESet Reset areas 21 AREA.SAVE Save AREA window contents to file 21 AREA.Select Select area 22 AREA.STDERR Redirect area to stderr 23 AREA.STDOUT Redirect area to stdout 23 AREA.view Display message area in AREA window 24 AutoSTOre .............................................................................................................................. -

Project1: Build a Small Scanner/Parser

Project1: Build A Small Scanner/Parser Introducing Lex, Yacc, and POET cs5363 1 Project1: Building A Scanner/Parser Parse a subset of the C language Support two types of atomic values: int float Support one type of compound values: arrays Support a basic set of language concepts Variable declarations (int, float, and array variables) Expressions (arithmetic and boolean operations) Statements (assignments, conditionals, and loops) You can choose a different but equivalent language Need to make your own test cases Options of implementation (links available at class web site) Manual in C/C++/Java (or whatever other lang.) Lex and Yacc (together with C/C++) POET: a scripting compiler writing language Or any other approach you choose --- must document how to download/use any tools involved cs5363 2 This is just starting… There will be two other sub-projects Type checking Check the types of expressions in the input program Optimization/analysis/translation Do something with the input code, output the result The starting project is important because it determines which language you can use for the other projects Lex+Yacc ===> can work only with C/C++ POET ==> work with POET Manual ==> stick to whatever language you pick This class: introduce Lex/Yacc/POET to you cs5363 3 Using Lex to build scanners lex.yy.c MyLex.l lex/flex lex.yy.c a.out gcc/cc Input stream a.out tokens Write a lex specification Save it in a file (MyLex.l) Compile the lex specification file by invoking lex/flex lex MyLex.l A lex.yy.c file is generated -

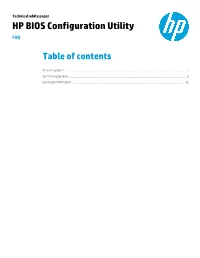

HP BIOS Configuration Utility FAQ

Technical white paper HP BIOS Configuration Utility FAQ Table of contents Feature updates ............................................................................................................................................................................ 2 Common questions ....................................................................................................................................................................... 2 For more information ................................................................................................................................................................. 12 Feature updates Version Features 4.0.2.1 Changes config file keyword to BIOSConfig. Changes commands from /cspwdfile and /nspwdfile to /cpwdfile and /npwdfile to match HP SSM. Adds /Unicode command to query if a system supports a Unicode password. Removes BIOS user commands. Maintains backwards compatibility. 3.0.13.1 Allows only one /cspwdfile command. Adds /WarningAsErr command to include warnings in the final BCU return code. 3.0.3.1 Changes commands from /cspwd and /nspwd command to /cspwdfile and /nspwdfile to read passwords from encrypted files created by HPQPswd.exe 2.60.13.1 Adds additional return codes when encountering WMI errors. 2.60.3 Add /SetDefaults command to reset BIOS to factory default. Supports configuration file comments. Common questions Q: The BIOS Configuration Utility (BCU) is an HP utility, so why it does not work on some HP platforms? A: BCU is a command-line utility for controlling various BIOS settings on a supported HP notebook, desktop, or workstation system. It requires a BIOS that supports HP WMI Namespace within the BIOS. HP began integrating CMI/WMI support directly into the BIOS during approximately 2006–2008 for managed business systems, which did not include consumer-based systems or entry-level units. If the system BIOS does not have the required WMI support, BCU does not work. 
This is not a failure of BCU. It is a limitation of the system BIOS that does include WMI support in the BIOS code.