Variations on a Theme

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Biological Monitoring of Surface Waters in New York State, 2019

NYSDEC SOP #208-19 Title: Stream Biomonitoring Rev: 1.2 Date: 03/29/19 Page 1 of 188 New York State Department of Environmental Conservation Division of Water Standard Operating Procedure: Biological Monitoring of Surface Waters in New York State March 2019 Note: Division of Water (DOW) SOP revisions from year 2016 forward will only capture the current year parties involved with drafting/revising/approving the SOP on the cover page. The dated signatures of those parties will be captured here as well. The historical log of all SOP updates and revisions (past & present) will immediately follow the cover page. NYSDEC SOP 208-19 Stream Biomonitoring Rev. 1.2 Date: 03/29/2019 Page 3 of 188 SOP #208 Update Log 1 Prepared/ Revision Revised by Approved by Number Date Summary of Changes DOW Staff Rose Ann Garry 7/25/2007 Alexander J. Smith Rose Ann Garry 11/25/2009 Alexander J. Smith Jason Fagel 1.0 3/29/2012 Alexander J. Smith Jason Fagel 2.0 4/18/2014 • Definition of a reference site clarified (Sect. 8.2.3) • WAVE results added as a factor Alexander J. Smith Jason Fagel 3.0 4/1/2016 in site selection (Sect. 8.2.2 & 8.2.6) • HMA details added (Sect. 8.10) • Nonsubstantive changes 2 • Disinfection procedures (Sect. 8) • Headwater (Sect. 9.4.1 & 10.2.7) assessment methods added • Benthic multiplate method added (Sect, 9.4.3) Brian Duffy Rose Ann Garry 1.0 5/01/2018 • Lake (Sect. 9.4.5 & Sect. 10.) assessment methods added • Detail on biological impairment sampling (Sect. -

Table of Contents 2

Southwest Association of Freshwater Invertebrate Taxonomists (SAFIT) List of Freshwater Macroinvertebrate Taxa from California and Adjacent States including Standard Taxonomic Effort Levels 1 March 2011 Austin Brady Richards and D. Christopher Rogers Table of Contents 2 1.0 Introduction 4 1.1 Acknowledgments 5 2.0 Standard Taxonomic Effort 5 2.1 Rules for Developing a Standard Taxonomic Effort Document 5 2.2 Changes from the Previous Version 6 2.3 The SAFIT Standard Taxonomic List 6 3.0 Methods and Materials 7 3.1 Habitat information 7 3.2 Geographic Scope 7 3.3 Abbreviations used in the STE List 8 3.4 Life Stage Terminology 8 4.0 Rare, Threatened and Endangered Species 8 5.0 Literature Cited 9 Appendix I. The SAFIT Standard Taxonomic Effort List 10 Phylum Silicea 11 Phylum Cnidaria 12 Phylum Platyhelminthes 14 Phylum Nemertea 15 Phylum Nemata 16 Phylum Nematomorpha 17 Phylum Entoprocta 18 Phylum Ectoprocta 19 Phylum Mollusca 20 Phylum Annelida 32 Class Hirudinea Class Branchiobdella Class Polychaeta Class Oligochaeta Phylum Arthropoda Subphylum Chelicerata, Subclass Acari 35 Subphylum Crustacea 47 Subphylum Hexapoda Class Collembola 69 Class Insecta Order Ephemeroptera 71 Order Odonata 95 Order Plecoptera 112 Order Hemiptera 126 Order Megaloptera 139 Order Neuroptera 141 Order Trichoptera 143 Order Lepidoptera 165 2 Order Coleoptera 167 Order Diptera 219 3 1.0 Introduction The Southwest Association of Freshwater Invertebrate Taxonomists (SAFIT) is charged through its charter to develop standardized levels for the taxonomic identification of aquatic macroinvertebrates in support of bioassessment. This document defines the standard levels of taxonomic effort (STE) for bioassessment data compatible with the Surface Water Ambient Monitoring Program (SWAMP) bioassessment protocols (Ode, 2007) or similar procedures. -

Water Bug ID Guide

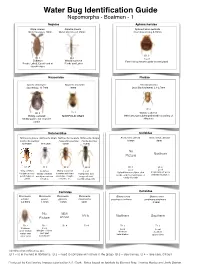

Water Bug Identification Guide Nepomorpha - Boatmen - 1 Nepidae Aphelocheridae Nepa cinerea Ranatra linearis Aphelocheirus aestivalis Water Scorpion, 20mm Water Stick Insect, 35mm River Saucer bug, 8-10mm ID 1 ID 1 ID 1 Local Common Widely scattered Fast flowing streams under stones/gravel Ponds, Lakes, Canals and at Ponds and Lakes stream edges Naucoridae Pleidae Ilycoris cimicoides Naucoris maculata Plea minutissima Saucer bug, 13.5mm 10mm Least Backswimmer, 2.1-2.7mm ID 1 ID 1 ID 2 Widely scattered Widely scattered NORFOLK ONLY Often amongst submerged weed in a variety of Muddy ponds and stagnant stillwaters canals Notonectidae Corixidae Notonecta glauca Notonecta viridis Notonecta maculata Notonecta obliqua Arctocorisa gemari Arctocorisa carinata Common Backswimmer Peppered Backswimmer Pied Backswimmer 8.8mm 9mm 14-16mm 13-15mm 15mm 15mm No Northern Picture ID 2 ID 2 ID 2 ID 2 ID 3 ID 3 Local Local Very common Common Widely scattered Local Upland limestone lakes, dew In upland peat pools Ubiquitous in all Variety of waters In waters with hard Peat ponds, acid ponds, acid moorland lakes, or with little vegetation ponds, lakes or usually more base substrates, troughs, bog pools and sandy silt ponds canals rich sites concrete, etc. recently clay ponds Corixidae Corixidae Micronecta Micronecta Micronecta Micronecta Glaenocorisa Glaenocorisa scholtzi poweri griseola minutissima propinqua cavifrons propinqua propinqua 2-2.5mm 1.8mm 1.8mm 2mm 8.3mm No NEW RARE Northern Southern Picture ARIVAL ID 2 ID 2 ID 4 ID 4 ID 3 ID 3 Local Common Local Local Margins of rivers open shallow Northern Southern and quiet waters over upland lakes upland lakes silt or sand backwaters Identification difficulties are: ID 1 = id in the field in Northants. -

In a Western Balkan Peat Bog

A peer-reviewed open-access journal ZooKeys 637: 135–149Spatial (2016) distribution and seasonal changes of mayflies( Insecta, Ephemeroptera)... 135 doi: 10.3897/zookeys.637.10359 RESEARCH ARTICLE http://zookeys.pensoft.net Launched to accelerate biodiversity research Spatial distribution and seasonal changes of mayflies (Insecta, Ephemeroptera) in a Western Balkan peat bog Marina Vilenica1, Andreja Brigić2, Mladen Kerovec2, Sanja Gottstein2, Ivančica Ternjej2 1 University of Zagreb, Faculty of Teacher Education, Petrinja, Croatia 2 University of Zagreb, Faculty of Science, Department of Biology, Zagreb, Croatia Corresponding author: Marina Vilenica ([email protected]) Academic editor: B. Price | Received 31 August 2016 | Accepted 9 November 2016 | Published 2 December 2016 http://zoobank.org/F3D151AA-8C93-49E4-9742-384B621F724E Citation: Vilenica M, Brigić A, Kerovec M, Gottstein S, Ternjej I (2016) Spatial distribution and seasonal changes of mayflies (Insecta, Ephemeroptera) in a Western Balkan peat bog. ZooKeys 637: 135–149. https://doi.org/10.3897/ zookeys.637.10359 Abstract Peat bogs are unique wetland ecosystems of high conservation value all over the world, yet data on the macroinvertebrates (including mayfly assemblages) in these habitats are still scarce. Over the course of one growing season, mayfly assemblages were sampled each month, along with other macroinvertebrates, in the largest and oldest Croatian peat bog and an adjacent stream. In total, ten mayfly species were recorded: two species in low abundance in the peat bog, and nine species in significantly higher abundance in the stream. Low species richness and abundance in the peat bog were most likely related to the harsh environ- mental conditions and mayfly habitat preferences.In comparison, due to the more favourable habitat con- ditions, higher species richness and abundance were observed in the nearby stream. -

Full Issue for TGLE Vol. 53 Nos. 1 & 2

The Great Lakes Entomologist Volume 53 Numbers 1 & 2 - Spring/Summer 2020 Numbers Article 1 1 & 2 - Spring/Summer 2020 Full issue for TGLE Vol. 53 Nos. 1 & 2 Follow this and additional works at: https://scholar.valpo.edu/tgle Part of the Entomology Commons Recommended Citation . "Full issue for TGLE Vol. 53 Nos. 1 & 2," The Great Lakes Entomologist, vol 53 (1) Available at: https://scholar.valpo.edu/tgle/vol53/iss1/1 This Full Issue is brought to you for free and open access by the Department of Biology at ValpoScholar. It has been accepted for inclusion in The Great Lakes Entomologist by an authorized administrator of ValpoScholar. For more information, please contact a ValpoScholar staff member at [email protected]. et al.: Full issue for TGLE Vol. 53 Nos. 1 & 2 Vol. 53, Nos. 1 & 2 Spring/Summer 2020 THE GREAT LAKES ENTOMOLOGIST PUBLISHED BY THE MICHIGAN ENTOMOLOGICAL SOCIETY Published by ValpoScholar, 1 The Great Lakes Entomologist, Vol. 53, No. 1 [], Art. 1 THE MICHIGAN ENTOMOLOGICAL SOCIETY 2019–20 OFFICERS President Elly Maxwell President Elect Duke Elsner Immediate Pate President David Houghton Secretary Adrienne O’Brien Treasurer Angie Pytel Member-at-Large Thomas E. Moore Member-at-Large Martin Andree Member-at-Large James Dunn Member-at-Large Ralph Gorton Lead Journal Scientific Editor Kristi Bugajski Lead Journal Production Editor Alicia Bray Associate Journal Editor Anthony Cognato Associate Journal Editor Julie Craves Associate Journal Editor David Houghton Associate Journal Editor Ronald Priest Associate Journal Editor William Ruesink Associate Journal Editor William Scharf Associate Journal Editor Daniel Swanson Newsletter Editor Crystal Daileay and Duke Elsner Webmaster Mark O’Brien The Michigan Entomological Society traces its origins to the old Detroit Entomological Society and was organized on 4 November 1954 to “. -

A428 Black Cat to Caxton Gibbet Improvements

A428 Black Cat to Caxton Gibbet improvements TR010044 Volume 6 6.3 Environmental Statement Appendix 8.17: Aquatic Invertebrates Planning Act 2008 Regulation 5(2)(a) Infrastructure Planning (Applications: Prescribed Forms and Procedure) Regulations 2009 26 February 2021 PCF XXX PRODUCT NAME | VERSION 1.0 | 25 SEPTEMBER 2013 | 5124654 A428 Black Cat to Caxton Gibbet improvements Environmental Statement – Appendix 8.17: Aquatic Invertebrates Infrastructure Planning Planning Act 2008 The Infrastructure Planning (Applications: Prescribed Forms and Procedure) Regulations 2009 A428 Black Cat to Caxton Gibbet Improvements Development Consent Order 202[ ] Appendix 8.17: Aquatic Invertebrates Regulation Number Regulation 5(2)(a) Planning Inspectorate Scheme TR010044 Reference Application Document Reference TR010044/APP/6.3 Author A428 Black Cat to Caxton Gibbet improvements Project Team, Highways England Version Date Status of Version Rev 1 26 February 2021 DCO Application Planning Inspectorate Scheme Ref: TR010044 Application Document Ref: TR010044/APP/6.3 A428 Black Cat to Caxton Gibbet improvements Environmental Statement – Appendix 8.17: Aquatic Invertebrates Table of contents Chapter Pages 1 Introduction 1 1.1 Background and scope of works 1 2 Legislation and policy 3 2.1 Legislation 3 2.2 Policy framework 3 3 Methods 6 3.1 Survey Area 6 3.2 Desk study 6 3.3 Field survey: watercourses 7 3.4 Field survey: ponds 7 3.5 Biodiversity value 10 3.6 Competence of surveyors 12 3.7 Limitations 13 4 Results 14 4.1 Desk study 14 4.2 Field survey 14 5 Summary and conclusion 43 6 References 45 7 Figure 1 47 Table of Tables Table 1.1: Summary of relevant legislation for aquatic invertebrates .................................. -

A Review of the Hemiptera of Great Britain: the Aquatic and Semi-Aquatic Bugs

Natural England Commissioned Report NECR188 A review of the Hemiptera of Great Britain: The Aquatic and Semi-aquatic Bugs Dipsocoromorpha, Gerromorpha, Leptopodomorpha & Nepomorpha Species Status No.24 First published 20 November 2015 www.gov.uk/natural -england Foreword Natural England commission a range of reports from external contractors to provide evidence and advice to assist us in delivering our duties. The views in this report are those of the authors and do not necessarily represent those of Natural England. Background Making good decisions to conserve species should primarily be based upon an objective process of determining the degree of threat to the survival of a species. The recognised international approach to undertaking this is by assigning the species to one of the IUCN threat categories. This report was commissioned to update the national status of aquatic and semi-aquatic bugs using IUCN methodology for assessing threat. It covers all species of aquatic and semi-aquatic bugs (Heteroptera) in Great Britain, identifying those that are rare and/or under threat as well as non-threatened and non-native species. Reviews for other invertebrate groups will follow. Natural England Project Manager - Jon Webb, [email protected] Contractor - A.A. Cook (author) Keywords - invertebrates, red list, IUCN, status reviews, Heteroptera, aquatic bugs, shore bugs, IUCN threat categories, GB rarity status Further information This report can be downloaded from the Natural England website: www.gov.uk/government/organisations/natural-england. For information on Natural England publications contact the Natural England Enquiry Service on 0845 600 3078 or e-mail [email protected]. -

Bibliographia Trichopterorum

Entry numbers checked/adjusted: 23/10/12 Bibliographia Trichopterorum Volume 4 1991-2000 (Preliminary) ©Andrew P.Nimmo 106-29 Ave NW, EDMONTON, Alberta, Canada T6J 4H6 e-mail: [email protected] [As at 25/3/14] 2 LITERATURE CITATIONS [*indicates that I have a copy of the paper in question] 0001 Anon. 1993. Studies on the structure and function of river ecosystems of the Far East, 2. Rep. on work supported by Japan Soc. Promot. Sci. 1992. 82 pp. TN. 0002 * . 1994. Gunter Brückerman. 19.12.1960 12.2.1994. Braueria 21:7. [Photo only]. 0003 . 1994. New kind of fly discovered in Man.[itoba]. Eco Briefs, Edmonton Journal. Sept. 4. 0004 . 1997. Caddis biodiversity. Weta 20:40-41. ZRan 134-03000625 & 00002404. 0005 . 1997. Rote Liste gefahrdeter Tiere und Pflanzen des Burgenlandes. BFB-Ber. 87: 1-33. ZRan 135-02001470. 0006 1998. Floods have their benefits. Current Sci., Weekly Reader Corp. 84(1):12. 0007 . 1999. Short reports. Taxa new to Finland, new provincial records and deletions from the fauna of Finland. Ent. Fenn. 10:1-5. ZRan 136-02000496. 0008 . 2000. Entomology report. Sandnats 22(3):10-12, 20. ZRan 137-09000211. 0009 . 2000. Short reports. Ent. Fenn. 11:1-4. ZRan 136-03000823. 0010 * . 2000. Nattsländor - Trichoptera. pp 285-296. In: Rödlistade arter i Sverige 2000. The 2000 Red List of Swedish species. ed. U.Gärdenfors. ArtDatabanken, SLU, Uppsala. ISBN 91 88506 23 1 0011 Aagaard, K., J.O.Solem, T.Nost, & O.Hanssen. 1997. The macrobenthos of the pristine stre- am, Skiftesaa, Haeylandet, Norway. Hydrobiologia 348:81-94. -

Advances in the Study of Behavior, Volume 31.Pdf

Advances in THE STUDY OF BEHAVIOR VOLUME 31 Advances in THE STUDY OF BEHAVIOR Edited by PETER J. B. S LATER JAY S. ROSENBLATT CHARLES T. S NOWDON TIMOTHY J. R OPER Advances in THE STUDY OF BEHAVIOR Edited by PETER J. B. S LATER School of Biology University of St. Andrews Fife, United Kingdom JAY S. ROSENBLATT Institute of Animal Behavior Rutgers University Newark, New Jersey CHARLES T. S NOWDON Department of Psychology University of Wisconsin Madison, Wisconsin TIMOTHY J. R OPER School of Biological Sciences University of Sussex Sussex, United Kingdom VOLUME 31 San Diego San Francisco New York Boston London Sydney Tokyo This book is printed on acid-free paper. ∞ Copyright C 2002 by ACADEMIC PRESS All Rights Reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from the Publisher. The appearance of the code at the bottom of the first page of a chapter in this book indicates the Publisher’s consent that copies of the chapter may be made for personal or internal use of specific clients. This consent is given on the condition, however, that the copier pay the stated per copy fee through the Copyright Clearance Center, Inc. (222 Rosewood Drive, Danvers, Massachusetts 01923), for copying beyond that permitted by Sections 107 or 108 of the U.S. Copyright Law. This consent does not extend to other kinds of copying, such as copying for general distribution, for advertising or promotional purposes, for creating new collective works, or for resale. -

Survey of Ponds at Gallows Bridge Farm Nature Reserve, Buckinghamshire

A survey of ponds at Gallows Bridge Farm Nature Reserve, Buckinghamshire A report for the Freshwater Habitats Trust Martin Hammond Ecology [email protected] September 2016 1. Introduction Gallows Bridge Farm forms part of the Upper Ray Meadows complex owned by the Berkshire, Buckinghamshire and Oxfordshire Wildlife Trust (BBOWT). Six ponds were surveyed on 19th and 20th September 2016. A range of relict stream channels and foot drains were dry at the time of the survey, as were three small ponds in the southern meadows (Ponds 16 to 18, around SP 664 198). Tetchwick Brook, a small tributary of the River Ray, was sampled briefly at three locations to provide additional records. Each pond was surveyed as per the PSYM methodology (Environment Agency, 2002), i.e. three minutes netting time divided equally between each of the meso-habitats present plus one minute examining the water surface and submerged debris. Where it was considered that additional sampling effort was warranted, additional netting was carried out but the taxa thus found were recorded separately. As far as possible, all material was identified to species level and raw data have been provided in spreadsheet format. 2. The ponds surveyed Pond Field: Main Pond (SP 669 199) The Pond Field contains around 30 ponds created by Freshwater Habitats Trust. The Main Pond is the permanent pond nearest the car park. It is a shallow, clay-bedded pond with patchy Broad-leaved Pondweed Potamogeton natans and stoneworts Chara spp., with low emergent vegetation around its banks. Nine-spined Sticklebacks are present. A total of 45 species were recorded (40 in the PSYM sample), notably including the Nationally Scarce reed-beetle Donacia thalassina. -

Organism-Substrate Relationships in Lowland Streams

H.H.Tolkamp Hature Conservation Department, Agricultural University, Wageningen Organism-substrate relationships in lowland streams IpudooI Centre for Agricultural Publishing and Documentation Wageningen - 1980 ,y* <3 "3! Communication NatureConservatio n Department211 . ISBN9 022 0075 96 Theautho r graduatedo n6 Februar y 1981a sDocto r ind e Landbouwwetenschappen atth eAgricultura l University,Wageningen ,th eNetherlands ,o na thesi swit h thesam etitl ean dcontents . ^)Centr e forAgricultura l Publishing andDocumentation ,1980 . Nopar to fthi sboo kma yb ereproduce do rpublishe d inan y form,b yprint , photoprint,microfil mo ran yothe rmean swithou twritte n permission fromth e publishers. Abstract Tolkamp, H.H. (1980)Organism-substrat e relationships inlowlan d streams.Agric . Res.Rep . (Versl.landbouwk .Onderz. )907 ,ISB N9 022 007S 96 , (xi)+ 21 1p. ,8 0 tables,4 3 figs.,31 9refs. ,Eng .an dDutc hsummaries , 14appendices . Also:Doctora l thesis,Wageningen . Afiel d and laboratory studyo n themicrodistributio n ofbotto m dwellingmacro - invertebrates toinvestigat eth erol eo fth estrea msubstrat e inth edevelopmen t andpreservatio no fth emacroinvertebrat ecommunitie s innatural ,undisturbe d low land streams isdescribed .Fiel ddat ao nbotto m substratesan d faunawer ecollecte d between 1975an d 1978fro mtw oDutc hlowlan d streams.Substrate swer e characterized by thenatur ean dth eamoun to forgani c detritus and theminera l particlesizes :i n a fieldclassificatio no n thebasi so fth evisuall y dominantparticl e sizes;i na grain-size classificationo nth ebasi so fexac t particle-size analysis inth elabora tory.Substrat epreferenc e for8 4macroinvertebrat especie swa sdemonstrate d using the Indexo fRepresentation . Substrate-selection experimentswer econducte d ina laborator y stream forthre e Trichopteraspecie s (Mioropterna sequax, Chaetopteryx villosa and Seriaostoma per sonation) andon eEphemeropter aspecie s (Ephemera daniea). -

Het News Issue 3

Issue 3 Spring 2004 Het News nd 2 Series Newsletter of the Heteroptera Recording Schemes Editorial: There is a Dutch flavour to this issue which we hope will be of interest. After all, The Netherlands is not very far as the bug flies and with a following wind there could easily be immigrants reaching our shores at any time. We have also introduced an Archive section, for historical articles, to appear when space allows. As always we are very grateful to all the providers of material for this issue and, for the next issue, look forward to hearing about your 2004 (& 2003) exploits, exciting finds, regional news, innovative gadgets etc. Sheila Brooke 18 Park Hill Toddington Dunstable Beds LU5 6AW [email protected] Bernard Nau 15 Park Hill Toddington Dunstable Beds LU5 6AW [email protected] Contents Editorial .................................................................... 1 Forthcoming & recent events ................................. 7 Dutch Bug Atlas....................................................... 1 Checklist of British water bugs .............................. 8 Recent changes in the Dutch Heteroptera............. 2 The Lygus situation............................................... 11 Uncommon Heteroptera from S. England ............. 5 Web Focus.............................................................. 12 News from the Regions ........................................... 6 From the Archives ................................................. 12 Gadget corner – Bug Mailer.....................................