UNIVERSITY of CALIFORNIA RIVERSIDE Learning

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

PALATABILITAS KUPU-KUPU (LEPIDOPTERA: NYMPHALIDAE DAN PAPILIONIDAE) SEBAGAI PAKAN Tarsius Fuscus DI PENANGKARAN

Prosiding Seminar Nasional Biologi dan Pembelajarannya, Universitas Negeri Medan, 12 October 2018. ISSN 2656-1670

PALATABILITAS KUPU-KUPU (LEPIDOPTERA: NYMPHALIDAE DAN PAPILIONIDAE) SEBAGAI PAKAN Tarsius fuscus DI PENANGKARAN

PALATABILITY OF BUTTERFLIES (LEPIDOPTERA: NYMPHALIDAE AND PAPILIONIDAE) AS FEED FOR Tarsius fuscus IN CAPTIVITY

Indra A.S.L.P. Putri, Balai Penelitian dan Pengembangan Lingkungan Hidup dan Kehutanan Makassar, Jalan Perintis Kemerdekaan Km 16.5, Makassar. Tel. +62411504049 and +62411504058, e-mail: [email protected]

ABSTRACT Tarsius fuscus is an animal endemic to Sulawesi. It is a nocturnal primate that consumes insects in its natural habitat. However, the palatability of butterflies of the families Nymphalidae and Papilionidae as feed for Tarsius fuscus is unknown. These butterflies are abundant around the captive enclosure, which lies in the natural habitat of Tarsius fuscus, so they are a potential natural feed. This study aims to determine the palatability of Nymphalidae and Papilionidae butterflies as feed for Tarsius fuscus. This information is important for providing natural feed and for avoiding the dependence of captive Tarsius fuscus on grasshoppers. Palatability was tested with a free-choice feeding method, and the diet was given ad libitum. The results showed that 12 species of Nymphalidae and 9 species of Papilionidae were palatable to Tarsius fuscus.

Keywords: diet, Tarsius fuscus, Lepidoptera, captivity

ABSTRAK Tarsius fuscus is one of the endemic animals of Sulawesi. This nocturnal primate is known to consume various insect species in its natural habitat. However, the palatability of butterflies of the families Nymphalidae and Papilionidae to Tarsius fuscus is not yet known. These butterflies are known to be fairly abundant around the captive enclosure, which was built in the natural habitat of Tarsius fuscus, so they have high potential as natural feed.

K & K Imported Butterflies

K & K Imported Butterflies
www.importedbutterflies.com

Owners:
Ken Werner, 4075 12th Ave NE, Naples, FL 34120. Office 239-353-9492, cell 239-404-0016, fax 239-353-9492, [email protected]
Kraig Anderson, 12160 Scandia Trail North, Scandia, MN 55073. Cell 612-961-0292, cell 651-269-6913, fax 651-433-2482, [email protected]

Other companies: Gulf Coast Butterflies (supplier of North American butterflies) and Spineless Wonders (consulting and construction of unique butterfly houses, and special events).

Exotic Butterfly and Insect list
North American Butterfly list

This is a complete list of K & K Imported Butterflies. We are also in the process of adding new species that have never been imported and exhibited in the United States. You will need to apply for an interstate transport permit to get the exotic species from any domestic distributor. We will be happy to assist you in any way with filling out your PPQ526. Thank you, Kraig and Ken

There is a distinction between import and interstate permits. The two functions/activities cannot be on one permit. You are working with an import permit, so all of the interstate functions are blocked. If you have only a permit to import, you will need to apply for an interstate transport permit to get the very same species from a domestic distributor. If you have an import permit (or any other permit), you can go into your ePermits account, go to "my applications", and copy the application that was originally submitted, which produces a duplicate application. Then go into the "Origination Point" screen and select the "Change Movement Type" button.

Chromosomal Studies on Interspeci Fic Hybrids of Butterflies (Papilionidae, Lepidoptera)

No. 10] Proc. Japan Acad., 52 (1976) 567

153. Chromosomal Studies on Interspecific Hybrids of Butterflies (Papilionidae, Lepidoptera). VII. Studies in Crosses among P. memnon, P. ascalaphus, P. polymnestor, and P. rumanzovia

By Kodo MAEKI*) and Shigeru A. AE**) (Communicated by Sajiro MAKINO, M. J. A., Dec. 13, 1976)

Since McClung (1908) suggested in a pioneer work on orthopteran chromosomes the correlation that could be expected to exist between the chromosomes and the structural organization of organisms, the behavior and morphological changes of chromosomes during phylogenesis has called prime interest of biologists, particularly in the field of cytogenetics. We are intending, in a series of cytogenetic studies of the butterfly genus Papilio, to provide critical data essential for understanding the interspecific relationships of these insects in terms of their genetic makeup, in an approach from hybridization experiments. Several reports along this line have been published to account for chromosomal mechanism in relation to species-differentiation or interspecific relationship, inquiring into the meiotic behavior of chromosomes in the following hybrids from crosses among P. polyctor, P. bianor, P. paris, P. maackii, P. polytes, P. helenus, P. protenor, P. nepheles, P. aegeus, P. fuscus, P. macilentus, and P. memnon (Maeki and Ae 1966, 1970, 1975, 1976a, 1976b, 1976c). The present article presents further data on the meiotic features, particularly of chromosome pairing in male individuals, derived from the following hybrids: P. polymnestor x P. memnon, P. polymnestor x P. rumanzovia, P. memnon x P. rumanzovia, and P. memnon x P. ascalaphus. The hybrid specimens were obtained by means of artificial fertilization by Ae (1967, 1968, 1971, 1974).

A Bibliography of the Catalogs, Lists, Faunal and Other Papers on The

A Bibliography of the Catalogs, Lists, Faunal and Other Papers on the Butterflies of North America North of Mexico Arranged by State and Province (Lepidoptera: Rhopalocera)

WILLIAM D. FIELD, CYRIL F. DOS PASSOS and JOHN H. MASTERS

SMITHSONIAN CONTRIBUTIONS TO ZOOLOGY • NUMBER 157

SERIAL PUBLICATIONS OF THE SMITHSONIAN INSTITUTION

The emphasis upon publications as a means of diffusing knowledge was expressed by the first Secretary of the Smithsonian Institution. In his formal plan for the Institution, Joseph Henry articulated a program that included the following statement: "It is proposed to publish a series of reports, giving an account of the new discoveries in science, and of the changes made from year to year in all branches of knowledge." This keynote of basic research has been adhered to over the years in the issuance of thousands of titles in serial publications under the Smithsonian imprint, commencing with Smithsonian Contributions to Knowledge in 1848 and continuing with the following active series:

Smithsonian Annals of Flight
Smithsonian Contributions to Anthropology
Smithsonian Contributions to Astrophysics
Smithsonian Contributions to Botany
Smithsonian Contributions to the Earth Sciences
Smithsonian Contributions to Paleobiology
Smithsonian Contributions to Zoology
Smithsonian Studies in History and Technology

In these series, the Institution publishes original articles and monographs dealing with the research and collections of its several museums and offices and of professional colleagues at other institutions of learning. These papers report newly acquired facts, synoptic interpretations of data, or original theory in specialized fields. These publications are distributed by mailing lists to libraries, laboratories, and other interested institutions and specialists throughout the world.

The abundance and diversity of butterflies (Lepidoptera: Rhopalocera) in Talaud Islands, North Sulawesi, Indonesia

BIODIVERSITAS, ISSN: 1412-033X, E-ISSN: 2085-4722. Volume 20, Number 11, November 2019, Pages: 3275-3283. DOI: 10.13057/biodiv/d201121

The abundance and diversity of butterflies (Lepidoptera: Rhopalocera) in Talaud Islands, North Sulawesi, Indonesia

RONI KONERI1, MEIS J. NANGOY2, PARLUHUTAN SIAHAAN1

1Department of Biology, Faculty of Mathematics and Natural Sciences, Universitas Sam Ratulangi. Jl. Kampus Bahu, Manado 95115, North Sulawesi, Indonesia, Tel./Fax.: +62-431-864386; Fax.: +62-431-85371, email: [email protected], [email protected]
2Department of Animal Production, Faculty of Animal Science, Universitas Sam Ratulangi. Jl. Kampus Bahu, Manado 95115, North Sulawesi, Indonesia, Tel./Fax.: +62-431-864386; Fax.: +62-431-85371, email: [email protected]

Manuscript received: 8 September 2019. Revision accepted: 22 October 2019.

Abstract. Koneri R, Nangoy MJ, Siahaan P. 2019. The abundance and diversity of butterflies (Lepidoptera: Rhopalocera) in Talaud Islands, North Sulawesi, Indonesia. Biodiversitas 20: 3275-3283. Butterflies play a number of roles in the ecosystem. They help pollination and natural propagation and are also an important element of the food chain as prey for bats, birds, and other insectivorous animals. This study aimed to analyze the abundance and diversity of butterflies (Lepidoptera: Rhopalocera) in the Talaud Islands of North Sulawesi, Indonesia. The sampling method was scan sampling along a transect line in three habitat types, namely forest edge, farmland, and shrubland. Species diversity was determined using the Shannon-Wiener diversity index, species richness was calculated using the Margalef species richness index (R1), and species evenness was calculated using the Shannon evenness index (E).
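The three indices named in this abstract can be illustrated with a minimal sketch. It assumes the common textbook forms with natural logarithms: H' = -Σ p_i ln p_i, R1 = (S - 1) / ln N, and E = H' / ln S, where S is the number of species and N the total number of individuals. The function names and the sample counts are hypothetical and are not taken from the paper, which may use a different logarithm base or variant.

```python
import math


def shannon_wiener(counts):
    """Shannon-Wiener diversity H' = -sum(p_i * ln p_i) over species present."""
    total = sum(counts)
    if total <= 0:
        raise ValueError("counts must contain at least one individual")
    return -sum((c / total) * math.log(c / total) for c in counts if c > 0)


def margalef_richness(counts):
    """Margalef richness R1 = (S - 1) / ln N; returns 0.0 when N <= 1."""
    species = sum(1 for c in counts if c > 0)
    total = sum(counts)
    if total <= 1:
        return 0.0  # ln N is zero or undefined, so richness is reported as 0
    return (species - 1) / math.log(total)


def shannon_evenness(counts):
    """Shannon evenness E = H' / ln S; returns 0.0 when only one species is present."""
    species = sum(1 for c in counts if c > 0)
    if species <= 1:
        return 0.0  # evenness is not meaningful for a single species
    return shannon_wiener(counts) / math.log(species)


if __name__ == "__main__":
    # Hypothetical individuals per species on one transect (illustrative only).
    transect = [34, 21, 8, 5, 2]
    print("H' =", round(shannon_wiener(transect), 3))
    print("R1 =", round(margalef_richness(transect), 3))
    print("E  =", round(shannon_evenness(transect), 3))
```

Evenness ranges from 0 to 1 and reaches 1 only when all species are equally abundant, which is why it is usually reported alongside H' and not in place of it.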

Kelimpahan Kupu-Kupu (Lepidoptera) di Kawasan Cagar Alam Gunung Ambang Sulawesi Utara

KELIMPAHAN KUPU-KUPU (LEPIDOPTERA) DI KAWASAN CAGAR ALAM GUNUNG AMBANG SULAWESI UTARA

Roni Koneri*, Parluhutan Siahaan. Jurusan Biologi, FMIPA, Universitas Sam Ratulangi, Jalan Kampus Bahu, Manado. *Corresponding author, Tel. +62-0431-827932, Fax. +62-0431-822568, [email protected]

Abstract. Gunung Ambang Nature Reserve is a protected area that serves to conserve the flora and fauna living in it. This study aimed to analyze the abundance of butterflies (Lepidoptera) in various habitat types in the Gunung Ambang Nature Reserve, North Sulawesi. The habitats sampled were primary forest, secondary forest, plantation and scrub. Sampling used a sweeping technique along a 1000-meter line transect placed at random in each habitat type. The survey recorded 5 families, namely Nymphalidae, Papilionidae, Pieridae, Riodinidae and Satyridae, comprising 37 species and 560 individuals. The most abundant family was Nymphalidae (72.50%), and the most abundant species was Lohara dexaminus (24.64%). Butterfly abundance was highest in scrub habitat and lowest in the plantation. The results are expected to serve as baseline data on the abundance of butterflies in North Sulawesi.

Keywords: Forest, butterflies, Nymphalidae

INTRODUCTION High species diversity is characteristic of tropical rainforest. Butterflies are among the fauna found in tropical forest. The presence of butterflies in a forest ecosystem is very important. [...] 24% are endemic butterflies (Pegie and Amir, 2006). Gunung Ambang Nature Reserve is one of the conservation areas in North Sulawesi and

The Biodiversity Value of the Buton Forests

The biodiversity value of the Buton Forests
An Operation Wallacea Report

Contents
Executive summary
Section 1 – Study site overview
Section 2 – Mammals
Section 3 – Birds
Section 4 – Herpetofauna
Section 5 – Freshwater fish
Section 6 – Invertebrates
Section 7 – Botany
Section 8 – Further work
References
Appendices

Executive summary
- Buton, the largest satellite island of mainland Sulawesi, lies off the coast of the SE peninsula and retains large areas of lowland tropical forest.
- The biodiversity of these forests possesses an extremely high conservation value. To date, a total of 50 mammal species, 139 bird species, 60 herpetofauna species, 46 freshwater fish species, 184 butterfly species and 223 tree species have been detected in the study area.
- This diversity is remarkably representative of species assemblages across Sulawesi as a whole, given the size of the study area. Buton comprises only around 3% of the total land area of the Sulawesi sub-region, but 62% of terrestrial birds, 42% of snakes and 33% of butterflies known to occur in the region have been found here.
- Faunal groups in the forests of Buton also display high incidence of endemism; 83% of native non-volant mammals,

Distribusi Dan Keanekaragaman Kupu-Kupu (Lepidoptera) Di Gunung Manado Tua, Kawasan Taman Nasional Laut Bunaken, Sulawesi Utara

DISTRIBUSI DAN KEANEKARAGAMAN KUPU-KUPU (LEPIDOPTERA) DI GUNUNG MANADO TUA, KAWASAN TAMAN NASIONAL LAUT BUNAKEN, SULAWESI UTARA

Roni Koneri* and Saroyo**. Jurusan Biologi, FMIPA, Universitas Sam Ratulangi, Jalan Kampus Bahu, Manado 95115. Tel. +62-0431-827932, Fax. +62-0431-822568, E-mail: *[email protected]; **[email protected]

Abstract. Butterflies (Lepidoptera) are beneficial as pollinators, silk producers and indicators of environmental quality, and are appreciated for their aesthetic value. The objective of this research was to analyze the distribution and diversity of butterflies (Lepidoptera) on Manado Tua Mountain, in the Bunaken National Marine Park, North Sulawesi. The research was conducted over two months, June and July 2011, using a sweeping technique along a 1000-meter line transect placed at random in each habitat type (primary forest, secondary forest, gardens and shrubs). The survey found 28 species from 4 families (Papilionidae, Nymphalidae, Lycaenidae, and Pieridae). The distribution of each butterfly species differs among habitats. The highest diversity index value (H' = 2.16) was found in shrubs, while the lowest (H' = 0.33) was found in primary forest. This research is expected to provide basic data on butterfly diversity and on the effects of habitat change on the diversity and distribution of butterflies in North Sulawesi.

Keywords: Distribution, diversity, butterfly, Manado Tua, North Sulawesi.

1. Introduction
Mount Manado Tua is located on Manado Tua Island and lies within Bunaken National Marine Park. The island is the largest of the group of islands within the bounds of Manado Bay. [...] butterflies also have economic value, especially as adults, which are collected and used as material for patterns and art (Borror et al., 1996). Like other animals, butterflies also face the threat of scarcity and extinction,

Systematic Bibliography of the Butterflies of the United States And

Butterflies of the United States and Canada 497

SYSTEMATIC BIBLIOGRAPHY OF THE BUTTERFLIES OF THE UNITED STATES AND CANADA
(Entries that were not examined are marked with an asterisk)

--A--

AARON, EUGENE MURRAY. 1884a. Erycides okeechobee, Worthington. Papilio 4(1): 22. {[20] Feb 1884; cited in Papilio 4(3): 62}
AARON, EUGENE MURRAY. 1884b. Eudamus tityrus, Fabr., and its varieties. Papilio 4(2): 26-30. {Feb, (15 Mar) 1884; cited in Papilio 4(3): 62}
AARON, EUGENE MURRAY. [1885]. Notes and queries. Pamphila baracoa, Luc. in Florida. Papilio 4(7/8): 150. {Sep-Oct 1884 [22 Jan 1885]; cited in Papilio 4(9/10): 189}
AARON, EUGENE MURRAY. 1888. The determination of Hesperidae. Entomologica Americana 4(7): 142-143.
[...] an Society 33(2): 95-203, 1 fig., 65 tbls. {[25] Feb 1988}
ACKERY, PHILLIP RONALD & ROBERT L. SMILES. 1976. An illustrated list of the type-specimens of the Heliconiinae (Lepidoptera: Nymphalidae) in the British Museum (Natural History). Bulletin of the British Museum (Natural History)(Entomology) 32(5): 171-214, 39 pls. {Jan 1976}
ACKERY, PHILLIP RONALD, RIENK DE JONG & RICHARD IRWIN VANE-WRIGHT. 1999. 16. The butterflies: Hedyloidea, Hesperioidea and Papilionoidea. Pp. 263-300, 9 figs., in: N. P. Kristensen (Ed.), The Lepidoptera, moths and butterflies. Volume 1: Evolution, Systematics and Biogeography. Handbuch der Zoologie 4(35): i-x, 1-487. {1999}
ACKERY, PHILLIP RONALD & RICHARD IRWIN VANE-WRIGHT. 1984. Milkweed butterflies, their cladistics and biology. Being an account of the natural history of the Danainae, a subfamily of the Lepidoptera, Nymphalidae. London/Ithaca; British Museum (Nat-

Spring 2021 BUTTERFLIES & INSECTS

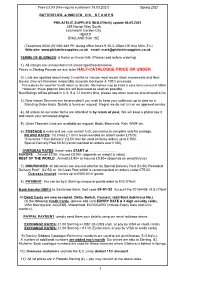

Price £3.00 (free to regular customers 15.03.2021)

Spring 2021 BUTTERFLIES & INSECTS ON STAMPS

PHILATELIC SUPPLIES (M.B.O'Neill), update 06.05.2021
359 Norton Way South, Letchworth Garden City, HERTS, ENGLAND SG6 1SZ
Telephone 0044-(0)1462-684191 during office hours, 9.30-3.00pm UK time, Mon.-Fri.
Web-site: www.philatelicsupplies.co.uk email: [email protected]

TERMS OF BUSINESS and notes on these lists (please read before ordering):
1) All stamps are unmounted mint unless specified otherwise. Prices are in pounds sterling; we aim to be HALF-CATALOGUE PRICE OR UNDER.
2) Lists are updated about every 3 months to include the most recent stock movements and New Issues; they are therefore reasonably accurate stockwise and 100% accurate pricewise. This reduces the need for "credit notes" or refunds. Alternatives may be listed in case items are out of stock. However, these popular lists are still best used as soon as possible. Next listings will be printed in 3, 6, 9 and 12 months' time; please say when we should next send a list.
3) New Issues Services can be provided if you wish to keep your collection up to date on a Standing Order basis. Details and forms on request. We regret that we do not run an on-approval service.
4) All orders on our order forms are attended to by return of post. We will keep a photocopy of it and return your annotated original.
5) Other Thematic Lists are available on request: Birds, Mammals, Fish, WWF etc.
6) POSTAGE is extra and we use current G.B.

The Biodiversity Value of the Buton Forests

The biodiversity value of the Buton Forests
A 2018 Operation Wallacea Report

Contents
Executive summary
Section 1 – Study site overview
Section 2 – Mammals
Section 3 – Birds
Section 4 – Herpetofauna
Section 5 – Freshwater fish
Section 6 – Invertebrates
Section 7 – Botany
Section 8 – Further work
References
Appendices

Executive summary
- Buton, the largest satellite island of mainland Sulawesi, lies off the coast of the SE peninsula and retains large areas of lowland tropical forest.
- The biodiversity of these forests possesses an extremely high conservation value. To date, a total of 53 mammal species, 149 bird species, 64 herpetofauna species, 46 freshwater fish species, 194 butterfly species and 222 tree species have been detected in the study area.
- This diversity is remarkably representative of species assemblages across Sulawesi as a whole, given the size of the study area. Buton comprises only around 3% of the total land area of the Sulawesi sub-region, but 70% of terrestrial birds, 54% of snakes and 35% of butterflies known to occur in the region have been found here.
- Faunal groups in the forests of Buton also display high incidence of endemism; 83% of native non-volant mammals, 48.9% of birds, 34% of herpetofauna and 55.1% of butterflies found in the island's forest habitats are entirely restricted to the Wallacean biodiversity hotspot.
- Numerous organisms are also very locally endemic to the study area. Three species of herpetofauna are endemic to Buton, and another has its only known extant population here. Other currently undescribed species are also likely to prove to be endemic to Buton.

Beiträge zur Kenntnis der Insektenfauna Sumatras Teil 6: Betrachtungen zum Problemkreis "Mimikry" am Beispiel südostasiatischer Insekten

ZOBODAT - www.zobodat.at
Zoologisch-Botanische Datenbank/Zoological-Botanical Database
Digitale Literatur/Digital Literature

Zeitschrift/Journal: Beiträge zur naturkundlichen Forschung in Südwestdeutschland
Jahr/Year: 1977
Band/Volume: 36
Autor(en)/Author(s): Roesler Rolf-Ulrich
Artikel/Article: Beiträge zur Kenntnis der Insektenfauna Sumatras Teil 6: Betrachtungen zum Problemkreis "Mimikry" am Beispiel südostasiatischer Insekten 113-151

Beitr. naturk. Forsch. SüdwDtl., Band 36, S. 113-151, Karlsruhe, 1. 12. 1977

Contributions to the knowledge of the insect fauna of Sumatra, Part 6: Reflections on the problem of "mimicry", using Southeast Asian insects as examples
by R. U. Roesler and P. V. Küppers

A) The various manifestations of mimicry
by R. Ulrich Roesler
With 12 figures

Contents
Introduction
I) Pseudomimicry
II) Phylactic appearance (Phylaktische Tracht)
A) Camouflage appearance (Tarntracht)
1) Mimesis
2) Sympathetic appearance (Sympathische Tracht)
B) Cryptic appearance (Kryptische Tracht)
1) Somatolysis
2) Catalepsy
3) Colour change
4) Countershading
III) Aposematic appearance (Aposematische Tracht)
A) Foreign appearance (Fremdtracht)
1) Misoneism
2) Threat coloration (Drohfärbung)
B) Startle appearance (Schrecktracht)
1) Defiance coloration (Trutzfärbung)
2) Warning appearance (Warntracht)
3) False warning appearance (Scheinwarntracht)
4) Mimicry
Zusammenfassung, Summary
References

Introduction
The frequently used German word "Tracht" (appearance, garb) refers solely to the optical domain (the present considerations are restricted to this optical domain, since the observations from the other areas could not yet be evaluated). It covers colour, pattern and, finally, form. Such appearances can serve quite different purposes, for example attraction (of the sexes, say, or in plants the well-known dummy structures of orchid flowers), outward adornment, or, among other things, protection or warning.
Classifications and delimitations are normally extremely difficult, since this is a matter of interlocking natural processes whose particular details cannot always be interpreted unambiguously or