Uitnodig Distance-Based Analysis of Dynamical Systems and Time Series



Recommended publications


De Bibliothecis Syntagma Di Giusto Lipsio: Novità E Conferme Per La Storia Delle Biblioteche

Diego Baldi* De Bibliothecis Syntagma di Giusto Lipsio: novità e conferme per la storia delle biblioteche Quando, nel 1602, Lipsio1 espresse a Moretus l’intenzione di dedicare il De Bibliothecis Syntagma a Charles de Croy,2 si riferì al suo trattatello come un’operetta di poco conto, buona per incoraggiare il duca di Aarschot nell’allestimento della sua superba biblioteca.3 Probabilmente, il fiammingo coltivava la speranza che il de Croy si sentisse in qualche modo ispirato da questo scritto e si convincesse, stante il suo amore per i libri e le bonae litterae, a regalare a Lovanio una sua biblioteca pubblica.4 Il velleitario desiderio di Lipsio era destinato a rimanere tale, nonostante il tan- * ISMA – CNR, Roma. Mi preme ringraziare in questa sede il professor Marcello Fagiolo per la copia fornitami dell’inedita voce Bybliotheca, facente parte del secondo libro delle Antichità di Roma di Pirro Ligorio e contenuta nel codice a.III.3.15.J.4 custodito presso l’Archivio di Stato di Torino. La sua cortesia mi ha permesso di arricchire notevolmente il presente lavoro. Grazie all’usuale e preziosa attenzione della dottoressa Monica Belli, inoltre, ho potuto limitare notevolmente sviste e imprecisioni. Di questo, come sempre, le sono grato. 1. Per un primo, indispensabile orientamento bibliografico nella smisurata letteratura dedicata all’erudito di Lovanio rimando ai saggi di Aloïs Gerlo. Les études lipsiennes: état de la question, in Juste Lipse (1547-1606), Colloque international tenu en mars 1987. Edité par Aloïs Gerlo. Bruxelles, University Press, 1988, pp. 9-24; Rudolf De Smet. -

Manoscritti Tonini

Manoscritti Tonini strumento di corredo al fondo documentario a cura di Maria Cecilia Antoni Biblioteca Civica Gambalunga. Rimini. 2013 1 Il Fondo Tonini entrò in biblioteca nel 1924, in agosto una prima parte: più di 1500 fra volumi e opuscoli e dieci buste di manoscritti...e tutti i manoscritti, codici e pergamene appartenuti ai Tonini, custoditi dai fratelli Ricci,1 il resto in dicembre, in una stanza al piano terra di palazzo Gambalunga; il 26 aprile 1925 viene trasmessa al Sindaco di Rimini una Relazione degli esecutori testamentari: Alessandro Tosi e padre Gregorio Giovanardi2. La relazione, dattiloscritta, intitolata: Elenco manoscritti, opuscoli, libri di Luigi e Carlo Tonini, donati alla Biblioteca Gambalunga descrive nove nuclei contrassegnati da lettere alfabetiche (A-I) e da capoversi, interni ai nuclei, numerati progressivamente da 1 a 733; i nuclei A-F sono preceduti dal titolo: Luigi Tonini 4; il nucleo G è invece intitolato: Manoscritti di Carlo Tonini 5; seguono H: Pergamene (nn.47-53); I: Raccolta di documenti riguardanti la storia di Rimini ed altri luoghi, originali ed in copia. Questa descrizione tuttavia non permetteva più il rinvenimento dei documenti a causa di interventi, spostamenti e condizionamenti successivi6. 1 Articolo di G. GIOVANARDI, "La Riviera Romagnola", 11 settembre 1924, da cui è tratta la citazione sopra riportata. Nell'articolo Giovanardi individua dieci contenitori con lettere dell'alfabeto, da lui riordinati in questo modo: Busta A, Busta B, Busta C, contenenti le Vite d'insigni italiani di Carlo Tonini; Busta E con Epigrafi di Luigi e Carlo Tonini; Busta F Lavori di storia patri inediti di Luigi Tonini (tali Lavori sono numerati da I a XIV; i numeri XIII e XIV rimandano a volumi mss. -

Scientific Programme for All

Optimal radiotherapy Scientific Programme for all ESTRO ANNUAL CONFERENCE 27 - 31 August 2021 Onsite in Madrid, Spain & Online Saturday 28 August 2021 Track: Radiobiology Teaching lecture: The microbiome: Its role in cancer development and treatment response Saturday, 28 August 2021 08:00 - 08:40 N104 Chair: Marc Vooijs - 08:00 The microbiome: Its role in cancer development and treatment response SP - 0004 A. Facciabene (USA) Track: Clinical Teaching lecture: Breast reconstruction and radiotherapy Saturday, 28 August 2021 08:00 - 08:40 Plenary Chair: Philip Poortmans - 08:00 Breast reconstruction and radiotherapy SP - 0005 O. Kaidar-Person (Israel) Track: Clinical Teaching lecture: Neurocognitive changes following radiotherapy for primary brain tumours Saturday, 28 August 2021 08:00 - 08:40 Room 1 Chair: Brigitta G. Baumert - 08:00 Evaluation and care of neurocognitive effects after radiotherapy SP - 0006 M. Klein (The Netherlands) 08:20 Imaging biomarkers of dose-induced damage to critical memory regions SP - 0007 A. Laprie (France) Track: Physics Teaching lecture: Independent dose calculation and pre-treatment patient specific QA Saturday, 28 August 2021 08:00 - 08:40 Room 2.1 Chair: Kari Tanderup - 08:00 Independent dose calculation and pre-treatment patient specific QA SP - 0008 P. Carrasco de Fez (Spain) 1 Track: Physics Teaching lecture: Diffusion MRI: How to get started Saturday, 28 August 2021 08:00 - 08:40 Room 2.2 Chair: Tufve Nyholm - Chair: Jan Lagendijk - 08:00 Diffusion MRI: How to get started SP - 0009 R. Tijssen (The Netherlands) Track: RTT Teaching lecture: The role of RTT leadership in advancing multi-disciplinary research Saturday, 28 August 2021 08:00 - 08:40 N103 Chair: Sophie Perryck - 08:00 The role of RTT leadership in advancing multi-disciplinary research SP - 0010 M. -

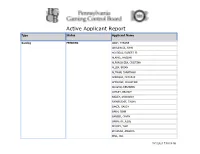

Active Applicant Report Type Status Applicant Name

Active Applicant Report Type Status Applicant Name Gaming PENDING ABAH, TYRONE ABULENCIA, JOHN AGUDELO, ROBERT JR ALAMRI, HASSAN ALFONSO-ZEA, CRISTINA ALLEN, BRIAN ALTMAN, JONATHAN AMBROSE, DEZARAE AMOROSE, CHRISTINE ARROYO, BENJAMIN ASHLEY, BRANDY BAILEY, SHANAKAY BAINBRIDGE, TASHA BAKER, GAUDY BANH, JOHN BARBER, GAVIN BARRETO, JESSE BECKEY, TORI BEHANNA, AMANDA BELL, JILL 10/1/2021 7:00:09 AM Gaming PENDING BENEDICT, FREDRIC BERNSTEIN, KENNETH BIELAK, BETHANY BIRON, WILLIAM BOHANNON, JOSEPH BOLLEN, JUSTIN BORDEWICZ, TIMOTHY BRADDOCK, ALEX BRADLEY, BRANDON BRATETICH, JASON BRATTON, TERENCE BRAUNING, RICK BREEN, MICHELLE BRIGNONI, KARLI BROOKS, KRISTIAN BROWN, LANCE BROZEK, MICHAEL BRUNN, STEVEN BUCHANAN, DARRELL BUCKLEY, FRANCIS BUCKNER, DARLENE BURNHAM, CHAD BUTLER, MALKAI 10/1/2021 7:00:09 AM Gaming PENDING BYRD, AARON CABONILAS, ANGELINA CADE, ROBERT JR CAMPBELL, TAPAENGA CANO, LUIS CARABALLO, EMELISA CARDILLO, THOMAS CARLIN, LUKE CARRILLO OLIVA, GERBERTH CEDENO, ALBERTO CENTAURI, RANDALL CHAPMAN, ERIC CHARLES, PHILIP CHARLTON, MALIK CHOATE, JAMES CHURCH, CHRISTOPHER CLARKE, CLAUDIO CLOWNEY, RAMEAN COLLINS, ARMONI CONKLIN, BARRY CONKLIN, QIANG CONNELL, SHAUN COPELAND, DAVID 10/1/2021 7:00:09 AM Gaming PENDING COPSEY, RAYMOND CORREA, FAUSTINO JR COURSEY, MIAJA COX, ANTHONIE CROMWELL, GRETA CUAJUNO, GABRIEL CULLOM, JOANNA CUTHBERT, JENNIFER CYRIL, TWINKLE DALY, CADEJAH DASILVA, DENNIS DAUBERT, CANDACE DAVIES, JOEL JR DAVILA, KHADIJAH DAVIS, ROBERT DEES, I-QURAN DELPRETE, PAUL DENNIS, BRENDA DEPALMA, ANGELINA DERK, ERIC DEVER, BARBARA -

Involvement of the P2X7 Purinergic Receptor in Colonic Motor Dysfunction Associated with Bowel Inflammation in Rats

RESEARCH ARTICLE Involvement of the P2X7 Purinergic Receptor in Colonic Motor Dysfunction Associated with Bowel Inflammation in Rats Luca Antonioli1., Maria Cecilia Giron2., Rocchina Colucci1*, Carolina Pellegrini1, Deborah Sacco1, Valentina Caputi2, Genny Orso3, Marco Tuccori1, Carmelo Scarpignato4, Corrado Blandizzi1, Matteo Fornai1 1. Division of Pharmacology and Chemotherapy, Department of Clinical and Experimental Medicine, University of Pisa, Pisa, Italy, 2. Department of Pharmaceutical and Pharmacological Sciences, University of Padova, Padova, Italy, 3. Scientific Institute IRCCS Eugenio Medea, Conegliano, Treviso, Italy, 4. Laboratory of Clinical Pharmacology, University of Parma, Parma, Italy *[email protected] OPEN ACCESS . These authors contributed equally to this work. Citation: Antonioli L, Giron MC, Colucci R, Pellegrini C, Sacco D, et al. (2014) Involvement of the P2X7 Purinergic Receptor in Colonic Motor Dysfunction Associated with Bowel Inflammation in Rats. PLoS ONE 9(12): e116253. doi:10.1371/journal.pone.0116253 Editor: Jean Kanellopoulos, University Paris Sud, France Received: July 10, 2014 Accepted: December 6, 2014 Published: December 30, 2014 Copyright: © 2014 Antonioli et al. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and repro- Abstract Background and Purpose: Recent evidence indicates an involvement of P2X7 purinergic receptor (P2X7R) in the fine tuning of immune functions, as well as in driving enteric neuron apoptosis under intestinal inflammation. However, the participation of this receptor in the regulation of enteric neuromuscular functions remains undetermined. This study was aimed at investigating the role of P2X7Rs in the control of colonic motility in experimental colitis. Experimental Approach: Colitis was induced in rats by 2,4-dinitrobenzenesulfonic acid. -

Black Sheep Brewery Ripon Triathlon (National Standard Distance Championships) ‐ 2017

Black Sheep Brewery Ripon Triathlon (National Standard Distance Championships) ‐ 2017

| Race No | First Name | Surname | Club / Team Name | Cat | Wave | Start Time | GB Age Group? |
|---|---|---|---|---|---|---|---|
| 1 | Peter | Gaskell | The Endurance Store | M 20‐24 | 1 | 13:00:00 | Yes |
| 2 | Beau | Smith | Racepace | M 20‐24 | 1 | 13:00:00 | Yes |
| 3 | Christian | Brown | Leeds Triathlon Centre | M 20‐24 | 1 | 13:00:00 | |
| 4 | Brett | Halliwell | Yonda Racing | M 20‐24 | 1 | 13:00:00 | Yes |
| 5 | James | Scott‐Farrington | Leeds Triathlon Centre | M 20‐24 | 1 | 13:00:00 | Yes |
| 6 | Daniel | Guerrero | Loughborough University | M 20‐24 | 1 | 13:00:00 | Yes |
| 7 | Robert | Winfield | | M 20‐24 | 1 | 13:00:00 | Yes |
| 8 | Sean | Wylie | Red Venom | M 20‐24 | 1 | 13:00:00 | Yes |
| 9 | Daniel | Mcfeely | Hartree JETS Triathlon | M 20‐24 | 1 | 13:00:00 | Yes |
| 10 | Christopher | Price | Hoddesdon Tri Club | M 20‐24 | 1 | 13:00:00 | |
| 11 | Jacob | Shannon | Leeds Triathlon Centre / Triology | M 20‐24 | 1 | 13:00:00 | Yes |
| 12 | Chris | Hine | Race Hub | M 20‐24 | 1 | 13:00:00 | Yes |
| 13 | Jonny | Breedon | Tri London | M 20‐24 | 1 | 13:00:00 | Yes |
| 14 | Angus | Smith | | M 20‐24 | 1 | 13:00:00 | Yes |
| 15 | Jonathan | Khoo | | M 20‐24 | 1 | 13:00:00 | Yes |
| 16 | Zachary | Cooper | Athlete Lab | M 20‐24 | 1 | 13:00:00 | Yes |
| 17 | Gareth | Jooste | | M 20‐24 | 1 | 13:00:00 | |
| 18 | Jake | Hayward | Hadleigh Hares A.C | M 20‐24 | 1 | 13:00:00 | Yes |
| 19 | Steve | Bowser | Clapham Chasers | M 20‐24 | 1 | 13:00:00 | Yes |
| 20 | Benjamin | Potter | Darlington Triathlon Club | M 20‐24 | 1 | 13:00:00 | Yes |
| 21 | Jack | Hipkiss | Kirkstall Harriers | M 20‐24 | 1 | 13:00:00 | Yes |
| 22 | Joe | Howard | Wakefield Triathlon Club | M 20‐24 | 1 | 13:00:00 | Yes |
| 23 | Jonny | Tomes | Yorkshire Velo / Craven Energy | M 20‐24 | 1 | 13:00:00 | |
| 24 | Matt | Keogh | Southampton Tri Club | M 20‐24 | 1 | 13:00:00 | Yes |
| 25 | Jack | Ellis | | M 20‐24 | 1 | 13:00:00 | |
| 26 | Henry | Fitton‐Thomas | | M 20‐24 | 1 | 13:00:00 | |

Sulla Riscoperta Di Ludovico De Donati: Spunti Dal Fondo Caffi *

SULLA RISCOPERTA DI LUDOVICO DE DONATI: SPUNTI DAL FONDO CAFFI * Come nel caso di altri artisti minori, Ludovico (o Alvise) de Donati rimase a lungo ignoto e la ricostruzione della sua carriera, precisata in maniera soddisfacente solo nell’arco degli ultimi decenni, iniziò nella metà del XVIII secolo 1. Il nome dell’artista sembra essere apparso per la prima volta nel 1752, quando il padre domenicano Agostino Maria Chiesa, spinto da intenti diversi dall’amore per l’arte, descrisse il trittico della chiesa di San Benigno a Berbenno (Sondrio) riportando l’iscrizione che lo dichiarava opera di «Aluisius de Donatis» 2. Questo ricordo tuttavia passò inosservato a causa della natura agiografica del testo. Al contrario fu prontamente registrato e godette di ampia diffusione il passo della Storia pittorica d’Italia (1795-1796) di Luigi Lanzi dove il pittore venne presentato come Luigi De Donati, comasco e discepolo del Civerchio, autore di non meglio specificate «tavole autentiche» 3. Come Lanzi stesso ammetteva, il giudizio su *) Desidero ringraziare il prof. Giovanni Agosti, per le preziose indicazioni bibliografiche, e Laura Andreozzi, per i suoi competenti consigli sulla stesura dell’Appendice. 1) Su Ludovico vd. Natale - Shell 1987, pp. 656-660; Porro 1990, pp. 399-416; Mascetti 1993, p. 91; Gorini 1993, pp. 449-450; Battaglia 1996, pp. 209-241; Natale 1998, pp. 65-68; Partsch 2001, pp. 499-502; Baiocco 2004, pp. 167-168. Riprende il problema dell’attività giovanile di Ludovico Bentivoglio Ravasio 2006, pp. 100-104. 2) Chiesa 1752, p. 170; già segnalato in Porro 1990, p. 416 nt. 52. -

The Journal of College and University Student Housing

The Journal of College and University Student Housing Sustainability Theme Issue Volume 36, No. 1 • April/May 2009 Association of College & University Housing Officers – International The Journal of College and University Student Housing Volume 36, No. 1 • April/May 2009 Copyright Information: Articles published in The Journal of College and University Student Housing are copyright The Association of College & University Housing Officers – International (ACUHO-I) unless noted otherwise. For educational purposes, information may be used without restriction. However, ACUHO-I does request that copies be distributed at or below cost and that proper identification of author(s) and The Journal of College and University Student Housing be affixed to each copy. Abstracts and Indexes: Currently abstracted in Higher Education Abstracts. Subscriptions: $30 per two-volume year for members $40 for nonmembers single copies $15 per copy for members, $25 for nonmembers Available from the ACUHO-I Central Office, 941 Chatham Lane, Suite 318, Columbus, Ohio 43221-2416. Xerographic or microfilm reprints of any previous issue: University Microfilms International, Serials Bid Coordinator, 300 North Zeeb Road, Ann Arbor, Michigan 48106. The ACUHO-I Foundation The Journal of College and University Student Housing is supported, in part, by the ACUHO-I Foundation. The ACUHO-I Foundation was formed in 1988 to provide a way for individuals, institutions, corporations, government agencies, and other foundations to support the collegiate housing profession through gifts and grants. Since its inception, the Foundation has raised more than $1 million to fund commissioned research, study tours, conference speakers, institutes, and scholarships. More information about the ACUHO-I Foundation, its work, and means to make a contribution can be found at www.acuho-i.org. -

2003 Directory of State and Local Government

DIRECTORY OF STATE AND LOCAL GOVERNMENT Prepared by RESEARCH DIVISION LEGISLATIVE COUNSEL BUREAU January 2003 Revised October 2004
TABLE OF CONTENTS
Please refer to the Alphabetical Index to the Directory of State and Local Government for a complete list of agencies.
NEVADA STATE GOVERNMENT ORGANIZATION CHART, D-9
CONGRESSIONAL DELEGATION, D-11
DIRECTORY OF STATE GOVERNMENT
CONSTITUTIONAL OFFICERS:
Attorney General, D-13
State Controller, D-17
Governor, D-18
Lieutenant Governor, D-21
Secretary of State, D-22
State Treasurer, D-23
EXECUTIVE BOARDS, D-24
UNIVERSITY AND COMMUNITY COLLEGE SYSTEM OF NEVADA, D-25
EXECUTIVE BRANCH AGENCIES:
Department of Administration, D-30
Administrative Services Division, D-30
Budget Division, D-30
Economic Forum -

Delinquent Suwannee Democrat 129Th YEAR, NO

2013 SUWANNEE COUNTY TAX LIST SPECIAL 2014 NOTICE OF TAX CERTIFICATE SALE SECTION INSIDE Delinquent Suwannee Democrat 129th YEAR, NO. 67 | 3 SECTIONS, 46 PAGES Wednesday Edition — May 21, 2014 50 CENTS Serving Suwannee County since 1884, including Live Oak, Wellborn, Dowling Park, Branford, McAlpin and O’Brien “This is the phase of the project many people Confusion have been waiting for.” at SVTA - Sheryl Rehberg, executive director of CareerSource North Florida during Klausner now transition accepting applications Board puts hold on 15 positions posted comp time, reverts in Employ Florida to 1983 manual By Joyce Marie Taylor By Bryant Thigpen [email protected] [email protected] The boardroom was packed with people Construction on a state-of-the-art during a two-and-a-half-hour Suwannee Val- sawmill at the catalyst site in Suwan- ley Transit Authority (SVTA) Board of Direc- nee County is underway and applica- tors meeting on Tuesday, May 13, as the board tions are now being accepted for vari- struggled to decipher which Personnel Poli- ous positions within the company, ac- cies and Procedures Manual they were operat- cording to Sheryl Rehberg, executive ing under. director of CareerSource North Flori- SVTA is being watched closely by the da. Klausner Lumber One LLC is ex- pected to bring about 350 jobs within SEE CONFUSION, PAGE 13A the first three years of operation. The first concrete was poured at the Klausner site on Saturday, March 15. 
There are currently over a dozen - Photo: CareerSource North Florida jobs posted on CareerSource’s website including: forklift driver, sawmill; metal worker; log yard supervisor; hu- In April, CareerSource North Flori- forklift driver, planer mill; electrician man resources manager; accounting da had about 500 people turn out for department manager; wheel loader dri- clerk; controller; on-site IT support; an event at the Suwannee-Hamilton ver infeed-saw; kiln/boiler operator; environmental, health and safety man- electrician; metal working manager; ager; and a senior accountant. -

Publication DILA

Quarante-cinquième année. – N° 41 B ISSN 0298-2978 Samedi 26 et dimanche 27 février 2011 BODACC BULLETIN OFFICIEL DES ANNONCES CIVILES ET COMMERCIALES ANNEXÉ AU JOURNAL OFFICIEL DE LA RÉPUBLIQUE FRANÇAISE DIRECTION DE L’INFORMATION LÉGALE ET ADMINISTRATIVE 26, rue Desaix, 75727 PARIS CEDEX 15 www.dila.premier-ministre.gouv.fr www.bodacc.fr Standard: 01-40-58-75-00 Annonces: 01-40-58-77-56 Accueil commercial: 01-40-15-70-10 Abonnements: 01-40-15-67-77 (8h30 à 12h30) Télécopie: 01-40-15-72-75 BODACC “B” Modifications diverses - Radiations Avis aux lecteurs Les autres catégories d’insertions sont publiées dans deux autres éditions séparées selon la répartition suivante: BODACC “A”: Vente et cessions, Créations d’établissements, Procédures collectives, Procédures de rétablissement personnel, Avis relatifs aux successions; BODACC “C”: Avis de dépôt des comptes des sociétés. Banque de données BODACC servie par les sociétés : Altares-D&B, EDD, Extelia, Questel, Tessi Informatique, Jurismedia, Pouey International, Scores et Décisions, Les Echos, Creditsafe, Coface services, Cartegie, La Base Marketing, Infolegale, France Telecom Orange, Telino et Maxisoft. Conformément à l’article 4 de l’arrêté du 17 mai 1984 relatif à la -

Annual Report

2008-09 Cortland College Foundation Annual Report 1 2008-09 Cortland College Foundation Annual Report TABLE OF CONTENTS Note: Selecting a title below will take you directly to the page. To search for a name, a class year or any other word(s), select the Edit menu and go to Find. 3 A Message From The Chair: Overcoming Tough Times Together 4 The Cortland Fund: Alumni Give in Record Numbers 5 Alumni Profile: Ethel McCloy Smiley ’31 6 Saying ‘Thank You’ with a Scholarship 7 Alumni Profile: Gerald “Jerry” Theisen ’53, M.S. ’58 8 ASC’s $980,000 Gift Builds Scholarship Endowment 9 A Heartfelt Thanks To Our Donors Chart: SUNY Cortland 2008-09 Sources of Funding Cortland College Foundation Board of Directors 10 Lifetime Giving Recognition Societies 11-13 Partners in Leadership 13 Associate Partners in Leadership 14-15 The Lofty Elm Society 15-18 Memorial Gifts 19-20 Honorary Gifts 21-22 Our Dedicated Volunteers 23-50 Alumni Gifts 24 Chart: A Summary of Gifts 25 Chart: 2009 Class Reunion Gift Campaign 51-52 Faculty, Staff and Emeriti Gifts 52-54 Friend, Foundation and Organization Gifts 55 Parent, Student and Family Gifts 56 Corporate and Matching Gifts 57-61 Gifts in Honor of Current Students 62-63 Gifts in Honor of Seniors 64 Gifts in Kind New Look for Annual Report Preserves Our Financial, Natural Resources 2 Table of Contents A Message From The Chair Overcoming Tough Times Together Brian Murphy ’83, Chair, Cortland College Foundation Although I have been a Cortland College Foundation Board Watching the festivities in the Park Center Alumni Arena, member since 2005, I had the privilege in 2008-09 of serving I was moved by the thought of your gifts to the foundation as the board chair.