A Program for Screening Remotely Captured Images

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


XF Camera System User Manual

XF Camera System User Manual XF Camera System Manual | Table of Contents 1 Jump to Table of Contents XF Camera System Manual | Table of Contents 2 Content | XF Camera System Manual Primary parts of the XF Camera System 4 XF Camera System Capture Modes 59 Lens, XF Camera Body, IQ Digital Back 4 XF Camera System Exposure Modes 61 Dials, Buttons and Touch Screen Interface 6 Lens, XF Camera Body, IQ Digital Back 6 Exposure Compensation 63 Assembling the XF Camera System 8 Long Exposure 64 Digital Back and camera body modularity 8 Electronic Shutter (ES) 65 Readying the camera system 10 General advice for using the battery and charger 11 Live View 67 Live View on the LCD, Capture One or HDMI monitor 67 Navigating the XF Camera System 14 XF Camera Body Controls 15 XF Custom Presets and System Backup 70 Customizing buttons and dials 18 Flash Photography 73 Profoto Remote Tool 74 OneTouch User Interface Flow Diagram 20 Triggering a Capture with the Profoto Remote 75 XF Camera Menu Overview 20 Flash Analysis Tool 76 IQ Digital Back Menu Overview 22 Rear Curtain sync and Trim 76 Shutter Mechanisms and Flash Synchronization range 77 OneTouch User Interface Overview 25 XF Camera Body Navigation 25 XF Camera System Lenses 78 Top Touch Screen Display 26 Capture One Pro 79 XF Tools on the Top Touch Screen 28 Capture One Tethered Use 82 IQ Digital Back Navigation 33 IQ Digital Back Navigation Shortcuts 34 Built-In WiFi and Capture Pilot 84 IQ Digital Back Viewing images 34 A-series Camera System 87 IQ Digital Back Contextual Menu 35 Overview of Contextual -

Underwater Survey for Oil and Gas Industry: a Review of Close Range Optical Methods

remote sensing Review Underwater Survey for Oil and Gas Industry: A Review of Close Range Optical Methods Bertrand Chemisky 1,*, Fabio Menna 2 , Erica Nocerino 1 and Pierre Drap 1 1 LIS UMR 7020, Aix-Marseille Université, CNRS, Université De Toulon, 13397 Marseille, France; [email protected] (E.N.); [email protected] (P.D.) 2 3D Optical Metrology (3DOM) Unit, Bruno Kessler Foundation (FBK), Via Sommarive 18, 38123 Trento, Italy; [email protected] * Correspondence: [email protected] Abstract: In both the industrial and scientific fields, the need for very high-resolution cartographic data is constantly increasing. With the aging of offshore subsea assets, it is very important to plan and maintain the longevity of structures, equipment, and systems. Inspection, maintenance, and repair (IMR) of subsea structures are key components of an overall integrity management system that aims to reduce the risk of failure and extend the life of installations. The acquisition of very detailed data during the inspection phase is a technological challenge, especially since offshore installations are sometimes deployed in extreme conditions (e.g., depth, hydrodynamics, visibility). After a review of high resolution mapping techniques for underwater environment, this article will focus on optical sensors that can satisfy the requirements of the offshore industry by assessing their relevance and degree of maturity. These requirements concern the resolution and accuracy but also cost, ease of implementation, and qualification. With the evolution of embedded computing resources, in-vehicle optical survey solutions are becoming increasingly important in the landscape of large-scale mapping solutions and more and more off-the-shelf systems are now available. -

USING SONY A900 for ASTROPHOTOGRAPHY • TELESCOPE ADAPTORS - PRIME FOCUS - EYEPIECE PROJECTION

USING SONY a900 for ASTROPHOTOGRAPHY • TELESCOPE ADAPTORS - PRIME FOCUS - EYEPIECE PROJECTION • REMOTE RELEASE - SONY CABLE RELEASE RM-S1AM - SONY REMOTE COMMANDER RMT-DSLR1 - GADGET INFINITY RADIO S1 REMOTE - JJC-JR-C IR MODULAR REMOTE - HÄHNEL HW433S80 RF WiFi REMOTE - JJC TM-F LCD DIGITAL TIMER / INTERVALOMETER • DRIVE FUNCTION - MIRROR LOCK-UP • FOCUS - SCREEN L - DIOPTRE CORRECTION - RIGHT ANGLE MAGNIFIER • FINE FOCUS - RACK & PINION FOCUSER - MOTORISED FOCUS - REMOTE DIGITAL FOCUSER - DEPTH OF FOCUS • TRACKING FOCUS • SEEING • UN-DAMPED VIBRATION - ELECTROMAGNETIC SHUTTER RELEASE • CHECKING FOCUS • LAPTOP REMOTE CAPTURE & CONTROL vs LCD VIEW SCREEN • EXPOSURE - MANUAL MODE OPTION - CALCULATING EXPOSURE TIMES - ISO SETTING & RESOLUTION - ISO vs SEEING - BRACKETING vs DR-O USING SONY a900 for ASTROPHOTOGRAPHY The Sony a900 DSLR is capable of taking fine photographs of the Sun in H-alpha and white light, the Moon, and deep sky objects. It has a 35mm Fx format CMOS sensor specifically designed for low light level photography. The twin BIONZ processors are designed to produce high resolution, low noise, high dynamic range images. The 3-inch LCD view screen may enlarge raw images x19, and its resolution is sufficient to judge precise focus without having to resort to a remote laptop monitor. The optical viewfinder affords 100% frame coverage, and has internal dioptre correction and interchangeable focusing screens. • TELESCOPE ADAPTORS - PRIME FOCUS The Sony 'Exmor' CMOS sensor size is 35.9mm x 24.0mm, giving a frame diagonal 43.2mm. The throat clearance I.D. of the prime focus adaptor needs to accommodate an image circle 43.3mm diameter (allowing 0.1mm clearance) otherwise the frame corners will be mechanically vignetted. -

ILCA-99M2 α99II

Interchangeable Lens Digital Camera ILCA-99M2 α99II Names of parts/Icons and indicators Names of parts Front side [1] Rear side [2] Top side [3] Sides [4] Bottom [5] Basic operations Using the multi-selector [6] Using the front multi-controller [7] Using MENU items [8] Using the Fn (Function) button [9] How to use the Quick Navi screen [10] How to use the keyboard [11] Icons and indicators List of icons on the monitor [12] Indicators on the display panel [13] Switching the screen display (while shooting/during playback) [14] DISP Button (Monitor/Finder) [15] Preparing the camera Checking the camera and the supplied items [16] Charging the battery pack Charging the battery pack using a charger [17] Inserting/removing the battery pack [18] Battery life and number of recordable images [19] Notes on the battery pack [20] Inserting a memory card (sold separately) Inserting/removing a memory card [21] Memory cards that can be used [22] Notes on memory card [23] Recording images on two memory cards Selecting which memory card to record to (Select Rec. Media) [24] Attaching a lens Attaching/removing a lens [25] Attaching the lens hood [26] Attaching accessories Vertical grip [27] Setting language, date and time [28] In-Camera Guide [29] Shooting Shooting still images [30] Focusing Focus Mode [31] Auto focus Auto focus mechanism [32] Focus Area [33] Focus Standard [34] AF/MF control [35] AF w/ shutter (still image) [36] AF On [37] Eye AF [38] AF Range Control [39] AF Rng.Ctrl Assist (still image) [40] Center Lock-on AF [41] Eye-Start AF (still image) [42] AF drive speed (still image) [43] AF Track Sens (still image) [44] Priority Set in AF-S [45] Priority Set in AF-C [46] AF Illuminator (still image) [47] AF Area Auto Clear [48] Wide AF Area Disp. -

Peter Read Miller on Sports Photography

PETER READ MILLER ON SPORTS PHOTOGRAPHY A Sports Illustrated® photographer’s tips, tricks, and tales on shooting football, the Olympics, and portraits of athletes Peter Read Miller New Riders www.newriders.com New Riders is an imprint of Peachpit, a division of Pearson Education Find us on the Web at www.newriders.com To report errors, please send a note to [email protected] Copyright © 2014 by Peter Read Miller Acquisitions Editor: Ted Waitt Project Editor: Valerie Witte Senior Production Editor: Lisa Brazieal Developmental and Copy Editor: Anne Marie Walker Photo Editor: Steve Fine Proofreader: Erfert Fenton Composition: Kim Scott/Bumpy Design Indexer: Valerie Haynes Perry Cover and Interior Design: Mimi Heft Cover Images: Peter Read Miller NOTICE OF RIGHTS All rights reserved. No part of this book may be reproduced or transmitted in any form by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the publisher. For information on getting permission for reprints and excerpts, contact [email protected]. NOTICE OF LIABILITY The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of the book, neither the author nor Peachpit shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the instructions contained in this book or by the computer software and hardware products described in it. -

Fundamentals of Photography Course Guidebook

Topic Subtopic Better Living Arts & Leisure Fundamentals of Photography Course Guidebook Joel Sartore Photographer, National Geographic Fellow PUBLISHED BY: THE GREAT COURSES Corporate Headquarters 4840 Westfields Boulevard, Suite 500 Chantilly, Virginia 20151-2299 Phone: 1-800-832-2412 Fax: 703-378-3819 www.thegreatcourses.com Copyright © The Teaching Company, 2012 Printed in the United States of America This book is in copyright. All rights reserved. Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form, or by any means (electronic, mechanical, photocopying, recording, or otherwise), without the prior written permission of The Teaching Company. Joel Sartore Professional Photographer National Geographic Magazine Joel Sartore is a photographer, a speaker, an author, a teacher, and a regular contributor to National Geographic magazine. He holds a bachelor’s degree in Journalism, and his work has been recognized by the National Press Photographers Association and the Pictures of the Year International competition. Mr. Sartore was recently made a Fellow in the National Geographic Society for his work as a conservationist. The hallmarks of his professional style are a sense of humor and a midwestern work ethic. Mr. Sartore’s assignments have taken him to some of the world’s most beautiful and challenging environments, from the Arctic to the Antarctic. He has traveled to all 50 states and all seven continents, photographing everything from Alaskan salmon-fishing bears to Amazonian tree frogs. His most recent focus is on documenting wildlife, endangered species, and landscapes, bringing public attention to what he calls “a world worth saving.” His interest in nature started in childhood, when he learned about the very last passenger pigeon from one of his mother’s TIME LIFE picture books. -

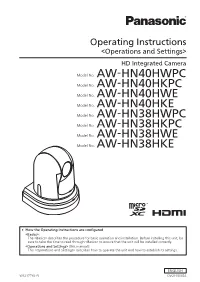

AW-HN40H Operating Instructions

Operating Instructions <Operations and Settings> HD Integrated Camera Model No. AW‑HN40HWPC Model No. AW‑HN40HKPC Model No. AW‑HN40HWE Model No. AW‑HN40HKE Model No. AW‑HN38HWPC Model No. AW‑HN38HKPC Model No. AW‑HN38HWE Model No. AW‑HN38HKE ● How the Operating Instructions are configured <Basics>: The <Basics> describes the procedure for basic operation and installation. Before installing this unit, be sure to take the time to read through <Basics> to ensure that the unit will be installed correctly. <Operations and Settings> (this manual): This <Operations and Settings> describes how to operate the unit and how to establish its settings. ENGLISH W1217TY0 -FJ DVQP1509ZA Abbreviations The following abbreviations are used in this manual. ● Microsoft® Windows® 7 Professional SP1 32/64-bit is abbreviated to “Windows 7”. ● Microsoft® Windows® 8 Pro 32/64-bit is abbreviated to “Windows 8”. ● Microsoft® Windows® 8.1 Pro 32/64-bit is abbreviated to “Windows 8.1”. ● Windows® Internet Explorer® 8.0, Windows® Internet Explorer® 9.0, Windows® Internet Explorer® 10.0 and Windows® Internet Explorer® 11.0 are abbreviated to “Internet Explorer”. Trademarks and registered trademarks ● Microsoft®, Windows®, Windows® 7, Windows® 8, Windows® 8.1, Internet Explorer® and ActiveX® are either registered trademarks or trademarks of Microsoft Corporation in the United States and other countries. ● Intel® and Intel® Core™ are trademarks or registered trademarks of Intel Corporation in the United States and other countries. ● Adobe® and Reader® are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. ● HDMI, the HDMI logo and High-Definition Multimedia -

XF Camera System User Manual

XF Camera System User Manual XF Camera System Manual | Table of Contents 1 Jump to XF Camera System Manual | Table of Contents 2 Table of Contents Content | XF Camera System Manual Primary parts of the XF Camera System 4 Basic settings, and how to restore defaults. 39 Lens, XF Camera Body, IQ Digital Back 4 Custom White Balance 41 Dials, Buttons and Touch Screen Interface 6 Creating a Custom White Balance 41 Lens, XF Camera Body, IQ Digital Back 6 HAP-1 AF Focusing system 42 Assembling the XF Camera System 8 Working with hyperfocal distance 45 Digital Back and camera body modularity 8 XF Camera System Capture Modes 47 Readying the camera system 10 General advice for using the battery and charger 11 XF Camera System Exposure Modes 48 Navigating the XF Camera System 14 Exposure Compensation 49 XF Camera Body Controls 15 Long Exposure 50 OneTouch User Interface Flow Diagram 18 Live View 51 XF Camera Menu Overview 18 Live View on the LCD, Capture One or HDMI monitor 51 IQ Digital Back Menu Overview 20 XF Camera Body OneTouch User Interface Overview 23 Custom Presets 53 XF Camera Body Navigation 23 Top Touch Screen Display 24 Flash Photography 54 Locking the Touch and/or the Dials on the XF Camera 25 Tools on the XF Top screen 25 XF Camera System Lenses 55 IQ Digital Back Navigation 27 Capture One Pro 56 IQ Digital Back Navigation Shortcuts 28 The XF Camera System is bundled with Capture One Pro, 56 IQ Digital Back Viewing images 28 Capture One Tethered Use 59 IQ Digital Back Contextual Menu 29 Overview of Contextual Menu 29 Built-In WiFi and -

RM-LP100U RM-LP100E Instructions

REMOTE CAMERA CONTROLLER RM-LP100U RM-LP100E INSTRUCTIONS Specifications and appearance of this unit are subject to change for further improvement without prior notice. Details For details on settings and operation, refer to “INSTRUCTIONS” on the website. Please check the latest INSTRUCTIONS, tools, etc. from the URL below. North America: http://pro.jvc.com/prof/main.jsp Europe: http://www.service.jvcpro.eu/public/ China: http://www.jvc.com.cn/service/download/index.html Thank you for purchasing this product. Before beginning to operate this unit, please read the “INSTRUCTIONS” carefully to ensure proper use of the product. In particular, please read through the “Safety Precautions” to ensure safe use of the product. After reading, store them with the warranty card and refer to them whenever needed. The serial number is crucial for the purpose of quality control. After purchasing this product, please check to ensure that the serial number is indicated correctly on this product, and the same serial number is also indicated on the warranty card. Please read the following before getting started: Thank you for purchasing this product. Before operating this unit, please read the instructions carefully to ensure the best possible performance. In this manual, each model number is described without the last letter (U/E) which means the shipping destination. (U: for USA and Canada, E: for Europe) Only “U” models (RM-LP100U) have been evaluated by UL. For Customer Use: Enter below the Serial No. which is located on the body. Retain this information for future reference. Model No. RM-LP100U Serial No. IM 1.01 B5A-1936-00 Safety Precautions Cautions Getting Started WARNING: CAUTION TO PREVENT FIRE OR SHOCK HAZARD, DO NOT EXPOSE THIS UNIT TO RAIN OR MOISTURE. -

Remote Camera Trap Installation and Servicing Protocol

Remote Camera Trap Installation and Servicing Protocol Citizen Wildlife Monitoring Project 2017 Field Season Prepared by David Moskowitz Alison Huyett Contributors: Aleah Jaeger Laurel Baum This document available online at: https://www.conservationnw.org/wildlife-monitoring/ Citizen Wildlife Monitoring Project partner organizations: Conservation Northwest and Wilderness Awareness School Contents Field Preparation 1 Installing a Remote Camera Trap 2 Scent lures and imported attractants 5 Setting two remote cameras in the same area 6 Servicing a Remote Camera Trap 6 Considerations for relocating a camera trap 8 Remote Camera Data Sheet and Online Photo Submission 8 CWMP Communications Protocol 11 Wildlife Track and Sign Documentation 12 Acknowledgements 13 Appendix: Track Photo Documentation Guidelines 14 Field Preparation 1. Research the target species for your camera, including its habitat preferences, tracks and signs, and previous sightings in the area you are going. 2. Research your site, consider your access and field conditions. Where will you park? Do you need a permit to park in this location? What is your hiking route? Call the local ranger district office closest to your site for information on current field conditions, especially when snow is possible to still be present. 3. Know your site: familiarize yourself with your location, the purpose of your monitoring, target species, and site-specific instructions (i.e. scent application, additional protocols). 4. Review this protocol and the species-specific protocol for your camera trap installation, to understand processes and priorities for the overall program this year. 5. Coordinate with your team leader before conducting your camera check to make sure you receive any important updates. -

Remote Camera System Guide

C-960-100-13 (1) EN Remote Camera System Guide © 2017 Sony Corporation 0002 Table of Contents Read This First 3 Chapter 1 Application Small Studios 5 Reality Shows 6 Houses of Worship 7 Lecture Capture 8 Event Production 9 Parliament/Congress 10 Live Sports Events 11 Video Conferences 12 Radio Booth 13 Chapter 3 Products Remote cameras 36 System camera 39 Remote controllers 40 Switchers 41 Optional items for BRC-H900 42 Edge Analytics Appliance 43 Chapter 4 Edge Analytics Appliance Application Edge Analytics Appliance Application Configuration 45 Usage example of Handwriting Extraction in the -

Space Science Researcher

Space Science Researcher Scientists have used the observation and exploration of light to make discoveries that deepen their understanding of the Sun, stars, and other objects in space. In this badge, you’ll re-create some of these scientific experiments, observe the night sky with your own eyes, and explore the possibility of seeing light in new ways. Steps 1. What more can you see? 2. Explore “invisible” light 3. See the stars in a new way 4. Expand your vision 5. Conserve the night sky Purpose When I’ve earned this badge, I will understand more about the amazing properties of light and how we use it to make discoveries about the Universe and space science. “It’s an incredible Universe we’re in and how could you do anything but try and learn about it?” —Vera Rubin, astronomer honored with the National Medal of Science who discovered dark matter SOFIA telescope Credit: Lammen, Y. DSI Every step has three choices. Do ONE choice to complete each step. Inspired? Do more! STEP 1 What more can you see? When you study space science, you are studying light from stars and other objects in space, including our Sun. Because visible light reaches our eyes by bouncing off objects, we see green trees, red cars, and planets of different colors. This light from our star—the Sun—appears to be one color. Is it possible it’s made of all the colors we see? Let’s find out! CHOICES—DO ONE: Construct a spinner. Create a Notebook Go retro! Carrying a notebook is a quick, low-tech way to