Bachelor Project

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Uila Supported Apps

Uila Supported Applications and Protocols, updated Oct 2020

| Application/Protocol Name | Full Description |
| --- | --- |
| 01net.com | 01net website, a French high-tech news site. |
| 050 plus | 050 plus is a Japanese embedded smartphone application dedicated to audio-conferencing. |
| 0zz0.com | 0zz0 is an online solution to store, send and share files. |
| 10050.net | China Railcom group web portal. |
| 10086.cn | This protocol plug-in classifies the http traffic to the host 10086.cn. It also classifies the ssl traffic to the Common Name 10086.cn. |
| 104.com | Web site dedicated to job research. |
| 1111.com.tw | Website dedicated to job research in Taiwan. |
| 114la.com | Chinese web portal operated by YLMF Computer Technology Co. |
| 115.com | Chinese cloud storing system of the 115 website. It is operated by YLMF Computer Technology Co. |
| 118114.cn | Chinese booking and reservation portal. |
| 11st.co.kr | Korean shopping website 11st. It is operated by SK Planet Co. |
| 1337x.org | Bittorrent tracker search engine. |
| 139mail | 139mail is a Chinese webmail powered by China Mobile. |
| 15min.lt | Lithuanian news portal. |
| 163.com | Chinese web portal 163. It is operated by NetEase, a company which pioneered the development of Internet in China. |
| 17173.com | Website distributing Chinese games. |
| 17u.com | Chinese online travel booking website. |
| 20minutes | 20 minutes is a free, daily newspaper available in France, Spain and Switzerland. This plugin classifies websites. |
| 24h.com.vn | Vietnamese news portal. |
| 24ora.com | Aruban news portal. |
| 24sata.hr | Croatian news portal. |
| 24SevenOffice | 24SevenOffice is a web-based Enterprise resource planning (ERP) system. |
| 24ur.com | Slovenian news portal. |
| 2ch.net | Japanese adult videos web site. |
| 2Shared | 2shared is an online space for sharing and storage. |

Casual Gaming

Casual gaming. KW Cheng, [email protected], VU Amsterdam. January 28, 2011

Abstract

Casual games have started to get a large player base in the last decade. In this paper we are going to have a basic look at the technology involved in creating casual games, common game mechanics, and the influence of social media on casual games.

1 Introduction

In this paper we are going to look at how casual games are being created. This will include useful tools, and commonly used programming languages. The main part of this paper will look at some game mechanics which are at the core of casual games. We will pick out some popular games and look at which mechanics are crucial to a successful gameplay. We will also look at the influence of how social media introduced more people to casual games.

1.1 What are casual games

Common elements in the design of casual games include [TRE10]:

- Rules and goals must be clear.
- Players need to be able to quickly reach proficiency.
- Casual gameplay adapts to a player's life and schedule.
- Game concepts borrow familiar content and themes from life.

1.2 History

The start of casual gaming began in 1990 when Microsoft started bundling Windows Solitaire with Windows. Many people were still getting used to the idea of using a mouse to navigate through a graphical user interface. Microsoft used Windows Solitaire to train people to use the mouse and to soothe people intimidated by the operating system. [LEV08] The reason why Windows Solitaire is successful is because it is accessible, you do not have to install anything, because it comes with your operating system.

Comparative Study of Anti-Cheat Methods in Video Games

Comparative Study of Anti-cheat Methods in Video Games. Samuli Lehtonen. Master’s thesis, University of Helsinki, Department of Computer Science. Helsinki, March 7, 2020

- Faculty: Faculty of Science
- Department: Department of Computer Science
- Author: Samuli Lehtonen
- Title: Comparative Study of Anti-cheat Methods in Video Games
- Subject: Computer Science
- Level: Master’s thesis
- Month and year: March 7, 2020
- Number of pages: 71 + 48 as appendices

Abstract

Online gaming is more popular than ever and many video game companies are reliant on the cash flow generated by online games. If a video game company wants its game to be successful, the game has to be resilient against cheating, the presence of which can ruin an otherwise successful game. Cheating in a video game can bankrupt an entire company as the non-cheating players leave the game because of unscrupulous individuals using cheats to gain an unfair advantage. Cheating can also involve criminal activity where maliciously acquired in-game items are traded against real money online. Commercial cheat programs are sold on online black markets and are available even to players who have no deep technical knowledge. The widespread availability and easy accessibility of cheats compounds the issue.

This thesis will categorize different anti-cheat techniques and give a brief history of anti-cheat starting from the early 1980s. The history section describes how the fight against online cheating began and how it has evolved over the years.

Joël A. Lamotte Born: 1983 – French Tel: 0(+33)6.52.26.61.58 Email: [email protected]

Joël A. Lamotte. Born: 1983 – French. Tel: 0(+33)6.52.26.61.58. Email: [email protected]

PROFESSIONAL EXPERIENCE

Independent - Lille (France) (Since July 2012)

Projects: Working on NetRush (RTS game) and Art Of Sequence (OSS digital story-telling tools)

Developed a client-server, multi-process, concurrent-tasks, game-specific engine for the needs of NetRush (RTS game). Learned a lot about concurrency (using C++) while doing so, using the practical case of this game. Designed NetRush and several other smaller game prototypes. Developed and published an interpreter for AOSL in JavaScript as a partial Proof of Concept of the Art of Sequence projects. Development of the Art Of Sequence tools is still ongoing.

Kayac - Kamakura (Japan), Creator (2012, 4 months)

Projects: Make Games (Farmer Carrots Zombies, unreleased rogue-like prototype)

Provided international game development expertise and point of view to the company, which wished to sell games worldwide. Challenged to develop an iOS game in no time. It took us 2 weeks to produce FCZ; I did all the code and sound design and half of the game design. However, pressed for time, we were not able to do better. Releasing it publicly was not my decision, but I did my best to make it enjoyable. Learned iOS (ObjectiveC/C++) development, Japanese keyboard, MacOSX use and Cocos2D-X (which I patched and provided back to the devs) in a very short time. Proposed 7 game concepts to work on next (after FCZ), all of which were approved; confident in my skills, the game development team suggested that I choose the project myself.

Lumin Software End User License Agreement Version 0.96

LUMIN SOFTWARE END USER LICENSE AGREEMENT VERSION 0.96 IMPORTANT: PLEASE READ THIS LUMIN SOFTWARE END USER LICENSE AGREEMENT (THIS “LICENSE”) CAREFULLY BEFORE USING YOUR MAGIC LEAP ONE (“DEVICE”). THE TERMS OF THIS LICENSE ARE ALSO AVAILABLE ON MAGIC LEAP’S WEBSITE LOCATED AT WWW.MAGICLEAP.COM/LEGAL. THIS LICENSE DESCRIBES THE LIMITED RIGHTS YOU HAVE TO USE THE LUMIN SOFTWARE ON YOUR DEVICE AND IS A BINDING CONTRACT BETWEEN YOU AND MAGIC LEAP, INC. ("MAGIC LEAP"). BY CLICKING “ACCEPT”, OR BY INSTALLING OR USING THE LUMIN SOFTWARE, YOU REPRESENT YOU HAVE READ, UNDERSTAND, AND AGREE TO BE BOUND BY THE TERMS AND CONDITIONS OF THIS LICENSE. IF YOU DO NOT AGREE TO THIS LICENSE, INCLUDING THE MANDATORY ARBITRATION AND CLASS ACTION WAIVER REFERENCED IN SECTION 10, THEN YOU MAY NOT CONTINUE WITH THE INSTALLATION OR USE OF THE LUMIN SOFTWARE. IN SUCH EVENT, YOU MAY RETURN THE DEVICE WITHIN THE RETURN PERIOD IN ACCORDANCE WITH MAGIC LEAP’S RETURN POLICY LOCATED AT WWW.MAGICLEAP.COM/WARRANTY. NOTICES REGARDING OSS (AS DEFINED BELOW) AND OTHER ITEMS ARE ADDRESSED AT THE END OF THIS LICENSE. 1. LICENSE TO LUMIN SOFTWARE AND UPDATES 1.1 This License covers your use of all Lumin Software, unless any particular Lumin Software component or application is accompanied by separate terms. In that case, the separate terms will solely govern the applicable Lumin Software component or application. If the separate terms do not accompany the Lumin Software component or application, they may be found in the Lumin Software’s NOTICES file. “Lumin Software” means individually or collectively: (a) the Lumin OS software on your Device; (b) all applications that are pre-installed on your Device; (c) certain OSS (defined in Section 4 below); and (d) all related documentation. -

Multiplayer Game Programming: Architecting Networked Games

Multiplayer Game Programming

The Addison-Wesley Game Design and Development Series. Visit informit.com/series/gamedesign for a complete list of available publications.

Essential References for Game Designers and Developers

These practical guides, written by distinguished professors and industry gurus, cover basic tenets of game design and development using a straightforward, common-sense approach. The books encourage readers to try things on their own and think for themselves, making it easier for anyone to learn how to design and develop digital games for both computers and mobile devices. Make sure to connect with us! informit.com/socialconnect

Multiplayer Game Programming: Architecting Networked Games. Joshua Glazer, Sanjay Madhav

New York • Boston • Indianapolis • San Francisco • Toronto • Montreal • London • Munich • Paris • Madrid • Cape Town • Sydney • Tokyo • Singapore • Mexico City

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals.

The authors and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein.

For information about buying this title in bulk quantities, or for special sales opportunities (which may include electronic versions; custom cover designs; and content particular to your business, training goals, marketing focus, or branding interests), please contact our corporate sales department at [email protected] or (800) 382-3419.

Editor-in-Chief: Mark Taub. Acquisitions Editor: Laura Lewin. Development Editor: Michael Thurston. Managing Editor: Kristy Hart. Project Editor

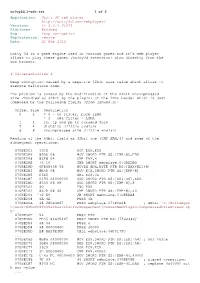

Application: Unity 3D Web Player

Application: Unity 3D web player, http://unity3d.com/webplayer/
Versions: <= 3.2.0.61061
Platforms: Windows
Bug: heap corruption
Exploitation: remote
Date: 21 Feb 2012

Unity 3d is a game engine used in various games, and its web player allows these games (unity3d extension) to be played directly from the web browser.

# Vulnerabilities #

Heap corruption caused by a negative 32bit size value which allows to execute malicious code. The problem is caused by the modification of the 64bit uncompressed size (handled as 32bit by the plugin) of the lzma header, which is just composed of the following fields (from lzma86.h):

| Offset | Size | Description |
| --- | --- | --- |
| 0 | 1 | = 0 - no filter, pure LZMA; = 1 - x86 filter + LZMA |
| 1 | 1 | lc, lp and pb in encoded form |
| 2 | 4 | dictSize (little endian) |
| 6 | 8 | uncompressed size (little endian) |

Reading of the 64bit field as a 32bit one (CMP EAX,4) and some of the subsequent operations:

    070BEDA3 33C0           XOR EAX,EAX
    070BEDA5 895D 08        MOV DWORD PTR SS:[EBP+8],EBX
    070BEDA8 83F8 04        CMP EAX,4
    070BEDAB 73 10          JNB SHORT webplaye.070BEDBD
    070BEDAD 0FB65438 05    MOVZX EDX,BYTE PTR DS:[EAX+EDI+5]
    070BEDB2 8B4D 08        MOV ECX,DWORD PTR SS:[EBP+8]
    070BEDB5 D3E2           SHL EDX,CL
    070BEDB7 0196 A4000000  ADD DWORD PTR DS:[ESI+A4],EDX
    070BEDBD 8345 08 08     ADD DWORD PTR SS:[EBP+8],8
    070BEDC1 40             INC EAX
    070BEDC2 837D 08 40     CMP DWORD PTR SS:[EBP+8],40
    070BEDC6 ^72 E0         JB SHORT webplaye.070BEDA8
    070BEDC8 6A 4A          PUSH 4A
    070BEDCA 68 280A4B07    PUSH webplaye.074B0A28 ; ASCII "C:/BuildAgent/work/b0bcff80449a48aa/PlatformDependent/CommonWebPlugin/CompressedFileStream.cpp"
    070BEDCF 53             PUSH EBX
    070BEDD0 FF35 84635407  PUSH DWORD PTR DS:[7546384]
    070BEDD6 6A 04          PUSH 4
    070BEDD8 68 00000400    PUSH 40000
    070BEDDD E8 BA29E4FF    CALL webplaye.06F0179C
    ..
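The header layout and the 32-bit truncation described in this advisory can be illustrated with a short Python sketch. It is not the plugin's code: the function names `parse_lzma86_header` and `truncate_to_int32` are hypothetical, and the sketch assumes only what the advisory states, namely that the 8-byte uncompressed size is little endian and that just its low 32 bits are kept and treated as a signed value.

```python
import struct


def parse_lzma86_header(header: bytes) -> dict:
    """Split a 14-byte LZMA86 header into the four fields listed in the advisory."""
    if len(header) < 14:
        raise ValueError("LZMA86 header needs at least 14 bytes")
    # "<" = little endian without padding: 1 + 1 + 4 + 8 = 14 bytes
    filter_flag, props, dict_size, size64 = struct.unpack_from("<BBIQ", header, 0)
    return {
        "filter": filter_flag,        # 0 = pure LZMA, 1 = x86 filter + LZMA
        "props": props,               # lc, lp and pb in encoded form
        "dict_size": dict_size,       # dictionary size
        "uncompressed_size": size64,  # full 64-bit value stored in the file
    }


def truncate_to_int32(size64: int) -> int:
    """Keep only the low 32 bits and reinterpret them as a signed integer
    (assumption: this mirrors the 32-bit handling the advisory describes)."""
    low32 = size64 & 0xFFFFFFFF
    return low32 - (1 << 32) if low32 & 0x80000000 else low32


# Hypothetical crafted header: the size field has its top 32-bit sign bit set.
crafted = bytes([0, 0x5D]) + struct.pack("<I", 1 << 16) + struct.pack("<Q", 0xFFFFFFF0)
fields = parse_lzma86_header(crafted)
print(fields["uncompressed_size"], truncate_to_int32(fields["uncompressed_size"]))
```

A parser that validated the full 64-bit field, or rejected values that do not fit a non-negative 32-bit size, would not hand a negative size to its allocator; the sketch only shows how such a value can arise.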

On the Scalability and Security of Distributed Multiplayer Online Games

On The Scalability and Security of Distributed Multiplayer Online Games. Seyed Amir Yahyavi Firouz Abadi. Doctor of Philosophy, School of Computer Science, McGill University, Montreal, Quebec, Canada. April 2014

A thesis submitted to McGill University in partial fulfillment of the requirements of the degree of Doctor of Philosophy. © Seyed Amir Yahyavi Firouz Abadi, 2014

Abstract

Multiplayer Online Games (MOGs) are an extremely popular online technology, one that produces billions of dollars in revenues. Yet, providing scalable, fast, and cheat-resistant games is challenging. In this thesis we propose solutions to some of these challenges. The underlying architecture plays a crucial role in enabling the games to meet the scalability, response time, and low cost requirements that are of utmost importance in designing a successful massively multiplayer online game. Peer-to-peer architectures where the game runs exclusively on client machines, and hybrid approaches, where some tasks run on the clients while a central server controls other aspects, have, due to their distributed and collaborative nature, low infrastructure costs, and can achieve high scalability. They can also achieve fast response times by creating direct connections between players. However, they introduce their own challenges such as cheating. As one contribution of this thesis, we provide a comprehensive overview of current peer-to-peer and hybrid solutions for massively multiplayer games using a uniform terminology. Most of the solutions we studied fail to meet one of the following main requirements: (1) fast response time, (2) scalability, (3) cheat-resistance. This thesis makes several proposals that address these requirements: Watchmen, mobile security, AntReckoning, and AntAI.

C# Java PHP J2ME HTML

Jeremy Evers. 604-418-6986. [email protected]. http://jeremyevers.com/portfolio. #308 – 2220 West 2nd Ave, Vancouver, BC, V6K 1H9

Programming Languages

● C/C++ ● C# ● Javascript ● Actionscript (Flash 8) ● Java ● PHP ● NSIS installer scripting language ● z80 Assembly ● x86 Assembly ● 6502/6510 Assembly ● HuC6280 Assembly ● J2ME ● HTML

Areas of Expertise

● Software Development ● TCP/UDP/IP ● Debugging ● Optimization ● User Interface design ● Multi-Threading ● Web Development ● Graphics ● DirectX ● OpenGL ● Engine/Tools Development ● AI and Collision Detection ● Audio Engineering ● Audio DSP, Synthesis & Sequencing ● Digital Music & Sound Recording, Editing, Mixing and Mastering

● Target Platforms: PS3, Xbox One, Unity, Windows, Linux, Nintendo DS, Gameboy Advance, Gameboy Color, Flash, NEC Turbo Grafx

● Tools: Microsoft Visual Studio, Unity, Perforce, Wireshark, NEWT, Clumsy, Pro Motion, Platform Builder, GNU C/C++, Ethereal, Glowcode, Flash, mProjector, MySQL, Paint Shop Pro, Ableton Live, Nuendo, Wavelab, Waves Plugins, After Effects, Vegas, Word, Writer, Excel, Quark, Poser, Soundforge, ACID, Photoshop, CVS, Bugzilla, SOAP, Tortoise SVN

Employment History

United Front Games, Vancouver, British Columbia, Canada, 2008-2016. Programmer

● My main responsibility at United Front Games is to program videogame software for the Sony Playstation 3, Microsoft Xbox One and Windows PC.

● I was mostly involved in online components, but fixed bugs everywhere.

Smash and Grab (Steam, Xbox One, PS4) ~ Programmer

● Smash and Grab used Unity under the hood.

● I was brought on the project to build a new gameserver and port the game away from the Unity server lockstep model to a predicted model with time correction.

● I also wrote client-side DLLs that are used as our prediction and communication layer, using RakNet as the transport layer.

Distributed Doodling

Distributed Doodling. Daniel Burrell, Mike Gist, Jan Hosang, Dave Lawrence, Andrew Slater. January 15, 2009

1 Abstract

Communication in the business and academic world is increasingly taking to the digital highways of the internet. As yet, though, there is no easy way for a group of peers to collaborate on a design project involving diagrams being analysed and reworked. This project addresses that issue by attempting to create a distributed system that allows a group of people to connect over the internet and collaborate on a drawing.

The construction of the Tabula application was the result of the Distributed Doodling project. The application aims to provide the desired chat and drawing solution for collaborations by multiple users. To aid the construction of the application, the Qt framework was chosen to support the GUI development, while RakNet was found to provide the desired network functionality. Qt provides many useful widgets that greatly simplified development, and the signals and slots system creates an easy-to-use method for connecting possible user actions (pressing a button, for example) to a function. RakNet permits the layering of some TCP-like features over a UDP connection, greatly reducing the overheads that would have been associated with using TCP.

Overall, the architecture has been designed to be highly extensible in anticipation of future functionality being added. Custom 'Events' have been designed to represent actions of the user; this may include drawing an object or sending a text chat message. To keep a record of these Event objects, a logging system has been developed which uses a unique logical timestamp system to allow every Event to be identified and ordered.
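One common way to obtain unique, orderable logical timestamps for events like these is a Lamport-style counter paired with a node identifier. The Python sketch below illustrates that general technique only; the excerpt does not say how Tabula's timestamps actually work, and the names `LogicalTimestamp`, `Event` and `EventLog` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(order=True, frozen=True)
class LogicalTimestamp:
    counter: int   # Lamport-style counter, compared first
    node_id: int   # tie-breaker, so no two nodes produce equal stamps


@dataclass
class Event:
    stamp: LogicalTimestamp
    kind: str      # e.g. "draw" or "chat"
    payload: str


class EventLog:
    """Per-user log that stamps local events and merges remote ones."""

    def __init__(self, node_id: int) -> None:
        self.node_id = node_id
        self.counter = 0
        self.events: dict[LogicalTimestamp, Event] = {}

    def record_local(self, kind: str, payload: str) -> Event:
        # Every local action advances the counter before being stamped.
        self.counter += 1
        event = Event(LogicalTimestamp(self.counter, self.node_id), kind, payload)
        self.events[event.stamp] = event
        return event

    def receive(self, event: Event) -> None:
        # Catch up with the sender so later local events sort after this one.
        self.counter = max(self.counter, event.stamp.counter)
        self.events[event.stamp] = event

    def ordered(self) -> list[Event]:
        return [self.events[stamp] for stamp in sorted(self.events)]


alice, bob = EventLog(node_id=1), EventLog(node_id=2)
line = alice.record_local("draw", "line")
bob.receive(line)
reply = bob.record_local("chat", "nice line")
alice.receive(reply)
circle = alice.record_local("draw", "circle")
print([(e.stamp.counter, e.stamp.node_id, e.kind) for e in alice.ordered()])
```

Because the pair (counter, node_id) is unique per event, it can serve both as an identifier and as a sort key, which is the property the abstract asks of its timestamps.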

Jon Kenkel Resume.Pdf

JON KENKEL. jonkenkel.com. (202) 430-5665. [email protected]

TECHNICAL SKILLS

Languages: C++, C#, UnrealScript, C, Java, JavaScript, JQuery, HTML, CSS, PHP, Python, BASH, SQL, Lua, Groovy, Objective-C, Scheme, Prolog

Platforms: Windows, Ubuntu Linux, Mac OS X, Xbox One, Playstation 4

Software / APIs: Unreal 3/4, Unity (UNET, Raknet, uGUI, IMGUI), Visual Studio, Perforce, Git, SVN, OpenGL, DirectX, MySQL, VIM, Bootstrap, JQuery/UI, Grails, Phaser JS, Eclipse/IBM RAD, G++/GCC, GDB, XCode, Office

GAMES

Paladins – Unreal Engine 3 – Hero Shooter / Overwatch Competitor. May 2016 to Present. Software Engineer/Associate Software Engineer

Reload 360 – Unity 5 – Networked First Person Shooter. May 2016. Gameplay and Network Engineer in Team of 13. Networked Multiplayer (UNET) with Dead Reckoning and Lag compensated weapons. Mercator Projection Camera and Wall walking

Troncano – Unreal Engine 4 – 3D First-Person Platformer. November 2014. Lead Engineer in Team of 11. Player could push and pull off objects in the environment, with gameplay similar to Lunar Lander

No Gamer Left Behind – Unity 4.6 – Platformer with swarm of characters. November 2014. Lead Engineer in Team of 6. Player controls dozens of characters at once, trying to avoid hazards

EDUCATION

M.E.A.E., University of Utah. Entertainment Arts and Engineering Master Games Studio: Engineer (3.9 GPA). May 2016

B.S., Buena Vista University. Computer Science and Management Information Systems (3.9 GPA). May 2014

A.A., Iowa Western Community College. General Studies (3.9 GPA). May 2011

EXPERIENCE

Hirez Studios. August 2017 to Present. Software Engineer – Platform Team – Game Systems. Develop monetization features (e.g. VIP System) and gameplay systems (e.g.

EAI Endorsed Transactions on Serious Games Research Article Game Engines: a Survey

EAI Endorsed Transactions on Serious Games. Research Article

Game engines: a survey

A. Andrade (1,*)

(1) Virtual Campus Lda. Av. Fernão de Magalhães, 716, 1 PT 4350-151, Porto, Portugal, http://virtual-campus.eu

Abstract

Due to hardware limitations at the origin of the video game industry, each new game was generally coded from the ground up. Years later, from the evolution of hardware and the need for quick game development cycles, spawned the concept of game engine. A game engine is a reusable software layer allowing the separation of common game concepts from the game assets (levels, graphics, etc.). This paper surveys fourteen different game engines relevant today, ranging from the industry-level to the newcomer-friendlier ones.

Keywords: animation, game engines, game design, serious games, physics, rendering.

Received on 15 October 2015, accepted on 15 October 2015, published on 05 November 2015

Copyright © 2015 António Andrade, licensed to EAI. This is an open access article distributed under the terms of the Creative Commons Attribution licence (http://creativecommons.org/licenses/by/3.0/), which permits unlimited use, distribution and reproduction in any medium so long as the original work is properly cited.

doi: 10.4108/eai.5-11-2015.150615

1. Introduction

One might think that each new game to date, particularly the ones with innovative game mechanics, is developed from scratch as a singular entity, with no relation to previous [...]

[...] or the concept of game entity) also meant that teams could grow and specialize, improving productivity and game features. In the longer run, it ended transforming the typology of game development teams where we will now typically find several times as many artists as actual programmers [3].