Using Comparable Corpora to Augment Low Resource SMT Models

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Compilation of a Swiss German Dialect Corpus and Its Application to PoS Tagging

Compilation of a Swiss German Dialect Corpus and its Application to PoS Tagging. Nora Hollenstein and Noëmi Aepli, University of Zurich. Abstract: Swiss German is a dialect continuum whose dialects are very different from Standard German, the official language of the German part of Switzerland. However, dealing with Swiss German in natural language processing, usually the detour through Standard German is taken. As writing in Swiss German has become more and more popular in recent years, we would like to provide data to serve as a stepping stone to automatically process the dialects. We compiled NOAH’s Corpus of Swiss German Dialects consisting of various text genres, manually annotated with Part-of-Speech tags. Furthermore, we applied this corpus as training set to a statistical Part-of-Speech tagger and achieved an accuracy of 90.62%. 1 Introduction: Swiss German is not an official language of Switzerland, rather it includes dialects of Standard German, which is one of the four official languages. However, it is different from Standard German in terms of phonetics, lexicon, morphology and syntax. Swiss German is not dividable into a few dialects, in fact it is a dialect continuum with a huge variety. Swiss German is not only a spoken dialect but increasingly used in written form, especially in less formal text types. Often, Swiss German speakers write text messages, emails and blogs in Swiss German. However, in recent years it has become more and more popular and authors are publishing in their own dialect. Nonetheless, there is neither a writing standard nor an official orthography, which increases the variations dramatically due to the fact that people write as they please with their own style.

Minority Languages in India

Thomas Benedikter. Minority Languages in India: An appraisal of the linguistic rights of minorities in India. EURASIA-Net: Europe-South Asia Exchange on Supranational (Regional) Policies and Instruments for the Promotion of Human Rights and the Management of Minority Issues. Linguistic minorities in India: An appraisal of the linguistic rights of minorities in India. Bozen/Bolzano, March 2013. This study was originally written for the European Academy of Bolzano/Bozen (EURAC), Institute for Minority Rights, in the frame of the project Europe-South Asia Exchange on Supranational (Regional) Policies and Instruments for the Promotion of Human Rights and the Management of Minority Issues (EURASIA-Net). The publication is based on extensive research in eight Indian States, with the support of the European Academy of Bozen/Bolzano and the Mahanirban Calcutta Research Group, Kolkata. EURASIA-Net Partners: Accademia Europea Bolzano/Europäische Akademie Bozen (EURAC) – Bolzano/Bozen (Italy), Brunel University – West London (UK), Johann Wolfgang Goethe-Universität – Frankfurt am Main (Germany), Mahanirban Calcutta Research Group (India), South Asian Forum for Human Rights (Nepal), Democratic Commission of Human Development (Pakistan), and University of Dhaka (Bangladesh). Edited by © Thomas Benedikter 2013. Rights and permissions: Copying and/or transmitting parts of this work without prior permission, may be a violation of applicable law. The publishers encourage dissemination of this publication and would be happy to grant permission.

Semantic Approaches in Natural Language Processing

10th Conference on Natural Language Processing (KONVENS). Semantic Approaches in Natural Language Processing: Proceedings of the Conference on Natural Language Processing 2010. Edited by Manfred Pinkal, Ines Rehbein, Sabine Schulte im Walde, Angelika Storrer. This book contains state-of-the-art contributions to the 10th conference on Natural Language Processing, KONVENS 2010 (Konferenz zur Verarbeitung natürlicher Sprache), with a focus on semantic processing. The KONVENS in general aims at offering a broad perspective on current research and developments within the interdisciplinary field of natural language processing. The central theme draws specific attention towards addressing linguistic aspects of meaning, covering deep as well as shallow approaches to semantic processing. The contributions address both knowledge-based and data-driven methods for modelling and acquiring semantic information, and discuss the role of semantic information in applications of language technology. The articles demonstrate the importance of semantic processing, and present novel and creative approaches to natural language processing in general. Some contributions put their focus on developing and improving NLP systems for tasks like Named Entity Recognition or Word Sense Disambiguation, or focus on semantic knowledge acquisition and exploitation with respect to collaboratively built resources, or harvesting semantic information in virtual games. Others are set within the context of real-world applications, such as Authoring Aids, Text Summarisation and Information Retrieval.

Text Corpora and the Challenge of Newly Written Languages

Proceedings of the 1st Joint SLTU and CCURL Workshop (SLTU-CCURL 2020), pages 111–120, Language Resources and Evaluation Conference (LREC 2020), Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC. Text Corpora and the Challenge of Newly Written Languages. Alice Millour∗, Karën Fort∗†. ∗Sorbonne Université / STIH, †Université de Lorraine, CNRS, Inria, LORIA. 28, rue Serpente 75006 Paris, France, 54000 Nancy, France. Abstract: Text corpora represent the foundation on which most natural language processing systems rely. However, for many languages, collecting or building a text corpus of a sufficient size still remains a complex issue, especially for corpora that are accessible and distributed under a clear license allowing modification (such as annotation) and further resharing. In this paper, we review the sources of text corpora usually called upon to fill the gap in low-resource contexts, and how crowdsourcing has been used to build linguistic resources. Then, we present our own experiments with crowdsourcing text corpora and an analysis of the obstacles we encountered. Although the results obtained in terms of participation are still unsatisfactory, we advocate that the effort towards a greater involvement of the speakers should be pursued, especially when the language of interest is newly written. Keywords: text corpora, dialectal variants, spelling, crowdsourcing. 1. Introduction: Speakers from various linguistic communities are increasingly writing their languages (Outinoff, 2012), and the In- [...] increasing number of speakers (see for instance, the studies on specific languages carried by Rivron (2012) or Soria et al.

Bringing Ol Chiki to the Digital World

Typography Day 2016: Typography and Education, http://www.typoday.in. Bringing Ol Chiki to the digital world. Saxena, Pooja; Panigrahi, Subhashish, Programme Officer, Access to Knowledge, Center for Internet and Society, Bengaluru (India). Abstract: Can a typeface turn the fate of an indigenous language around by making communication possible on digital platforms and driving digital activism? In 2014, a project was initiated with financial support from the Access to Knowledge programme at The Center for Internet and Society, Bangalore to look for answers to this question. The project’s goal was to design a typeface family supporting Ol Chiki script, which is used to write Santali, along with input methods that would make typing in Ol Chiki possible. It was planned that these resources would be released under a free license, with the hope to provide tools to Santali speakers to read and write in their own script online. Key words: Ol Chiki script, typeface design, minority script, Santali language. 1. Introduction: The main aim of this paper is to share the experiences and knowledge gained by working on a typeface and input method design project for a minority script from India, in this case Ol Chiki, which is used to write Santali. This project was initiated by the Access to Knowledge programme at the Center for Internet and Society (CIS-A2K), whose mandate is to work towards catalysing the growth of the free and open knowledge movement in South Asia and in Indic languages. From September 2012, CIS has been actively involved in growing the open knowledge movement in India through a grant received from the Wikimedia Foundation (WMF).

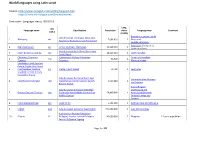

World Languages Using Latin Script

World languages using Latin script. Source: http://www.omniglot.com/writing/langalph.htm https://www.ethnologue.com/browse/names Sort order: Language status, ISO 639-3. Columns: Language name, ISO 639-3, Classification, Population, Lang. status (EGIDS), Language map, Comment. Botswana, Lesotho, South Indo-European, Germanic, West, Low 1. Afrikaans, afr 7,096,810 1 Africa and Saxon-Low Franconian, Low Franconian SwazilandNamibia Azerbaijan,Georgia,Iraq 2. Azeri,Azerbaijani azj Turkic, Southern, Azerbaijani 24,226,940 1 Jordan and Syria Indo-European Balto-Slavic Slavic West 3. Czech Bohemian Cestina ces 10,619,340 1 Czech Republic Czech-Slovak Chamorro,Chamorru Austronesian Malayo-Polynesian Guam and Northern 4. cha 94,700 1 Tjamoro Chamorro Mariana Islands Seychelles Creole,Seselwa Creole, Creole, Ilois, Kreol, 5. Kreol Seselwa, Seselwa, crs Creole, French based 72,700 1 Seychelles Seychelles Creole French, Seychellois Creole Indo-European Germanic North East Denmark Finland Norway 6. DanishDansk Rigsdansk dan Scandinavian Danish-Swedish Danish- 5,520,860 1 and Sweden Riksmal Danish AustriaBelgium Indo-European Germanic West High Luxembourg and 7. German Deutsch Tedesco deu German German Middle German East 69,800,000 1 NetherlandsDenmark Middle German Finland Norway and Sweden 8. Estonianestieesti keel ekk Uralic Finnic 1,132,500 1 Estonia Latvia and Lithuania 9. English eng Indo-European Germanic West English 341,000,000 1 over 140 countries Austronesian Malayo-Polynesian 10. Filipino fil Philippine Greater Central Philippine 45,000,000 1 Philippines L2 users population Central Philippine Tagalog Denmark Finland Norway 11.

Parsing Approaches for Swiss German

Master’s thesis presented to the Faculty of Arts and Social Sciences of the University of Zurich for the degree of Master of Arts UZH. Parsing Approaches for Swiss German. Author: Noëmi Aepli. Student ID Nr.: 10-719-250. Examiner: Prof. Dr. Martin Volk. Supervisor: Dr. Simon Clematide. Institute of Computational Linguistics. Submission date: 10.01.2018. Abstract: This thesis presents work on universal dependency parsing for Swiss German. Natural language parsing describes the process of syntactic analysis in Natural Language Processing (NLP) and is a key area as many applications rely on its output. Building a statistical parser requires expensive resources which are only available for a few dozen languages. Hence, for the majority of the world’s languages, other ways have to be found to circumvent the low-resource problem. Triggered by such scenarios, research on different approaches to cross-lingual learning is going on. These methods seem promising for closely related languages and hence especially for dialects and varieties. Swiss German is a dialect continuum of the Alemannic dialect group. It comprises numerous varieties used in the German-speaking part of Switzerland. Although mainly oral varieties (Mundarten), they are frequently used in written communication. On the basis of their high acceptance in the Swiss culture and with the introduction of digital communication, Swiss German has undergone a spread over all kinds of communication forms and social media. Considering the lack of standard spelling rules, this leads to a huge linguistic variability because people write the way they speak.

Ročník 68, 2017

2 ROČNÍK 68, 2017. JAZYKOVEDNÝ ČASOPIS: VEDECKÝ ČASOPIS PRE OTÁZKY TEÓRIE JAZYKA. JOURNAL OF LINGUISTICS: SCIENTIFIC JOURNAL FOR THE THEORY OF LANGUAGE. Hlavná redaktorka/Editor-in-chief: doc. Mgr. Gabriela Múcsková, PhD. Výkonní redaktori/Managing Editors: PhDr. Ingrid Hrubaničová, PhD., Mgr. Miroslav Zumrík, PhD. Redakčná rada/Editorial Board: doc. PhDr. Ján Bosák, CSc. (Bratislava), PhDr. Klára Buzássyová, CSc. (Bratislava), prof. PhDr. Juraj Dolník, DrSc. (Bratislava), PhDr. Ingrid Hrubaničová, PhD. (Bratislava), Doc. Mgr. Martina Ivanová, PhD. (Prešov), Mgr. Nicol Janočková, PhD. (Bratislava), Mgr. Alexandra Jarošová, CSc. (Bratislava), prof. PaedDr. Jana Kesselová, CSc. (Prešov), PhDr. Ľubor Králik, CSc. (Bratislava), PhDr. Viktor Krupa, DrSc. (Bratislava), doc. Mgr. Gabriela Múcsková, PhD. (Bratislava), Univ. Prof. Mag. Dr. Stefan Michael Newerkla (Vienna – Austria), Associate Prof. Mark Richard Lauersdorf, Ph.D. (Kentucky – USA), doc. Mgr. Martin Ološtiak, PhD. (Prešov), prof. PhDr. Slavomír Ondrejovič, DrSc. (Bratislava), prof. PaedDr. Vladimír Patráš, CSc. (Banská Bystrica), prof. PhDr. Ján Sabol, DrSc. (Košice), prof. PhDr. Juraj Vaňko, CSc. (Nitra), Mgr. Miroslav Zumrík, PhD., prof. PhDr. Pavol Žigo, CSc. (Bratislava). Technický redaktor/Technical editor: Mgr. Vladimír Radik. Vydáva/Published by: Jazykovedný ústav Ľudovíta Štúra Slovenskej

N4035 Proposal to Encode the Maithili Script in ISO/IEC 10646

ISO/IEC JTC1/SC2/WG2 N4035 L2/11-175 2011-05-05. Proposal to Encode the Maithili Script in ISO/IEC 10646. Anshuman Pandey, Department of History, University of Michigan, Ann Arbor, Michigan, U.S.A. May 5, 2011. 1 Introduction: This is a proposal to encode the Maithili script in the Universal Character Set (UCS). The recommendations made here are based upon the following documents: • L2/06-226 “Request to Allocate the Maithili Script in the Unicode Roadmap” • N3765 L2/09-329 “Towards an Encoding for the Maithili Script in ISO/IEC 10646”. 2 Background: Maithili is the traditional writing system for the Maithili language (ISO 639: mai), which is spoken predominantly in the state of Bihar in India and in the Narayani and Janakpur zones of Nepal by more than 35 million people. Maithili is a scheduled language of India and the second most spoken language in Nepal. Maithili is a Brahmi-based script derived from Gauḍī, or ‘Proto-Bengali’, which evolved from the Kuṭila branch of Brahmi by the 10th century. It is related to the Bengali, Newari, and Oriya, which are also descended from Gauḍī, and became differentiated from these scripts by the 14th century. It remained the dominant writing system for Maithili until the 20th century. The script is also known as Mithilākṣara and Tirhutā, names which refer to Mithila, or Tirhut, as these regions of India and Nepal are historically known. The Maithili script is known in Bengal as tiruṭe, meaning the script of the Tirhuta region. The Maithili script is associated with a scholarly and scribal tradition that has produced literary and philosophical works in the Maithili and Sanskrit languages from at least the 14th century.

Language Distinctiveness*

RAI – data on language distinctiveness. RAI data: Language distinctiveness*. Country profiles. *This document provides data production information for the RAI-Rokkan dataset. Last edited on October 7, 2020. Compiled by Gary Marks with research assistance by Noah Dasanaike. Citation: Liesbet Hooghe and Gary Marks (2016). Community, Scale and Regional Governance: A Postfunctionalist Theory of Governance, Vol. II. Oxford: OUP. Sarah Shair-Rosenfield, Arjan H. Schakel, Sara Niedzwiecki, Gary Marks, Liesbet Hooghe, Sandra Chapman-Osterkatz (2021). “Language difference and Regional Authority.” Regional and Federal Studies, Vol. 31. DOI: 10.1080/13597566.2020.1831476 Contents: Introduction, Albania, Argentina, Australia, Austria, Bahamas, Bangladesh

Inspire New Readers Campaign

Inspire New Readers Campaign: Results from 2018. In January 2018, the Wikimedia Foundation ran an Inspire Campaign to generate & fund ideas for how our community could reach “New Readers.” The problem: Awareness of Wikipedia and other projects is very low among internet users in many countries. [Chart: Wikipedia awareness among internet users before New Readers interventions, covering Iraq, USA, France, Japan, Mexico, India, Nigeria and Brazil.] Sources: https://meta.wikimedia.org/wiki/Global_Reach/Insights Project goals. Primary: Support communities to increase awareness of Wikimedia Projects. Secondary: Create learning patterns and guidelines to support future awareness grants. Project timeline. Inspire campaign (JAN 2018): Build community awareness of the problem & generate possible solutions. Pilot grants (MAR - OCT 2018): Communities run projects to raise awareness. Rapid grants (LAUNCH JAN 2019): Templates and learning patterns for rapid grants to support ongoing efforts. Campaign overview. Inspire campaign GOAL: more communities know that low awareness is a problem where they live. 538 participants (Target: 300); 362 ideas (Target: 150); 8 funded grants, 11 submitted, Target: 20 [3 Nigeria, 3 India, 1 Nepal, 1 DRC]. Icons from the noun project, CC BY SA 3.0 by Tengwan & corpus delecti. Grants overview. 8 grants awarded. Total budget: $12,982. ● 4 Online promotion: 3 - Video production, 1 - Social media promotion. ● 4 Offline promotion: 1 - Radio show, 1 - Rickshaw announcements, 2 - Workshops. Icons: 1. Video by Christian Antonius 2. instagram by Arthur

Proceedings of the Fourth Workshop on NLP for Similar Languages, Varieties and Dialects, Pages 1–15, Valencia, Spain, April 3, 2017

VarDial 2017: Fourth Workshop on NLP for Similar Languages, Varieties and Dialects (VarDial’2017). Proceedings of the Workshop. April 3, 2017, Valencia, Spain. © 2017 The Association for Computational Linguistics. Order copies of this and other ACL proceedings from: Association for Computational Linguistics (ACL), 209 N. Eighth Street, Stroudsburg, PA 18360, USA. Tel: +1-570-476-8006 Fax: +1-570-476-0860. ISBN 978-1-945626-43-2. Preface: VarDial is a well-established series of workshops held annually and co-located with top-tier international NLP conferences. Previous editions of VarDial were VarDial’2014, which was co-located with COLING’2014, LT4VarDial’2015, which was held together with RANLP’2015, and finally VarDial’2016 co-located with COLING’2016. The great interest of the community has made possible the fourth edition of the Workshop on NLP for Similar Languages, Varieties and Dialects (VarDial’2017), co-located with EACL’2017 in Valencia, Spain. The VarDial series has attracted researchers working on a wide range of topics related to linguistic variation such as building and adapting language resources for language varieties and dialects, creating language technology and applications that make use of language closeness, and exploiting existing resources in a related language or a language variety. We believe that this is a very timely series of workshops, as research in language variation is much needed in today’s multi-lingual world, where several closely-related languages, language varieties, and dialects are in daily use, not only as spoken colloquial language but also in written media, e.g., in SMS, chats, and social networks.