Semantic Approaches in Natural Language Processing

Recommended publications

Compilation of a Swiss German Dialect Corpus and Its Application to PoS Tagging

Compilation of a Swiss German Dialect Corpus and its Application to PoS Tagging. Nora Hollenstein and Noëmi Aepli, University of Zurich.

Abstract. Swiss German is a dialect continuum whose dialects are very different from Standard German, the official language of the German part of Switzerland. However, dealing with Swiss German in natural language processing, usually the detour through Standard German is taken. As writing in Swiss German has become more and more popular in recent years, we would like to provide data to serve as a stepping stone to automatically process the dialects. We compiled NOAH’s Corpus of Swiss German Dialects consisting of various text genres, manually annotated with Part-of-Speech tags. Furthermore, we applied this corpus as training set to a statistical Part-of-Speech tagger and achieved an accuracy of 90.62%.

1 Introduction. Swiss German is not an official language of Switzerland, rather it includes dialects of Standard German, which is one of the four official languages. However, it is different from Standard German in terms of phonetics, lexicon, morphology and syntax. Swiss German is not dividable into a few dialects, in fact it is a dialect continuum with a huge variety. Swiss German is not only a spoken dialect but increasingly used in written form, especially in less formal text types. Often, Swiss German speakers write text messages, emails and blogs in Swiss German. However, in recent years it has become more and more popular and authors are publishing in their own dialect. Nonetheless, there is neither a writing standard nor an official orthography, which increases the variations dramatically due to the fact that people write as they please with their own style.

Emacspeak — the Complete Audio Desktop User Manual

Emacspeak: The Complete Audio Desktop. User Manual. T. V. Raman. Last updated: 19 November 2016. Copyright © 1994–2016 T. V. Raman. All Rights Reserved. Permission is granted to make and distribute verbatim copies of this manual without charge provided the copyright notice and this permission notice are preserved on all copies.

Short Contents: Emacspeak; 1 Copyright; 2 Announcing Emacspeak Manual 2nd Edition As An Open Source Project; 3 Background; 4 Introduction; 5 Installation Instructions; 6 Basic Usage; 7 The Emacspeak Audio Desktop; 8 Voice Lock; 9 Using Online Help With Emacspeak; 10 Emacs Packages; 11 Running Terminal Based Applications; 12 Emacspeak Commands And Options; 13 Emacspeak Keyboard Commands; 14 TTS Servers; 15 Acknowledgments; 16 Concept Index; 17 Key Index.

Table of Contents: Emacspeak; 1 Copyright …

A Unicellular Relative of Animals Generates a Layer of Polarized Cells

RESEARCH ARTICLE. A unicellular relative of animals generates a layer of polarized cells by actomyosin-dependent cellularization. Omaya Dudin1†*, Andrej Ondracka1†, Xavier Grau-Bové1,2, Arthur AB Haraldsen3, Atsushi Toyoda4, Hiroshi Suga5, Jon Bråte3, Iñaki Ruiz-Trillo1,6,7*. 1Institut de Biologia Evolutiva (CSIC-Universitat Pompeu Fabra), Barcelona, Spain; 2Department of Vector Biology, Liverpool School of Tropical Medicine, Liverpool, United Kingdom; 3Section for Genetics and Evolutionary Biology (EVOGENE), Department of Biosciences, University of Oslo, Oslo, Norway; 4Department of Genomics and Evolutionary Biology, National Institute of Genetics, Mishima, Japan; 5Faculty of Life and Environmental Sciences, Prefectural University of Hiroshima, Hiroshima, Japan; 6Departament de Genètica, Microbiologia i Estadística, Universitat de Barcelona, Barcelona, Spain; 7ICREA, Barcelona, Spain. *For correspondence: OD and IR-T.

Abstract. In animals, cellularization of a coenocyte is a specialized form of cytokinesis that results in the formation of a polarized epithelium during early embryonic development. It is characterized by coordinated assembly of an actomyosin network, which drives inward membrane invaginations. However, whether coordinated cellularization driven by membrane invagination exists outside animals is not known. To that end, we investigate cellularization in the ichthyosporean Sphaeroforma arctica, a close unicellular relative of animals. We show that the process of cellularization involves coordinated inward plasma membrane invaginations dependent on an actomyosin network and reveal the temporal order of its assembly. This leads to the formation of a polarized layer of cells resembling an epithelium. We show that this stage is associated with tightly regulated transcriptional activation of genes involved in cell adhesion.

Manual De Supervivencia En Linux

Francisco Solsona and Elisa Viso (coordinators). Manual de supervivencia en Linux. Mauricio Aldazosa, José Galaviz, Iván Hernández, Canek Peláez, Karla Ramírez, Fernanda Sánchez Puig, Francisco Solsona, Manuel Sugawara, Arturo Vázquez, Elisa Viso. Facultad de Ciencias, UNAM, 2007. This work appears thanks to the support of the PAPIME project PE-100205. Manual de supervivencia en Linux, 1st edition, 2007. Cover design: Laura Uribe. © Universidad Nacional Autónoma de México, Facultad de Ciencias, Circuito exterior, Ciudad Universitaria, México 04510. ISBN: 978-970-32-5040-0. Printed and made in Mexico.

Contents: Preface. 1. Introduction to Unix: 1.1 History; 1.1.1 Free UNIX systems; 1.1.2 The GNU project; 1.2 Time-sharing systems; 1.2.1 Multi-user systems; 1.3 Logging in; 1.4 Emacs; 1.4.1 Introduction to Emacs; 1.4.2 Emacs and you. 2. Graphical environments in Linux: 2.1 Desktop environments; 2.2 KDE; 2.2.1 Configuring KDE; 2.2.2 KDE in 1, 2, 3; 2.2.3 Burning discs with K3b; 2.2.4 Amarok; 2.3 Emacs; 2.4 Getting started with Emacs. 3. File system: 3.1 The file system; 3.1.1 Absolute and relative paths; 3.2 Moving around the file system tree; 3.2.1 File permissions; 3.2.2 Standard files and redirection; 3.3 Other Unix commands …

Latexsample-Thesis

INTEGRAL ESTIMATION IN QUANTUM PHYSICS by Jane Doe. A dissertation submitted to the faculty of The University of Utah in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Department of Mathematics, The University of Utah, May 2016. Copyright © Jane Doe 2016. All Rights Reserved.

The University of Utah Graduate School. STATEMENT OF DISSERTATION APPROVAL. The dissertation of Jane Doe has been approved by the following supervisory committee members: Cornelius Lánczos, Chair(s), date approved 17 Feb 2016; Hans Bethe, Member, date approved 17 Feb 2016; Niels Bohr, Member, date approved 17 Feb 2016; Max Born, Member, date approved 17 Feb 2016; Paul A. M. Dirac, Member, date approved 17 Feb 2016; by Petrus Marcus Aurelius Featherstone-Hough, Chair/Dean of the Department/College/School of Mathematics; and by Alice B. Toklas, Dean of The Graduate School.

ABSTRACT. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah. Blah blah blah blah blah blah blah blah blah blah blah blah blah blah blah.

Bibliography

[AG06] Ken Arnold and James Gosling. The Java Programming Language. Addison-Wesley, Reading, MA, USA, fourth edition, 2006.
[Aha00] Dan Aharoni. Cogito, ergo sum! cognitive processes of students dealing with data structures. In Proceedings of SIGCSE’00, pages 26–30, ACM Press, March 2000.
[AHU74] Alfred V. Aho, John E. Hopcroft, and Jeffrey D. Ullman. The Design and Analysis of Computer Algorithms. Addison-Wesley, Reading, MA, 1974.
[AHU83] Alfred V. Aho, John E. Hopcroft, and Jeffrey D. Ullman. Data Structures and Algorithms. Addison-Wesley, Reading, MA, 1983.
[BB96] G. Brassard and P. Bratley. Fundamentals of Algorithmics. Prentice Hall, Upper Saddle River, NJ, 1996.
[Ben75] John Louis Bentley. Multidimensional binary search trees used for associative searching. Communications of the ACM, 18(9):509–517, September 1975. ISSN: 0001-0782.
[Ben82] John Louis Bentley. Writing Efficient Programs. Prentice Hall, Upper Saddle River, NJ, 1982.
[Ben84] John Louis Bentley. Programming pearls: The back of the envelope. Communications of the ACM, 27(3):180–184, March 1984.
[Ben85] John Louis Bentley. Programming pearls: Thanks, heaps. Communications of the ACM, 28(3):245–250, March 1985.
[Ben86] John Louis Bentley. Programming pearls: The envelope is back. Communications of the ACM, 29(3):176–182, March 1986.
[Ben88] John Bentley. More Programming Pearls: Confessions of a Coder. Addison-Wesley, Reading, MA, 1988.
[Ben00] John Bentley. Programming Pearls. Addison-Wesley, Reading, MA, second edition, 2000.
[BG00] Sara Baase and Allen Van Gelder. Computer Algorithms: Introduction to Design & Analysis. Addison-Wesley, Reading, MA, USA, third edition, 2000.
[BM85] John Louis Bentley and Catherine C. …

Text Corpora and the Challenge of Newly Written Languages

Proceedings of the 1st Joint SLTU and CCURL Workshop (SLTU-CCURL 2020), pages 111–120. Language Resources and Evaluation Conference (LREC 2020), Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC.

Text Corpora and the Challenge of Newly Written Languages. Alice Millour∗, Karën Fort∗†. ∗Sorbonne Université / STIH, †Université de Lorraine, CNRS, Inria, LORIA. 28, rue Serpente 75006 Paris, France; 54000 Nancy, France.

Abstract. Text corpora represent the foundation on which most natural language processing systems rely. However, for many languages, collecting or building a text corpus of a sufficient size still remains a complex issue, especially for corpora that are accessible and distributed under a clear license allowing modification (such as annotation) and further resharing. In this paper, we review the sources of text corpora usually called upon to fill the gap in low-resource contexts, and how crowdsourcing has been used to build linguistic resources. Then, we present our own experiments with crowdsourcing text corpora and an analysis of the obstacles we encountered. Although the results obtained in terms of participation are still unsatisfactory, we advocate that the effort towards a greater involvement of the speakers should be pursued, especially when the language of interest is newly written. Keywords: text corpora, dialectal variants, spelling, crowdsourcing.

1. Introduction. Speakers from various linguistic communities are increasingly writing their languages (Outinoff, 2012), and the In- … increasing number of speakers (see for instance, the studies on specific languages carried by Rivron (2012) or Soria et al. …

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Editor War - Wikipedia, the Free Encyclopedia

11/20/13. Editor war - Wikipedia, the free encyclopedia (en.wikipedia.org/wiki/Editor_war).

Editor war is the common name for the rivalry between users of the vi and Emacs text editors. The rivalry has become a lasting part of hacker culture and the free software community. Many flame wars have been fought between groups insisting that their editor of choice is the paragon of editing perfection, and insulting the others. Unlike the related battles over operating systems, programming languages, and even source code indent style, choice of editor usually only affects oneself.

Contents: 1 Differences between vi and Emacs; 1.1 Benefits of vi-like editors; 1.2 Benefits of Emacs; 2 Humor; 3 Current state of the editor war; 4 See also; 5 Notes; 6 References; 7 External links.

Differences between vi and Emacs. The most important differences between vi and Emacs are presented in the following table:

Keystroke execution. vi: vi editing retains each permutation of typed keys. This creates a path in the decision tree which unambiguously identifies any command. Emacs: Emacs commands are key combinations for which modifier keys are held down while other keys are pressed; a command gets executed once completely typed. This still forms a decision tree of commands, but not one of individual keystrokes.

vi: Historically, vi is a smaller and faster program, but with less capacity for customization. The vim version of vi has evolved to provide significantly … Emacs: Emacs takes longer to start up (even compared to vim) and requires more memory.

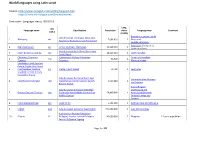

World Languages Using Latin Script

World languages using Latin script. Source: http://www.omniglot.com/writing/langalph.htm https://www.ethnologue.com/browse/names. Sort order: Language status, ISO 639-3.

| No. | Language name | ISO 639-3 | Classification | Population | Language status (EGIDS) | Language map | Comment |
|---|---|---|---|---|---|---|---|
| 1 | Afrikaans | afr | Indo-European, Germanic, West, Low Saxon-Low Franconian, Low Franconian | 7,096,810 | 1 | Botswana, Lesotho, South Africa and Swaziland, Namibia | |
| 2 | Azeri, Azerbaijani | azj | Turkic, Southern, Azerbaijani | 24,226,940 | 1 | Azerbaijan, Georgia, Iraq, Jordan and Syria | |
| 3 | Czech, Bohemian, Cestina | ces | Indo-European, Balto-Slavic, Slavic, West, Czech-Slovak | 10,619,340 | 1 | Czech Republic | |
| 4 | Chamorro, Chamorru, Tjamoro | cha | Austronesian, Malayo-Polynesian, Chamorro | 94,700 | 1 | Guam and Northern Mariana Islands | |
| 5 | Seychelles Creole, Seselwa Creole, Creole, Ilois, Kreol, Kreol Seselwa, Seselwa, Seychelles Creole French, Seychellois Creole | crs | Creole, French based | 72,700 | 1 | Seychelles | |
| 6 | Danish, Dansk, Rigsdansk | dan | Indo-European, Germanic, North, East Scandinavian, Danish-Swedish, Danish-Riksmal, Danish | 5,520,860 | 1 | Denmark, Finland, Norway and Sweden | |
| 7 | German, Deutsch, Tedesco | deu | Indo-European, Germanic, West, High German, German, Middle German, East Middle German | 69,800,000 | 1 | Austria, Belgium, Luxembourg and Netherlands; Denmark, Finland, Norway and Sweden | |
| 8 | Estonian, esti, eesti keel | ekk | Uralic, Finnic | 1,132,500 | 1 | Estonia, Latvia and Lithuania | |
| 9 | English | eng | Indo-European, Germanic, West, English | 341,000,000 | 1 | over 140 countries | |
| 10 | Filipino | fil | Austronesian, Malayo-Polynesian, Philippine, Greater Central Philippine, Central Philippine, Tagalog | 45,000,000 | 1 | Philippines | L2 users population |
| 11 | … | … | … | … | … | Denmark, Finland, Norway … | |

Parsing Approaches for Swiss German

Master’s thesis presented to the Faculty of Arts and Social Sciences of the University of Zurich for the degree of Master of Arts UZH. Parsing Approaches for Swiss German. Author: Noëmi Aepli. Student ID Nr.: 10-719-250. Examiner: Prof. Dr. Martin Volk. Supervisor: Dr. Simon Clematide. Institute of Computational Linguistics. Submission date: 10.01.2018.

Abstract. This thesis presents work on universal dependency parsing for Swiss German. Natural language parsing describes the process of syntactic analysis in Natural Language Processing (NLP) and is a key area as many applications rely on its output. Building a statistical parser requires expensive resources which are only available for a few dozen languages. Hence, for the majority of the world’s languages, other ways have to be found to circumvent the low-resource problem. Triggered by such scenarios, research on different approaches to cross-lingual learning is going on. These methods seem promising for closely related languages and hence especially for dialects and varieties.

Swiss German is a dialect continuum of the Alemannic dialect group. It comprises numerous varieties used in the German-speaking part of Switzerland. Although mainly oral varieties (Mundarten), they are frequently used in written communication. On the basis of their high acceptance in the Swiss culture and with the introduction of digital communication, Swiss German has undergone a spread over all kinds of communication forms and social media. Considering the lack of standard spelling rules, this leads to a huge linguistic variability because people write the way they speak.

Ročník 68, 2017

Jazykovedný časopis, 2, Volume 68, 2017. Journal of Linguistics: Scientific Journal for the Theory of Language.

Editor-in-chief: doc. Mgr. Gabriela Múcsková, PhD. Managing Editors: PhDr. Ingrid Hrubaničová, PhD., Mgr. Miroslav Zumrík, PhD.

Editorial Board: doc. PhDr. Ján Bosák, CSc. (Bratislava), PhDr. Klára Buzássyová, CSc. (Bratislava), prof. PhDr. Juraj Dolník, DrSc. (Bratislava), PhDr. Ingrid Hrubaničová, PhD. (Bratislava), doc. Mgr. Martina Ivanová, PhD. (Prešov), Mgr. Nicol Janočková, PhD. (Bratislava), Mgr. Alexandra Jarošová, CSc. (Bratislava), prof. PaedDr. Jana Kesselová, CSc. (Prešov), PhDr. Ľubor Králik, CSc. (Bratislava), PhDr. Viktor Krupa, DrSc. (Bratislava), doc. Mgr. Gabriela Múcsková, PhD. (Bratislava), Univ. Prof. Mag. Dr. Stefan Michael Newerkla (Vienna, Austria), Associate Prof. Mark Richard Lauersdorf, Ph.D. (Kentucky, USA), doc. Mgr. Martin Ološtiak, PhD. (Prešov), prof. PhDr. Slavomír Ondrejovič, DrSc. (Bratislava), prof. PaedDr. Vladimír Patráš, CSc. (Banská Bystrica), prof. PhDr. Ján Sabol, DrSc. (Košice), prof. PhDr. Juraj Vaňko, CSc. (Nitra), Mgr. Miroslav Zumrík, PhD., prof. PhDr. Pavol Žigo, CSc. (Bratislava).

Technical editor: Mgr. Vladimír Radik. Published by: Jazykovedný ústav Ľudovíta Štúra Slovenskej