Michael Barone McMaster University - Psychology

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

2018 Celebrity Birthday Book!

Contents

- 1 2018
- 1.1 January
- January 1 - Verne Troyer gets the start of a project (2018-01-01 00:02)
- January 2 - Jack Hanna gets animal considerations (2018-01-02 09:00)
- January 3 - Dan Harmon gets pestered (2018-01-03 09:00)
- January 4 - Dave Foley gets an outdoor slumber (2018-01-04 09:00)
- January 5 - deadmau5 gets a restructured week (2018-01-05 09:00)
- January 6 - Julie Chen gets variations on a dining invitation (2018-01-06 09:00)
- January 7 - Katie Couric gets a baristo’s indolence (2018-01-07 09:00)
- January 8 - Jenny Lewis gets a young Peter Pan (2018-01-08 09:00)
- January 9 - Joan Baez gets Mickey Brennan’d (2018-01-09 09:00)
- January 10 - Jemaine Clement gets incremental name dropping (2018-01-10 09:00)
- January 11 - Mary J. Blige gets transferable Bop-It skills (2018-01-11 09:00)
- January 12 - Raekwon gets world leader factoids (2018-01-12 09:00)
- January 13 - Julia Louis-Dreyfus gets a painful hallumination (2018-01-13 09:00)
- January 14 - Jason Bateman gets a squirrel’s revenge (2018-01-14 09:00)
- January 15 - Charo gets an avian alarm (2018-01-15 09:00)
- January 16 - Lin-Manuel Miranda gets an alternate path to a coveted award (2018-01-16 09:00)
- January 17 - Joshua Malina gets a Baader-Meinhof’d rice pudding (2018-01-17 09:00)
- January 18 - Jason Segel gets a body donation (2018-01-18 09:00)

MIC Buzz Magazine Article 10402 Reference Table1 Cuba Watch 040517 Cuban Music Is Caribbean Music Not Latin Music 15.Numbers

Reference Information Table 1 (Updated 5th June 2017)

For: Article 10402 | Cuba Watch 04/05/2017

NB: All content and featured images copyrights reserved to MIC Buzz Limited content and image providers and also content and image owners.

Title: Cuban Music Is Caribbean Music, Not Latin Music.

Item 1

- Subject: Danzon Mambo Creator
- Date and Timeline: 1938 -- One of his compositions, was broadcast by Arcaño in 1938, was a rhythmic danzón entitled ‘Mambo’
- Name and Topic: Orestes Lopez (Celloist and pianist)
- Nationality: Cuban
- Document / information: Born in Havana on December 29, 1911. It is known the world over in that it was Orestes Lopez, Arcano's celloist and pianist who invented the Danzon Mambo in 1938. Orestes's brother, bassist Israel "Cachao" Lopez, wrote the arrangements which enables Arcano Y Sus Maravillas to enjoy world-wide recognition. Arcano and Cachao are alive. Orestes died December 1991 in Havana. And also: In 29 August 1908, Havana, Cuba. As a child López studied several instruments, including piano and cello, and he was briefly with a local symphony orchestra. His brother, Israel ‘Cachao’ López, also became a musician and influential composer. From the late 20s onwards, López played with charanga bands such as that led by Miguel Vásquez and he also led and co-led bands. In 1937 he joined Antonio Arcaño’s band, Sus Maravillas. Playing piano, cello and bass, López also wrote many arrangements in addition to composing some original music.
- Website references / Origins: Artist Biography by Max Salazar, http://www.allmusic.com/artist/antonio-arcaño-mn0001534741/biography; Artist Biography by allmusic.com, http://www.allmusic.com/artist/orestes-lopez-mn0000485432

Wonderful! 143: Rare, Exclusive Gak Published July 29th, 2020 Listen on TheMcElroy.family

Wonderful! 143: Rare, Exclusive Gak

Published July 29th, 2020

Listen on TheMcElroy.family

[theme music plays]

Rachel: I'm gonna get so sweaty in here.

Griffin: Are you?

Rachel: It is… hotototot.

Griffin: Okay. Is this the show? Are we in it?

Rachel: Hi, this is Rachel McElroy!

Griffin: Hi, this is Griffin McElroy.

Rachel: And this is Wonderful!

Griffin: It’s gettin’ sweaaatyyy!

Rachel: [laughs]

Griffin: It’s not—it doesn’t feel that bad to me.

Rachel: See, you're used to it.

Griffin: Y'know what it was? Mm, I had my big fat gaming rig pumping out pixels and frames. Comin’ at me hot and heavy. Master Chief was there. Just so fuckin’—just poundin’ out the bad guys, and it was getting hot and sweaty in here. So I apologize.

Rachel: Griffin has a very sparse office that has 700 pieces of electronic equipment in it.

Griffin: True. So then, one might actually argue it’s not sparse at all. In fact, it is filled with electronic equipment. Yeah, that’s true. I imagine if I get the PC running, I imagine if I get the 3D printer running, all at the same time, it’s just gonna—it could be a sweat lodge. I could go on a real journey in here. But I don’t think it’s that bad, and we’re only in here for a little bit, so let’s…

Rachel: And I will also say that a lot of these electronics help you make a better podcast, which… is a timely thing.

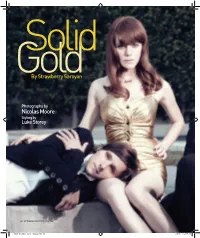

Nicolas Moore Styling by Luke Storey

Solid Gold

By Strawberry Saroyan. Photographs by Nicolas Moore. Styling by Luke Storey.

SEPTEMBER 2007 WWW.SPIN.COM

Rilo Kiley wanted their bold, brassy new album to sound like a party. But with the prospect of mainstream success reopening old wounds and triggering self-doubt, can they find it in their hearts to have some fun?

From left, on Blake Sennett: Cosa Nostra jacket, Band of Outsiders shirt. On Jenny Lewis: Scout dress. On Jason Boesel: Kosmetique Label jacket and shirt, J.Lindeberg tie, Gucci pants. On Pierre de Reeder: D&G jacket, Boss Black shirt, Juicy Couture Men’s pants. Photographed for Spin in Hollywood, California, June 29, 2007. Hair by Jason Murillo for FrankReps. Lina Hanson using Stila Cosmetics at MagnetLA.

Jenny Lewis’ apartment is a disaster. One might expect the 31-year-old lead singer of Rilo Kiley to have scaled up by now. Nine years into their career, they are the model of the indie band made good—critical respect, decent sales, soundtrack exposure—while Lewis’ solo debut, Rabbit Fur Coat, was a modest hit last year, with 112,000 copies sold. She has a love of pretty things—I see her, over the course of two summer weeks, wearing, among other things, a sparkly little jacket, a gold-trimmed minidress, and Chanel-esque heels. Her consumer lust is so strong that she deadpans at one point about her breakup with the band’s lead guitarist, Blake Sennett, “It was because of my shopping.” Lewis’ apartment is located on a slanted hill in the boho Silver Lake neighborhood of Los Angeles.

Sam Cooke the Man Who Invented Soul Mp3, Flac, Wma

Sam Cooke The Man Who Invented Soul mp3, flac, wma

- Genre: Funk / Soul
- Album: The Man Who Invented Soul
- Country: Europe
- Released: 2011
- Style: Soul
- MP3 version RAR size: 1693 mb
- FLAC version RAR size: 1731 mb
- WMA version RAR size: 1929 mb
- Rating: 4.5
- Votes: 167
- Other Formats: AIFF MP4 WMA MMF DMF ADX XM

Tracklist

Disc 1: 1-1 You Send Me; 1-2 Summertime (Pt. 1); 1-3 For Sentimental Reasons; 1-4 Desire Me; 1-5 Lonely Island; 1-6 You Were Made For Me; 1-7 Win Your Love For Me; 1-8 Love You Most Of All; 1-9 Everybody Loves To Cha Cha Cha; 1-10 No One Can Take Your Place; 1-11 Only Sixteen; 1-12 With You; 1-13 Crazy She Calls Me; 1-14 I Got A Right To Sing The Blues; 1-15 Comes Love; 1-16 Ain't Nobody's Business; 1-17 Little Things You Do; 1-18 There, I've Said It Again; 1-19 When I Fall In Love; 1-20 Let's Go Steady Again; 1-21 (What A) Wonderful World

Disc 2: 2-1 Chain Gang; 2-2 I Belong To Your Heart; 2-3 Teenage Sonata; 2-4 Love Me; 2-5 Sad Mood (Unreleased Version); 2-6 If I Had You (I'd Be Happy); 2-7 You Belong To Me; 2-8 Sad Mood; 2-9 Tenderness; 2-10 That's It-I Quit-I'm Movin' On; 2-11 Hold On; 2-12 Cupid; 2-13 Tenderness (Unreleased Version); 2-14 Don't Get Around Much Any More; 2-15 Nobody Knows You When You're Down And Out; 2-16 Baby Won't You Please Come Home; 2-17 Trouble In Mind; 2-18 Out In The Cold Again; 2-19 Exactly Like You; 2-20 Since I Met You Baby; 2-21 I'm Just A Lucky So And So; 2-22 But Not For Me; 2-23 You're Always On My Mind; 2-24 (Don't Fight It) Feel It; 2-25 It's All Right; 2-26 Twistin' The Night Away; 2-27 One More Time; 2-28 Don't Cry On My Shoulder

The Role of Emotion and Context in Musical Preference

The Role of Emotion and Context in Musical Preference

Yading Song

PhD thesis. Thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy of the University of London. School of Electronic Engineering and Computer Science, Queen Mary, University of London, United Kingdom. January 2016.

Abstract

The powerful emotional effects of music increasingly attract the attention of music information retrieval researchers and music psychologists. In the past decades, a gap exists between these two disciplines, and researchers have focused on different aspects of emotion in music. Music information retrieval researchers are concerned with computational tasks such as the classification of music by its emotional content, whereas music psychologists are more interested in the understanding of emotion in music. Many of the existing studies have investigated the above issues in the context of classical music, but the results may not be applicable to other genres. This thesis focusses on musical emotion in Western popular music combining knowledge from both disciplines. I compile a Western popular music emotion dataset based on online social tags, and present a music emotion classification system using audio features corresponding to four different musical dimensions. Listeners' perceived and induced emotional responses to the emotion dataset are compared, and I evaluate the reliability of emotion tags with listeners' ratings of emotion using two dominant models of emotion, namely the categorical and the dimensional emotion models. In the next experiment, I build a dataset of musical excerpts identified in a questionnaire, and I train my music emotion classification system with these audio recordings.

Brilliant Years of Singing Pure Gospel with the Soul Stirrers, The

Rock and roll has always been a hybrid music, and before 1950, the most important hybrids were interracial. Black music was influenced by white sounds (the Ink Spots, Ravens and Orioles with their sentimental ballads) and white music was intertwined with black (Bob Wills, Hank Williams and two generations of the honky-tonk blues). Rhythm and gospel, the first great hybridization of the Fifties, happened within black [...] Though this hybrid produced a clutch of hits in the R&B market in the early Fifties, only the most adventurous white fans felt its impact at the time; the rest had to wait for the coming of soul music in the Sixties to feel the rush of rock and roll sung gospel-style. “Rhythm and gospel” was never so called in its day: the word “gospel” was not something one talked about in the context of such salacious songs as “Honey Love” or “Work with Me Annie.” Yet these records, and others by groups like the Dominoes and the Drifters, [...] Brown, Otis Redding and Wilson Pickett. The combination of gospel singing with the risqué lyrics [...] brilliant years of singing pure gospel with the Soul Stirrers, the resulting schism among Cooke’s fans was deeper and longer lasting than the divisions among Bob Dylan’s partisans after he went electric in 1965.

Clyde McPhatter, one of the sweetest voices of the Fifties. Originally a member of Billy Ward’s Dominoes, he became the lead singer of the original Drifters, and then went on to pop success as a soloist (“A Lover’s Question,” “Lover Please”).

Xiami Music Genre Documentation

xiami music genre

douban

14 February 2021

Contents:

- 1 Table of Contents
- 2
- 3 Pop
- 3.1 1. Mandarin Pop
- 3.2 2. Cantopop
- 3.3 3. Western Pop
- 3.4 4. Electropop
- 3.5 5. J-Pop
- 3.6 6. K-Pop
- 3.7 7. Dream Pop
- 3.8 8. Dance-Pop
- 3.9 9. Adult Contemporary
- 3.10 10. Cyber Hit
- 3.11 11. Indie Pop
- 3.12 12. Girl Group
- 3.13 13. Boy Band
- 3.14 14. Teen Pop
- 3.15 15. Psychedelic Pop
- 3.16 16. Ambient Pop
- 3.17 17. Sunshine Pop
- 3.18 18. Korean Ballad
- 3.19 19. Taiwan Folk Scene
- 3.20 20. A cappella
- 3.21 21. Noise Pop
- 3.22 22. City Pop

4/2/16 Slagg Brothers Rhythm & Blues, Soul

4/2/16 Slagg Brothers Rhythm & Blues, Soul & Grooves Show

| Title | Length | Artist | Notes |
| --- | --- | --- | --- |
| Animal | 4:05 | Def Leppard | 1987, from the album Hysteria, became the band's first Top 10 hit |
| Little Red Rooster | 2:54 | Sam Cooke | Howlin' Wolf recorded "The Red Rooster" in 1961, written by Willie Dixon. This version 1963, with young Billy Preston on organ |
| Backslop | 2:33 | Baby Earl & The Trinidads | |
| Salty Dog | 2:05 | Marvin Phillips | Born 1931 in Guthrie, Oklahoma, also known as Long Tall Marvin, an American rhythm and blues singer and saxophonist. This from 55. |
| Hound Dog | 2:53 | Big Mama Thornton | Written by Jerry Leiber and Mike Stoller. This the original version, 1952, her only hit. Presley's 1956 version sold an estimated 10 million copies globally, was his best-selling song. |
| Welcome to the Monkey House | 5:38 | Animal Magnet | 1981. Basically Paul Caplin, and was the support act on Duran Duran's first tour. He is Chairman of Caplin Systems, a software company that provides web technology for financial service - one of the 100 fastest-growing technology firms in Britain. |
| Werewolves Of London | 3:29 | Warren Zevon | Included on Zevon's 1978 album Excitable Boy, it featured accompaniment by drummer Mick Fleetwood and bassist John McVie of Fleetwood Mac. |
| Blackbird | 2:19 | The Beatles | From 1968 double album The Beatles (also known as "the White Album"). The song was written and performed as a solo effort by Paul McCartney, though credited to Lennon–McCartney. |
| Crocodiles | 2:40 | Echo & The Bunnymen | 1980, from debut album of the same name. |

1985, Hounds of Love is her fifth and best selling album.

Università degli Studi di Milano, Facoltà di Scienze Matematiche, Fisiche e Naturali, Dipartimento di Tecnologie dell'Informazione

UNIVERSITÀ DEGLI STUDI DI MILANO, FACOLTÀ DI SCIENZE MATEMATICHE, FISICHE E NATURALI, DIPARTIMENTO DI TECNOLOGIE DELL'INFORMAZIONE

Doctoral School in Computer Science. Disciplinary sector INF/01. Doctoral research thesis, Cycle XXIII.

SERENDIPITOUS MENTORSHIP IN MUSIC RECOMMENDER SYSTEMS

Eugenio Tacchini

Advisor: Prof. Ernesto Damiani. Director of the Doctoral School: Prof. Ernesto Damiani. Academic Year 2010/2011.

Acknowledgements

I would like to thank all the people who helped me during my Ph.D. First of all I would like to thank Prof. Ernesto Damiani, my advisor, not only for his support and the knowledge he imparted to me but also for his capacity for understanding my needs and for having let me follow my passions; thanks also to all the other people of the SESAR Lab, in particular to Paolo Ceravolo and Gabriele Gianini. Thanks to Prof. Domenico Ferrari, who gave me the possibility to work in an inspiring context after my graduation, helping me to understand the direction I had to take. Thanks to Prof. Ken Goldberg for having hosted me in his laboratory, the Berkeley Laboratory for Automation Science and Engineering at the University of California, Berkeley, a place where I learnt a lot; thanks also to all the people of the research group and in particular to Dmitry Berenson and Timmy Siauw for the very fruitful discussions about clustering, path searching and other aspects of my work. Thanks to all the people who agreed to review my work: Prof. Richard Chbeir, Prof. Ken Goldberg, Prof. Przemysław Kazienko, Prof. Ronald Maier and Prof. Robert Tolksdorf. Thanks to 7digital, our media partner for the experimental test, and in particular to Filip Denker.

Sam Cooke This Is Sam Cooke Mp3, Flac, Wma

Sam Cooke This Is Sam Cooke mp3, flac, wma

- Genre: Funk / Soul
- Album: This Is Sam Cooke
- Country: UK
- Released: 1971
- Style: Soul
- MP3 version RAR size: 1988 mb
- FLAC version RAR size: 1606 mb
- WMA version RAR size: 1189 mb
- Rating: 4.1
- Votes: 986
- Other Formats: MMF AU WAV MIDI VOX AA DMF

Tracklist

Side A: A1 Frankie And Johnny; A2 You Send Me; A3 Sad Mood; A4 Summertime; A5 Chain Gang

Side B: B1 Feel It; B2 (I Love You) For Sentimental Reasons; B3 Another Saturday Night; B4 Wonderful World; B5 Having A Party

Side C: C1 Baby, Baby, Baby; C2 Only Sixteen; C3 Love Will Find A Way; C4 Bring It On Home To Me; C5 Twistin' The Night Away

Side D: D1 Little Red Rooster; D2 Cupid; D3 Sugar Dumpling; D4 Send Me Some Lovin'; D5 Everybody Loves To Cha Cha Cha

Credits: Remastered By – Don Miller

Notes: Track A4 from "Porgy And Bess"

Other versions

| Category | Artist | Title (Format) | Label | Category | Country | Year |
| --- | --- | --- | --- | --- | --- | --- |
| VPS-6027(e) | Sam Cooke | This Is Sam Cooke (2xLP, Comp) | RCA | VPS-6027(e) | US | 1971 |
| VPS-6027(e) | Sam Cooke | This Is Sam Cooke (2xLP, Comp, RE) | RCA | VPS-6027(e) | US | Unknown |
| VPS-6027(e) | Sam Cooke | This Is Sam Cooke (2xLP, Comp) | RCA | VPS-6027(e) | Canada | 1971 |
| VPS-6027(e) | Sam Cooke | This Is Sam Cooke (2xLP, Comp, "St) | RCA | VPS-6027(e) | Canada | 1971 |
| VPS-6027(e) | Sam Cooke | This Is Sam Cooke (2xLP, Comp) | RCA | VPS-6027(e) | US | 1971 |

Comments about This Is Sam Cooke - Sam Cooke

Alsardin: I've picked up a copy of this.

Sam Cooke the Best of Sam Cooke Mp3, Flac, Wma

Sam Cooke The Best Of Sam Cooke mp3, flac, wma

- Genre: Funk / Soul
- Album: The Best Of Sam Cooke
- Country: Jamaica
- Released: 1968
- Style: Soul
- MP3 version RAR size: 1849 mb
- FLAC version RAR size: 1345 mb
- WMA version RAR size: 1932 mb
- Rating: 4.1
- Votes: 591
- Other Formats: VOC ASF FLAC APE MP2 DTS WAV

Tracklist

| Position | Title | Length | Written-By |
| --- | --- | --- | --- |
| A1 | You Send Me | 2:41 | L.C. Cook |
| A2 | Only Sixteen | 2:00 | B. Campbell* |
| A3 | Everybody Loves To Cha Cha Cha | 2:35 | Campbell* |
| A4 | For Sentimental Reasons | 2:35 | Deek Watson, William Best |
| A5 | Wonderful World | 2:02 | B. Campbell* |
| A6 | Summertime | 2:17 | Heyward*, Gershwin* |
| B1 | Chain Gang | 2:32 | S. Cooke* |
| B2 | Cupid | 2:30 | C. Cook*, S. Cooke* |
| B3 | Twistin' The Night Away | 2:32 | S. Cooke* |
| B4 | Sad Mood | 2:28 | S. Cooke* |
| B5 | Having A Party | 2:23 | S. Cooke* |
| B6 | Bring It On Home To Me | 2:37 | S. Cooke* |

Companies, etc.

- Pressed By – Federal Record Mfg. Co. Ltd.
- Manufactured By – Federal Record Mfg. Co. Ltd.
- Copyright (c) – Radio Corporation Of America

Credits

- Producer, Liner Notes – Hugo & Luigi

Notes

Reissue. Jamaican press and labels. US imported jacket. Unknown if this is a stereo or mono press (not clearly marked). Spine and Front cover: LSP-2625. Rear Jacket and Labels: LPM/LPS-2625.

Barcode and Other Identifiers

- Matrix / Runout (Side A): N5PY-3092-A FRM Best Of Sam Cooke
- Matrix / Runout (Side B): N5PY-3093-B FRM Best Of Sam Cooke
- Rights Society: ASCAP
- Rights Society: BMI

Other versions

Category