Statistics in Historical Musicology

Recommended publications

2018 Celebrity Birthday Book!

Contents: 1 2018; 1.1 January. January 1 - Verne Troyer gets the start of a project (2018-01-01 00:02); January 2 - Jack Hanna gets animal considerations (2018-01-02 09:00); January 3 - Dan Harmon gets pestered (2018-01-03 09:00); January 4 - Dave Foley gets an outdoor slumber (2018-01-04 09:00); January 5 - deadmau5 gets a restructured week (2018-01-05 09:00); January 6 - Julie Chen gets variations on a dining invitation (2018-01-06 09:00); January 7 - Katie Couric gets a baristo’s indolence (2018-01-07 09:00); January 8 - Jenny Lewis gets a young Peter Pan (2018-01-08 09:00); January 9 - Joan Baez gets Mickey Brennan’d (2018-01-09 09:00); January 10 - Jemaine Clement gets incremental name dropping (2018-01-10 09:00); January 11 - Mary J. Blige gets transferable Bop-It skills (2018-01-11 09:00); January 12 - Raekwon gets world leader factoids (2018-01-12 09:00); January 13 - Julia Louis-Dreyfus gets a painful hallumination (2018-01-13 09:00); January 14 - Jason Bateman gets a squirrel’s revenge (2018-01-14 09:00); January 15 - Charo gets an avian alarm (2018-01-15 09:00); January 16 - Lin-Manuel Miranda gets an alternate path to a coveted award (2018-01-16 09:00); January 17 - Joshua Malina gets a Baader-Meinhof’d rice pudding (2018-01-17 09:00); January 18 - Jason Segel gets a body donation (2018-01-18 09:00) -

MIC Buzz Magazine Article 10402 Reference Table1 Cuba Watch 040517 Cuban Music Is Caribbean Music Not Latin Music 15.Numbers

Reference Information Table 1 (Updated 5th June 2017) For: Article 10402 | Cuba Watch NB: All content and featured images copyrights 04/05/2017 reserved to MIC Buzz Limited content and image providers and also content and image owners. Title: Cuban Music Is Caribbean Music, Not Latin Music. Item Subject Date and Timeline Name and Topic Nationality Document / information Website references / Origins 1 Danzon Mambo Creator 1938 -- One of his Orestes Lopez Cuban Born n Havana on December 29, 1911 Artist Biography by Max Salazar compositions, was It is known the world over in that it was Orestes Lopez, Arcano's celloist and (Celloist and pianist) broadcast by Arcaño pianist who invented the Danzon Mambo in 1938. Orestes's brother, bassist http://www.allmusic.com/artist/antonio-arcaño- in 1938, was a Israel "Cachao" Lopez, wrote the arrangements which enables Arcano Y Sus mn0001534741/biography Maravillas to enjoy world-wide recognition. Arcano and Cachao are alive. rhythmic danzón Orestes died December 1991 in Havana. And also: entitled ‘Mambo’ In 29 August 1908, Havana, Cuba. As a child López studied several instruments, including piano and cello, and he was briefly with a local symphony orchestra. His Artist Biography by allmusic.com brother, Israel ‘Cachao’ López, also became a musician and influential composer. From the late 20s onwards, López played with charanga bands such as that led by http://www.allmusic.com/artist/orestes-lopez- Miguel Vásquez and he also led and co-led bands. In 1937 he joined Antonio mn0000485432 Arcaño’s band, Sus Maravillas. Playing piano, cello and bass, López also wrote many arrangements in addition to composing some original music. -

Wonderful! 143: Rare, Exclusive Gak Published July 29th, 2020 Listen on TheMcElroy.family

Wonderful! 143: Rare, Exclusive Gak Published July 29th, 2020 Listen on TheMcElroy.family [theme music plays] Rachel: I'm gonna get so sweaty in here. Griffin: Are you? Rachel: It is… hotototot. Griffin: Okay. Is this the show? Are we in it? Rachel: Hi, this is Rachel McElroy! Griffin: Hi, this is Griffin McElroy. Rachel: And this is Wonderful! Griffin: It’s gettin’ sweaaatyyy! Rachel: [laughs] Griffin: It’s not—it doesn’t feel that bad to me. Rachel: See, you're used to it. Griffin: Y'know what it was? Mm, I had my big fat gaming rig pumping out pixels and frames. Comin’ at me hot and heavy. Master Chief was there. Just so fuckin’—just poundin’ out the bad guys, and it was getting hot and sweaty in here. So I apologize. Rachel: Griffin has a very sparse office that has 700 pieces of electronic equipment in it. Griffin: True. So then, one might actually argue it’s not sparse at all. In fact, it is filled with electronic equipment. Yeah, that’s true. I imagine if I get the PC running, I imagine if I get the 3D printer running, all at the same time, it’s just gonna—it could be a sweat lodge. I could go on a real journey in here. But I don’t think it’s that bad, and we’re only in here for a little bit, so let’s… Rachel: And I will also say that a lot of these electronics help you make a better podcast, which… is a timely thing. -

ARSM (Associate of the Royal Schools of Music)

Qualification Specification ARSM (Associate of the Royal Schools of Music) Level 4 Diploma in Music Performance September 2020 Qualification Specification: ARSM Contents 1. Introduction: About ABRSM; About this qualification specification; About this qualification; Regulation (UK); Regulation (Europe); Regulation (Rest of world). 2. ARSM diploma: Syllabuses; Exam Regulations; Malpractice and maladministration; Entry requirements; Exam booking; Access (for candidates with specific needs); Exam content; How the exam works. 3. ARSM syllabus: Introducing the qualification; Exam requirements and information (Subjects available, Selecting repertoire, Preparing for the exam). 4. Assessment and marking: Assessment objectives; Mark allocation; Result categories; Synoptic assessment; Awarding; Marking criteria. 5. After the exam: Results; Exam feedback. 6. Other assessments: DipABRSM, LRSM, FRSM; Programme form; Index. © 2016 by The Associated Board of the Royal Schools of Music. Updated in 2020. This September 2020 edition contains updated information to cover the introduction of a remotely-assessed option for taking the ARSM exam. There are no changes to the existing exam content and requirements. Throughout this document, the term ‘instrument’ is used to include ‘voice’ and ‘piece’ is used to include ‘song’. 1. Introduction About ABRSM At ABRSM we aim to support learners and teachers in every way we can. One way we do this is through the provision of high quality and respected music qualifications. These exams provide clear goals, reliable and consistent marking, and guidance for future learning. -

A Menuhin Centenary Celebration

PLEASE NOTE The taking of photographs and the use of recording equipment of any kind during performances is strictly prohibited. A Menuhin Centenary Celebration FEATURING The Lord Menuhin Centenary Orchestra Philip Burrin - Conductor FEBRUARY Friday 19, 8:00 pm Earl Cameron Theatre, City Hall CORPORATE SPONSOR Programme Introduction and Allegro for Strings Op.47 Edward Elgar (1857 - 1934) Solo Quartet Jean Fletcher Violin 1 Suzanne Dunkerley Violin 2 Ross Cohen Viola Liz Tremblay Cello Absolute Zero Viola Quartet Sinfonia Tomaso Albinoni (1671 – 1751) “Story of Two Minstrels” Sancho Engaño (1922 – 1995) Menuetto Giacomo Puccini (1858 – 1924) Ross Cohen, Kate Eriksson, Jean Fletcher Karen Hayes, Jonathan Kightley, Kate Ross Two Elegiac Melodies Op.34 Edvard Grieg (1843 – 1907) The Wounded Heart The Last Spring St Paul’s Suite Op. 29 no.2 Gustav Holst (1874 – 1934) Jig Ostinato Intermezzo Finale (The Dargason) Solo Quartet Clare Applewhite Violin 1 Diane Wakefield Violin 2 Jonathan Kightley Viola Alison Johnstone Cello Intermission Concerto for Four Violins in B minor Op.3 No.10 Antonio Vivaldi (1678 – 1741) “L’estro armonico” Allegro Largo – Larghetto – Adagio Allegro Solo Violins Diane Wakefield Alison Black Cal Fell Sarah Bridgland Cello obbligato Alison Johnstone Concerto Grosso No. 1 Ernest Bloch (1880 – 1959) for Strings and Piano Obbligato Prelude Dirge Pastorale and Rustic Dances Fugue Piano Obbligato Andrea Hodson Yehudi Menuhin, Lord Menuhin of Stoke d’Abernon, (April 22, 1916 - March 12, 1999) One of the leading violin virtuosos of the 20th century, Menuhin grew up in San Francisco, where he studied violin from age four. He studied in Paris under the violinist and composer Georges Enesco, who deeply influenced his playing style and who remained a lifelong friend. -

Michael Barone Mcmaster University - Psychology

M.Sc Thesis - Michael Barone McMaster University - Psychology COGNITIVE APPROACHES TO MUSIC PREFERENCE USING LARGE DATASETS Bridging the gap: cognitive approaches to musical preference using large datasets Michael Barone July 18, 2017 McMaster University MASTER OF SCIENCE (2017) Hamilton, Ontario (Psychology, Neuroscience, & Behaviour) TITLE: Bridging the gap: cognitive approaches to music preference using large datasets AUTHOR: Michael David Barone, B.A. (McMaster University) SUPERVISOR: Professor Matthew H. Woolhouse NUMBER OF PAGES: vii, 49 This thesis examines whether research from cognitive psychology can be used to inform and predict behaviours germane to computational music analysis including genre choice, music feature preference, and consumption patterns from data provided by digital-music platforms. Specific topics of focus include: information integrity and consistency of large datasets, whether signal processing algorithms can be used to assess music preference across multiple genres, and the degree to which consumption behaviours can be derived and validated using more traditional experimental paradigms. Results suggest that psychologically motivated research can provide useful insights and metrics in the computationally focused area of global music consumption behaviour and digital music analysis. Limitations that remain within this interdisciplinary approach are addressed by providing refined analysis techniques for future work. Abstract Using a large dataset of digital music downloads, this thesis examines the extent to which cognitive-psychology research can generate and predict user behaviours relevant to the distinct fields of computer science and music perception. -

ABRSM Exam Regulations International 2020

ABRSM Exam Regulations International 2020. Visit www.abrsm.org for online exam services and up-to-date information. Contents: About ABRSM and these Exam Regulations; 1 Exam subjects; 2 Regulated qualifications; 3 Prerequisites; 4 Introduction and overlap of syllabuses; 5 Applicant’s role and responsibilities; 6 Exam entry; 7 Payment; 8 Withdrawals, non-attendance and fee refunds; 9 Access (for candidates with specific needs): Entering for the exam, Supporting evidence, Personal data, Alternative to graded exams; 10 Practical exam dates -

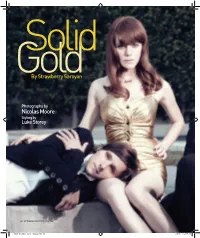

Nicolas Moore Styling by Luke Storey

Solid Gold By Strawberry Saroyan Photographs by Nicolas Moore Styling by Luke Storey SEPTEMBER 2007 WWW.SPIN.COM Rilo Kiley wanted their bold, brassy new album to sound like a party. But with the prospect of mainstream success reopening old wounds and triggering self-doubt, can they find it in their hearts to have some fun? From left, on Blake Sennett: Cosa Nostra jacket, Band of Outsiders shirt. On Jenny Lewis: Scout dress. On Jason Boesel: Kosmetique Label jacket and shirt, J.Lindeberg tie, Gucci pants. On Pierre de Reeder: D&G jacket, Boss Black shirt, Juicy Couture Men’s pants. Photographed for Spin in Hollywood, California, June 29, 2007. Hair by Jason Murillo for FrankReps. Lina Hanson using Stila Cosmetics at MagnetLA. Jenny Lewis’ apartment is a disaster. One might expect the 31-year-old lead singer of Rilo Kiley to have scaled up by now. Nine years into their career, they are the model of the indie band made good—critical respect, decent sales, soundtrack exposure—while Lewis’ solo debut, Rabbit Fur Coat, was a modest hit last year, with 112,000 copies sold. She has a love of pretty things—I see her, over the course of two summer weeks, wearing, among other things, a sparkly little jacket, a gold-trimmed minidress, and Chanel-esque heels. Her consumer lust is so strong that she deadpans at one point about her breakup with the band’s lead guitarist, Blake Sennett, “It was because of my shopping.” Lewis’ apartment is located on a slanted hill in the boho Silver Lake neighborhood of Los Angeles. -

Wells Angels Giving Gifted Children a Chance the Angels’ Newsletter ISSUE 1 - SPRING 2019

Wells Angels: Giving gifted children a chance. The angels’ newsletter, ISSUE 1 - SPRING 2019. Welcome to the first Wells Cathedral Chorister Trust newsletter of 2019. Many of you will be used to hearing our wonderful choir singing in the cathedral. What you may not know is how much work and dedication goes into producing this sublime music. We hope our new termly newsletter will give you a glimpse ‘behind the scenes’ of what the choristers get up to; what they achieve in school and on the sports fields as well as training daily to sing for us. The Chorister Trust is extremely grateful to our Wells Angels. Every contribution really does make a difference, often the difference that enables some choristers to take up their places in the Choir and develop into top musicians. Pancake Fun! Some of our choristers took a break from their lessons and duties to take part in a Shrove Tuesday Pancake Race, as part of a photoshoot for local media. Thankfully, the choristers involved were all expert flippers and there wasn’t too much pancake to clear up from Vicars’ Close or the Cloisters! (Photo: Jason Bryant) The first of our Wells Angels ‘open rehearsals’, to which all of our Angels are warmly invited, took place on Saturday 16 March. These are a termly opportunity to eavesdrop on one of the choristers’ daily events and see them at work. The dates for the next two open rehearsals are shown over the page. We also have another important date for your diaries. Our choristers are performing an informal concert, to include the toe-tapping fun that is Captain Noah & His Floating Zoo in the Cathedral on Saturday 4th May at 1pm. -

Viral Spiral Also by David Bollier

VIRAL SPIRAL ALSO BY DAVID BOLLIER Brand Name Bullies Silent Theft Aiming Higher Sophisticated Sabotage (with co-authors Thomas O. McGarity and Sidney Shapiro) The Great Hartford Circus Fire (with co-author Henry S. Cohn) Freedom from Harm (with co-author Joan Claybrook) VIRAL SPIRAL How the Commoners Built a Digital Republic of Their Own David Bollier To Norman Lear, dear friend and intrepid explorer of the frontiers of democratic practice © 2008 by David Bollier All rights reserved. No part of this book may be reproduced, in any form, without written permission from the publisher. The author has made an online version of the book available under a Creative Commons Attribution-NonCommercial license. It can be accessed at http://www.viralspiral.cc and http://www.onthecommons.org. Requests for permission to reproduce selections from this book should be mailed to: Permissions Department, The New Press, 38 Greene Street, New York, NY 10013. Published in the United States by The New Press, New York, 2008 Distributed by W. W. Norton & Company, Inc., New York ISBN 978-1-59558-396-3 (hc.) CIP data available The New Press was established in 1990 as a not-for-profit alternative to the large, commercial publishing houses currently dominating the book publishing industry. The New Press operates in the public interest rather than for private gain, and is committed to publishing, in innovative ways, works of educational, cultural, and community value that are often deemed insufficiently profitable. www.thenewpress.com A Caravan book. For more information, visit www.caravanbooks.org. Composition by dix! This book was set in Bembo Printed in the United States of America CONTENTS Acknowledgments; Introduction; Part I: Harbingers of the Sharing Economy; 1. -

Christian Responsibility in the US Foster System

Southeastern University FireScholars Selected Honors Theses Spring 4-28-2017 From the Father’s Heart to our Hands: Christian Responsibility in the U.S. Foster System Amelia Tam Southeastern University - Lakeland Follow this and additional works at: http://firescholars.seu.edu/honors Part of the Christianity Commons, Family, Life Course, and Society Commons, and the Social Work Commons Recommended Citation Tam, Amelia, "From the Father’s Heart to our Hands: Christian Responsibility in the U.S. Foster System" (2017). Selected Honors Theses. 73. http://firescholars.seu.edu/honors/73 This Thesis is brought to you for free and open access by FireScholars. It has been accepted for inclusion in Selected Honors Theses by an authorized administrator of FireScholars. For more information, please contact [email protected]. FROM THE FATHER’S HEART TO OUR HANDS: CHRISTIAN RESPONSIBILITY IN THE U.S. FOSTER SYSTEM by Amelia Kathryn Tam Submitted to the Honors Program Committee in partial fulfillment of the requirements for University Honors Scholars Southeastern University 2017 Copyright © 2017 by Amelia Tam All rights reserved i ABSTRACT Nearly half a million children are currently served by the child welfare system in the United States. This overwhelming strain on state departments and non-profit placement agencies is compounded by the fact that there are not enough available homes. There appears to be a shortage of capable and resilient foster and adoptive parents. Thousands of children who are ready to be adopted do not have anyone to take them in, and thousands more float in the system until new families agree to foster. This seeming shortage of homes is absurd considering the wealth of compassion and capability within the American church. -

A 3Rd Strike No Light a Few Good Men Have I Never a Girl Called Jane

A 3rd Strike No Light A Few Good Men Have I Never A Girl Called Jane He'S Alive A Little Night Music Send In The Clowns A Perfect Circle Imagine A Teens Bouncing Off The Ceiling A Teens Can't Help Falling In Love With You A Teens Floor Filler A Teens Halfway Around The World A1 Caught In The Middle A1 Ready Or Not A1 Summertime Of Our Lives A1 Take On Me A3 Woke Up This Morning Aaliyah Are You That Somebody Aaliyah At Your Best (You Are Love) Aaliyah Come Over Aaliyah Hot Like Fire Aaliyah If Your Girl Only Knew Aaliyah Journey To The Past Aaliyah Miss You Aaliyah More Than A Woman Aaliyah One I Gave My Heart To Aaliyah Rock The Boat Aaliyah Try Again Aaliyah We Need A Resolution Aaliyah & Tank Come Over Abandoned Pools Remedy ABBA Angel Eyes ABBA As Good As New ABBA Chiquita ABBA Dancing Queen ABBA Day Before You Came ABBA Does Your Mother Know ABBA Fernando ABBA Gimmie Gimmie Gimmie ABBA Happy New Year ABBA Hasta Manana ABBA Head Over Heels ABBA Honey Honey ABBA I Do I Do I Do I Do I Do ABBA I Have A Dream ABBA Knowing Me, Knowing You ABBA Lay All Your Love On Me ABBA Mama Mia ABBA Money Money Money ABBA Name Of The Game ABBA One Of Us ABBA Ring Ring ABBA Rock Me ABBA So Long ABBA SOS ABBA Summer Night City ABBA Super Trouper ABBA Take A Chance On Me ABBA Thank You For The Music ABBA Voulezvous ABBA Waterloo ABBA Winner Takes All Abbott, Gregory Shake You Down Abbott, Russ Atmosphere ABC Be Near Me ABC Look Of Love ABC Poison Arrow ABC When Smokey Sings Abdul, Paula Blowing Kisses In The Wind Abdul, Paula Cold Hearted Abdul, Paula Knocked