A Comparison Between Different Chess Rating Systems for Ranking Evolutionary Algorithms

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chess for Dummies

Chess For Dummies®, 2nd Edition, by James Eade

Published by Wiley Publishing, Inc., 111 River St., Hoboken, NJ 07030-5774, www.wiley.com. Copyright © 2005 by Wiley Publishing, Inc., Indianapolis, Indiana. Published simultaneously in Canada.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, 978-750-8400, fax 978-646-8600. Requests to the Publisher for permission should be addressed to the Legal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, 317-572-3447, fax 317-572-4355, or online at http://www.wiley.com/go/permissions.

Trademarks: Wiley, the Wiley Publishing logo, For Dummies, the Dummies Man logo, A Reference for the Rest of Us!, The Dummies Way, Dummies Daily, The Fun and Easy Way, Dummies.com, and related trade dress are trademarks or registered trademarks of John Wiley & Sons, Inc., and/or its affiliates in the United States and other countries, and may not be used without written permission.

Here Should That It Was Not a Cheap Check Or Spite Check Be Better Collaboration Amongst the Federations to Gain Seconds

Chess

Founding Editor: B.H. Wood, OBE. M.Sc †
Executive Editor: Malcolm Pein
Editors: Richard Palliser, Matt Read
Associate Editor: John Saunders
Subscriptions Manager: Paul Harrington
Twitter: @CHESS_Magazine
Twitter: @TelegraphChess - Malcolm Pein
Website: www.chess.co.uk

Subscription Rates:
United Kingdom: 1 year (12 issues) £49.95; 2 year (24 issues) £89.95; 3 year (36 issues) £125
Europe: 1 year (12 issues) £60; 2 year (24 issues) £112.50; 3 year (36 issues) £165
USA & Canada

Contents
Editorial: Malcolm Pein on the latest developments in the game
60 Seconds with... Chris Ross: We catch up with Britain’s leading visually-impaired player
The King of Wijk: Yochanan Afek watched Magnus Carlsen win Wijk for a sixth time
A Good Start: Gawain Jones was pleased to begin well at Wijk aan Zee
How Good is Your Chess?: Daniel King pays tribute to the legendary Viswanathan Anand
All Tied Up on the Rock: John Saunders had to work hard, but once again enjoyed Gibraltar
Rocking the Rock

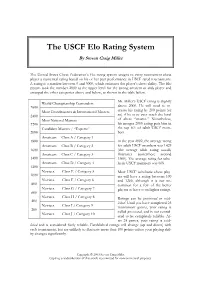

The USCF Elo Rating System by Steven Craig Miller

The USCF Elo Rating System
By Steven Craig Miller

The United States Chess Federation’s Elo rating system assigns to every tournament chess player a numerical rating based on his or her past performance in USCF rated tournaments. A rating is a number between 0 and 3000, which estimates the player’s chess ability. The Elo system took the number 2000 as the upper level for the strong amateur or club player and arranged the other categories above and below, as shown in the table below.

Category: rating at the lower boundary of the category
World Championship Contenders: 2600
Most Grandmasters & International Masters: 2400
Most National Masters: 2200
Candidate Masters / “Experts”: 2000
Amateurs Class A / Category 1: 1800
Amateurs Class B / Category 2: 1600
Amateurs Class C / Category 3: 1400
Amateurs Class D / Category 4: 1200
Novices Class E / Category 5: 1000
Novices Class F / Category 6: 800
Novices Class G / Category 7: 600
Novices Class H / Category 8: 400

Mr. Miller’s USCF rating is slightly above 2000. He will need to increase his rating by 200 points (or so) if he is to ever reach the level of chess “master.” Nonetheless, his meager 2000 rating puts him in the top 6% of adult USCF members.

In the year 2002, the average rating for adult USCF members was 1429 (the average adult rating usually fluctuates somewhere around 1500). The average rating for scholastic USCF members was 608. Most USCF scholastic chess players will have a rating between 100 and 1200, although it is not uncommon for a few of the better players to have even higher ratings.

Ratings can be provisional or established.

Elo World, a Framework for Benchmarking Weak Chess Engines

Elo World, a framework for benchmarking weak chess engines

DR. TOM MURPHY VII PH.D.

CCS Concepts: • Evaluation methodologies → Tournaments; • Chess → Being bad at it;

Additional Key Words and Phrases: pawn, horse, bishop, castle, queen, king

ACH Reference Format: Dr. Tom Murphy VII Ph.D.. 2019. Elo World, a framework for benchmarking weak chess engines. 1, 1 (March 2019), 13 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn

1 INTRODUCTION

Fiddly bits aside, it is a solved problem to maintain a numeric skill rating of players for some game (for example chess, but also sports, e-sports, probably also z-sports if that’s a thing). [...] with a rating of 2000). If the true outcome (of e.g. a tournament) doesn’t match the expected outcome, then both players’ scores are adjusted towards values that would have produced the expected result. Over time, scores thus become a more accurate reflection of players’ skill, while also allowing for players to change skill level. This system is carefully described elsewhere, so we can just leave it at that.

The players need not be human, and in fact this can facilitate running many games and thereby getting arbitrarily accurate ratings.

The problem this paper addresses is that basically all chess tournaments (whether with humans or computers or both) are between players who know how to play chess, are interested in winning their games, and have some reasonable level of skill. This makes it hard to give a rating to weak players: They just lose every single game and so tend towards a rating of −∞. Even if other comparatively weak players existed to participate in the tournament and occasionally lose to the player under study, it may still be dif-
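The adjustment described in the excerpt above can be sketched in Python. This is a minimal illustration and not code from the paper: the base-10 logistic expected-score curve, the 400-point scale, the K-factor of 32, and the names `expected_score`, `update`, and `lose_repeatedly` are all assumptions chosen for the example.

```python
def expected_score(r_a: float, r_b: float, scale: float = 400.0) -> float:
    """Expected score of A against B (assumed base-10 logistic curve)."""
    return 1.0 / (1.0 + 10.0 ** ((r_b - r_a) / scale))


def update(r_a: float, r_b: float, score_a: float, k: float = 32.0):
    """Move both ratings toward values that would have predicted score_a.

    score_a is 1.0 for a win by A, 0.5 for a draw, 0.0 for a loss.
    k (assumed to be 32) controls how far a single game moves the ratings.
    """
    shift = k * (score_a - expected_score(r_a, r_b))
    return r_a + shift, r_b - shift


def lose_repeatedly(start: float, opponent: float, games: int):
    """Rating history of a player who loses every game to a fixed-strength opponent."""
    history = [start]
    for _ in range(games):
        new_rating, _ = update(history[-1], opponent, 0.0)
        history.append(new_rating)
    return history


if __name__ == "__main__":
    # Equal ratings: the winner gains exactly what the loser gives up.
    print(update(1500.0, 1500.0, 1.0))
    # A player who never scores keeps dropping, a little less each game.
    print(lose_repeatedly(1000.0, 2000.0, 5))
```

In this sketch a player who never scores loses a little on every game and never reaches a floor, which is the behaviour the excerpt describes as tending towards −∞.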

Rulebook Changes Since the 6Th Edition Rulebook Changes

Rulebook changes since the 6th edition Rulebook Changes Compiled by Dave Kuhns and Tim Just (2016) Since the publication of the 6th edition of the US Chess rulebook, changes have been made in US Chess policy or in the wording of the rules. Below is a collection of those changes. Any additions or corrections can be sent to US Chess. US Chess Policy Changes Please note that policy changes are different than rules changes and often occur more than once per year. Check the US Chess web page for the most current changes. Scholastic Policy Change: When taking entries for all National Scholastic Chess tournaments with ‘under’ and/or unrated sections, US Chess will require players to disclose at the time they enter the tournament whether they have one or more ratings in other over the board rating system(s). US Chess shall seriously consider, in consultation with the Scholastic Council and the Ratings Committee, using this rating information to determine section and prize eligibility in accordance with US Chess rules 28D and 28E. This policy will take effect immediately and will be in effect for all future US Chess national scholastic tournaments that have ‘under’ and/or unrated sections (new, 2014). Rationale: There are areas of the country in which many scholastic tournaments are being held that are not submitted to US Chess to be rated. As a result, some players come to the national scholastic tournaments with ratings that do not reflect their current playing strength. In order to ensure fair competition, we need to be able to use all available information about these players’ true playing strength. -

YEARBOOK the Information in This Yearbook Is Substantially Correct and Current As of December 31, 2020

OUR HERITAGE
2020 US CHESS YEARBOOK

The information in this yearbook is substantially correct and current as of December 31, 2020. For further information check the US Chess website www.uschess.org. To notify US Chess of corrections or updates, please e-mail [email protected].

U.S. CHAMPIONS
1845-57 Charles Stanley • 1857-71 Paul Morphy • 1871-90 George H. Mackenzie • 1890-91 Jackson Showalter • 1891-94 Samuel Lipchutz • 1894 Jackson Showalter • 1894-95 Albert Hodges • 1895-97 Jackson Showalter • 1897-06 Harry Nelson Pillsbury • 1906-09 Jackson Showalter • 1909-36 Frank J. Marshall • 1936 Samuel Reshevsky • 1938 Samuel Reshevsky • 1940 Samuel Reshevsky • 1942 Samuel Reshevsky • 1944 Arnold Denker • 1946 Samuel Reshevsky • 1948 Herman Steiner • 1951 Larry Evans • 1952 Larry Evans • 1954 Arthur [...] 2002 Larry Christiansen • 2003 Alexander Shabalov • 2005 Hikaru Nakamura • 2006 Alexander Onischuk • 2007 Alexander Shabalov • 2008 Yury Shulman • 2009 Hikaru Nakamura • 2010 Gata Kamsky • 2011 Gata Kamsky • 2012 Hikaru Nakamura • 2013 Gata Kamsky • 2014 Gata Kamsky • 2015 Hikaru Nakamura • 2016 Fabiano Caruana • 2017 Wesley So • 2018 Samuel Shankland • 2019 Hikaru Nakamura • 2020 Wesley So (ONLINE: COVID-19 • OCTOBER 2020)

WESTERN OPEN BECAME THE U.S. OPEN
1939 Reuben Fine • 1940 Reuben Fine • 1941 Reuben Fine • 1942 Herman Steiner, Dan Yanofsky • 1943 I.A. Horowitz • 1944 Samuel Reshevsky • 1945 Anthony Santasiere • 1946 Herman Steiner • 1947 Isaac Kashdan • 1948 Weaver W. Adams • 1949 Albert Sandrin Jr. • 1950 Arthur Bisguier • 1951 Larry Evans • 1952 Larry Evans • 1953 Donald Byrne • 1954 Larry Evans, Arturo Pomar • 1955 Nicolas Rossolimo • 1956 Arthur Bisguier, James Sherwin • 1957 Robert Fischer, Arthur Bisguier • 1958 E.

Elo and Glicko Standardised Rating Systems by Nicolás Wiedersheim Sendagorta Introduction “Manchester United Is a Great Team” Argues My Friend

Elo and Glicko Standardised Rating Systems
by Nicolás Wiedersheim Sendagorta

Introduction

“Manchester United is a great team” argues my friend. “But it will never be better than Manchester City”. People rate everything, from films and fashion to parks and politicians. It’s usually an effortless task. A pool of judges quantifies something’s value, and an average is determined. However, what if its value is unknown? In this scenario, economists turn to auction theory or statistical rating systems. “Skill” is one of these cases. The Elo and Glicko rating systems tend to be the go-to when quantifying skill in Boolean games.

Elo Rating System

Figure 1: Arpad Elo
Figure 2: Top Elo Ratings for 2014 FIFA World Cup

As I started playing competitive chess, I came across the Elo rating system. It is a method of calculating a player’s relative skill in zero-sum games. Quantifying ability is helpful to determine how much better some players are, as well as for skill-based matchmaking. It was devised in 1960 by Arpad Elo, a professor of Physics and chess master. The International Chess Federation would soon adopt this rating system to divide the skill of its players. The algorithm’s success in categorising people on ability would start to gain traction in other win-lose games; Association football, NBA, and “esports” being just some of the many examples. Esports are highly competitive videogames. The Elo rating system consists of a formula that can determine your quantified skill based on the outcome of your games. The “skill” trend follows a Gaussian bell curve.

Ranking Systems Used in Gaming Assessments And/Or Competitive Games

Research Memorandum ETS RM–15-03
Ranking Systems Used in Gaming Assessments and/or Competitive Games
Ourania Rotou, Xiaoyu Qian, Matthias von Davier
July 2015

ETS Research Memorandum Series

EIGNOR EXECUTIVE EDITOR
James Carlson, Principal Psychometrician

ASSOCIATE EDITORS
Beata Beigman Klebanov, Research Scientist
Heather Buzick, Research Scientist
Brent Bridgeman, Distinguished Presidential Appointee
Keelan Evanini, Managing Research Scientist
Marna Golub-Smith, Principal Psychometrician
Shelby Haberman, Distinguished Presidential Appointee
Donald Powers, Managing Principal Research Scientist
Gautam Puhan, Principal Psychometrician
John Sabatini, Managing Principal Research Scientist
Matthias von Davier, Senior Research Director
Rebecca Zwick, Distinguished Presidential Appointee

PRODUCTION EDITORS
Kim Fryer, Manager, Editing Services
Ayleen Stellhorn, Editor

Since its 1947 founding, ETS has conducted and disseminated scientific research to support its products and services, and to advance the measurement and education fields. In keeping with these goals, ETS is committed to making its research freely available to the professional community and to the general public. Published accounts of ETS research, including papers in the ETS Research Memorandum series, undergo a formal peer-review process by ETS staff to ensure that they meet established scientific and professional standards. All such ETS-conducted peer reviews are in addition to any reviews that outside organizations may provide as part of their own publication processes. Peer review notwithstanding, the positions expressed in the ETS Research Memorandum series and other published accounts of ETS research are those of the authors and not necessarily those of the Officers and Trustees of Educational Testing Service.

The Daniel Eignor Editorship is named in honor of Dr. Daniel R. Eignor, who from 2001 until 2011 served the Research and Development division as Editor for the ETS Research Report series.

Extensions of the Elo Rating System for Margin of Victory

Extensions of the Elo Rating System for Margin of Victory
Stephanie Kovalchik
MathSport International 2019 - Athens, Greece

How Do We Make Match Forecasts?

It Starts with Player Ratings
Assume the $i$th player has some true ability $\theta_i$. Models of player abilities assume game outcomes are a function of the difference in abilities:
$$\mathrm{Prob}(W_{ij} = 1) = F(\theta_i - \theta_j)$$

Paired Comparison Models
Bradley-Terry models are a general class of paired comparison models of latent abilities with a logistic function for win probabilities:
$$F(\theta_i - \theta_j) = \frac{1}{1 + \alpha^{-(\theta_i - \theta_j)}}$$
With BT, player abilities are treated as fixed in time, which is unrealistic in most cases.

Bobby Fischer

Fischer's Meteoric Rise

Arpad Elo

In His Own Words

Ability is a Moving Target

Standard Elo
Can be broken down into two steps:
1. Estimate (E-Step)
2. Update (U-Step)

Standard Elo E-Step
For the $t$th match of player $i$ against player $j$, the chance that player $i$ wins is estimated as
$$\hat{W}_{ijt} = \frac{1}{1 + 10^{-(R_{it} - R_{jt})/\sigma}}$$

Elo Derivation
Elo supposed that the ratings of any two competitors were independent and normal with shared standard deviation $\delta$. Given this, he likened the chance of a win to the chance of observing a difference in ratings for ratings drawn from the same distribution,
$$R_{it} - R_{jt} \sim N(0, 2\delta^2)$$
which leads to
$$P(R_{it} - R_{jt} > 0) = \Phi\!\left(\frac{R_{it} - R_{jt}}{\sqrt{2}\,\delta}\right)$$
and
$$\approx \frac{1}{1 + 10^{-(R_{it} - R_{jt})/(2\delta)}}$$
Elo's formula was just a hack for the cumulative normal density function.
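To see how close the base-10 logistic curve is to the cumulative normal it stands in for, the two can be compared numerically. The following Python sketch is an illustration only: the value δ = 200 (so σ = 2δ = 400) is an assumed, conventional choice that the slides do not state, and the function names `elo_win_prob` and `normal_win_prob` are hypothetical.

```python
import math


def elo_win_prob(r_i: float, r_j: float, sigma: float = 400.0) -> float:
    """E-step estimate: 1 / (1 + 10^(-(R_i - R_j) / sigma)); sigma = 400 is assumed."""
    return 1.0 / (1.0 + 10.0 ** (-(r_i - r_j) / sigma))


def normal_win_prob(r_i: float, r_j: float, delta: float = 200.0) -> float:
    """Cumulative normal form: Phi((R_i - R_j) / (sqrt(2) * delta)); delta = 200 is assumed."""
    z = (r_i - r_j) / (math.sqrt(2.0) * delta)
    # Standard normal CDF written with the error function.
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


if __name__ == "__main__":
    for diff in range(0, 401, 100):
        logistic = elo_win_prob(1500.0 + diff, 1500.0)
        normal = normal_win_prob(1500.0 + diff, 1500.0)
        print(f"diff={diff:4d}  logistic={logistic:.4f}  normal={normal:.4f}")
```

Under these assumed values the two curves agree exactly at a rating difference of zero and stay within about two percentage points of each other for differences up to 400 points.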

Package 'Playerratings'

Package ‘PlayerRatings’
March 1, 2020

Version 1.1-0
Date 2020-02-28
Title Dynamic Updating Methods for Player Ratings Estimation
Author Alec Stephenson and Jeff Sonas.
Maintainer Alec Stephenson <[email protected]>
Depends R (>= 3.5.0)
Description Implements schemes for estimating player or team skill based on dynamic updating. Implemented methods include Elo, Glicko, Glicko-2 and Stephenson. Contains pdf documentation of a reproducible analysis using approximately two million chess matches. Also contains an Elo based method for multi-player games where the result is a placing or a score. This includes zero-sum games such as poker and mahjong.
LazyData yes
License GPL-3
NeedsCompilation yes
Repository CRAN
Date/Publication 2020-03-01 15:50:06 UTC

R topics documented: aflodds, elo, elom, fide, glicko, glicko2, hist.rating, kfide, kgames, krating, kriichi, metrics, plot.rating, predict.rating, riichi, steph, Index

aflodds: Australian Football Game Results and Odds

Description
The aflodds data frame has 675 rows and 9 variables. It shows the results and betting odds for 675 Australian football games played by 18 teams from 26th March 2009 until 24th June 2012.

Usage
aflodds

Format
This data frame contains the following columns:
Date: A date object showing the date of the game.
Week: The number of weeks since 25th March 2009.
HomeTeam: The home team name.
AwayTeam: The away team name.
HomeScore: The home team score.
AwayScore: The away team score.
Score: A numeric vector giving the value one, zero or one half for a home win, an away win or a draw respectively.

Where Organized Chess in America Began

Where Organized Chess in America Began
EMPIRE CHESS
Spring 2013, Volume XXXVI, No. 1, $5.00
We are the champions!

Empire Chess, P.O. Box 340511, Brooklyn, NY 11234

NEW YORK STATE CHESS ASSOCIATION, INC.
www.nysca.net

The New York State Chess Association, Inc., America's oldest chess organization, is a not-for-profit organization dedicated to promoting chess in New York State at all levels. As the State Affiliate of the United States Chess Federation, its Directors also serve as USCF Voting Members and Delegates.

President: Bill Goichberg, PO Box 249, Salisbury Mills, NY 12577, [email protected]
Vice President: Polly Wright, 57 Joyce Road, Eastchester, NY 10709, [email protected]
Treasurer: Karl Heck, 5426 Wright Street, CR 67, East Durham, NY 12423, [email protected]
Membership Secretary: Phyllis Benjamin, P.O. Box 340511, Brooklyn, NY 11234-0511, [email protected]
Board of Directors: Upstate: Phil Ferguson; Downstate: Phyllis Benjamin

2013: A Record-Setting Winter

Both the New York State Scholastic Championships and the Greater New York Scholastics set records for attendance this year. For the Greater New York event, the 942 players was the highest total since 1999 at the world’s longest-running scholastic chess tournament. The States in Saratoga drew an Upstate record of almost 700 players, and was the second-highest attendance for all time.

In a world where Internet chess has supposedly blown up the hub-and-spoke model of chess clubs and face-to-face chess tournaments, what’s happening?

More chess is being played than ever, and chess likely has a better public perception than ever before.

The US Chess Rating System

The US Chess Rating System
Mark E. Glickman (Harvard University) and Thomas Doan (Estima)
Proposal, September 2, 2020

The following algorithm is the procedure to rate US Chess events. The procedure applies to six separate rating systems, three of which are for over-the-board (OTB) events, and three of which are for online (OL) events: the Blitz system, the Quick Chess (QC) system, the Regular system, the online Blitz system, the online Quick system, and the online Regular system.[1] In this document, the systems will be abbreviated to OTBB/OTBQ/OTBR for the OTB systems and OLB/OLQ/OLR for the online systems. The formulas apply to each system separately. Note that this document describes only how ratings are computed, and does not set the rules that govern their use.

1 Structure of the Rating Algorithm

Before an event, a player is either unrated in a particular rating system, or has a rating based on N games. Ratings are stored as floating point values, such as 1643.759 and 1431.034. Official ratings are expressed as rounded to the nearest whole number (1644 and 1431 in the above example). A player's rating is termed established if it is based upon more than 25 games. Assume the player competes in m games during the event. Post-event ratings are computed in a sequence of five steps:
• The first step sets temporary initial ratings for unrated players.
• The second step calculates an "effective" number of games played by each player.

[1] For a time control, write mm as the total "main time" in minutes, and ss as the delay or increment in seconds.
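The bookkeeping described in the excerpt above (a stored floating point rating, an official rounded rating, and the established threshold) can be sketched in Python. This is a hedged illustration, not part of the US Chess specification: the class name `StoredRating`, its field names, and the choice to round exact halves upward are assumptions made for the example.

```python
import math
from dataclasses import dataclass

# From the text: a rating is established if based on more than 25 games.
ESTABLISHED_THRESHOLD = 25


@dataclass
class StoredRating:
    value: float  # rating stored as a floating point value, e.g. 1643.759
    games: int    # N, the number of games the rating is based on

    def official(self) -> int:
        """Official rating: the stored value rounded to the nearest whole number.

        Rounding exact halves upward is an assumption; the excerpt does not
        say how ties are handled.
        """
        return math.floor(self.value + 0.5)

    def is_established(self) -> bool:
        """True when the rating is based on more than 25 games."""
        return self.games > ESTABLISHED_THRESHOLD


if __name__ == "__main__":
    for rating in (StoredRating(1643.759, 40), StoredRating(1431.034, 25)):
        print(rating.official(), rating.is_established())
```

The two stored values are the ones quoted in the excerpt; the game counts of 40 and 25 are made up to show both sides of the threshold.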