A Causal Inference Approach to Measure the Vulnerability of Urban Metro Systems

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Town Centre Policies Background Report (2015)

Town Centre Policies Background Report (2015) Contents Page Chapter 1: Introduction 4 Policy Context 4 Survey Method 5 Structure of the Report 5 Chapter 2: Review of Existing Town Centres 6 Major Centres 6 Kilburn 7 Wembley 11 District Centres 15 Burnt Oak 15 Colindale 18 Cricklewood 21 Ealing Road 25 Kenton 29 Kingsbury 33 Neasden 36 Preston Road 39 Wembley Park 45 Willesden Green 49 Local Centres 53 Kensal Rise 53 Queen’s Park 57 Sudbury 61 Chapter 3: Proposed New Local Centres 65 Church Lane 65 Chapter 4: Review of Frontage 70 Policy Context 70 Approach 70 Vacancy Levels 71 Primary Frontage 72 Secondary Frontage 74 Neighbourhood Centres 75 Conclusions 76 1 Monitoring 76 Appendix A 78 Figures 1. Change in Primary Frontage Kilburn 2. Retail Composition of Kilburn 3. Change in Primary Frontage in Wembley 4. Retail Composition of Wembley 5. Change in Primary Frontage in Burnt Oak 6. Retail Composition of Burnt Oak 7. Change in Primary Frontage in Colindale 8. Retail Composition of Colindale 9. Change in Primary Frontage in Cricklewood 10. Retail Composition of Cricklewood 11. Change in Primary Frontage in Ealing Road 12. Retail Composition of Ealing Road 13. Change in Primary Frontage in Harlesden 14. Retail Composition of Harlesden 15. Change in Primary Frontage in Kenton 16. Retail Composition of Kenton 17. Change in Primary Frontage in Kingsbury 18. Retail Composition of Kingsbury 19. Change in Primary Frontage in Neasden 20. Retail Composition of Neasden 21. Change in Primary Frontage in Preston Road 22. Retail Composition of Preston Road 23. Change in Primary Frontage in Wembley Park 24. -

Travellers Rest, Kenton Road, Harrow, London Ha3 8At Freehold Development Opportunity

TRAVELLERS REST, KENTON ROAD, HARROW, LONDON HA3 8AT FREEHOLD DEVELOPMENT OPPORTUNITY geraldeve.com TRAVELLERS REST, KENTON ROAD, HARROW, LONDON HA3 8AT 1 The Opportunity • Site area of approximately 1.7 acres (0.69 ha). • Located in Kenton within the London Borough of Harrow, ten miles north west of central London. • Residential development opportunity for circa 180 units (subject to planning consent), supported by a detailed feasibility study with a net saleable area of 11,271 sq m (121,000 sq ft). • Existing accommodation includes 119-bedroom hotel and restaurant, with approximately 90 parking spaces. • Excellent transport connections (London Fare Zone 4) being adjacent to Kenton Underground Station (Bakerloo Line), and Northwick Park (Metropolitan Line). • Central London is accessible in approximately 30 minutes (Kenton Station to London Paddington Station / London Euston Station), or 20 minutes from Northwick Park to Baker Street. • Hotel accommodation in this location is not protected by Local Plan or London Plan Policy. Therefore, the loss of the existing hotel would not be contrary to Policy. • None of the existing buildings are listed, locally listed or lie within a Conservation Area. 2 TRAVELLERS REST, KENTON ROAD, HARROW, LONDON HA3 8AT Location & Situation The site is located in Kenton within the London Borough of Harrow, in a predominantly residential area approximately nine miles north west of central London and within London Fare Zone 4. The surrounding area is predominantly residential in character and there are a wide range of local retailers located on Kenton Road. Immediately to the south of the site is the Kenton Bridge Medical Centre and a large Sainsbury’s Superstore. -

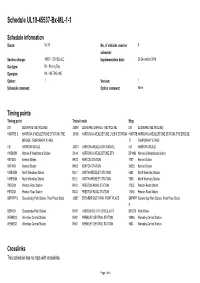

Standard Schedule UL19-45537-Bx-ML-1-1

Schedule UL19-45537-Bx-ML-1-1 Schedule information Route: UL19 No. of vehicles used on 8 schedule: Service change: 45537 - SCHEDULE Implementation date: 26 December 2016 Day type: Bx - Boxing Day Operator: ML - METROLINE Option: 1 Version: 1 Schedule comment: Option comment: None Timing points Timing point Transit node Stop EW EDGWARE (METROLINE) J5309 EDGWARE GARAGE - METROLINE EW EDGWARE (METROLINE) HARRTB S HARROW & WEALDSTONE STATION, THE J5108 HARROW & WEALDSTONE L U/B R STATION HARRTB HARROW & WEALDSTONE STATION, THE BRIDGE, BRIDGE, TEMPORARY STAND S TEMPORARY STAND HD HARROW WEALD J5313 HARROW WEALD BUS GARAGE HD HARROW WEALD HWDNSN Harrow & Wealdstone Station SA14 HARROW & WEALDSTONE STN BP1495 Harrow & Wealdstone Station KNTNSN Kenton Station RK02 KENTON STATION 1757 Kenton Station KNTNSN Kenton Station RK02 KENTON STATION 34328 Kenton Station NWEMSN North Wembley Station RL01 NORTH WEMBLEY STATION 4592 North Wembley Station NWEMSN North Wembley Station RL01 NORTH WEMBLEY STATION 7805 North Wembley Station PSRDSN Preston Road Station R403 PRESTON ROAD STATION 17055 Preston Road Station PSRDSN Preston Road Station R403 PRESTON ROAD STATION 17056 Preston Road Station SBPKPP S Stonebridge Park Station, Point Place Stand J3857 STONEBRIDGE PARK, POINT PLACE SBPKPP Stonebridge Park Station, Point Place Stand S SBPKSN Stonebridge Park Station RH11 HARROW RD NTH CIRCULAR R BP5376 Point Place WEMBCS Wembley Central Station RH01 WEMBLEY CENTRAL STATION 14946 Wembley Central Station WEMBCS Wembley Central Station RH01 WEMBLEY CENTRAL STATION 1933 Wembley Central Station Crosslinks This schedule has no trips with crosslinks. Page 1 of 5 UL19-45537-Bx-ML-1-1 Trip Duty Duty Bus HD EW HARRT HWDN KNTNS PSRDS NWEM WEMB SBPKS SBPKP HD Form Time Next No. -

Make Harrow Your Happy Place by Choosing a Home at Greenstock Lane

Make Harrow your happy place by choosing a home at Greenstock Lane. This delightful collection of one and A thriving high street is nearby as two bedroom apartments is available is the Harrow recreation ground and through Shared Ownership. Located many other attractive green spaces. close to Harrow town centre in north- west London, they offer the perfect Long associated with nearby Harrow base to enjoy the charm and tranquility School, the famous independent boarding of this popular outer London suburb. school for boys, this is an area with an enticing local history, high-proportion Built on the site previously occupied by the of listed buildings and village-like feel Harrow Hotel on Pinner Road, Greenstock due to the pockets of Victorian terraces, Lane is located between Harrow-on-the- detached Edwardian houses and modern Hill and West Harrow train stations offering town centre flats. There is a strong sense swift access into central London. of community and local pride that sets Harrow apart from the rest of London. CGI indicative only. 01 02 CGI indicative only. "Architecture has been carefully considered to ensure all apartments benefit from an abundance of natural light. 03 04 Greenstock Lane is The local area has a wide array of amenities including cafés, pubs, bars perfectly located for and restaurants, creating a hub that Harrow’s busy shopping prides itself on home-grown talent. and leisure facilities. Two large shopping centres are a short walk away: St Anns showcases over 40 high street brands including H&M, WHSmith, Tiger, M&S, Schuh and many more, while St George’s offers more shops as well as a suite of restaurants and a 12-screen Vue cinema. -

Development Policies - Preferred Options

May 2007 Development Policies - Preferred Options 1 May 2007 Development Policies - Preferred Options 2 May 2007 | Development Policies - Preferred Options Contents Contents 1 Introduction 2 1 Promoting a Quality Environment 2 A Better Townscape - By Design 2 Towards a Sustainable Brent, 2020 44 Environmental Protection 54 Enhancing Open Space and Biodiversity 65 2 Meeting Housing Needs 88 3 Connecting Places 106 4 A Strong Local Economy 136 Business, Industry and Warehousing 136 Town Centres and Shopping 140 Culture, Leisure and Tourism 165 5 Enabling Community Facilities 172 1 Glossary 1 May 2007 | Development Policies - Preferred Options Contents May 2007 | Development Policies - Preferred Options 1. Introduction 1 May 2007 | Development Policies - Preferred Options 1. Introduction Purpose of the Development Policies Preferred Options 1.1 This document has been produced by Brent Council as a basis for consultation on the second stage of preparing Brent’s new Local Development Framework (LDF). It builds on the earlier Issues & Options consultation stage in September 2005, taking account of views expressed then in identifying a preferred approach to the future development of the Borough. It also reflects, and builds upon, the Core Strategy Preferred Options which was the subject of public consultation in October- December 2006 What is a Local Development Framework? 1.2 The Local Development Framework will replace Brent's Unitary Development Plan 2004. The Council is required to prepare the LDF by the Planning and Compulsory Purchase Act 2004, and it will provide a strategic planning framework for the Borough, guiding change to 2016 and beyond. When adopted, Brent's LDF, together with the London Plan, will form the statutory Development Plan for the Borough. -

Tokyngton Wards Are Major Destinations in Themselves in Addition to Being Residential Areas

ELECTORAL REVIEW OF THE LONDON BOROUGH OF BRENT WARDING PATTERN SUBMISSION BY THE BRENT CONSERVATIVE GROUP RESPONSE TO THE LGBCE CONSULTATION NOVEMBER 2018 1 | P a g e Introduction Why Brent? During the current London Government Boundary Commission Executive (LGBCE) review process, it has become clear to us that since the previous review in 2000, warding levels have developed out of balance. Brent Council meets the Commission’s criteria for electoral inequality with 7 wards having a variance outside 10%. The outliers are Brondesbury Park at -16% and Tokyngton at 28%. Electoral review process The electoral review will have two distinct parts: Council size – The Brent conservative group welcomes to reduce the number of councillors to 57 from current 63. We appreciate that this will require some existing wards to be redrawn, and recognise that this will represent an opportunity to examine whether the existing boundaries are an appropriate reflection of how Brent has developed since 2000. In addition, the establishment of new developments such as South Kilburn Regeneration, Wembley Regeneration, Alperton and Burnt Oak and Colindale area. Ward boundaries – The Commission will re-draw ward boundaries so that they meet their statutory criteria. Should the Commission require any further detail on our scheme we would be very happy to pass on additional information or to arrange a meeting with Commission members or officers to run through the proposals. 2 | P a g e Interests & identities of local communities The Commission will be looking for evidence on a range of issues to support our reasoning. The best evidence for community identity is normally a combination of factual information such as the existence of communication links, facilities and organisations along with an explanation of how local people use those facilities. -

Wembley Park to Harrow Weald

Cabinet 11 December 2017 Report from the Strategic Director of Regeneration and Environment Quietway - Phase 2: Wembley Park To Harrow Weald Kenton, Northwick Park, Preston, Wembley Wards Affected: Central Key or Non-Key Decision: Key Open or Part/Fully Exempt: Open No. of Appendices: 2 Background Papers: None Rachel Best, Contact Officer: Transportation Planning Manager Tel: 020 8937 5249, [email protected] 1.0 Purpose of the Report 1.1 This report introduces the proposed phase 2 Quietway cycle route from Wembley Park to Harrow Weald. This includes two spurs: one to Wembley Central station; and the second along Churchill Avenue to Kenton Road. The programme is at an early stage with only an indicative route, passing through the central and northern parts of the borough. 1.2 Quietways are important as they provide a network of routes on safer, lower- traffic back streets, aimed at new and less confident cyclists and the proposed phase 2 route from Wembley Park to Harrow Weald forms part of the wider cycle network outlined in the Brent Cycle Strategy 2016 – 2021. 1.3 Seven Quietway routes identified in the pilot phase are being implemented, including one from Regent’s Park to Gladstone Park, which will benefit Brent residents. 1.4 The Quietway programme has evolved to include improvements for pedestrians as well as cyclists. Implementation will involve improvements to junctions and signage to make cycling and walking safer. 2.0 Recommendation(s) 2.1 That Cabinet: 2.1.1 Agrees the route of the proposed Quietway through Brent and for the scheme to be continued to detailed design and consultation. -

The Coronavirus and the Underground

THE CORONAVIRUS AND THE UNDERGROUND What first began in China in December 2019, and has been gathering pace worldwide since, it wasn’t until March that the London Underground began to be noticeably affected, by a fall in passenger numbers by an estimated 20%, later increased to be 70%, and 80% as this issue closed for press. Where possible, office-based staff who could work from home were told to do so, which was soon followed by an announcement that service levels would be reduced in accordance with Government advice. This would serve three purposes, (1) to save running “ghost trains”, (2) providing a service for essential workers and (3) reducing social contact. The measures announced by TfL are summarised thus. • Reduction of services. • No Waterloo & City Line from Friday 20 March. • No Night Tube (and also no Night Overground) from Friday night/Saturday 20/21 March 2020. • The possible closure of up to 40 (mostly) non-interchange stations: Arsenal Chancery Lane Kilburn Park St. James’s Park Barbican Charing Cross Lambeth North St. John’s Wood Bayswater Clapham South Lancaster Gate Southwark Bermondsey Covent Garden Manor House South Wimbledon Blackhorse Road Gloucester Road Mansion House Swiss Cottage Borough Goodge Street Mornington Crescent Stepney Green Bounds Green Great Portland Street Pimlico Temple Bow Road Hampstead Queensway Tooting Bec Caledonian Road Holland Park Redbridge Tufnell Park Chalk Farm Hyde Park Corner Regent’s Park Warwick Avenue All of the stations are “Section 12” stations, governed by the Fire Precautions (Sub-surface Railway Stations) Regulations 2009 which means there must be a specified minimum number of staff on duty, otherwise it would be illegal to open to passengers. -

HARROW COUNCIL TRAFFIC and ROAD SAFETY ADVISORY PANEL WEDNESDAY 22 SEPTEMBER 2004 INFORMATION CIRCULAR PART I 1. Minutes Of

HARROW COUNCIL TRAFFIC AND ROAD SAFETY ADVISORY PANEL WEDNESDAY 22 SEPTEMBER 2004 INFORMATION CIRCULAR PART I Enc. 1. Minutes of Recent Bus & Highway Liaison Meetings and Rail Liaison Meetings: (Pages 1 - 36) Enc. 2. Transport Local Implementation Plan (LIP) Processes: (Pages 37 - 40) PART II - NIL This page is intentionally left blank Information Item 1 Pages 1 to 36 INFORMATION ITEM 1 RAIL LIAISON MEETING BUS & HIGHWAY LIAISON MEETING The following minutes of the above meetings, are attached for information: • Wednesday 7 April 2004 – minutes agreed • Wednesday 7 July 2004 – subject to approval at the next meeting, scheduled for Wednesday 6 October 2004. FOR INFORMATION Author: Helen McGrath, Transportation Administrator Tel: 020 8424 7569 Email: [email protected] 1 This page is intentionally left blank 2 Harrow Council Minutes of the Rail Liaison Meeting Wednesday 7 April 2004 Present Harrow Council John Nickolay JN Councillor Alan Blann AB Councillor Thaya Idaikkadar TI Councillor Steve Swain SJS Transportation Manager Hanif Islam HI Transport Planner Fuad Omar FO Travel Plan Officer Helen McGrath HM Minutes Rail Operators Pat Hansberry PH Metropolitan Line, London Underground Limited Phil Wood PW Planning, London Underground Limited Mike Guy MG London Underground Limited Christopher Upfold CU Accessibility, London Underground Limited Matt Ball MB South Central Charlie Johnston CJ Silverlink County Ian Polush IP Silverlink Metro Harrow Public Transport Users Association Anthony Wood AW Chairman ACTION 1.0 APOLOGIES Councillor Adrian Pinkus – Harrow Council Chris Mair – Network Rail Julian Drury – Silverlink Stuart Yeatman – Chiltern Rail a) Minutes of Previous Meeting Agreed. b) Matters Arising To be dealt with under appropriate heading. -

Coach Travel Guide 2019/2020 Welcome Vectare’S Five Star Service

Coach travel guide 2019/2020 Welcome Vectare’s Five Star Service Dear Parents / Guardians, All of our vehicles are clean, modern and comfortable, and they are either operated in-house by the school or by one of our approved coach Welcome to our inaugural coach travel guide, containing Each of the routes will only operate subject to sufficient details of our new school coach service due to launch bookings being made to make operating a route operators. This means that all vehicles inspected and maintained to the in September 2019. We are pleased to be able to offer worthwhile. As such, we would politely request that all highest standard, giving you peace of mind that your daughter is travelling girls a new way of getting to and from school, and the bookings are made as soon as possible, with annual in safety. decision to introduce school coach services has been bookings being made by July 25th at the latest. After this taken in response to parental feedback and the very date we will review all bookings, select appropriately positive response to our school travel survey. Many sized vehicles for each route we plan to operate, and girls have also been speaking to us about the need for confirm to parents the final details of the coach services We use dedicated drivers who work on the same route. These drivers get sustainable travel options for their journey to school, that will operate from September. to know the pupils and are subject to an enhanced disclosure and barring and whilst many students currently travel to school via service check (DBS) and full MiDAS / CPC training. -

Underground Diary

UNDERGROUND DIARY FEBRUARY 2019 Friday 1 February began with westbound C&H trains non-stopping Westbourne Park from the start of traffic until 07.40 because of defective OPO equipment and no staff being available to despatch trains. A defective Jubilee Line train at Dollis Hill at 06.30 caused a 20-minute southbound delay, aggravated by a points failure at West Hampstead at 07.05. As a result, all West Hampstead reversers during the morning peak were cancelled, which equated to six trains. A fire alarm activation at Warwick Avenue at 10.25 necessitated the station’s closure until 10.50. All SSR lines were suspended through Moorgate from 11.05 because of a track circuit failure, resuming at 12.05. A Network Rail points failure caused the District Line to be suspended between Turnham Green and Richmond from 12.35. Two trains were stalled between stations, that between Kew Gardens and Richmond authorised to return to Kew Gardens and then to Gunnersbury, where it crossed over and returned eastbound. After that move had been completed, the westbound train stalled approaching Gunnersbury was able to proceed into the station and then reverse, following which a limited service resumed between Turnham Green and Gunnersbury. Through services to Richmond resumed at 14.15. Another defective Jubilee Line train at Waterloo at 19.05 resulted in a suspension on the ‘extension’. The offending train moved off to Southwark and was detrained there, with services resuming at 19.30. A person under an eastbound District Line train at Southfields suspended the Wimbledon branch of the District Line from 23.00, with a limited service resuming to Parsons Green at 23.25. -

223 Bus Time Schedule & Line Route

223 bus time schedule & line map 223 Wembley Central - Harrow View In Website Mode The 223 bus line (Wembley Central - Harrow) has 2 routes. For regular weekdays, their operation hours are: (1) Harrow: 12:10 AM - 11:43 PM (2) Wembley Central: 5:10 AM - 11:55 PM Use the Moovit App to ƒnd the closest 223 bus station near you and ƒnd out when is the next 223 bus arriving. Direction: Harrow 223 bus Time Schedule 31 stops Harrow Route Timetable: VIEW LINE SCHEDULE Sunday 12:10 AM - 11:43 PM Monday 12:10 AM - 11:43 PM Wembley Central Station (CN) High Road, London Tuesday 12:10 AM - 11:43 PM Ealing Road (CR) Wednesday 12:10 AM - 11:43 PM 5 Coronet Parade, Ealing Road Thursday 12:10 AM - 11:43 PM High Road Wembley (CV) Friday 12:10 AM - 11:43 PM 8 Ealing Road, London Saturday 12:10 AM - 11:43 PM Wembley Central Station (CN) High Road, London Ark Elvin Academy / Park Lane (SG) 383 High Road Wembley, London 223 bus Info Direction: Harrow Wembley Triangle (SM) Stops: 31 356-368 High Road, London Trip Duration: 40 min Line Summary: Wembley Central Station (CN), Ealing Wembley Stadium Station (SD) Road (CR), High Road Wembley (CV), Wembley Central Station (CN), Ark Elvin Academy / Park Lane Lakeside Way (D) (SG), Wembley Triangle (SM), Wembley Stadium Station (SD), Lakeside Way (D), Wembley Arena (G), Wembley Arena (G) Empire Way (J), Wembley Park (M), Corringham Road (P), Mayƒelds (W), Beverley Gardens (N), Empire Way (J) Ravenscroft Avenue (PC), Preston Road Station Empire Way, London (PB), Fernleigh Court (W), Montpelier Rise (W), South Kenton