Developing an In-Kernel File Sharing Server Solution Based on Server Message Block Protocol

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

OES 2 SP3: Novell CIFS for Linux Administration Guide 9.2.1 Mapping Drives from a Windows 2000 Or XP Client

www.novell.com/documentation Novell CIFS Administration Guide Open Enterprise Server 2 SP3 May 03, 2013 Legal Notices Novell, Inc., makes no representations or warranties with respect to the contents or use of this documentation, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to revise this publication and to make changes to its content, at any time, without obligation to notify any person or entity of such revisions or changes. Further, Novell, Inc., makes no representations or warranties with respect to any software, and specifically disclaims any express or implied warranties of merchantability or fitness for any particular purpose. Further, Novell, Inc., reserves the right to make changes to any and all parts of Novell software, at any time, without any obligation to notify any person or entity of such changes. Any products or technical information provided under this Agreement may be subject to U.S. export controls and the trade laws of other countries. You agree to comply with all export control regulations and to obtain any required licenses or classification to export, re-export or import deliverables. You agree not to export or re-export to entities on the current U.S. export exclusion lists or to any embargoed or terrorist countries as specified in the U.S. export laws. You agree to not use deliverables for prohibited nuclear, missile, or chemical biological weaponry end uses. See the Novell International Trade Service Web page (http://www.novell.com/info/exports/) for more information on exporting Novell software. -

Oracle® ZFS Storage Appliance Security Guide, Release OS8.6.X

Oracle® ZFS Storage Appliance Security Guide, Release OS8.6.x Part No: E76480-01 September 2016 Copyright © 2014, 2016, Oracle and/or its affiliates. All rights reserved. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS. Oracle programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, delivered to U.S. Government end users are "commercial computer software" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, use, duplication, disclosure, modification, and adaptation of the programs, including any operating system, integrated software, any programs installed on the hardware, and/or documentation, shall be subject to license terms and license restrictions applicable to the programs. -

Shared Resource Matrix Methodology: an Approach to Identifying Storage and Timing Channels

Shared Resource Matrix Methodology: An Approach to Identifying Storage and Timing Channels RICHARD A. KEMMERER University of California, Santa Barbara Recognizing and dealing with storage and timing channels when performing the security analysis of a computer system is an elusive task. Methods for discovering and dealing with these channels have mostly been informal, and formal methods have been restricted to a particular specification language. A methodology for discovering storage and timing channels that can be used through all phases of the software life cycle to increase confidence that all channels have been identified is presented. The methodology is presented and applied to an example system having three different descriptions: English, formal specification, and high-order language implementation. Categories and Subject Descriptors: C.2.0 [Computer-Communication Networks]: General--security and protection; D.4.6 [Operating Systems]: Security and Protection--information flow controls General Terms: Security Additional Key Words and Phrases: Protection, confinement, flow analysis, covert channels, storage channels, timing channels, validation 1. INTRODUCTION When performing a security analysis of a system, both overt and covert channels of the system must be considered. Overt channels use the system's protected data objects to transfer information. That is, one subject writes into a data object and another subject reads from the object. Subjects in this context are not only active users, but are also processes and procedures acting on behalf of the user. The channels, such as buffers, files, and I/O devices, are overt because the entity used to hold the information is a data object; that is, it is an object that is normally viewed as a data container. -

Mac OS X Server Administrator's Guide

Mac OS X Server Administrator’s Guide Apple Computer, Inc. © 2002 Apple Computer, Inc. All rights reserved. Under the copyright laws, this publication may not be copied, in whole or in part, without the written consent of Apple. The Apple logo is a trademark of Apple Computer, Inc., registered in the U.S. and other countries. Use of the “keyboard” Apple logo (Option-Shift-K) for commercial purposes without the prior written consent of Apple may constitute trademark infringement and unfair competition in violation of federal and state laws. Apple, the Apple logo, AppleScript, AppleShare, AppleTalk, ColorSync, FireWire, Keychain, Mac, Macintosh, Power Macintosh, QuickTime, Sherlock, and WebObjects are trademarks of Apple Computer, Inc., registered in the U.S. and other countries. AirPort, Extensions Manager, Finder, iMac, and Power Mac are trademarks of Apple Computer, Inc. Adobe and PostScript are trademarks of Adobe Systems Incorporated. Java and all Java-based trademarks and logos are trademarks or registered trademarks of Sun Microsystems, Inc. in the U.S. and other countries. Netscape Navigator is a trademark of Netscape Communications Corporation. RealAudio is a trademark of Progressive Networks, Inc. © 1995–2001 The Apache Group. All rights reserved. UNIX is a registered trademark in the United States and other countries, licensed exclusively through X/Open Company, Ltd. Contents Preface How to Use This Guide What’s Included -

SYSTEM V RELEASE 4 Migration Guide

AT&T UNIX SYSTEM V RELEASE 4 Migration Guide UNIX Software Operation Copyright 1990, 1989, 1988, 1987, 1986, 1985, 1984, 1983 AT&T All Rights Reserved Printed In USA Published by Prentice-Hall, Inc. A Division of Simon & Schuster Englewood Cliffs, New Jersey 07632 No part of this publication may be reproduced or transmitted in any form or by any means-graphic, electronic, electrical, mechanical, or chemical, including photocopying, recording in any medium, taping, by any computer or information storage and retrieval systems, etc., without prior permissions in writing from AT&T. IMPORTANT NOTE TO USERS While every effort has been made to ensure the accuracy of all information in this document, AT&T assumes no liability to any party for any loss or damage caused by errors or omissions or by statements of any kind in this document, its updates, supplements, or special editions, whether such errors are omissions or statements resulting from negligence, accident, or any other cause. AT&T further assumes no liability arising out of the application or use of any product or system described herein; nor any liability for incidental or consequential damages arising from the use of this document. AT&T disclaims all warranties regarding the information contained herein, whether expressed, implied or statutory, including implied warranties of merchantability or fitness for a particular purpose. AT&T makes no representation that the interconnection of products in the manner described herein will not infringe on existing or future patent rights, nor do the descriptions contained herein imply the granting or license to make, use or sell equipment constructed in accordance with this description. -

SoftNAS Deployment Guide for High-Performance SQL Storage

SoftNAS Deployment Guide for High-Performance SQL Storage Introduction SoftNAS cloud NAS systems are based on an innovative, memory-centric storage architecture that delivers unparalleled NAS performance, efficiency, and value. They incorporate a hybrid disk storage technology that tailors the usage of data disks, log solid-state cache drives (SSDs), and read cache SSDs to the data share's specific needs. Additional features include variable storage record size, data compression, and multiple connectivity options. As a Cloud NAS solution, SoftNAS cloud NAS systems provide an excellent base for Microsoft Windows Server deployments by providing iSCSI or Fibre Channel block storage for Microsoft SQL Server, and network file system (NFS) or server message block (SMB) file storage for Microsoft Windows client access. This document covers the best practices to follow when deploying Microsoft SQL Server on a SoftNAS cloud NAS system. The intended audience is storage administrators and Microsoft SQL Server database administrators. Maintaining High Availability As with any business-critical application, high availability is a crucial design criterion to be considered when deploying a Microsoft SQL Server installation. Microsoft SQL Server 2016 can be installed on local and/or shared file systems, and SoftNAS cloud NAS systems can satisfy both of these options. Local file systems (from the Microsoft Windows Server perspective) are hosted as block volumes—iSCSI and/or Fibre-Channel-connected LUNs and file systems as SMB and/or NFS volumes. High availability starts with the network connectivity supporting the storage and server interconnectivity. Any design for the storage infrastructure should avoid single points of failure. Because many white papers and publications cover storage-area networking and network-attached storage resilience, those topics are not covered in detail in this paper. -

Hitachi Data Ingestor File System Protocols (CIFS/NFS) Administrator's Guide

Hitachi Data Ingestor File System Protocols (CIFS/NFS) Administrator's Guide MK-90HDI035-13 © 2013-2015 Hitachi, Ltd. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying and recording, or stored in a database or retrieval system for any purpose without the express written permission of Hitachi, Ltd. Hitachi, Ltd., reserves the right to make changes to this document at any time without notice and assumes no responsibility for its use. This document contains the most current information available at the time of publication. When new or revised information becomes available, this entire document will be updated and distributed to all registered users. Some of the features described in this document might not be currently available. Refer to the most recent product announcement for information about feature and product availability, or contact Hitachi Data Systems Corporation at https://portal.hds.com. Notice: Hitachi, Ltd., products and services can be ordered only under the terms and conditions of the applicable Hitachi Data Systems Corporation agreements. The use of Hitachi, Ltd., products is governed by the terms of your agreements with Hitachi Data Systems Corporation. Hitachi is a registered trademark of Hitachi, Ltd., in the United States and other countries. Hitachi Data Systems is a registered trademark and service mark of Hitachi, Ltd., in the United States and other countries. Archivas, Essential NAS Platform, HiCommand, Hi-Track, ShadowImage, Tagmaserve, Tagmasoft, Tagmasolve, Tagmastore, TrueCopy, Universal Star Network, and Universal Storage Platform are registered trademarks of Hitachi Data Systems Corporation. -

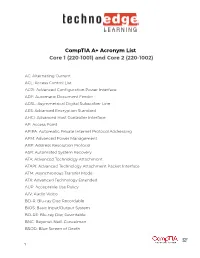

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002)

CompTIA A+ Acronym List Core 1 (220-1001) and Core 2 (220-1002) AC: Alternating Current ACL: Access Control List ACPI: Advanced Configuration Power Interface ADF: Automatic Document Feeder ADSL: Asymmetrical Digital Subscriber Line AES: Advanced Encryption Standard AHCI: Advanced Host Controller Interface AP: Access Point APIPA: Automatic Private Internet Protocol Addressing APM: Advanced Power Management ARP: Address Resolution Protocol ASR: Automated System Recovery ATA: Advanced Technology Attachment ATAPI: Advanced Technology Attachment Packet Interface ATM: Asynchronous Transfer Mode ATX: Advanced Technology Extended AUP: Acceptable Use Policy A/V: Audio Video BD-R: Blu-ray Disc Recordable BIOS: Basic Input/Output System BD-RE: Blu-ray Disc Rewritable BNC: Bayonet-Neill-Concelman BSOD: Blue Screen of Death BYOD: Bring Your Own Device CAD: Computer-Aided Design CAPTCHA: Completely Automated Public Turing test to tell Computers and Humans Apart CD: Compact Disc CD-ROM: Compact Disc-Read-Only Memory CD-RW: Compact Disc-Rewritable CDFS: Compact Disc File System CERT: Computer Emergency Response Team CFS: Central File System, Common File System, or Command File System CGA: Computer Graphics and Applications CIDR: Classless Inter-Domain Routing CIFS: Common Internet File System CMOS: Complementary Metal-Oxide Semiconductor CNR: Communications and Networking Riser COMx: Communication port (x = port number) CPU: Central Processing Unit CRT: Cathode-Ray Tube DaaS: Data as a Service DAC: Discretionary Access Control DB-25: Serial Communications -

OSI Model and Network Protocols

CHAPTER FOUR OSI Model and Network Protocols Objectives 1.1 Explain the function of common networking protocols: TCP, FTP, UDP, TCP/IP suite, DHCP, TFTP, DNS, HTTP(S), ARP, SIP (VoIP), RTP (VoIP), SSH, POP3, NTP, IMAP4, Telnet, SMTP, SNMP2/3, ICMP, IGMP, TLS. 4.1 Explain the function of each layer of the OSI model: Layer 1 – physical, Layer 2 – data link, Layer 3 – network, Layer 4 – transport, Layer 5 – session, Layer 6 – presentation, Layer 7 – application. What You Need To Know: Identify the seven layers of the OSI model. Identify the function of each layer of the OSI model. Identify the layer at which networking devices function. Identify the function of various networking protocols. Introduction One of the most important networking concepts to understand is the Open Systems Interconnection (OSI) reference model. This conceptual model, created by the International Organization for Standardization (ISO) in 1978 and revised in 1984, describes a network architecture that allows data to be passed between computer systems. This chapter looks at the OSI model and describes how it relates to real-world networking. It also examines how common network devices relate to the OSI model. Even though the OSI model is conceptual, an appreciation of its purpose and function can help you better understand how protocol suites and network architectures work in practical applications. The OSI Seven-Layer Model As shown in Figure 4.1, the OSI reference model is built, bottom to top, in the following order: physical, data link, network, transport, session, presentation, and application. -

Linux for zSeries: Device Drivers and Installation Commands (March 4, 2002) Summary of Changes

Linux for zSeries Device Drivers and Installation Commands (March 4, 2002) Linux Kernel 2.4 LNUX-1103-07 Note: Before using this document, be sure to read the information in “Notices” on page 207. Eighth Edition – (March 2002) This edition applies to the Linux for zSeries kernel 2.4 patch (made in September 2001) and to all subsequent releases and modifications until otherwise indicated in new editions. © Copyright International Business Machines Corporation 2000, 2002. All rights reserved. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Summary of changes: Edition 8 changes, Edition 7 changes, Edition 6 changes, Edition 5 changes, Edition 4 changes, Edition 3 changes, Edition 2 changes. About this book: How this book is organized, Who should read this book, Assumptions. Chapter 5. Linux for zSeries Console device drivers: Console features, Console kernel parameter syntax, Console kernel examples, Using the console, Console – Use of VInput, Console limitations. Chapter 6. Channel attached tape device driver: Tape driver features, Tape character device front-end, Tape block -

Samba-3 by Example

Samba-3 by Example Practical Exercises in Successful Samba Deployment John H. Terpstra May 27, 2009 ABOUT THE COVER ARTWORK The cover artwork of this book continues the freedom theme of the first edition of “Samba-3 by Example”. The history of civilization demonstrates the fragile nature of freedom. It can be lost in a moment, and once lost, the cost of recovering liberty can be incredible. The last edition cover featured Alfred the Great who liberated England from the constant assault of Vikings and Norsemen. Events in England that finally liberated the common people came about in small steps, but the result should not be underestimated. Today, as always, freedom and liberty are seldom appreciated until they are lost. If we cannot quantify what is the value of freedom, we shall be little motivated to protect it. Samba-3 by Example Cover Artwork: The British houses of parliament are a symbol of the Westminster system of government. This form of government permits the people to govern themselves at the lowest level, yet it provides for courts of appeal that are designed to protect freedom and to hold back all forces of tyranny. The clock is a pertinent symbol of the importance of time and place. The information technology industry is being challenged by the imposition of new laws, hostile litigation, and the imposition of significant constraint of practice that threatens to remove the freedom to develop and deploy open source software solutions. Samba is a software solution that epitomizes freedom of choice in network interoperability for Microsoft Windows clients. -

Importance of DNS Suffixes and NetBIOS

Importance of DNS Suffixes and NetBIOS Priasoft DNS Suffixes? What are DNS Suffixes, and why are they important? DNS suffixes are strings that are appended to a host name in order to query DNS for an IP address. DNS works by use of “Domains”, which are comparable to namespaces and are usually textual values that may or may not be “dotted” with other domains. “Support.microsoft.com” could be considered a domain or namespace for which there are likely many web servers that can respond to requests to that domain. There could be a server named SUPREDWA.support.microsoft.com, for example. The DNS suffix in this case is the domain “support.microsoft.com”. When an IP address is needed for a host name, DNS can only respond based on hosts that it knows about based on domains. DNS does not currently employ a “null” domain that can contain just server names. As such, if the IP address of a server named “Server1” is needed, more detail must be added to that name before querying DNS. A suffix can be appended to that name so that the DNS server can look at the records of the domain, looking for “Server1”. A client host can be configured with multiple DNS suffixes so that there is a “best chance” of discovery for a host name. NetBIOS? NetBIOS is an older Microsoft technology from a time before the popularity of DNS. WINS, for those who remember, was the Microsoft service that kept a table of names (NetBIOS names) for which IP address info could be returned.
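
The suffix behaviour described in the excerpt above can be illustrated with a minimal Python sketch. It only builds the list of fully qualified names a client might try for a bare host name and performs no DNS lookups. The function name `candidate_fqdns` and the second suffix `corp.example` are hypothetical, chosen for illustration; real resolvers apply further rules (for example, dot-count thresholds) that are not modelled here.

```python
def candidate_fqdns(host, suffixes):
    """Build the names a client might query for `host`, in order.

    Illustrative assumption: each configured suffix is appended to the
    bare host name in the order given. No DNS query is performed.
    """
    candidates = []
    for suffix in suffixes:
        # Strip stray dots so "support.microsoft.com." and
        # ".support.microsoft.com" both join cleanly.
        candidates.append(f"{host}.{suffix.strip('.')}")
    return candidates


if __name__ == "__main__":
    # "Server1" and "support.microsoft.com" come from the excerpt;
    # "corp.example" is a made-up second suffix.
    for name in candidate_fqdns("Server1", ["support.microsoft.com", "corp.example"]):
        print(name)
```

A client configured with several suffixes would try each resulting name in turn until one resolves, which is the "best chance" of discovery the excerpt mentions.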