A Distributional Perspective on Reinforcement Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Volume 108, Issue 12

BObcaTS TEAM UP BU STUDENT WINS WITH CHRISTMAS MCIE AwaRD pg. 2 CHEER pg. 3 VOL. 108 | ISSUE NO.12| NOVEMBER 28TH, 2017 ...caFFEINE... SINCE 1910 LONG NIGHT AG A INST PROCR A STIN A TION ANOTHER SUCCESS Students cracking down and getting those assignments out of the way. Photo Credit: Patrick Gohl. Patrick Gohl, Reporter am sure the word has spread Robbins Library on Wednesday in the curriculum area. If you of the whole event. I will now tinate. I around campus already, ex- the 22nd of November. were a little late for your sched- remedy this grievous error and Having made it this far in ams are just around the cor- The event was designed to uled session you were likely to make mention of the free food. the semester, one could be led ner. ‘Tis the season to toss your combat study procrastination, get bumped back as there were Healthy snacks such as apples to believe, quite incorrectly, amassed library of class notes in and encourage students to be- many students looking for help and bananas were on offer from that the home stretch is more of frustration, to scream at your gin their exam preparation. It all to gain that extra edge on their the get go along with tea and the same. This falsehood might computer screen like a mad- started at 7:00PM and ran until assignments and exams. coffee. Those that managed be an alluring belief to grasp man, and soak your pillow with 3:00AM the following morn- In addition to the academic to last until midnight were re- hold of when the importance to tears of desperation. -

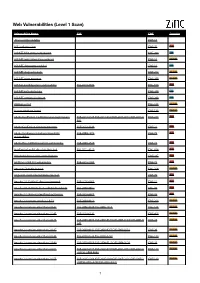

Web Vulnerabilities (Level 1 Scan)

Web Vulnerabilities (Level 1 Scan) Vulnerability Name CVE CWE Severity .htaccess file readable CWE-16 ASP code injection CWE-95 High ASP.NET MVC version disclosure CWE-200 Low ASP.NET application trace enabled CWE-16 Medium ASP.NET debugging enabled CWE-16 Low ASP.NET diagnostic page CWE-200 Medium ASP.NET error message CWE-200 Medium ASP.NET padding oracle vulnerability CVE-2010-3332 CWE-310 High ASP.NET path disclosure CWE-200 Low ASP.NET version disclosure CWE-200 Low AWStats script CWE-538 Medium Access database found CWE-538 Medium Adobe ColdFusion 9 administrative login bypass CVE-2013-0625 CVE-2013-0629CVE-2013-0631 CVE-2013-0 CWE-287 High 632 Adobe ColdFusion directory traversal CVE-2013-3336 CWE-22 High Adobe Coldfusion 8 multiple linked XSS CVE-2009-1872 CWE-79 High vulnerabilies Adobe Flex 3 DOM-based XSS vulnerability CVE-2008-2640 CWE-79 High AjaxControlToolkit directory traversal CVE-2015-4670 CWE-434 High Akeeba backup access control bypass CWE-287 High AmCharts SWF XSS vulnerability CVE-2012-1303 CWE-79 High Amazon S3 public bucket CWE-264 Medium AngularJS client-side template injection CWE-79 High Apache 2.0.39 Win32 directory traversal CVE-2002-0661 CWE-22 High Apache 2.0.43 Win32 file reading vulnerability CVE-2003-0017 CWE-20 High Apache 2.2.14 mod_isapi Dangling Pointer CVE-2010-0425 CWE-20 High Apache 2.x version equal to 2.0.51 CVE-2004-0811 CWE-264 Medium Apache 2.x version older than 2.0.43 CVE-2002-0840 CVE-2002-1156 CWE-538 Medium Apache 2.x version older than 2.0.45 CVE-2003-0132 CWE-400 Medium Apache 2.x version -

Kabbalah, Magic & the Great Work of Self Transformation

KABBALAH, MAGIC AHD THE GREAT WORK Of SELf-TRAHSfORMATIOH A COMPL€T€ COURS€ LYAM THOMAS CHRISTOPHER Llewellyn Publications Woodbury, Minnesota Contents Acknowledgments Vl1 one Though Only a Few Will Rise 1 two The First Steps 15 three The Secret Lineage 35 four Neophyte 57 five That Darkly Splendid World 89 SIX The Mind Born of Matter 129 seven The Liquid Intelligence 175 eight Fuel for the Fire 227 ntne The Portal 267 ten The Work of the Adept 315 Appendix A: The Consecration ofthe Adeptus Wand 331 Appendix B: Suggested Forms ofExercise 345 Endnotes 353 Works Cited 359 Index 363 Acknowledgments The first challenge to appear before the new student of magic is the overwhehning amount of published material from which he must prepare a road map of self-initiation. Without guidance, this is usually impossible. Therefore, lowe my biggest thanks to Peter and Laura Yorke of Ra Horakhty Temple, who provided my first exposure to self-initiation techniques in the Golden Dawn. Their years of expe rience with the Golden Dawn material yielded a structure of carefully selected ex ercises, which their students still use today to bring about a gradual transformation. WIthout such well-prescribed use of the Golden Dawn's techniques, it would have been difficult to make progress in its grade system. The basic structure of the course in this book is built on a foundation of the Golden Dawn's elemental grade system as my teachers passed it on. In particular, it develops further their choice to use the color correspondences of the Four Worlds, a piece of the original Golden Dawn system that very few occultists have recognized as an ini tiatory tool. -

Open Source Katalog 2009 – Seite 1

Optaros Open Source Katalog 2009 – Seite 1 OPEN SOURCE KATALOG 2009 350 Produkte/Projekte für den Unternehmenseinsatz OPTAROS WHITE PAPER Applikationsentwicklung Assembly Portal BI Komponenten Frameworks Rules Engine SOA Web Services Programmiersprachen ECM Entwicklungs- und Testumgebungen Open Source VoIP CRM Frameworks eCommerce BI Infrastrukturlösungen Programmiersprachen ETL Integration Office-Anwendungen Geschäftsanwendungen ERP Sicherheit CMS Knowledge Management DMS ESB © Copyright 2008. Optaros Open Source Katalog 2009 - Seite 2 Optaros Referenz-Projekte als Beispiele für Open Source-Einsatz im Unternehmen Kunde Projektbeschreibung Technologien Intranet-Plattform zur Automatisierung der •JBossAS Geschäftsprozesse rund um „Information Systems •JBossSeam Compliance“ •jQuery Integrationsplattform und –architektur NesOA als • Mule Enterprise Bindeglied zwischen Vertriebs-/Service-Kanälen und Service Bus den Waren- und Logistiksystemen •JBossMiddleware stack •JBossMessaging CRM-Anwendung mit Fokus auf Sales-Force- •SugarCRM Automation Online-Community für die Entwickler rund um die •AlfrescoECM Endeca-Search-Software; breit angelegtes •Liferay Enterprise Portal mit Selbstbedienungs-, •Wordpress Kommunikations- und Diskussions-Funktionalitäten Swisscom Labs: Online-Plattform für die •AlfrescoWCMS Bereitstellung von zukünftigen Produkten (Beta), •Spring, JSF zwecks Markt- und Early-Adopter-Feedback •Nagios eGovernment-Plattform zur Speicherung und •AlfrescoECM Zurverfügungstellung von Verwaltungs- • Spring, Hibernate Dokumenten; integriert -

Groupware Enterprise Collaboration Suite

Groupware Enterprise Collaboration Suite Horde Groupware ± the free, enterprise ready, browser based collaboration suite. Manage and share calendars, contacts, tasks and notes with the standards compliant components from the Horde Project. Horde Groupware Webmail Edition ± the complete, stable communication solution. Combine the successful Horde Groupware with one of the most popular webmail applications available and trust in ten years experience in open source software development. Extend the Horde Groupware suites with any of the Horde modules, like file manager, bookmark manager, photo gallery, wiki, and many more. Core features of Horde Groupware Public and shared resources (calendars, address books, task lists etc.) Unlimited resources per user 40 translations, right-to-left languages, unicode support Global categories (tags) Customizable portal screen with applets for weather, quotes, etc. 27 different color themes Online help system Import and export of external groupware data Synchronization with PDAs, mobile phones, groupware clients Integrated user management, group support and permissions system User preferences with configurable default values WCAG 1.0 Priority 2/Section 508 accessibility Webmail AJAX, mobile and traditional browser interfaces IMAP and POP3 support Message filtering Message searching HTML message composition with WYSIWIG editor Spell checking Built in attachment viewers Encrypting and signing of messages (S/MIME and PGP) Quota support AJAX Webmail Application-like user interface Classical -

Organizing Your PHP Projects

Organizing Your PHP Projects Paul M. Jones Read These • “Mythical Man-Month”, Brooks • “Art of Project Management”, Berkun • “Peopleware”, DeMarco and Lister 2 Project Planning in One Lesson • Examine real-world projects • The One Lesson for organizing your project • Elements of The One Lesson • The One Lesson in practice 3 About Me • Web Architect • PHP since 1999 (PHP 3) • Solar Framework (lead) • Savant Template System (lead) • Zend Framework (found. contrib.) • PEAR Group (2007-2008) 4 About You • Project lead/manager? • Improve team consistency? • Want to share your code with others? • Want to use code from others? • Want to reduce 5 Goals for Organizing • Security • Integration and extension • Adaptable to change • Predictable and maintainable • Teamwork consistency • Re-use rules on multiple projects 6 Project Research; or, “Step 1: Study Underpinnings” 7 Project Evolution Tracks One-Off Heap Standalone App Library ? Modular App Collection Framework CMS 8 One-Off Heap • No discernible architecture • Browse directly to the scripts • Add to it piece by piece • Little to no separation of concerns • All variables are global • Unmanageable, difficult to extend 9 Standalone Application • One-off heap ++ • Series of separate page scripts and common includes • Installed in web root • Each responsible for global execution environment • Script variables still global 10 Standalone Application: Typical Main Script // Setup or bootstrapping define('INCLUDE_PATH', dirname(__FILE__) . '/'); include_once INCLUDE_PATH . 'inc/prepend.inc.php'; include_once INCLUDE_PATH . 'lib/foo.class.php'; include_once INCLUDE_PATH . 'lib/View.class.php'; // Actions (if we're lucky) $foo = new Foo(); $data = $foo->getData(); // Display (if we're lucky) $view = new View(INCLUDE_PATH . 'tpl/'); $view->assign($data); echo $view->fetch('template.tpl'); // Teardown include_once INCLUDE_PATH . -

Best Php Webmail Software

Best php webmail software Get the answer to "What are the best self-hosted webmail clients? in your config/ file) if you need messages to appear instantly. Free and open source webmail software for the masses, written in PHP. Install it on your web servers for personal or commercial use, redistribute, integrate with other software, or alter the source code (provided that. These clients can work under many types of platforms such as PHP, ASP Here, we have compiled a collection of seven webmail. SquirrelMail is one of the best webmail clients written purely in PHP. It supports basic email protocols such as SMTP, IMAP, and others. Webmail's software's are scripts which run on your servers and give you browser based mail client interface like Gmail, Yahoo etc. There are. For this roundup we have compiled a list of Best Webmail Clients for both T-dah is a free PHP webmail application which is built from the. Hastymail2 is a full featured IMAP/SMTP client written in PHP. Our goal is to create a fast, secure, compliant web mail client that has great usability. Hastymail2 is much more lightweight than most popular web based mail applications but still. RainLoop Webmail - Simple, modern & fast web-based email client. Also known as “Horde IMP”, Horde Mail is a free and open source web-mail client written in PHP. Its development started in , so it's a. Check out these 10 amazing webmail clients worth considering and see how In today's article, we're going to highlight some of the best webmail clients It's free to use and can be installed on any server that supports PHP. -

(E: Tilrtin, and J. Fivzsi.;.V.) , 17

, DOCUMENT 11ESp5E r . ED 172 500 s- EC 1114906 AUTHOR .- Jordan., Jule E.,El. -,, Tina Exceptional Child Education at the Eic;:ntennial; A . '3:14ra-de of 'Progress! Pavised ,Editicn. 1 INSTITUTION coiocil for ,Efceptional 'Child rin, ioston-, Va. ... .IVormation S...rvic,)s and Publications. SPONS AGENCY -National Inst. -lot .Educ,ition (DHFW) , Washington, .t1. '.. ., D.C.. .., . PUB DAT '77 ,./; i. NOTE . 121 p. ;A.: Product ot the E7?,TC Clearinghouse or. Handkcarpped.- a.nd Gifted Zhitdren; Some small print . and. photographs may not re produce clearly "AVAILABLE*FlOM The Council..for.Exceptio::ial Childrctn, Publication Sales Unit, 1920 As.nociation Drive, r.e.st'on,Virginia 220,91(PublicatiOn No. 1.45,/$5.5.0) .' EDRS PRICE MF01/PC05 'Plus Post-age. DESCRIPTORS *Edtloation-a. Trends; i-A.mi.ntary S'econdary, Education; -'*Equal Education; *Federal. I.,-gislati.on; *Handicapped Childr-en; Interviews; Pa: ant RolP; rT=.?acher. Fducation; *Trend Analysis ABSTRACT Thitteer. ontr: but?.J, irape.rs,int.ervis.--ws, and diesous.sions focus on historical. truss in the ?ducatior..f handicappedhildren And youth.l'h-firs:. .section provid thr warspectivesa th., status :-.)f,---xcpticinal child ..:-Aucationhrot gh' int-..rvt.:wsith math- rs of C''..sr..-4r..--7-(.3..-i.3.11dOlph,i:. ,Willi in C. Pertins,.AndA. .Q11i--±),tl,=:?,u:: --.0od-,..dtA.-7.' 1 t.:. oli for *.E.,:,H r icapped (E: tilrtin, and J.fivzsi.;.v.), _17.:,.1, Th.=i2,..;un.:1.1 for 1:xc.,!.,ptionel Children (W. Ge9r and. F..Jor,,,:3).1.11. .-..3'CO:',.1.3--.Cti...)::ptC7-..7-if:Lt:_;t 1-.,,,, following -eight papers: "EsPf cially -for- sp,,cial -,..iu.::itors; `,--nse..--o-f. -

June 2001 ;Login: 3 Letters to the Editor

motd by Rob Kolstad Excellence Dr. Rob Kolstad has long On Debbie Scherrer’s recommendation from several years ago, I took my parents to served as editor of view the “World Famous” Lippazzaner Stallions last night at their Pueblo, Colorado ;login:. He is also head coach of the USENIX- tour stop. I had no expectations, having ridden horses a few times and even enjoyed sponsored USA Com- seeing well-made photographs of them. Most people’s favorite part is the “airs”: the puting Olympiad. jumping and rearing up (as in “Hi ho Silver, away!” from the Lone Ranger intro). Those horses were definitely coordinated, ever moreso considering they weigh in four digits. As horses go, they were excellent. <[email protected]> We hear a lot about excellence in our industry. You can hardly turn through a trade magazine without someone extolling the excellence of their product, their service, their record, or their management style. Entire books discuss excellence. I think it’s a desir- able quality. Of course, excellence is decidedly not perfection. Perfection is yet another step and is seen only rarely in our world. There are those who felt Michael Jordan’s basketball skills bordered on perfection; others admire Tiger Woods’s golfing performances. Let’s stick to excellence for this discussion – perfection is just really challenging in general. Personally, I like excellence. I thought Dan Klein’s talk from several years ago about the parallels between Blazon (a language for describing coats-of-arms) and Postscript was excellent. I think some of Mark Burgess’s thoughts on system administration are excel- lent, even though I think I disagree with a few of the others. -

Steps to Install Horde Webmail Server on Ubuntu

Steps to Install Horde Webmail Server on Ubuntu Version: 1.3 Date: 21 Jan 2012 Presented By: Document conventions: Pink box represents commands to use. command to use Pale Green box shows the configuration files. #This is our configuration, replace this value with your environment Gray box represents the standard output on terminal. Standard Output on terminal Page | 3 Prepared by: SwapnInfoways IT Solution Table of Content 1. Introduction to Ubuntu Linux& Horde Webmail 2. Prerequisites / Checklist 3. Installation Steps. 4. Configure and run horde webmail. 5. Testing Horde webmail. Page | 4 Prepared by: SwapnInfoways IT Solution 1. Introduction to Ubuntu Linux & Horde Webmail 1.1 Ubuntu Linux :Ubuntu is a computer operating system based on the Debian Linux distribution and distributed as free and open source software. It is named after the Southern African philosophy of Ubuntu ("humanity towards others"). Ubuntu is designed primarily for use on personal computers, although a server edition also exists. Ubuntu is sponsored by the UK-based company Canonical Ltd., owned by South African entrepreneur Mark Shuttleworth. Canonical generates revenue by selling technical support and services related to Ubuntu, while the operating system itself is entirely free of charge. The Ubuntu project is entirely committed to the principles of free software development; people are encouraged to use free software, improve it, and pass it on. Ubuntu is composed of many software packages, the vast majority of which are distributed under a free software license. The only exceptions are some proprietary hardware drivers. The main license used is the GNU General Public License (GNU GPL) which, along with the GNU Lesser General Public License (GNU LGPL), explicitly declares that users are free to run, copy, distribute, study, change, develop and improve the software. -

Amélioration De La Sécurité Par La Conception Des Logiciels Web Theodoor Scholte

Amélioration de la sécurité par la conception des logiciels web Theodoor Scholte To cite this version: Theodoor Scholte. Amélioration de la sécurité par la conception des logiciels web. Web. Télécom ParisTech, 2012. Français. NNT : 2012ENST0024. tel-01225776 HAL Id: tel-01225776 https://pastel.archives-ouvertes.fr/tel-01225776 Submitted on 6 Nov 2015 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. 2012-ENST-024 EDITE - ED 130 Doctorat ParisTech T H È S E pour obtenir le grade de docteur délivré par TELECOM ParisTech Spécialité « Réseaux et Sécurité » présentée et soutenue publiquement par Theodoor SCHOLTE le 11/5/2012 Securing Web Applications by Design Directeur de thèse : Prof. Engin KIRDA Jury Thorsten HOLZ , Professeur, Ruhr-Universit at Bochum, Germany Rapporteur Martin JOHNS , Senior Researcher, SAP AG, Germany Rapporteur Davide BALZAROTTI , Professeur, Institut EURECOM, France Examinateur Angelos KEROMYTIS , Professeur, Columbia University, USA Examinateur Thorsten STRUFE , Professeur, Technische Universit at Darmstadt, Germany Examinateur TELECOM ParisTech école de l’Institut Télécom - membre de ParisTech Acknowledgements This dissertation would not have been possible without the support of many people. First, I would like to thank my parents. They have thaught and are teaching me every day a lot. -

An Empirical Study of the Effects of Open Source

AN EMPIRICAL STUDY OF THE EFFECTS OF OPEN SOURCE ADOPTION ON SOFTWARE DEVELOPMENT ECONOMICS by Di Wu A thesis submitted to the Faculty of Graduate Studies and Research in partial fulfillment of the requirements for the degree of Master of Applied Science in Technology Innovation Management Department of Systems and Computer Engineering, Carleton University Ottawa, Canada, K1S 5B6 March 2007 © Copyright 2007 Di Wu Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. Library and Bibliotheque et Archives Canada Archives Canada Published Heritage Direction du Branch Patrimoine de I'edition 395 Wellington Street 395, rue Wellington Ottawa ON K1A 0N4 Ottawa ON K1A 0N4 Canada Canada Your file Votre reference ISBN: 978-0-494-27005-9 Our file Notre reference ISBN: 978-0-494-27005-9 NOTICE: AVIS: The author has granted a non L'auteur a accorde une licence non exclusive exclusive license allowing Library permettant a la Bibliotheque et Archives and Archives Canada to reproduce,Canada de reproduire, publier, archiver, publish, archive, preserve, conserve,sauvegarder, conserver, transmettre au public communicate to the public by par telecommunication ou par I'lnternet, preter, telecommunication or on the Internet,distribuer et vendre des theses partout dans loan, distribute and sell theses le monde, a des fins commerciales ou autres, worldwide, for commercial or non sur support microforme, papier, electronique commercial purposes, in microform,et/ou autres formats. paper, electronic and/or any other formats. The author retains copyright L'auteur conserve la propriete du droit d'auteur ownership and moral rights in et des droits moraux qui protege cette these.