June 2001 ;Login: 3 Letters to the Editor

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

DETECTING BOTS in INTERNET CHAT by SRITI KUMAR Under The

DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR (Under the Direction of Kang Li) ABSTRACT Internet chat is a real-time communication tool that allows on-line users to communicate via text in virtual spaces, called chat rooms or channels. The abuse of Internet chat by bots also known as chat bots/chatterbots poses a serious threat to the users and quality of service. Chat bots target popular chat networks to distribute spam and malware. We first collect data from a large commercial chat network and then conduct a series of analysis. While analyzing the data, different patterns were detected which represented different bot behaviors. Based on the analysis on the dataset, we proposed a classification system with three main components (1) content- based classifiers (2) machine learning classifier (3) communicator. All three components of the system complement each other in detecting bots. Evaluation of the system has shown some measured success in detecting bots in both log-based dataset and in live chat rooms. INDEX WORDS: Yahoo! Chat room, Chat Bots, ChatterBots, SPAM, YMSG DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR B.E., Visveswariah Technological University, India, 2006 A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree MASTER OF SCIENCE ATHENS, GEORGIA 2010 © 2010 Sriti Kumar All Rights Reserved DETECTING BOTS IN INTERNET CHAT by SRITI KUMAR Major Professor: Kang Li Committee: Lakshmish Ramaxwamy Prashant Doshi Electronic Version Approved: Maureen Grasso Dean of the Graduate School The University of Georgia December 2010 DEDICATION I would like to dedicate my work to my mother to be patient with me, my father for never questioning me, my brother for his constant guidance and above all for their unconditional love. -

Volume 108, Issue 12

BObcaTS TEAM UP BU STUDENT WINS WITH CHRISTMAS MCIE AwaRD pg. 2 CHEER pg. 3 VOL. 108 | ISSUE NO.12| NOVEMBER 28TH, 2017 ...caFFEINE... SINCE 1910 LONG NIGHT AG A INST PROCR A STIN A TION ANOTHER SUCCESS Students cracking down and getting those assignments out of the way. Photo Credit: Patrick Gohl. Patrick Gohl, Reporter am sure the word has spread Robbins Library on Wednesday in the curriculum area. If you of the whole event. I will now tinate. I around campus already, ex- the 22nd of November. were a little late for your sched- remedy this grievous error and Having made it this far in ams are just around the cor- The event was designed to uled session you were likely to make mention of the free food. the semester, one could be led ner. ‘Tis the season to toss your combat study procrastination, get bumped back as there were Healthy snacks such as apples to believe, quite incorrectly, amassed library of class notes in and encourage students to be- many students looking for help and bananas were on offer from that the home stretch is more of frustration, to scream at your gin their exam preparation. It all to gain that extra edge on their the get go along with tea and the same. This falsehood might computer screen like a mad- started at 7:00PM and ran until assignments and exams. coffee. Those that managed be an alluring belief to grasp man, and soak your pillow with 3:00AM the following morn- In addition to the academic to last until midnight were re- hold of when the importance to tears of desperation. -

Attacker Chatbots for Randomised and Interactive Security Labs, Using Secgen and Ovirt

Hackerbot: Attacker Chatbots for Randomised and Interactive Security Labs, Using SecGen and oVirt Z. Cliffe Schreuders, Thomas Shaw, Aimée Mac Muireadhaigh, Paul Staniforth, Leeds Beckett University Abstract challenges, rewarding correct solutions with flags. We deployed an oVirt infrastructure to host the VMs, and Capture the flag (CTF) has been applied with success in leveraged the SecGen framework [6] to generate lab cybersecurity education, and works particularly well sheets, provision VMs, and provide randomisation when learning offensive techniques. However, between students. defensive security and incident response do not always naturally fit the existing approaches to CTF. We present 2. Related Literature Hackerbot, a unique approach for teaching computer Capture the flag (CTF) is a type of cyber security game security: students interact with a malicious attacker which involves collecting flags by solving security chatbot, who challenges them to complete a variety of challenges. CTF events give professionals, students, security tasks, including defensive and investigatory and enthusiasts an opportunity to test their security challenges. Challenges are randomised using SecGen, skills in competition. CTFs emerged out of the and deployed onto an oVirt infrastructure. DEFCON hacker conference [7] and remain common Evaluation data included system performance, mixed activities at cybersecurity conferences and online [8]. methods questionnaires (including the Instructional Some events target students with the goal of Materials Motivation Survey (IMMS) and the System encouraging interest in the field: for example, PicoCTF Usability Scale (SUS)), and group interviews/focus is an annual high school competition [9], and CSAW groups. Results were encouraging, finding the approach CTF is an annual competition for students in Higher convenient, engaging, fun, and interactive; while Education (HE) [10]. -

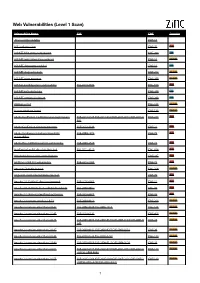

Web Vulnerabilities (Level 1 Scan)

Web Vulnerabilities (Level 1 Scan) Vulnerability Name CVE CWE Severity .htaccess file readable CWE-16 ASP code injection CWE-95 High ASP.NET MVC version disclosure CWE-200 Low ASP.NET application trace enabled CWE-16 Medium ASP.NET debugging enabled CWE-16 Low ASP.NET diagnostic page CWE-200 Medium ASP.NET error message CWE-200 Medium ASP.NET padding oracle vulnerability CVE-2010-3332 CWE-310 High ASP.NET path disclosure CWE-200 Low ASP.NET version disclosure CWE-200 Low AWStats script CWE-538 Medium Access database found CWE-538 Medium Adobe ColdFusion 9 administrative login bypass CVE-2013-0625 CVE-2013-0629CVE-2013-0631 CVE-2013-0 CWE-287 High 632 Adobe ColdFusion directory traversal CVE-2013-3336 CWE-22 High Adobe Coldfusion 8 multiple linked XSS CVE-2009-1872 CWE-79 High vulnerabilies Adobe Flex 3 DOM-based XSS vulnerability CVE-2008-2640 CWE-79 High AjaxControlToolkit directory traversal CVE-2015-4670 CWE-434 High Akeeba backup access control bypass CWE-287 High AmCharts SWF XSS vulnerability CVE-2012-1303 CWE-79 High Amazon S3 public bucket CWE-264 Medium AngularJS client-side template injection CWE-79 High Apache 2.0.39 Win32 directory traversal CVE-2002-0661 CWE-22 High Apache 2.0.43 Win32 file reading vulnerability CVE-2003-0017 CWE-20 High Apache 2.2.14 mod_isapi Dangling Pointer CVE-2010-0425 CWE-20 High Apache 2.x version equal to 2.0.51 CVE-2004-0811 CWE-264 Medium Apache 2.x version older than 2.0.43 CVE-2002-0840 CVE-2002-1156 CWE-538 Medium Apache 2.x version older than 2.0.45 CVE-2003-0132 CWE-400 Medium Apache 2.x version -

Tomenet-Guide.Pdf

.==========================================================================+−−. | TomeNET Guide | +==========================================================================+− | Latest update: 17. September 2021 − written by C. Blue ([email protected]) | | for TomeNET version v4.7.4b − official websites are: : | https://www.tomenet.eu/ (official main site, formerly www.tomenet.net) | https://muuttuja.org/tomenet/ (Mikael’s TomeNET site) | Runes & Runemastery sections by Kurzel ([email protected]) | | You should always keep this guide up to date: Either go to www.tomenet.eu | to obtain the latest copy or simply run the TomeNET−Updater.exe in your | TomeNET installation folder (desktop shortcut should also be available) | to update it. | | If your text editor cannot display the guide properly (needs fixed−width | font like for example Courier), simply open it in any web browser instead. +−−− | Welcome to this guide! | Although I’m trying, I give no guarantee that this guide | a) contains really every detail/issue about TomeNET and | b) is all the time 100% accurate on every occasion. | Don’t blame me if something differs or is missing; it shouldn’t though. | | If you have any suggestions about the guide or the game, please use the | /rfe command in the game or write to the official forum on www.tomenet.eu. : \ Contents −−−−−−−− (0) Quickstart (If you don’t like to read much :) (0.1) Start & play, character validation, character timeout (0.1a) Colours and colour blindness (0.1b) Photosensitivity / Epilepsy issues (0.2) Command reference -

Multi-Phase IRC Botnet and Botnet Behavior Detection Model

International Journal of Computer Applications (0975 – 8887) Volume 66– No.15, March 2013 Multi-phase IRC Botnet and Botnet Behavior Detection Model Aymen Hasan Rashid Al Awadi Bahari Belaton Information Technology Research Development School of Computer Sciences Universiti Sains Center, University of Kufa, Najaf, Iraq Malaysia 11800 USM, Penang, Malaysia School of Computer Sciences Universiti Sains Malaysia 11800 USM, Penang, Malaysia ABSTRACT schools, banks and any of governmental institutes making use of system vulnerabilities and software bugs to separate and Botnets are considered one of the most dangerous and serious execute a lot of malicious activities. Recently, bots can be the security threats facing the networks and the Internet. major one of the major sources for distributing or performing Comparing with the other security threats, botnet members many kinds of scanning related attacks (Distributed Denial-of- have the ability to be directed and controlled via C&C Service DoS) [1], spamming [2], click fraud [3], identity messages from the botmaster over common protocols such as fraud, sniffing traffic and key logging [4] etc. The nature of IRC and HTTP, or even over covert and unknown the bots activities is to respond to the botmaster's control applications. As for IRC botnets, general security instances command simultaneously. This responding will enable the like firewalls and IDSes do not provide by themselves a viable botmaster to get the full benefit from the infected hosts to solution to prevent them completely. These devices could not attack another target like in DDoS [5]. From what stated differentiate well between the legitimate and malicious traffic earlier, the botnet can be defined as a group of connected of the IRC protocol. -

IRC:N Käyttö Yritysten Viestinnässä

Maarit Klami IRC:n käyttö yritysten viestinnässä Metropolia Ammattikorkeakoulu Insinööri (AMK) Tietotekniikan koulutusohjelma Insinöörityö 3.5.2012 Tiivistelmä Tekijä Maarit Klami Otsikko IRC:n käyttö yritysten viestinnässä Sivumäärä 34 sivua + 2 liitettä Aika 3.5.2012 Tutkinto insinööri (AMK) Koulutusohjelma tietotekniikka Suuntautumisvaihtoehto ohjelmistotekniikka Ohjaaja lehtori Peter Hjort Tässä työssä perehdyttiin IRC:n käyttömahdollisuuksiin yritysten sisäisessä ja ulkoisessa viestinnässä. Työssä verrattiin IRC:tä muihin pikaviestimiin sekä sosiaalisen median tarjoamiin viestintä- ja markkinointimahdollisuuksiin. Eräs tarkastelluista seikoista on yrityksen koon vaikutus siihen, kuinka mittava operaatio IRC:n käyttöönotto yrityksessä on. Työssä on esitetty myös mahdollisuuksia muiden pikaviestimien integroimiseksi IRC:hen. Työ on tehty mielenkiinnosta käyttää IRC:tä viestinnän apuna yrityksissä. Tietoturva on keskeisessä roolissa yritysten toiminnassa, josta johtuen asia on huomioitava myös viestintäjärjestelmien käytöönotossa ja päivittäisessä käytössä. Työssä tietoturvaa on käsitelty sekä tekniseltä että inhimilliseltä kannalta. Työssä yhtenä keskeisenä osana on tutustuttu IRC-bottien hyödyntämiseen yritysten toiminnassa. Osana työtä tehtiin Eggdropilla IRC-botti, jolle perusominaisuuksien laajentamiseksi ohjelmoitiin TCL-ohjelmointikieltä käyttäen halutun toiminnalisuuden toteuttava skripti. Työssä esitellään botin käyttöönoton vaatimat toimenpiteet sekä skriptin totetuttaminen. Lisäksi työssä on esitelty erilaisia bottien toiminnallisuuksia -

Eggdrop IRC-Botti Pelaajayhteisölle

Eggdrop IRC -botti pelaajayhteisölle Heikki Hyvönen Opinnäytetyö ___. ___. ______ ________________________________ Ammattikorkeakoulututkinto SAVONIA-AMMATTIKORKEAKOULU OPINNÄYTETYÖ Tiivistelmä Koulutusala Luonnontieteiden ala Koulutusohjelma Tietojenkäsittelyn koulutusohjelma Työn tekijä(t) Heikki Hyvönen Työn nimi Eggdrop IRC -botti pelaajayhteisölle Päiväys 15.5.2012 Sivumäärä/Liitteet 29 Ohjaaja(t) Marja -Riitta Kivi Toimeksiantaja/Yhteistyökumppani(t) Pelaajayhte isö Tiivistelmä Tässä opinnäytetyössä luodaan IRC-botti pelaajayhteisön käyttöön. Botin tarkoitus on ylläpitää kuria ja järjestystä pelaajayhteisön IRC-kanavalla. IRC (Internet Relay Chat ) on suomalaisen Jarkko Oikarisen kehittämä pikaviestinpalvelu, jossa ihmiset ympäri maailman voivat kokoontua keskustelemaan itse perustamilleen kanaville. IRC:n suosio on laskenut melkoisesti sen vuoden 2004 saavuttamista huippulukemista. Nykyään suosituimmalla IRC-verkolla on käyttäjiä enää noin 80 000, kun vuonna 2004 niitä oli vielä yli 200 000. Eggdrop on suosituin IRC-botti ja sitä käytetään tässäkin opinnäytetyössä. Eggdropin parhaat ominaisuudet ovat partyline-chat sekä TCL-scripteillä luodut laajennukset. Tässä työssä kerrotaan mitä tarvitaan, jotta saadaan aikaan toimiva IRC-botti. Avainsanat Irc, Eggdrop SAVONIA UNIVERSITY OF APPLIED SCIENCES THESIS Abstract Field of Study Natural Sciences Degree Programme Degree Programme in Computer Science Author(s) Heikki Hyvönen Title of Thesis Eggdrop IRC bot to a gaming community Date 15.5.2012 Pages/Appendices 29 Supervisor(s) Marja-Riitta Kivi Client Organisation /Partners Gaming community Abstract The goal of this thesis was to create an IRC bot for a gaming community. The bot is used to main- tain law and order in the community's IRC channel. IRC (Internet Relay Chat ), developed by Finnish Jarkko Oikarinen, is a real time text messaging protocol where people all around the world can discuss together on channels created by them- selves. -

Kabbalah, Magic & the Great Work of Self Transformation

KABBALAH, MAGIC AHD THE GREAT WORK Of SELf-TRAHSfORMATIOH A COMPL€T€ COURS€ LYAM THOMAS CHRISTOPHER Llewellyn Publications Woodbury, Minnesota Contents Acknowledgments Vl1 one Though Only a Few Will Rise 1 two The First Steps 15 three The Secret Lineage 35 four Neophyte 57 five That Darkly Splendid World 89 SIX The Mind Born of Matter 129 seven The Liquid Intelligence 175 eight Fuel for the Fire 227 ntne The Portal 267 ten The Work of the Adept 315 Appendix A: The Consecration ofthe Adeptus Wand 331 Appendix B: Suggested Forms ofExercise 345 Endnotes 353 Works Cited 359 Index 363 Acknowledgments The first challenge to appear before the new student of magic is the overwhehning amount of published material from which he must prepare a road map of self-initiation. Without guidance, this is usually impossible. Therefore, lowe my biggest thanks to Peter and Laura Yorke of Ra Horakhty Temple, who provided my first exposure to self-initiation techniques in the Golden Dawn. Their years of expe rience with the Golden Dawn material yielded a structure of carefully selected ex ercises, which their students still use today to bring about a gradual transformation. WIthout such well-prescribed use of the Golden Dawn's techniques, it would have been difficult to make progress in its grade system. The basic structure of the course in this book is built on a foundation of the Golden Dawn's elemental grade system as my teachers passed it on. In particular, it develops further their choice to use the color correspondences of the Four Worlds, a piece of the original Golden Dawn system that very few occultists have recognized as an ini tiatory tool. -

Open Source Katalog 2009 – Seite 1

Optaros Open Source Katalog 2009 – Seite 1 OPEN SOURCE KATALOG 2009 350 Produkte/Projekte für den Unternehmenseinsatz OPTAROS WHITE PAPER Applikationsentwicklung Assembly Portal BI Komponenten Frameworks Rules Engine SOA Web Services Programmiersprachen ECM Entwicklungs- und Testumgebungen Open Source VoIP CRM Frameworks eCommerce BI Infrastrukturlösungen Programmiersprachen ETL Integration Office-Anwendungen Geschäftsanwendungen ERP Sicherheit CMS Knowledge Management DMS ESB © Copyright 2008. Optaros Open Source Katalog 2009 - Seite 2 Optaros Referenz-Projekte als Beispiele für Open Source-Einsatz im Unternehmen Kunde Projektbeschreibung Technologien Intranet-Plattform zur Automatisierung der •JBossAS Geschäftsprozesse rund um „Information Systems •JBossSeam Compliance“ •jQuery Integrationsplattform und –architektur NesOA als • Mule Enterprise Bindeglied zwischen Vertriebs-/Service-Kanälen und Service Bus den Waren- und Logistiksystemen •JBossMiddleware stack •JBossMessaging CRM-Anwendung mit Fokus auf Sales-Force- •SugarCRM Automation Online-Community für die Entwickler rund um die •AlfrescoECM Endeca-Search-Software; breit angelegtes •Liferay Enterprise Portal mit Selbstbedienungs-, •Wordpress Kommunikations- und Diskussions-Funktionalitäten Swisscom Labs: Online-Plattform für die •AlfrescoWCMS Bereitstellung von zukünftigen Produkten (Beta), •Spring, JSF zwecks Markt- und Early-Adopter-Feedback •Nagios eGovernment-Plattform zur Speicherung und •AlfrescoECM Zurverfügungstellung von Verwaltungs- • Spring, Hibernate Dokumenten; integriert -

Introducción a Linux Equivalencias Windows En Linux Ivalencias

No has iniciado sesión Discusión Contribuciones Crear una cuenta Acceder Página discusión Leer Editar Ver historial Buscar Introducción a Linux Equivalencias Windows en Linux Portada < Introducción a Linux Categorías de libros Equivalencias Windows en GNU/Linux es una lista de equivalencias, reemplazos y software Cam bios recientes Libro aleatorio análogo a Windows en GNU/Linux y viceversa. Ayuda Contenido [ocultar] Donaciones 1 Algunas diferencias entre los programas para Windows y GNU/Linux Comunidad 2 Redes y Conectividad Café 3 Trabajando con archivos Portal de la comunidad 4 Software de escritorio Subproyectos 5 Multimedia Recetario 5.1 Audio y reproductores de CD Wikichicos 5.2 Gráficos 5.3 Video y otros Imprimir/exportar 6 Ofimática/negocios Crear un libro 7 Juegos Descargar como PDF Versión para im primir 8 Programación y Desarrollo 9 Software para Servidores Herramientas 10 Científicos y Prog s Especiales 11 Otros Cambios relacionados 12 Enlaces externos Subir archivo 12.1 Notas Páginas especiales Enlace permanente Información de la Algunas diferencias entre los programas para Windows y y página Enlace corto GNU/Linux [ editar ] Citar esta página La mayoría de los programas de Windows son hechos con el principio de "Todo en uno" (cada Idiomas desarrollador agrega todo a su producto). De la misma forma, a este principio le llaman el Añadir enlaces "Estilo-Windows". Redes y Conectividad [ editar ] Descripción del programa, Windows GNU/Linux tareas ejecutadas Firefox (Iceweasel) Opera [NL] Internet Explorer Konqueror Netscape / -

Botnets, Zombies, and Irc Security

Botnets 1 BOTNETS, ZOMBIES, AND IRC SECURITY Investigating Botnets, Zombies, and IRC Security Seth Thigpen East Carolina University Botnets 2 Abstract The Internet has many aspects that make it ideal for communication and commerce. It makes selling products and services possible without the need for the consumer to set foot outside his door. It allows people from opposite ends of the earth to collaborate on research, product development, and casual conversation. Internet relay chat (IRC) has made it possible for ordinary people to meet and exchange ideas. It also, however, continues to aid in the spread of malicious activity through botnets, zombies, and Trojans. Hackers have used IRC to engage in identity theft, sending spam, and controlling compromised computers. Through the use of carefully engineered scripts and programs, hackers can use IRC as a centralized location to launch DDoS attacks and infect computers with robots to effectively take advantage of unsuspecting targets. Hackers are using zombie armies for their personal gain. One can even purchase these armies via the Internet black market. Thwarting these attacks and promoting security awareness begins with understanding exactly what botnets and zombies are and how to tighten security in IRC clients. Botnets 3 Investigating Botnets, Zombies, and IRC Security Introduction The Internet has become a vast, complex conduit of information exchange. Many different tools exist that enable Internet users to communicate effectively and efficiently. Some of these tools have been developed in such a way that allows hackers with malicious intent to take advantage of other Internet users. Hackers have continued to create tools to aid them in their endeavors.