3. Quantum Gases

Recommended publications

Part 5: the Bose-Einstein Distribution

PHYS393 – Statistical Physics. Part 5: The Bose-Einstein Distribution. Distinguishable and indistinguishable particles: In the previous parts of this course, we derived the Boltzmann distribution, which described how the number of distinguishable particles in different energy states varied with the energy of those states, at different temperatures: $n_j = \frac{N}{Z} e^{-\varepsilon_j / kT}$ (1). However, in systems consisting of collections of identical fermions or identical bosons, the wave function of the system has to be either antisymmetric (for fermions) or symmetric (for bosons) under interchange of any two particles. With the allowed wave functions, it is no longer possible to identify a particular particle with a particular energy state. Instead, all the particles are "shared" between the occupied states. The particles are said to be indistinguishable. Indistinguishable fermions: In the case of indistinguishable fermions, the wave function for the overall system must be antisymmetric under the interchange of any two particles. One consequence of this is the Pauli exclusion principle: any given state can be occupied by at most one particle (though we can't say which particle!). For example, for a system of two fermions, a possible wave function might be: $\psi(x_1, x_2) = \frac{1}{\sqrt{2}} \left[ \psi_A(x_1)\psi_B(x_2) - \psi_A(x_2)\psi_B(x_1) \right]$ (2). Here, $x_1$ and $x_2$ are the coordinates of the two particles, and A and B are the two occupied states. If we try to put the two particles into the same state, then the wave function vanishes. Finding the distribution with the maximum number of microstates for a system of identical fermions leads to the Fermi-Dirac distribution: $n_i = g_i / \ldots$
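The two formulas quoted in this excerpt can be checked numerically. The following is a minimal Python sketch, not code from the lecture notes: the function names `boltzmann_occupations` and `antisymmetric_psi`, and the sample single-particle states used with them, are hypothetical and chosen only for illustration. It evaluates the Boltzmann occupation numbers of equation (1) and the two-fermion wave function of equation (2).

```python
import math

def boltzmann_occupations(energies, n_total, kT):
    """Occupation numbers n_j = (N / Z) * exp(-eps_j / kT), as in equation (1)."""
    weights = [math.exp(-eps / kT) for eps in energies]
    z = sum(weights)  # single-particle partition function Z
    return [n_total * w / z for w in weights]

def antisymmetric_psi(psi_a, psi_b, x1, x2):
    """Two-fermion wave function of equation (2) for single-particle states A and B."""
    return (psi_a(x1) * psi_b(x2) - psi_a(x2) * psi_b(x1)) / math.sqrt(2)

if __name__ == "__main__":
    # Illustrative (assumed) energy levels in units where kT = 1.
    print(boltzmann_occupations([0.0, 1.0, 2.0], n_total=100, kT=1.0))
    # Putting both particles in the same state gives a vanishing wave function.
    print(antisymmetric_psi(math.sin, math.sin, 0.3, 0.7))
```

Passing the same function for both states illustrates the Pauli exclusion principle described above: the two terms cancel for every choice of coordinates.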

The Debye Model of Lattice Heat Capacity

The Debye Model of Lattice Heat Capacity. The Debye model of lattice heat capacity is more involved than the relatively simple Einstein model, but it does keep the same basic idea: the internal energy depends on the energy per phonon ($\varepsilon = \hbar\Omega$) times the average number of phonons (Planck distribution: $n_{avg} = 1/[e^{\hbar\Omega/kT} - 1]$) times the number of modes. It is in the number of modes that the difference between the two models occurs. In the Einstein model, we simply assumed that each mode was the same (same frequency, $\Omega$), and that the number of modes was equal to the number of atoms in the lattice. In the Debye model, we assume that each mode has its own K (that is, has its own $\lambda$). Since $\Omega$ is related to K (by the dispersion relation), each mode has a different $\Omega$. 1. Number of modes: In the Debye model we assume that the normal modes consist of STANDING WAVES. Travelling waves would carry energy through the material; since the modes are to hold heat energy that does not move, they need to be standing waves. Standing waves arise when waves travel back and forth and interfere with each other. Recall that Newton's Second Law gave us atoms that oscillate [here x = sa, where s is an integer and a is the lattice spacing]: $u_s = u_o e^{i\Omega t} e^{iKsa}$; and if we use the form $\sin(Ksa)$ for $e^{iKsa}$ [both indicate oscillations]: $u_s = u_o e^{i\Omega t} \sin(Ksa)$. We now apply the boundary conditions on our solution: to have standing waves both ends of the wave must be tied down, that is, we must have $u_0 = 0$ and $u_N = 0$.
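As a rough illustration of the two ingredients named in this excerpt, the sketch below evaluates the Planck distribution and lists the wavevectors compatible with the fixed-end boundary conditions. The excerpt stops before solving those conditions, so the step $\sin(KNa) = 0 \Rightarrow K = n\pi/(Na)$ is filled in here as an assumption about where the argument goes; the function names and the sample values of N and a are hypothetical.

```python
import math

def planck_occupation(hbar_omega, kT):
    """Average phonon number n_avg = 1 / (exp(hbar*Omega / kT) - 1)."""
    return 1.0 / math.expm1(hbar_omega / kT)

def standing_wave_wavevectors(n_atoms, spacing):
    """Wavevectors K with sin(K * N * a) = 0, i.e. K = n*pi / (N*a).

    n = 0 is skipped because it gives u_s = 0 everywhere; n runs up to N - 1.
    """
    return [n * math.pi / (n_atoms * spacing) for n in range(1, n_atoms)]

if __name__ == "__main__":
    # Assumed sample values: 5 lattice spacings of length 1 (arbitrary units).
    for k in standing_wave_wavevectors(5, 1.0):
        print(f"K = {k:.4f}, u_N factor sin(K*N*a) = {math.sin(k * 5 * 1.0):.1e}")
    print(planck_occupation(hbar_omega=1.0, kT=2.0))
```

The condition $u_0 = 0$ is satisfied automatically by the $\sin(Ksa)$ form, so only $u_N = 0$ restricts K.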

Competition Between Paramagnetism and Diamagnetism in Charged

Competition between paramagnetism and diamagnetism in charged Fermi gases. Xiaoling Jian, Jihong Qin, and Qiang Gu*, Department of Physics, University of Science and Technology Beijing, Beijing 100083, China (Dated: November 10, 2018). The charged Fermi gas with a small Lande-factor g is expected to be diamagnetic, while that with a larger g could be paramagnetic. We calculate the critical value of the g-factor which separates the dia- and para-magnetic regions. In the weak-field limit, $g_c$ has the same value both at high and low temperatures, $g_c = 1/\sqrt{12}$. Nevertheless, $g_c$ increases as the temperature decreases in finite magnetic fields. We also compare the $g_c$ value of Fermi gases with those of Boltzmann and Bose gases, supposing the particle has three Zeeman levels $\sigma = \pm 1, 0$, and find that $g_c$ of Bose and Fermi gases is larger and smaller than that of Boltzmann gases, respectively. PACS numbers: 05.30.Fk, 51.60.+a, 75.10.Lp, 75.20.-g. I. INTRODUCTION: Magnetism of electron gases has been considerably studied in condensed matter physics. In magnetic field, the magnetization of a free electron gas consists of two independent parts. The spin magnetic moment of electrons results in the paramagnetic part (the Pauli para… […] … mK and 10 µK, respectively. The ions can be regarded as charged Bose or Fermi gases. Once the quantum gas has both the spin and charge degrees of freedom, there arises the competition between paramagnetism and diamagnetism, as in electrons. Different from electrons, the g-factor for different magnetic ions is diverse, ranging from 0 to 2.

Lecture 3: Fermi-Liquid Theory 1 General Considerations Concerning Condensed Matter

Phys 769 Selected Topics in Condensed Matter Physics, Summer 2010. Lecture 3: Fermi-liquid theory. Lecturer: Anthony J. Leggett. TA: Bill Coish. 1 General considerations concerning condensed matter (NB: Ultracold atomic gases need separate discussion). Assume for simplicity a single atomic species. Then we have a collection of N (typically $\sim 10^{23}$) nuclei (denoted $\alpha, \beta, \ldots$) and (usually) ZN electrons (denoted $i, j, \ldots$) interacting via a Hamiltonian $\hat{H}$. To a first approximation, $\hat{H}$ is the nonrelativistic limit of the full Dirac Hamiltonian, namely $\hat{H}_{NR} = -\frac{\hbar^2}{2m} \sum_i \nabla_i^2 - \frac{\hbar^2}{2M} \sum_\alpha \nabla_\alpha^2 + \frac{1}{2} \frac{e^2}{4\pi\epsilon_0} \sum_{ij} \frac{1}{|r_i - r_j|} + \frac{1}{2} \frac{(Ze)^2}{4\pi\epsilon_0} \sum_{\alpha\beta} \frac{1}{|R_\alpha - R_\beta|} - \frac{1}{2} \frac{Ze^2}{4\pi\epsilon_0} \sum_{i\alpha} \frac{1}{|r_i - R_\alpha|}$ (1). For an isolated atom, the relevant energy scale is the Rydberg (R) – $Z^2 R$. In addition, there are some relativistic effects which may need to be considered. Most important is the spin-orbit interaction: $\hat{H}_{SO} = -\frac{\mu_B}{c^2} \sum_i \sigma_i \cdot (v_i \times \nabla V(r_i))$ (2) ($\mu_B$ is the Bohr magneton, $v_i$ is the velocity, and $V(r_i)$ is the electrostatic potential at $r_i$ as obtained from $\hat{H}_{NR}$). In an isolated atom this term is $o(\alpha^2 R)$ for H and $o(Z^3 \alpha^2 R)$ for a heavy atom (inner-shell electrons) (produces fine structure). The (electron-electron) magnetic dipole interaction is of the same order as $\hat{H}_{SO}$. The (electron-nucleus) hyperfine interaction is down relative to $\hat{H}_{SO}$ by a factor $\mu_n/\mu_B \sim 10^{-3}$, and the nuclear dipole-dipole interaction by a factor $(\mu_n/\mu_B)^2 \sim 10^{-6}$.

Solid State Physics 2 Lecture 5: Electron Liquid

Physics 7450: Solid State Physics 2. Lecture 5: Electron liquid. Leo Radzihovsky (Dated: 10 March, 2015). Abstract: In these lectures, we will study itinerant electron liquid, namely metals. We will begin by reviewing properties of noninteracting electron gas, developing its Green's functions, analyzing its thermodynamics, Pauli paramagnetism and Landau diamagnetism. We will recall how its thermodynamics is qualitatively distinct from that of Boltzmann and Bose gases. As emphasized by Sommerfeld (1928), these qualitative differences are due to the Pauli principle of electrons' fermionic statistics. We will then include effects of Coulomb interaction, treating it in Hartree and Hartree-Fock approximation, computing the ground state energy and screening. We will then study itinerant Stoner ferromagnetism as well as various response functions, such as compressibility and conductivity, and screening (Thomas-Fermi, Debye). We will then discuss Landau Fermi-liquid theory, which will allow us to understand why, despite strong electron-electron interactions, much of the phenomenology of a Fermi gas nevertheless extends to a Fermi liquid. We will conclude with discussion of electrons on the lattice, treated within the Hubbard and t-J models, and will study transition to a Mott insulator and magnetism. I. INTRODUCTION. A. Outline: electron gas ground state and excitations; thermodynamics; Pauli paramagnetism; Landau diamagnetism; Hartree-Fock theory of interactions: ground state energy; Stoner ferromagnetic instability; response functions; Landau Fermi-liquid theory; electrons on the lattice: Hubbard and t-J models; Mott insulators and magnetism. B. Background: In these lectures, we will study itinerant electron liquid, namely metals. In principle a fully quantum mechanical, strongly Coulomb-interacting description is required.

On Thermality of CFT Eigenstates

On thermality of CFT eigenstates. Pallab Basu (1), Diptarka Das (2), Shouvik Datta (3) and Sridip Pal (2). (1) International Center for Theoretical Sciences-TIFR, Shivakote, Hesaraghatta Hobli, Bengaluru North 560089, India. (2) Department of Physics, University of California San Diego, La Jolla, CA 92093, USA. (3) Institut für Theoretische Physik, Eidgenössische Technische Hochschule Zürich, 8093 Zürich, Switzerland. arXiv:1705.03001v2 [hep-th], 26 Aug 2017. Abstract: The Eigenstate Thermalization Hypothesis (ETH) provides a way to understand how an isolated quantum mechanical system can be approximated by a thermal density matrix. We find a class of operators in (1+1)-d conformal field theories, consisting of quasi-primaries of the identity module, which satisfy the hypothesis only at the leading order in large central charge. In the context of subsystem ETH, this plays a role in the deviation of the reduced density matrices, corresponding to a finite energy density eigenstate from its hypothesized thermal approximation. The universal deviation in terms of the square of the trace-square distance goes as the 8th power of the subsystem fraction and is suppressed by powers of inverse central charge (c). Furthermore, the non-universal deviations from subsystem ETH are found to be proportional to the heavy-light-heavy structure constants which are typically exponentially suppressed in $\sqrt{h/c}$, where h is the conformal scaling dimension of the finite energy density …

Particle Characterisation In

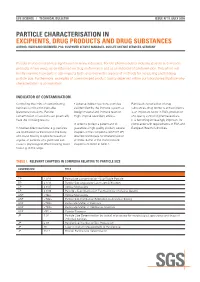

LIFE SCIENCE | TECHNICAL BULLETIN, ISSUE N°11 / JULY 2008. PARTICLE CHARACTERISATION IN EXCIPIENTS, DRUG PRODUCTS AND DRUG SUBSTANCES. AUTHOR: HILDEGARD BRÜMMER, PhD, CUSTOMER SERVICE MANAGER, SGS LIFE SCIENCE SERVICES, GERMANY. Particle characterization has significance in many industries. For the pharmaceutical industry, particle size impacts products in two ways: as an influence on drug performance and as an indicator of contamination. This article will briefly examine how particle size impacts both, and review the arsenal of methods for measuring and tracking particle size. Furthermore, examples of compromised product quality observed within our laboratories illustrate why characterization is so important. Particle characterisation of drug substances, drug products and excipients is an important factor in R&D, production and quality control of pharmaceuticals. It is becoming increasingly important for compliance with requirements of FDA and European Health Authorities. INDICATOR OF CONTAMINATION: Controlling the limits of contaminating particles is critical for injectable (parenteral) solutions. Particle contamination of solutions can potentially have the following results: • Adverse direct reactions: e.g. particles are distributed via the blood in the body and cause toxicity to specific tissues or organs, or particles of a given size can cause a physiological effect blocking blood flow e.g. in the lungs. • Adverse indirect reactions: particles are identified by the immune system as foreign material and immune reaction might impose secondary effects. In order to protect a patient and to guarantee a high quality product, several chapters in the compendia (USP, EP, JP) describe techniques for characterisation of limits. Some of the most relevant chapters are listed in Table 1.

Chapter 3 Bose-Einstein Condensation of an Ideal

Chapter 3: Bose-Einstein Condensation of An Ideal Gas. An ideal gas consisting of non-interacting Bose particles is a fictitious system since every realistic Bose gas shows some level of particle-particle interaction. Nevertheless, such a mathematical model provides the simplest example for the realization of Bose-Einstein condensation. This simple model, first studied by A. Einstein [1], correctly describes important basic properties of actual non-ideal (interacting) Bose gases. In particular, such basic concepts as BEC critical temperature $T_c$ (or critical particle density $n_c$), condensate fraction $N_0/N$ and the dimensionality issue will be obtained. 3.1 The ideal Bose gas in the canonical and grand canonical ensemble: Suppose an ideal gas of non-interacting particles with fixed particle number N is trapped in a box with a volume V and at equilibrium temperature T. We assume a particle system somehow establishes an equilibrium temperature in spite of the absence of interaction. Such a system can be characterized by the thermodynamic partition function of canonical ensemble $Z = \sum_R e^{-\beta E_R}$ (3.1), where R stands for a macroscopic state of the gas and is uniquely specified by the occupation number $n_i$ of each single particle state i: $\{n_0, n_1, \cdots\}$. $\beta = 1/k_B T$ is a temperature parameter. Then, the total energy of a macroscopic state R is given by only the kinetic energy: $E_R = \sum_i \varepsilon_i n_i$ (3.2), where $\varepsilon_i$ is the eigen-energy of the single particle state i and the occupation number $n_i$ satisfies the normalization condition $N = \sum_i n_i$ (3.3). The probability …
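For a very small system, equations (3.1) to (3.3) can be evaluated by brute force. The sketch below is an illustrative assumption rather than anything from the chapter: it enumerates every set of occupation numbers $\{n_0, n_1, \cdots\}$ that satisfies $N = \sum_i n_i$ over a finite, hypothetical list of single-particle energies, and sums $e^{-\beta E_R}$ over them. The function names are invented for this example, and the approach is only practical for a handful of particles and levels.

```python
import math

def occupation_sets(n_particles, n_levels):
    """Yield every tuple (n_0, ..., n_{M-1}) of non-negative integers summing to N (eq. 3.3)."""
    if n_levels == 1:
        yield (n_particles,)
        return
    for n0 in range(n_particles + 1):
        for rest in occupation_sets(n_particles - n0, n_levels - 1):
            yield (n0,) + rest

def canonical_partition_function(energies, n_particles, beta):
    """Z = sum over states R of exp(-beta * E_R), with E_R = sum_i eps_i * n_i (eqs. 3.1, 3.2)."""
    z = 0.0
    for occupations in occupation_sets(n_particles, len(energies)):
        e_r = sum(eps * n for eps, n in zip(energies, occupations))
        z += math.exp(-beta * e_r)
    return z

if __name__ == "__main__":
    # Assumed toy spectrum: two levels with energies 0 and 1, two bosons, beta = 1.
    print(canonical_partition_function([0.0, 1.0], n_particles=2, beta=1.0))
```

For the two-level, two-boson case the states are (2, 0), (1, 1) and (0, 2), so the sum reduces to $1 + e^{-\beta} + e^{-2\beta}$, which gives a quick hand check of the enumeration.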

Bose-Einstein Condensation of Photons and Grand-Canonical Condensate fluctuations

Bose-Einstein condensation of photons and grand-canonical condensate fluctuations. Jan Klaers, Institute for Applied Physics, University of Bonn, Germany (present address: Institute for Quantum Electronics, ETH Zürich, Switzerland). Martin Weitz, Institute for Applied Physics, University of Bonn, Germany. arXiv:1611.10286v1 [cond-mat.quant-gas], 30 Nov 2016. Abstract: We review recent experiments on the Bose-Einstein condensation of photons in a dye-filled optical microresonator. The most well-known example of a photon gas, photons in blackbody radiation, does not show Bose-Einstein condensation. Instead of massively populating the cavity ground mode, photons vanish in the cavity walls when they are cooled down. The situation is different in an ultrashort optical cavity imprinting a low-frequency cutoff on the photon energy spectrum that is well above the thermal energy. The latter allows for a thermalization process in which both temperature and photon number can be tuned independently of each other or, correspondingly, for a non-vanishing photon chemical potential. We here describe experiments demonstrating the fluorescence-induced thermalization and Bose-Einstein condensation of a two-dimensional photon gas in the dye microcavity. Moreover, recent measurements on the photon statistics of the condensate, showing Bose-Einstein condensation in the grandcanonical ensemble limit, will be reviewed. 1 Introduction: Quantum statistical effects become relevant when a gas of particles is cooled, or its density is increased, to the point where the associated de Broglie wavepackets spatially overlap. For particles with integer spin (bosons), the phenomenon of Bose-Einstein condensation (BEC) then leads to macroscopic occupation of a single quantum state at finite temperatures [1].

Self-Stabilized Bose Polarons (arXiv:2102.13616v2 [cond-mat.quant-gas], 30 Jul 2021)

Self-stabilized Bose polarons. Richard Schmidt (1, 2) and Tilman Enss (3). (1) Max-Planck-Institute of Quantum Optics, Hans-Kopfermann-Straße 1, 85748 Garching, Germany. (2) Munich Center for Quantum Science and Technology, Schellingstraße 4, 80799 Munich, Germany. (3) Institut für Theoretische Physik, Universität Heidelberg, 69120 Heidelberg, Germany (Dated: August 2, 2021). The mobile impurity in a Bose-Einstein condensate (BEC) is a paradigmatic many-body problem. For weak interaction between the impurity and the BEC, the impurity deforms the BEC only slightly and it is well described within the Fröhlich model and the Bogoliubov approximation. For strong local attraction this standard approach, however, fails to balance the local attraction with the weak repulsion between the BEC particles and predicts an instability where an infinite number of bosons is attracted toward the impurity. Here we present a solution of the Bose polaron problem beyond the Bogoliubov approximation which includes the local repulsion between bosons and thereby stabilizes the Bose polaron even near and beyond the scattering resonance. We show that the Bose polaron energy remains bounded from below across the resonance and the size of the polaron dressing cloud stays finite. Our results demonstrate how the dressing cloud replaces the attractive impurity potential with an effective many-body potential that excludes binding. We find that at resonance, including the effects of boson repulsion, the polaron energy depends universally on the effective range. Moreover, while the impurity contact is strongly peaked at positive scattering length, it remains always finite. Our solution highlights how Bose polarons are self-stabilized by repulsion, providing a mechanism to understand quench dynamics and nonequilibrium time evolution at strong coupling.

From Quantum Chaos and Eigenstate Thermalization to Statistical

Advances in Physics, 2016, Vol. 65, No. 3, 239–362, http://dx.doi.org/10.1080/00018732.2016.1198134. REVIEW ARTICLE. From quantum chaos and eigenstate thermalization to statistical mechanics and thermodynamics. Luca D'Alessio (a, b), Yariv Kafri (c), Anatoli Polkovnikov (b) and Marcos Rigol (a)*. (a) Department of Physics, The Pennsylvania State University, University Park, PA 16802, USA; (b) Department of Physics, Boston University, Boston, MA 02215, USA; (c) Department of Physics, Technion, Haifa 32000, Israel (Received 22 September 2015; accepted 2 June 2016). This review gives a pedagogical introduction to the eigenstate thermalization hypothesis (ETH), its basis, and its implications to statistical mechanics and thermodynamics. In the first part, ETH is introduced as a natural extension of ideas from quantum chaos and random matrix theory (RMT). To this end, we present a brief overview of classical and quantum chaos, as well as RMT and some of its most important predictions. The latter include the statistics of energy levels, eigenstate components, and matrix elements of observables. Building on these, we introduce the ETH and show that it allows one to describe thermalization in isolated chaotic systems without invoking the notion of an external bath. We examine numerical evidence of eigenstate thermalization from studies of many-body lattice systems. We also introduce the concept of a quench as a means of taking isolated systems out of equilibrium, and discuss results of numerical experiments on quantum quenches. The second part of the review explores the implications of quantum chaos and ETH to thermodynamics. Basic thermodynamic relations are derived, including the second law of thermodynamics, the fundamental thermodynamic relation, fluctuation theorems, the fluctuation–dissipation relation, and the Einstein and Onsager relations.

Statistical Physics– a Second Course

Statistical Physics – a second course. Finn Ravndal and Eirik Grude Flekkøy, Department of Physics, University of Oslo, September 3, 2008. Contents. 1 Summary of Thermodynamics: 1.1 Equations of state; 1.2 Laws of thermodynamics; 1.3 Maxwell relations and thermodynamic derivatives; 1.4 Specific heats and compressibilities; 1.5 Thermodynamic potentials; 1.6 Fluctuations and thermodynamic stability; 1.7 Phase transitions; 1.8 Entropy and Gibbs Paradox. 2 Non-Interacting Particles: 2.1 Spin-1/2 particles in a magnetic field; 2.2 Maxwell-Boltzmann statistics; 2.3 Ideal gas; 2.4 Fermi-Dirac statistics; 2.5 Bose-Einstein statistics. 3 Statistical Ensembles: 3.1 Ensembles in phase space; 3.2 Liouville's theorem; 3.3 Microcanonical ensembles; 3.4 Free particles and multi-dimensional spheres; 3.5 Canonical ensembles; 3.6 Grand canonical ensembles; 3.7 Isobaric ensembles; 3.8 Information theory. 4 Real Gases and Liquids: 4.1 Correlation functions; 4.2 The virial theorem; 4.3 Mean field theory for the van der Waals equation; 4.4 Osmosis. 5 Quantum Gases and Liquids: 5.1 Statistics of identical particles; 5.2 Blackbody radiation and the photon gas; 5.3 Phonons and the Debye theory of specific heats; 5.4 Bosons at non-zero chemical potential …