LITAKER-THESIS-2013.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Central Coast Astronomy Virtual Star Party May 15th 7pm Pacific

Central Coast Astronomy Virtual Star Party, May 15th, 7pm Pacific. Welcome to our Virtual Star Gazing session! We’ll be focusing on objects you can see with binoculars or a small telescope, so after our session, you can simply walk outside, look up, and understand what you’re looking at. CCAS President Aurora Lipper and astronomer Kent Wallace will bring you a virtual “tour of the night sky” where you can discover, learn, and ask questions as we go along! All you need is an internet connection. You can use an iPad, laptop, computer or cell phone. When 7pm on Saturday night rolls around, click the link on our website to join our class: CentralCoastAstronomy.org/stargaze
Before our session starts:
Step 1: Download your free map of the night sky from SkyMaps.com. It is available for the Northern and Southern hemispheres.
Step 2: Print out this document and use it to take notes during our time on Saturday. This document highlights the objects we will focus on in our session together.
Celestial Objects: Moon: The moon 4 days after new, which is excellent for star gazing!
*Image credit: all astrophotography images are courtesy of NASA & ESO unless otherwise noted. All planetarium images are courtesy of Stellarium.
Central Coast Astronomy, CentralCoastAstronomy.org
Main Focus for the Session: 1. Canes Venatici (the Hunting Dogs) 2. Boötes (the Herdsman) 3. Coma Berenices (Hair of Berenice) 4. Virgo (the Virgin)
Canes Venatici (the Hunting Dogs): Canes Venatici is a modern constellation created by Polish astronomer Johannes Hevelius in 1687.

Messier Objects

Messier Objects, from the Stocker Astroscience Center at Florida International University, Miami, Florida.
The Messier Project main contributors: • Daniel Puentes • Steven Revesz • Bobby Martinez • Gabriel Salazar • Riya Gandhi • Dr. James Webb – Director, Stocker Astroscience Center. All images reduced and combined using MIRA image processing software (Mirametrics).
What are Messier Objects? Messier objects are a list of astronomical sources compiled by Charles Messier, an 18th and early 19th century astronomer. He created a list of distracting objects to avoid while comet hunting. This list now contains 110 objects, many of which are among the most famous astronomical bodies known. The list contains planetary nebulae, star clusters, and other galaxies. (Bobby Martinez)
The Telescope: The telescope used to take these images is an Astronomical Consultants and Equipment (ACE) 24-inch (0.61-meter) Ritchey-Chretien reflecting telescope. It has a focal ratio of f/6.2 and is supported on a structure independent of the building that houses it. It is equipped with a Finger Lakes 1k×1k CCD camera cooled to −30 °C at the Cassegrain focus, and with dual filter wheels, the first containing UBVRI scientific filters and the second RGBL color filters.
Messier 1: Found 6,500 light years away in the constellation of Taurus, the Crab Nebula (known as M1) is a supernova remnant. The original supernova that formed the Crab Nebula was observed by Chinese, Japanese and Arab astronomers in 1054 AD as an incredibly bright “guest star” which was visible for over twenty-two months. The supernova that produced the Crab Nebula is thought to have been an evolved star roughly ten times more massive than the Sun.

Of Galaxies, Stars, Planets and People

The Cosmic Journeys of Galaxies, Stars, Planets and People: A Research Programme for the Armagh Observatory and Planetarium. This document was produced by the staff of the Armagh Observatory and Planetarium, in particular through discussions and contributions from the tenured astronomers, together with input from the Governors and the Management Committee. The document was edited by the Director, Michael Burton and designed by Aileen McKee. Produced in March 2017.
Front Cover Images: The Four Pillars of the Armagh Observatory and Planetarium
Research: The Armagh Observatory was founded in 1790 as part of Archbishop Richard Robinson’s vision to see the creation of a University in the City of Armagh. It is the oldest scientific institution in Northern Ireland and the longest continuously operating astronomical research institution in the UK and Ireland.
Outreach: The Armagh Planetarium was founded by Dr Eric Lindsay, the seventh director of the Observatory, as part of his vision to communicate the excitement of astronomy and science to the public. It opened on the 1st of May, 1968 and is the oldest operating planetarium in the UK and Ireland.
History: Dreyer's NGC – the New General Catalogue – was published in 1888 by JLE Dreyer, fourth Director of the Observatory. It has been used extensively by astronomers ever since. This is his annotated copy, complete with all known corrections at the time. Galaxies and nebulae are still often cited by their
Heritage: The Observatory has been measuring the weather conditions at 9am every day since 1794, a meteorological record covering more than 200 years, believed to be the longest standing in the British Isles. This image shows the sunshine recorder and anemometer.

Whirlpools and Pinwheels on the Sky

Whirlpools and pinwheels on the sky, by Domingos Soares. Galaxies exist in many different forms and number at least in the hundreds of billions, according to estimates made from observations with the Hubble Space Telescope. Among them, the most spectacular are undoubtedly the so-called "spiral galaxies". Our own galaxy, the Milky Way, is a member of that family. Spiral galaxies are so named because of the distinctive appearance they present to the observer: a spiral-shaped structure similar to a whirlpool or a pinwheel. They are also called "disk galaxies" because the galactic material (stars, gas and interstellar dust) is distributed in the shape of a "thick" disk. Stars are the main constituents of galaxies, at least with respect to their visual appearance. They emit most of the visible light of a galaxy, whether it is a spiral or not. The typical visual appearance of spiral galaxies is due to the structures that astronomers call "spiral arms". The brightest stars of a galaxy are those that delineate the arms. These stars contribute little to the total mass of the galaxy, but because of their extraordinary brightness they are the most noticeable on visual inspection. The disks of spiral galaxies rotate, and this is typical of such galaxies: the galactic material revolves around the disk center, which is called the "galactic center". But the rotation is not like the spin of a rigid disk such as a CD, in which all points make a full turn in the same time interval.

Guide Du Ciel Profond

Guide du ciel profond (Deep-Sky Guide), Olivier PETIT, 8 May 2004.
Table of contents. Part I: Objects by constellation
1 Andromeda (And): Messier 31 (the Great Andromeda Galaxy), Messier 32, Messier 110, NGC 404, NGC 752, NGC 891, NGC 7640, NGC 7662 (the Blue Snowball)
2 Antlia (Ant), the Air Pump: NGC 2997
3 Aquarius (Aqr), the Water Bearer: Messier 2, Messier 72, Messier 73, NGC 7009 (the Saturn Nebula), NGC 7293 (the Helix Nebula), NGC 7492, NGC 7606, Cederblad 211 (the R Aquarii Nebula)
4 Aquila (Aql), the Eagle: NGC 6709, NGC 6741, NGC 6751 (the Blurry Eye Nebula), NGC 6760, NGC 6781 (the Eagle's Nest), NGC 6790, NGC 6804, Barnard 142-143 (the Black Lair)
5 Aries (Ari), the Ram: NGC 772
6 Auriga (Aur), the Charioteer: Messier 36, Messier 37, Messier 38

Galaxies: What Are the Deep Sky Objects?

GALAXIES
WHAT ARE THE DEEP SKY OBJECTS? • Deep-sky objects are astronomical objects other than individual stars and solar system objects (Sun, Moon, planets, comets).
TYPES OF DEEP SKY OBJECTS • Nebulae • Clusters • Galaxies
CHARLES MESSIER • Known for the Messier catalogue of galaxies, nebulae and star clusters, M1 to M110. • He was a French astronomer who lived in the 18th century. • He was a comet hunter, and the purpose of the catalogue was to record sky objects that looked like comets but were not, because they did not move across the sky.
UNITS TO MEASURE DISTANCE • A light-year is the distance that light travels in a year at a speed of approximately 300,000 kilometers per second. • The closest star to the Sun is Proxima Centauri, at about 4.24 light years. • A parsec is equal to about 3.26 light years.
GALAXIES • A galaxy is an enormous collection of gas, dust and billions of stars held together by gravity. One galaxy can have hundreds of billions of stars and be as large as 200,000 light years across. • Galaxy is derived from the Greek galaxias, meaning "milky", a reference to the Milky Way. • Many galaxies are believed to have black holes at their centers. The Milky Way's central black hole, known as Sagittarius A*, has a mass about four million times that of our Sun.
GALAXIES FACTS • There are potentially more than 170 billion galaxies in the observable universe. Some, called dwarf galaxies, are very small, with about 10 million stars, while others are huge, containing an estimated 100 trillion stars.
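The distance units in the excerpt above can be checked with a little arithmetic. The following is a minimal sketch, not part of the original slides: the constant names are illustrative, and it assumes the exact SI speed of light, a Julian year of 365.25 days, and the IAU value of the astronomical unit.

```python
import math

# Assumed constants (not taken from the excerpt):
SPEED_OF_LIGHT_KM_S = 299_792.458          # exact SI value, close to the 300,000 km/s quoted
SECONDS_PER_JULIAN_YEAR = 365.25 * 86_400  # Julian year, the usual convention for light-years
ASTRONOMICAL_UNIT_KM = 149_597_870.7       # IAU definition of the astronomical unit

# A light-year is speed multiplied by time.
LIGHT_YEAR_KM = SPEED_OF_LIGHT_KM_S * SECONDS_PER_JULIAN_YEAR

# A parsec is the distance at which 1 AU subtends one arcsecond,
# i.e. 648000 / pi astronomical units.
PARSEC_KM = ASTRONOMICAL_UNIT_KM * 648_000 / math.pi

LIGHT_YEARS_PER_PARSEC = PARSEC_KM / LIGHT_YEAR_KM


def parsecs_to_light_years(parsecs: float) -> float:
    """Convert a distance in parsecs to light-years."""
    return parsecs * LIGHT_YEARS_PER_PARSEC


if __name__ == "__main__":
    print(f"1 light-year is about {LIGHT_YEAR_KM:.4e} km")
    print(f"1 parsec is about {LIGHT_YEARS_PER_PARSEC:.2f} light-years")
```

Under these assumptions a light-year comes out near 9.46 trillion kilometers, and the parsec ratio agrees with the "about 3.26 light years" figure given above.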

Fy10 Budget by Program

AURA/NOAO FISCAL YEAR ANNUAL REPORT FY 2010, Revised. Submitted to the National Science Foundation, March 16, 2011. This image, aimed toward the southern celestial pole atop the CTIO Blanco 4-m telescope, shows the Large and Small Magellanic Clouds, the Milky Way (Carinae Region) and the Coal Sack (dark area, close to the Southern Crux). The 33 “written” on the Schmidt Telescope dome using a green laser pointer during the two-minute exposure commemorates the rescue effort of 33 miners trapped for 69 days almost 700 m underground in the San Jose mine in northern Chile. The image was taken while the rescue was in progress on 13 October 2010, at 3:30 am Chilean Daylight Saving time. Image Credit: Arturo Gomez/CTIO/NOAO/AURA/NSF. National Optical Astronomy Observatory Fiscal Year Annual Report for FY 2010, Revised (October 1, 2009 – September 30, 2010). Submitted to the National Science Foundation Pursuant to Cooperative Support Agreement No. AST-0950945, March 16, 2011.
Table of Contents: Mission Synopsis; 1 Executive Summary; 2 NOAO Accomplishments; 2.1 Achievements; 2.2 Status of Vision and Goals

A Basic Requirement for Studying the Heavens Is Determining Where In

A basic requirement for studying the heavens is determining where in the sky things are. To specify sky positions, astronomers have developed several coordinate systems. Each uses a coordinate grid projected onto the celestial sphere, in analogy to the geographic coordinate system used on the surface of the Earth. The coordinate systems differ only in their choice of the fundamental plane, which divides the sky into two equal hemispheres along a great circle (the fundamental plane of the geographic system is the Earth's equator). Each coordinate system is named for its choice of fundamental plane. The equatorial coordinate system is probably the most widely used celestial coordinate system. It is also the one most closely related to the geographic coordinate system, because they use the same fundamental plane and the same poles. The projection of the Earth's equator onto the celestial sphere is called the celestial equator. Similarly, projecting the geographic poles onto the celestial sphere defines the north and south celestial poles. However, there is an important difference between the equatorial and geographic coordinate systems: the geographic system is fixed to the Earth; it rotates as the Earth does. The equatorial system is fixed to the stars, so it appears to rotate across the sky with the stars, but of course it's really the Earth rotating under the fixed sky. The latitudinal (latitude-like) angle of the equatorial system is called declination (Dec for short). It measures the angle of an object above or below the celestial equator. The longitudinal angle is called the right ascension (RA for short).
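To make the RA/Dec grid described above concrete, here is a minimal sketch that treats an equatorial position as a point on a unit celestial sphere and measures the angle between two positions. The function names are illustrative, and the sketch assumes both angles are given in degrees (right ascension is often quoted in hours, which the excerpt does not cover).

```python
import math


def equatorial_to_unit_vector(ra_deg: float, dec_deg: float) -> tuple[float, float, float]:
    """Map (RA, Dec) in degrees to a Cartesian unit vector.

    The z axis points at the north celestial pole and the x-y plane
    is the celestial equator (the fundamental plane).
    """
    ra = math.radians(ra_deg)
    dec = math.radians(dec_deg)
    return (
        math.cos(dec) * math.cos(ra),
        math.cos(dec) * math.sin(ra),
        math.sin(dec),
    )


def angular_separation_deg(ra1: float, dec1: float, ra2: float, dec2: float) -> float:
    """Angle in degrees between two sky positions, via the dot product."""
    a = equatorial_to_unit_vector(ra1, dec1)
    b = equatorial_to_unit_vector(ra2, dec2)
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding slightly outside [-1, 1].
    dot = max(-1.0, min(1.0, dot))
    return math.degrees(math.acos(dot))


if __name__ == "__main__":
    # The north celestial pole (Dec = +90) against a point on the celestial equator.
    print(angular_separation_deg(0.0, 90.0, 123.0, 0.0))
```

The dot-product form is simple but loses precision for very small separations; it is used here only because it mirrors the sphere-and-poles picture in the text.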

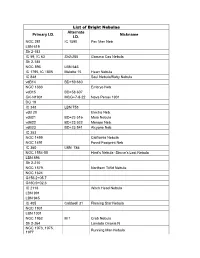

List of Bright Nebulae Primary I.D. Alternate I.D. Nickname

List of Bright Nebulae (columns: Primary I.D., Alternate I.D., Nickname) NGC 281 IC 1590 Pac Man Neb LBN 619 Sh 2-183 IC 59, IC 63 Sh2-285 Gamma Cas Nebula Sh 2-185 NGC 896 LBN 645 IC 1795, IC 1805 Melotte 15 Heart Nebula IC 848 Soul Nebula/Baby Nebula vdB14 BD+59 660 NGC 1333 Embryo Neb vdB15 BD+58 607 GK-N1901 MCG+7-8-22 Nova Persei 1901 DG 19 IC 348 LBN 758 vdB 20 Electra Neb. vdB21 BD+23 516 Maia Nebula vdB22 BD+23 522 Merope Neb. vdB23 BD+23 541 Alcyone Neb. IC 353 NGC 1499 California Nebula NGC 1491 Fossil Footprint Neb IC 360 LBN 786 NGC 1554-55 Hind’s Nebula -Struve’s Lost Nebula LBN 896 Sh 2-210 NGC 1579 Northern Trifid Nebula NGC 1624 G156.2+05.7 G160.9+02.6 IC 2118 Witch Head Nebula LBN 991 LBN 945 IC 405 Caldwell 31 Flaming Star Nebula NGC 1931 LBN 1001 NGC 1952 M 1 Crab Nebula Sh 2-264 Lambda Orionis N NGC 1973, 1975, 1977 Running Man Nebula NGC 1976, 1982 M 42, M 43 Orion Nebula NGC 1990 Epsilon Orionis Neb NGC 1999 Rubber Stamp Neb NGC 2070 Caldwell 103 Tarantula Nebula Sh2-240 Simeis 147 IC 425 IC 434 Horsehead Nebula (surrounds dark nebula) Sh 2-218 LBN 962 NGC 2023-24 Flame Nebula LBN 1010 NGC 2068, 2071 M 78 SH 2 276 Barnard’s Loop NGC 2149 NGC 2174 Monkey Head Nebula IC 2162 Ced 72 IC 443 LBN 844 Jellyfish Nebula Sh2-249 IC 2169 Ced 78 NGC 2237,38,39,2246 Caldwell 49 Rosette Nebula LBN 943 Sh 2-280 SNR205.6-00.1 G205.5+00.5 Monoceros Nebula NGC 2261 Caldwell 46 Hubble’s Var.

Astronomy in the Park 2014 Schedule

Astronomy in the Park 2014 Schedule. Members of the Stockton Astronomical Society will volunteer to set up their telescopes for the public at S.J. County Oak Grove Regional Park on the first Saturday after the New Moon. During the months of April through October the Nature Center will also be open with indoor astronomy activities. If the sky is cloudy or there is bad weather the telescopes may not be available. During the months of November through March the Nature Center will not be open. Also, if the sky is not clear the event may be canceled and we will try again the next month.
• S.J. County charges a parking fee of $6.00 per vehicle, but the Nature Center and telescope viewing are free.
• SAS volunteers can contact James Rexroth for free parking passes.
• Indoor astronomy-themed activities for kids (both big & small) will be in the Nature Center.
• Telescope viewing starts after sunset; the Moon will be visible in the telescopes from the start. As the sky darkens, more objects become visible. A darker and clearer sky is better for viewing.
• Every month we will feature a different deep sky object. We will point one of our large telescopes at this object during the last hour of the evening. These objects are dim and fuzzy and are best seen in large-aperture telescopes.
• To view objects it may be necessary to stand on a ladder or step stool that will be next to the telescope.
For more information call: James Rexroth, S.J. County Oak Grove Regional Park (Nature Center), (209) 953-8814; Doug Christensen, Stockton Astronomical Society, (209) 462-0798

Strategic Goal 5: Encourage the Pursuit of Appropriate Partnerships with the Emerging Commercial Space Sector

National Aeronautics and Space Administration Fiscal Year 2010 PERFORMANCE AND ACCOUNTABILITY REPORT www.nasa.gov
NASA’s Performance and Accountability Report: The National Aeronautics and Space Administration (NASA) produces an annual Performance and Accountability Report (PAR) to share the Agency’s progress toward achieving its Strategic Goals with the American people. In addition to performance information, the PAR also presents the Agency’s financial statements as well as NASA’s management challenges and the plans and efforts to overcome them. NASA’s Fiscal Year (FY) 2010 PAR satisfies many U.S. government reporting requirements including the Government Performance and Results Act of 1993, the Chief Financial Officers Act of 1990, and the Federal Financial Management Improvement Act of 1996. NASA’s FY 2010 PAR contains the following sections:
Management’s Discussion and Analysis: The Management’s Discussion and Analysis (MD&A) section highlights NASA’s overall performance, including programmatic, financial, and management activities. The MD&A includes a description of NASA’s organizational structure and describes the Agency’s performance management system and management controls (i.e., values, policies, and procedures) that help program and financial managers achieve results and safeguard the integrity of NASA’s programs.
Detailed Performance: The Detailed Performance section provides more in-depth information on NASA’s progress toward achieving milestones and goals as defined in the Agency’s Strategic Plan and NASA’s FY 2010 Performance Plan Update. It also includes plans for correcting performance measures that NASA did not achieve in FY 2010 and an update on the measures that NASA did not complete in FY 2009.

The Messier Catalog

The Messier Catalog
Messier 1: Crab Nebula
Messier 2: globular cluster
Messier 3: globular cluster
Messier 4: globular cluster
Messier 5: globular cluster
Messier 6: open cluster, Butterfly Cluster
Messier 7: open cluster, Ptolemy's Cluster
Messier 8: Lagoon Nebula
Messier 9: globular cluster
Messier 10: globular cluster
Messier 11: Wild Duck Cluster
Messier 12: globular cluster
Messier 13: Hercules globular cluster
Messier 14: globular cluster
Messier 15: globular cluster
Messier 16: Eagle Nebula
Messier 17: The Omega, Swan, or Horseshoe Nebula
Messier 18: open cluster
Messier 19: globular cluster
Messier 20: Trifid Nebula
Messier 21: open cluster
Messier 22: globular cluster
Messier 23: open cluster
Messier 24: Milky Way Patch
Messier 25: open cluster
Messier 26: open cluster
Messier 27: Dumbbell Nebula
Messier 28: globular cluster
Messier 29: open cluster
Messier 30: globular cluster
Messier 31: Andromeda Galaxy
Messier 32: Andromeda dwarf elliptical galaxy
Messier 33: Triangulum Galaxy
Messier 34: open cluster
Messier 35: open cluster
Messier 36: open cluster
Messier 37: open cluster
Messier 38: open cluster
Messier 39: open cluster
Messier 40: double star Winnecke 4
Messier 41: open cluster
Messier 42/43: Orion Nebula
Messier 44: Praesepe, Beehive Cluster
Messier 45: Pleiades, Subaru
Messier 46: open cluster
Messier 47: open cluster
Messier 48: open cluster
Messier 49: elliptical galaxy
Messier 50: open cluster
Messier 51: Whirlpool Galaxy
Messier 52: open cluster
Messier 53: globular cluster
Messier 54: globular cluster
Messier 55: globular cluster
Messier 56: globular cluster
Messier 57