A Multi-Media Exchange Format for Time-Series Dataset Curation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ROOT I/O Compression Improvements for HEP Analysis

EPJ Web of Conferences 245, 02017 (2020), https://doi.org/10.1051/epjconf/202024502017, CHEP 2019

ROOT I/O compression improvements for HEP analysis

Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1)

(1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States
(2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States
(3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States
(4) CERN, Meyrin 1211, Geneve, Switzerland

Abstract. We overview recent changes in the ROOT I/O system, enhancing it by improving its performance and interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased over the last couple of years. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly, because there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction

In recent years, Large Hadron Collider (LHC) experiments have been managing about an exabyte of storage for analysis purposes, approximately half of which is stored on tape for archival purposes, and half is used for traditional disk storage. Meanwhile, for the High Luminosity Large Hadron Collider (HL-LHC), storage requirements per year are expected to increase by a factor of 10 [1].

arXiv:2004.10531v1 [cs.OH] 8 Apr 2020

ROOT I/O compression improvements for HEP analysis

Oksana Shadura (1), Brian Paul Bockelman (2), Philippe Canal (3), Danilo Piparo (4), and Zhe Zhang (1)

(1) University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States
(2) Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States
(3) Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States
(4) CERN, Meyrin 1211, Geneve, Switzerland

Abstract. We overview recent changes in the ROOT I/O system, increasing its performance and improving its interaction with other data analysis ecosystems. The newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly improve experiments' software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically increased during the LHC era. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly: there are significant trade-offs between the increased CPU cost for reading and writing files and the reduced storage space.

1 Introduction

In recent years the LHC experiments have been commissioned and now manage about an exabyte of storage for analysis purposes, approximately half of which is used for archival purposes, and half is used for traditional disk storage. Meanwhile, for HL-LHC, storage requirements per year are expected to increase by a factor of 10 [1]. Given these predictions, storage will remain one of the major cost drivers and, at the same time, one of the bottlenecks for HEP computing.
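The trade-off described in the abstract above (CPU time spent compressing and decompressing versus storage space saved) can be illustrated with a small sketch. This is not ROOT code: it uses Python's standard-library `zlib` and `lzma` modules as stand-ins, and the helper names `make_payload` and `measure` as well as the synthetic record layout are assumptions made purely for illustration.

```python
import lzma
import time
import zlib


def make_payload(n_events: int = 20000) -> bytes:
    """Build a synthetic, repetitive byte payload (hypothetical record layout)."""
    return b"".join(
        b"event=%06d;pt=%03d;eta=%02d\n" % (i, i % 250, i % 50)
        for i in range(n_events)
    )


def measure(name, compress, decompress, payload: bytes) -> dict:
    """Time one compress/decompress round trip and report the compression ratio."""
    start = time.perf_counter()
    packed = compress(payload)
    compress_s = time.perf_counter() - start

    start = time.perf_counter()
    restored = decompress(packed)
    decompress_s = time.perf_counter() - start

    # Lossless compression must reproduce the input exactly.
    if restored != payload:
        raise ValueError(f"{name}: round trip did not restore the payload")

    return {
        "codec": name,
        "ratio": len(payload) / len(packed),
        "compress_s": compress_s,
        "decompress_s": decompress_s,
    }


# Stand-in codecs from the standard library, not ROOT's own settings.
CODECS = [
    ("zlib level 1", lambda data: zlib.compress(data, 1), zlib.decompress),
    ("zlib level 9", lambda data: zlib.compress(data, 9), zlib.decompress),
    ("lzma", lzma.compress, lzma.decompress),
]

if __name__ == "__main__":
    payload = make_payload()
    for name, compress, decompress in CODECS:
        result = measure(name, compress, decompress, payload)
        print(
            f"{result['codec']:<13} ratio={result['ratio']:.1f} "
            f"write={result['compress_s'] * 1e3:.1f} ms "
            f"read={result['decompress_s'] * 1e3:.1f} ms"
        )
```

Timings depend on the machine and the data, so the sketch prints measurements rather than asserting any ranking between codecs.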

On Audio-Visual File Formats

On Audio-Visual File Formats
Reto Kromer • AV Preservation by reto.ch
Film Preservation and Restoration, Hyderabad, India, 8–15 December 2019

Summary
• digital audio and digital video
• container, codec, raw data
• different formats for different purposes
• audio-visual data transformations

Digital Audio
• sampling
• quantisation

digitisation = sampling + quantisation

Sampling
• 44.1 kHz
• 48 kHz
• 96 kHz
• 192 kHz

Quantisation
• 16 bit (2^16 = 65 536)
• 24 bit (2^24 = 16 777 216)
• 32 bit (2^32 = 4 294 967 296)

Digital Video
• resolution
• bit depth
• linear, power, logarithmic
• colour model
• chroma subsampling
• illuminant

Resolution
• SD 480i / SD 576i
• HD 720p / HD 1080i
• 2K / HD 1080p
• 4K / UHD-1
• 8K / UHD-2

Bit Depth
• 8 bit (2^8 = 256)
• 10 bit (2^10 = 1 024)
• 12 bit (2^12 = 4 096)
• 16 bit (2^16 = 65 536)
• 24 bit (2^24 = 16 777 216)

Linear, Power, Logarithmic («medium grey»)
• linear: 18%
• power: 50%
• logarithmic: 50%

Colour Model
• XYZ, L*a*b*
• RGB / R′G′B′ / CMY / C′M′Y′
• Y′IQ / Y′UV / Y′DBDR
• Y′CBCR / Y′COCG
• Y′PBPR

RGB24
00000000 11111111 00000000 00000000 00000000 00000000 11111111 00000000 00000000 00000000 00000000 11111111 00000000 11111111 11111111 11111111 11111111 00000000 11111111 11111111 11111111 11111111 00000000 11111111

Compression
• uncompressed
• lossless compression
• lossy compression
• chroma subsampling
• born

Uncompressed
+ data simpler to process
+ software runs faster
– bigger files
– slower writing, transmission and reading
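The quantisation and bit-depth figures quoted in the slides above are all powers of two: an n-bit sample can take 2^n distinct values. A minimal sketch that reproduces those numbers follows; the function name `quantisation_levels` and the two constant tuples are hypothetical names chosen for this illustration.

```python
# Bit depths listed on the audio and video slides above.
AUDIO_BIT_DEPTHS = (16, 24, 32)
VIDEO_BIT_DEPTHS = (8, 10, 12, 16, 24)


def quantisation_levels(bits: int) -> int:
    """Return the number of distinct values an n-bit sample can represent."""
    if bits <= 0:
        raise ValueError("bit depth must be a positive integer")
    return 2 ** bits


if __name__ == "__main__":
    # Print each distinct bit depth once, in ascending order.
    for bits in sorted(set(AUDIO_BIT_DEPTHS + VIDEO_BIT_DEPTHS)):
        print(f"{bits:>2} bit -> {quantisation_levels(bits):,} levels")
```

Each extra bit doubles the number of levels, which is why moving from 8 to 10 bits quadruples the number of representable values per sample.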

Building and Installing Xen 4.X and Linux Kernel 3.X on Ubuntu and Debian Linux

Building and Installing Xen 4.x and Linux Kernel 3.x on Ubuntu and Debian Linux

Version 2.3
Author: Teo En Ming (Zhang Enming)
Website #1: http://www.teo-en-ming.com
Website #2: http://www.zhang-enming.com
Mobile Phone(s): +65-8369-2618 / +65-9117-5902 / +65-9465-2119
Country: Singapore
Date: 10 August 2013 Saturday 4:56 A.M. Singapore Time

1 Installing Prerequisite Software

    sudo apt-get install ocaml-findlib
    sudo apt-get install bcc bin86 gawk bridge-utils iproute libcurl3 libcurl4-openssl-dev bzip2 module-init-tools transfig tgif texinfo texlive-latex-base texlive-latex-recommended texlive-fonts-extra texlive-fonts-recommended pciutils-dev mercurial build-essential make gcc libc6-dev zlib1g-dev python python-dev python-twisted libncurses5-dev patch libvncserver-dev libsdl-dev libjpeg62-dev iasl libbz2-dev e2fslibs-dev git-core uuid-dev ocaml libx11-dev bison flex
    sudo apt-get install gcc-multilib
    sudo apt-get install xz-utils libyajl-dev gettext
    sudo apt-get install git-core kernel-package fakeroot build-essential libncurses5-dev

2 Linux Kernel 3.x with Xen Virtualization Support (Dom0 and DomU)

In this installation document, we will build/compile Xen 4.1.3-rc1-pre and Linux kernel 3.3.0-rc7 from sources.

    sudo apt-get install aria2
    aria2c -x 5 http://www.kernel.org/pub/linux/kernel/v3.0/testing/linux-3.3-rc7.tar.bz2
    tar xfvj linux-3.3-rc7.tar.bz2
    cd linux-3.3-rc7

(C) 2013 Teo En Ming (Zhang Enming)

3 Configuring the Linux kernel

    cp /boot/config-3.0.0-12-generic .config
    make oldconfig

Accept the defaults for new kernel configuration options by pressing Enter.

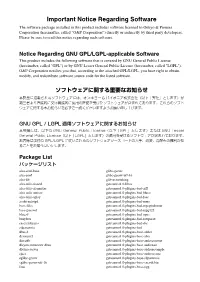

Important Notice Regarding Software

Important Notice Regarding Software

The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter called "O&P Corporation") directly or indirectly by third party developers. Please be sure to read this notice regarding such software.

Notice Regarding GNU GPL/LGPL-applicable Software

This product includes the following software that is covered by the GNU General Public License (hereinafter called "GPL") or by the GNU Lesser General Public License (hereinafter called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have the right to obtain, modify, and redistribute software source code for the listed software.

Package List

alsa-conf-base
alsa-conf
alsa-lib
alsa-utils-alsactl
alsa-utils-alsamixer
alsa-utils-amixer
alsa-utils-aplay
avahi-autoipd
base-files
base-passwd
bluez5
busybox
glibc-gconv
glibc-gconv-utf-16
glib-networking
gstreamer1.0-libav
gstreamer1.0-plugins-bad-aiff
gstreamer1.0-plugins-bad-bluez
gstreamer1.0-plugins-bad-faac
gstreamer1.0-plugins-bad-mms
gstreamer1.0-plugins-bad-mpegtsdemux
gstreamer1.0-plugins-bad-mpg123
gstreamer1.0-plugins-bad-opus
gstreamer1.0-plugins-bad-rawparse

Opus, a Free, High-Quality Speech and Audio Codec

Opus, a free, high-quality speech and audio codec
Jean-Marc Valin, Koen Vos, Timothy B. Terriberry, Gregory Maxwell
29 January 2014
Xiph.Org & Mozilla

What is Opus?
● New highly-flexible speech and audio codec
  – Works for most audio applications
● Completely free
  – Royalty-free licensing
  – Open-source implementation
● IETF RFC 6716 (Sep. 2012)

Why a New Audio Codec?
http://xkcd.com/927/
http://imgs.xkcd.com/comics/standards.png

Why Should You Care?
● Best-in-class performance within a wide range of bitrates and applications
● Adaptability to varying network conditions
● Will be deployed as part of WebRTC
● No licensing costs
● No incompatible flavours

History
● Jan. 2007: SILK project started at Skype
● Nov. 2007: CELT project started
● Mar. 2009: Skype asks IETF to create a WG
● Feb. 2010: WG created
● Jul. 2010: First prototype of SILK+CELT codec
● Dec. 2011: Opus surpasses Vorbis and AAC
● Sep. 2012: Opus becomes RFC 6716
● Dec. 2013: Version 1.1 of libopus released

Applications and Standards (2010)

| Application | Codec |
|---|---|
| VoIP with PSTN | AMR-NB |
| Wideband VoIP/videoconference | AMR-WB |
| High-quality videoconference | G.719 |
| Low-bitrate music streaming | HE-AAC |
| High-quality music streaming | AAC-LC |
| Low-delay broadcast | AAC-ELD |
| Network music performance | |

Applications and Standards (2013)

| Application | Codec |
|---|---|
| VoIP with PSTN | Opus |
| Wideband VoIP/videoconference | Opus |
| High-quality videoconference | Opus |
| Low-bitrate music streaming | Opus |
| High-quality music streaming | Opus |

Low-delay

FFV1, Matroska, LPCM (And More)

MediaConch: Implementation and policy checking on FFV1, Matroska, LPCM (and more)
Jérôme Martinez, MediaArea
Innovation Workshop, March 2017

What is MediaConch?
MediaConch is a conformance checker:
• Implementation checker
• Policy checker
• Reporter
• Fixer

What MediaConch provides:
• Implementation and Policy reporter (implementation report, policy report)
• General information about your files
• Inspect your files
• Policy editor
• Public policies
• Fixer
  – Segment sizes in Matroska
  – Matroska "bit flip" correction
  – FFV1 "bit flip" correction

Integration
Archivematica is an integrated suite of open-source software tools that allows users to process digital objects from ingest to access in compliance with the ISO-OAIS functional model.

MediaConch interfaces
• Graphical interface
• Web interface
• Command line
• Server (REST API)
• (Work in progress) a library (.dll/.so/.dylib)

MediaConch output formats
• XML (native format)
• Text
• HTML
• (Work in progress) PDF
• Tweakable! (with XSL)

Open source
• GPLv3+ and MPLv2+
• Relies on MediaInfo (metadata extraction tool)
• Uses well-known open source libraries: Qt, sqlite, libevent, libxml2, libxslt, libexslt...

Supported formats
• Priorities for the implementation checker
  – Matroska
  – FFV1
  – PCM
• Can accept any format supported by MediaInfo for the policy checker
  – MXF + JP2k
  – QuickTime/MOV
  – Audio files (WAV, BWF, AIFF...)
  – ...

Supported formats can be expanded
• By plugins
  – Support of PDF checker: VeraPDF plugin
  – Support of TIFF checker: DPF Manager plugin
  – You use another checker? Let us know
• By internal development
  – More tests on your preferred format are possible
  – It depends on you!

Versatile
• Several input formats are accepted
  – FFV1 from MOV or AVI
  – Matroska with other video formats
• (Work in progress) Extraction of a PDF or TIFF attachment from a Matroska container and analysis with a plugin (e.g.

UG1144 (v2020.1) July 24, 2020 Revision History

PetaLinux Tools Documentation Reference Guide
UG1144 (v2020.1) July 24, 2020

Revision History

The following table shows the revision history for this document.

| Date and version | Section | Revision Summary |
|---|---|---|
| 07/24/2020 Version 2020.1 | Appendix H: Partitioning and Formatting an SD Card | Added a new appendix. |
| 06/03/2020 Version 2020.1 | Chapter 2: Setting Up Your Environment | Added the Installing a Preferred eSDK as part of the PetaLinux Tool section. |
| | Chapter 4: Configuring and Building | Added the PetaLinux Commands with Equivalent devtool Commands section. |
| | Chapter 6: Upgrading the Workspace | Added new sections: petalinux-upgrade Options, Upgrading Between Minor Releases (2020.1 Tool with 2020.2 Tool), Upgrading the Installed Tool with More Platforms, and Upgrading the Installed Tool with your Customized Platform. |
| | Chapter 7: Customizing the Project | Added new sections: Creating Partitioned Images Using Wic and Configuring SD Card ext File System Boot. |
| | Chapter 8: Customizing the Root File System | Added the Appending Root File System Packages section. |
| | Chapter 10: Advanced Configurations | Updated PetaLinux Menuconfig System. |
| | Chapter 11: Yocto Features | Added the Adding Extra Users to the PetaLinux System section. |
| | Appendix A: Migration | Added Tool/Project Directory Structure. |

Table of Contents
Revision History

Recommended File Formats for Long-Term Archiving and for Web Dissemination in Phaidra

Recommended file formats for long-term archiving and for web dissemination in Phaidra
Edited by Gianluca Drago, May 2019
License: https://creativecommons.org/licenses/by-nc-sa/4.0/

Premise

This document is intended to provide an overview of the file formats to be used depending on two possible destinations of the digital document:
• long-term archiving
• uploading to Phaidra and subsequent web dissemination

When the document uploaded to Phaidra is also the only saved file, the two destinations end up coinciding, but in general one will probably want to produce two different files, in two different formats, so as to meet the differences in requirements and use in the final destinations. In the following tables, the recommendations for long-term archiving are distinct from those for dissemination in Phaidra.

There are no absolute criteria for choosing the file format. The choice is always dependent on different evaluations that the person who is carrying out the archiving will have to make on a case by case basis and will often result in a compromise between the best achievable quality and the limits imposed by the costs of production, processing and storage of files, as well as, for the preceding, by the opportunity of a conversion to a new format.

This choice is particularly significant from the perspective of long-term archiving, for which a quality that respects the authenticity and integrity of the original document and a format that guarantees long-term access to data are desirable. This document should be seen more as an aid to the reasoned choice of the person carrying out the archiving than as a list of guidelines to be followed to the letter.

OSS Disclosure Document: OSS Licenses Used in RN_AIVI

OSS Disclosure Document
Date: 08-10-2017
OSS Licenses used in RN_AIVI
CM-CI1/PJ-CB
Project

1 Overview
2 OSS Licenses used in the project
3 Package details for OSS Licenses usage
   AES - Advanced Encryption Standard – 1.0
   Alsa Libraries - 1.0.29
   Alsa Plugins - 1.0.29
   Alsa Utils - 1.0.29
   APMD - 3.2.2
   ATK - 2.8.0
   Attr - 2.4.47
   Audio File Library - 0.2.7
   Avahi - 0.6.31
   Bash - 3.2.48
   BidiReferenceCpp - 26
   Bison - 2.5.2., 3.0.2, 3.0.4
   Blktrace - 1.0.5
   BlueZ - 4.101, 5.33
   BPSTL
   Btrfs-progs – 4.1.2
   Busybox - 1.23.2
   Bzip2 - 1.0.6
   Cairo Vector Graphics Library - 1.12.14, 1.12.16, 1.14.2
   Cairo-Pixman - 0.30.2, 0.32.6

White Paper November 2017

White paper, November 2017
Xperia™ Z4 Tablet SGP771

Purpose of this document

Sony product White papers are intended to give an overview of a product and provide details in relevant areas of technology.

NOTE: The illustration that appears on the title page is for reference only. All screen images and elements are subject to change without prior notice.

This document is published by Sony Mobile Communications Inc., without any warranty*. Improvements and changes to this text necessitated by typographical errors, inaccuracies of current information or improvements to programs and/or equipment may be made by Sony Mobile Communications Inc. at any time and without notice. Such changes will, however, be incorporated into new editions of this document. Printed versions are to be regarded as temporary reference copies only.

*All implied warranties, including without limitation the implied warranties of merchantability or fitness for a particular purpose, are excluded. In no event shall Sony or its licensors be liable for incidental or consequential damages of any nature, including but not limited to lost profits or commercial loss,

This White paper is published by:
Sony Mobile Communications Inc., 4-12-3 Higashi-Shinagawa, Shinagawa-ku, Tokyo, 140-0002 Japan
www.sonymobile.com

© Sony Mobile Communications Inc., 2009-2017. All rights reserved. You are hereby granted a license to download and/or print a copy of this document. Any rights not expressly granted herein are reserved.

First released version (March 2015)

Improving Compression-Ratio in Backup

Institutionen för systemteknik (Department of Electrical Engineering)

Master's thesis (Examensarbete)

Improving compression ratio in backup

Thesis carried out in Information Coding/Image Coding at Linköpings tekniska högskola (Linköping Institute of Technology) by Mattias Zeidlitz

LITH-ISY-EX--12/4588--SE
Linköping 2012

Department of Electrical Engineering, Linköping University, S-581 83 Linköping, Sweden

Presentation date: 2012-06-13
Department and division: Institutionen för systemteknik, Department of Electrical Engineering
Language: English
Type of publication: Master's thesis
ISRN: LITH-ISY-EX--12/4588--SE
Number of pages: 58
URL for electronic version: http://www.ep.liu.se
Title of publication: Improving compression ratio in backup
Author: Mattias Zeidlitz

Abstract: This report describes a thesis project carried out at Degoo Backup AB in Stockholm during the spring of 2012. The aim was to design a compression suite