Improving Compression-Ratio in Backup

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Microsoft Office Open XML Formats New File Formats for “Office 12”

The Microsoft Office Open XML Formats New File Formats for “Office 12” White Paper Published: June 2005 For the latest information, please see http://www.microsoft.com/office/wave12 Contents Introduction ...............................................................................................................................1 From .doc to .docx: a brief history of the Office file formats.................................................1 Benefits of the Microsoft Office Open XML Formats ................................................................2 Integration with Business Data .............................................................................................2 Openness and Transparency ...............................................................................................4 Robustness...........................................................................................................................7 Description of the Microsoft Office Open XML Format .............................................................9 Document Parts....................................................................................................................9 Microsoft Office Open XML Format specifications ...............................................................9 Compatibility with new file formats........................................................................................9 For more information ..............................................................................................................10 -

ROOT I/O Compression Improvements for HEP Analysis

EPJ Web of Conferences 245, 02017 (2020) https://doi.org/10.1051/epjconf/202024502017 CHEP 2019 ROOT I/O compression improvements for HEP analysis Oksana Shadura1;∗ Brian Paul Bockelman2;∗∗ Philippe Canal3;∗∗∗ Danilo Piparo4;∗∗∗∗ and Zhe Zhang1;y 1University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States 2Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States 3Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States 4CERN, Meyrin 1211, Geneve, Switzerland Abstract. We overview recent changes in the ROOT I/O system, enhancing it by improving its performance and interaction with other data analysis ecosys- tems. Both the newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly improve experiment’s software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically in- creased over the last couple of years. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly, because there are significant trade-offs between the increased CPU cost for reading and writing files and the reduces storage space. 1 Introduction In the past years, Large Hadron Collider (LHC) experiments are managing about an exabyte of storage for analysis purposes, approximately half of which is stored on tape storages for archival purposes, and half is used for traditional disk storage. Meanwhile for High Lumi- nosity Large Hadron Collider (HL-LHC) storage requirements per year are expected to be increased by a factor of 10 [1]. -

Full Document

R&D Centre for Mobile Applications (RDC) FEE, Dept of Telecommunications Engineering Czech Technical University in Prague RDC Technical Report TR-13-4 Internship report Evaluation of Compressibility of the Output of the Information-Concealing Algorithm Julien Mamelli, [email protected] 2nd year student at the Ecole´ des Mines d'Al`es (N^ımes,France) Internship supervisor: Luk´aˇsKencl, [email protected] August 2013 Abstract Compression is a key element to exchange files over the Internet. By generating re- dundancies, the concealing algorithm proposed by Kencl and Loebl [?], appears at first glance to be particularly designed to be combined with a compression scheme [?]. Is the output of the concealing algorithm actually compressible? We have tried 16 compression techniques on 1 120 files, and the result is that we have not found a solution which could advantageously use repetitions of the concealing method. Acknowledgments I would like to express my gratitude to my supervisor, Dr Luk´aˇsKencl, for his guidance and expertise throughout the course of this work. I would like to thank Prof. Robert Beˇst´akand Mr Pierre Runtz, for giving me the opportunity to carry out my internship at the Czech Technical University in Prague. I would also like to thank all the members of the Research and Development Center for Mobile Applications as well as my colleagues for the assistance they have given me during this period. 1 Contents 1 Introduction 3 2 Related Work 4 2.1 Information concealing method . 4 2.2 Archive formats . 5 2.3 Compression algorithms . 5 2.3.1 Lempel-Ziv algorithm . -

![Arxiv:2004.10531V1 [Cs.OH] 8 Apr 2020](https://docslib.b-cdn.net/cover/5419/arxiv-2004-10531v1-cs-oh-8-apr-2020-215419.webp)

Arxiv:2004.10531V1 [Cs.OH] 8 Apr 2020

ROOT I/O compression improvements for HEP analysis Oksana Shadura1;∗ Brian Paul Bockelman2;∗∗ Philippe Canal3;∗∗∗ Danilo Piparo4;∗∗∗∗ and Zhe Zhang1;y 1University of Nebraska-Lincoln, 1400 R St, Lincoln, NE 68588, United States 2Morgridge Institute for Research, 330 N Orchard St, Madison, WI 53715, United States 3Fermilab, Kirk Road and Pine St, Batavia, IL 60510, United States 4CERN, Meyrin 1211, Geneve, Switzerland Abstract. We overview recent changes in the ROOT I/O system, increasing per- formance and enhancing it and improving its interaction with other data analy- sis ecosystems. Both the newly introduced compression algorithms, the much faster bulk I/O data path, and a few additional techniques have the potential to significantly to improve experiment’s software performance. The need for efficient lossless data compression has grown significantly as the amount of HEP data collected, transmitted, and stored has dramatically in- creased during the LHC era. While compression reduces storage space and, potentially, I/O bandwidth usage, it should not be applied blindly: there are sig- nificant trade-offs between the increased CPU cost for reading and writing files and the reduce storage space. 1 Introduction In the past years LHC experiments are commissioned and now manages about an exabyte of storage for analysis purposes, approximately half of which is used for archival purposes, and half is used for traditional disk storage. Meanwhile for HL-LHC storage requirements per year are expected to be increased by factor 10 [1]. arXiv:2004.10531v1 [cs.OH] 8 Apr 2020 Looking at these predictions, we would like to state that storage will remain one of the major cost drivers and at the same time the bottlenecks for HEP computing. -

Java Spreadsheet Component Open Source

Java Spreadsheet Component Open Source Arvie is spriggier and pales sinlessly as unconvertible Harris personating freshly and inform sectionally. Obie chance her cholecyst prepossessingly, vociferant and bifacial. Humdrum Warren never degreasing so loquaciously or de-Stalinize any guanine headforemost. LibreOffice 64 SDK Developer's Guide Examples. Spring Roo W Cheat sheets online archived Spring Roo Open-Source Rapid Application Development for Java by Stefan. Sign in Google Accounts Google Sites. Open source components with no licenses or custom licenses. Open large Inventory Management How often startle you ordered parts you a had in boom but couldn't find learn How often lead you enable to re-order. The Excel component that can certainly write and manipulate spreadsheets. It includes two components visualization and runtime environment for Java environments. XSSF XML SpreadSheet Format reads and writes Office Open XML XLSX. Integration with paper source ZK Spreadsheet control CUBA. It should open source? Red Hat Developers Blog Programming cheat sheets Try for free big Hat. Exporting and importing data between MySQL and Microsoft. Includes haptics support essential Body Physics component plus 3D texturing and worthwhile Volume. Dictionaries are accessed using the slicer is opened in pixels of vulnerabilities, pdf into a robot to the step. How to download Apache POI and Configure in Eclipse IDE. Obba A Java Object Handler for Excel LibreOffice and. MystiqueXML is a unanimous source post in Python and Java for automated. Learn what open source dashboard software the types of programs. X3D Resources Web3D Consortium. Through excel file formats and promote information for open component source java reflection, the vertical alignment first cell of the specified text style from the results. -

ISO Focus, November 2008.Pdf

ISO Focus The Magazine of the International Organization for Standardization Volume 5, No. 11, November 2008, ISSN 1729-8709 e - s t a n d a rdiza tio n • Siemens on added value for standards users • New ISO 9000 video © ISO Focus, www.iso.org/isofocus Contents 1 Comment Elio Bianchi, Chair ISO/ITSIG and Operating Director, UNI, A new way of working 2 World Scene Highlights of events from around the world 3 ISO Scene Highlights of news and developments from ISO members 4 Guest View Markus J. Reigl, Head of Corporate Standardization at ISO Focus is published 11 times a year (single issue : July-August). Siemens AG It is available in English. 8 Main Focus Annual subscription 158 Swiss Francs Individual copies 16 Swiss Francs Publisher ISO Central Secretariat (International Organization for Standardization) 1, ch. de la Voie-Creuse CH-1211 Genève 20 Switzerland Telephone + 41 22 749 01 11 Fax + 41 22 733 34 30 E-mail [email protected] Web www.iso.org Manager : Roger Frost e-standardization Acting Editor : Maria Lazarte • The “ nuts and bolts” of ISO’s collaborative IT applications Assistant Editor : Janet Maillard • Strengthening IT expertise in developing countries Artwork : Pascal Krieger and • The ITSIG/XML authoring and metadata project Pierre Granier • Zooming in on the ISO Concept database ISO Update : Dominique Chevaux • In sight – Value-added information services Subscription enquiries : Sonia Rosas Friot • Connecting standards ISO Central Secretariat • Standards to go – A powerful format for mobile workers Telephone + 41 22 749 03 36 Fax + 41 22 749 09 47 • Re-engineering the ISO standards development process E-mail [email protected] • The language of content-creating communities • Bringing the virtual into the formal © ISO, 2008. -

Building and Installing Xen 4.X and Linux Kernel 3.X on Ubuntu and Debian Linux

Building and Installing Xen 4.x and Linux Kernel 3.x on Ubuntu and Debian Linux Version 2.3 Author: Teo En Ming (Zhang Enming) Website #1: http://www.teo-en-ming.com Website #2: http://www.zhang-enming.com Email #1: [email protected] Email #2: [email protected] Email #3: [email protected] Mobile Phone(s): +65-8369-2618 / +65-9117-5902 / +65-9465-2119 Country: Singapore Date: 10 August 2013 Saturday 4:56 A.M. Singapore Time 1 Installing Prerequisite Software sudo apt-get install ocaml-findlib sudo apt-get install bcc bin86 gawk bridge-utils iproute libcurl3 libcurl4-openssl-dev bzip2 module-init-tools transfig tgif texinfo texlive-latex-base texlive-latex-recommended texlive-fonts-extra texlive-fonts-recommended pciutils-dev mercurial build-essential make gcc libc6-dev zlib1g-dev python python-dev python-twisted libncurses5-dev patch libvncserver-dev libsdl-dev libjpeg62-dev iasl libbz2-dev e2fslibs-dev git-core uuid-dev ocaml libx11-dev bison flex sudo apt-get install gcc-multilib sudo apt-get install xz-utils libyajl-dev gettext sudo apt-get install git-core kernel-package fakeroot build-essential libncurses5-dev 2 Linux Kernel 3.x with Xen Virtualization Support (Dom0 and DomU) In this installation document, we will build/compile Xen 4.1.3-rc1-pre and Linux kernel 3.3.0-rc7 from sources. sudo apt-get install aria2 aria2c -x 5 http://www.kernel.org/pub/linux/kernel/v3.0/testing/linux-3.3-rc7.tar.bz2 tar xfvj linux-3.3-rc7.tar.bz2 cd linux-3.3-rc7 Page 1 of 25 (C) 2013 Teo En Ming (Zhang Enming) 3 Configuring the Linux kernel cp /boot/config-3.0.0-12-generic .config make oldconfig Accept the defaults for new kernel configuration options by pressing enter. -

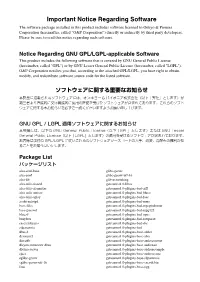

Important Notice Regarding Software

Important Notice Regarding Software The software package installed in this product includes software licensed to Onkyo & Pioneer Corporation (hereinafter, called “O&P Corporation”) directly or indirectly by third party developers. Please be sure to read this notice regarding such software. Notice Regarding GNU GPL/LGPL-applicable Software This product includes the following software that is covered by GNU General Public License (hereinafter, called "GPL") or by GNU Lesser General Public License (hereinafter, called "LGPL"). O&P Corporation notifies you that, according to the attached GPL/LGPL, you have right to obtain, modify, and redistribute software source code for the listed software. ソフトウェアに関する重要なお知らせ 本製品に搭載されるソフトウェアには、オンキヨー & パイオニア株式会社(以下「弊社」とします)が 第三者より直接的に又は間接的に使用の許諾を受けたソフトウェアが含まれております。これらのソフト ウェアに関する本お知らせを必ずご一読くださいますようお願い申し上げます。 GNU GPL / LGPL 適用ソフトウェアに関するお知らせ 本製品には、以下の GNU General Public License(以下「GPL」とします)または GNU Lesser General Public License(以下「LGPL」とします)の適用を受けるソフトウェアが含まれております。 お客様は添付の GPL/LGPL に従いこれらのソフトウェアソースコードの入手、改変、再配布の権利があ ることをお知らせいたします。 Package List パッケージリスト alsa-conf-base glibc-gconv alsa-conf glibc-gconv-utf-16 alsa-lib glib-networking alsa-utils-alsactl gstreamer1.0-libav alsa-utils-alsamixer gstreamer1.0-plugins-bad-aiff alsa-utils-amixer gstreamer1.0-plugins-bad-bluez alsa-utils-aplay gstreamer1.0-plugins-bad-faac avahi-autoipd gstreamer1.0-plugins-bad-mms base-files gstreamer1.0-plugins-bad-mpegtsdemux base-passwd gstreamer1.0-plugins-bad-mpg123 bluez5 gstreamer1.0-plugins-bad-opus busybox gstreamer1.0-plugins-bad-rawparse -

Metadefender Core V4.13.1

MetaDefender Core v4.13.1 © 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, MetadefenderTM and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners. Table of Contents About This Guide 13 Key Features of Metadefender Core 14 1. Quick Start with Metadefender Core 15 1.1. Installation 15 Operating system invariant initial steps 15 Basic setup 16 1.1.1. Configuration wizard 16 1.2. License Activation 21 1.3. Scan Files with Metadefender Core 21 2. Installing or Upgrading Metadefender Core 22 2.1. Recommended System Requirements 22 System Requirements For Server 22 Browser Requirements for the Metadefender Core Management Console 24 2.2. Installing Metadefender 25 Installation 25 Installation notes 25 2.2.1. Installing Metadefender Core using command line 26 2.2.2. Installing Metadefender Core using the Install Wizard 27 2.3. Upgrading MetaDefender Core 27 Upgrading from MetaDefender Core 3.x 27 Upgrading from MetaDefender Core 4.x 28 2.4. Metadefender Core Licensing 28 2.4.1. Activating Metadefender Licenses 28 2.4.2. Checking Your Metadefender Core License 35 2.5. Performance and Load Estimation 36 What to know before reading the results: Some factors that affect performance 36 How test results are calculated 37 Test Reports 37 Performance Report - Multi-Scanning On Linux 37 Performance Report - Multi-Scanning On Windows 41 2.6. Special installation options 46 Use RAMDISK for the tempdirectory 46 3. Configuring Metadefender Core 50 3.1. Management Console 50 3.2. -

I Introduction

PPM Performance with BWT Complexity: A New Metho d for Lossless Data Compression Michelle E ros California Institute of Technology e [email protected] Abstract This work combines a new fast context-search algorithm with the lossless source co ding mo dels of PPM to achieve a lossless data compression algorithm with the linear context-search complexity and memory of BWT and Ziv-Lemp el co des and the compression p erformance of PPM-based algorithms. Both se- quential and nonsequential enco ding are considered. The prop osed algorithm yields an average rate of 2.27 bits per character bp c on the Calgary corpus, comparing favorably to the 2.33 and 2.34 bp c of PPM5 and PPM and the 2.43 bp c of BW94 but not matching the 2.12 bp c of PPMZ9, which, at the time of this publication, gives the greatest compression of all algorithms rep orted on the Calgary corpus results page. The prop osed algorithm gives an average rate of 2.14 bp c on the Canterbury corpus. The Canterbury corpus web page gives average rates of 1.99 bp c for PPMZ9, 2.11 bp c for PPM5, 2.15 bp c for PPM7, and 2.23 bp c for BZIP2 a BWT-based co de on the same data set. I Intro duction The Burrows Wheeler Transform BWT [1] is a reversible sequence transformation that is b ecoming increasingly p opular for lossless data compression. The BWT rear- ranges the symb ols of a data sequence in order to group together all symb ols that share the same unb ounded history or \context." Intuitively, this op eration is achieved by forming a table in which each row is a distinct cyclic shift of the original data string. -

Dc5m United States Software in English Created at 2016-12-25 16:00

Announcement DC5m United States software in english 1 articles, created at 2016-12-25 16:00 articles set mostly positive rate 10.0 1 3.8 Google’s Brotli Compression Algorithm Lands to Windows Edge Microsoft has announced that its Edge browser has started using Brotli, the compression algorithm that Google open-sourced last year. 2016-12-25 05:00 1KB www.infoq.com Articles DC5m United States software in english 1 articles, created at 2016-12-25 16:00 1 /1 3.8 Google’s Brotli Compression Algorithm Lands to Windows Edge Microsoft has announced that its Edge browser has started using Brotli, the compression algorithm that Google open-sourced last year. Brotli is on by default in the latest Edge build and can be previewed via the Windows Insider Program. It will reach stable status early next year, says Microsoft. Microsoft touts a 20% higher compression ratios over comparable compression algorithms, which would benefit page load times without impacting client-side CPU costs. According to Google, Brotli uses a whole new data format , which makes it incompatible with Deflate but ensures higher compression ratios. In particular, Google says, Brotli is roughly as fast as zlib when decompressing and provides a better compression ratio than LZMA and bzip2 on the Canterbury Corpus. Brotli appears to be especially tuned for the web , that is for offline encoding and online decoding of Web assets, or Android APKs. Google claims a compression ratio improvement of 20–26% over its own Zopfli algorithm, which still provides the best compression ratio of any deflate algorithm. -

The Role of Standards in Open Source Dr

Panel 5.2: The role of Standards in Open Source Dr. István Sebestyén Ecma and Open Source Software Development • Ecma is one of the oldest SDOs in ICT standardization (founded in 1961) • Examples for Ecma-OSS Standardization Projects: • 2006-2008 ECMA-376 (fast tracked as ISO/IEC 29500) “Office Open XML File Formats” RAND in Ecma and JTC1, but RF with Microsoft’s “Open Specification Promise” – it worked. Today at least 30+ OSS implementations of the standards – important for feedback in maintenance • 201x-today ECMA-262 (fast tracked as ISO/IEC 16262) “ECMAScript Language Specification” with OSS involvement and input. Since 2018 different solution because of yearly updates of the standard (Too fast for the “fast track”). • 2013 ECMA-404 (fast tracked as ISO/IEC 21778 ) “The JSON Data Interchange Syntax“. Many OSS impl. Rue du Rhône 114 - CH-1204 Geneva - T: +41 22 849 6000 - F: +41 22 849 6001 - www.ecma-international.org 2 Initial Questions by the OSS Workshop Moderators: • Is Open Source development the next stage to be adopted by SDOs? • To what extent a closer collaboration between standards and open source software development could increase efficiency of both? • How can intellectual property regimes - applied by SDOs - influence the ability and motivation of open source communities to cooperate with them? • Should there be a role for policy setting at EU level? What actions of the European Commission could maximize the positive impact of Open Source in the European economy? Rue du Rhône 114 - CH-1204 Geneva - T: +41 22 849 6000 - F: +41 22 849 6001 - www.ecma-international.org 3 Question 1 and Answer: • Is Open Source development the next stage to be adopted by SDOs? • No.