Video Quality Measurement for 3G Handset

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


High Level Architecture Framework

Java Media Framework

Multimedia Systems: Module 3, Lesson 1

Summary:
• JMF Core Model: Architecture; Models (time, event, data)
• JMF Core Functionality: Presentation; Processing; Capture; Storage and Transmission
• JMF Extensibility

Sources:
• JMF 2.0 API Programmers Guide from Sun: http://java.sun.com/products/java-media/jmf/2.1/guide/
• JMF 2.0 API
• JMF White Paper from IBM: http://www-4.ibm.com/software/developer/library/jmf/jmfwhite.html

High Level Architecture

Layers, from top to bottom: JMF Applications, Applets, Beans; JMF Presentation and Processing API; JMF Plug-In API; Muxes & Demuxes, Codecs, Effects, Renderers.

• A demultiplexer extracts individual tracks of media data from a multiplexed media stream. A multiplexer performs the opposite function: it takes individual tracks of media data and merges them into a single multiplexed media stream.
• A codec performs media-data compression and decompression. Each codec has certain input formats that it can handle and certain output formats that it can generate.
• An effect filter modifies the track data in some way, often to create special effects such as blur or echo.
• A renderer is an abstraction of a presentation device. For audio, the presentation device is typically the computer's hardware audio card that outputs sound to the speakers. For video, the presentation device is typically the computer monitor.

Media Streams

• A media stream is the media data obtained from a local file, acquired over the network, or captured from a camera or microphone. Media streams often contain multiple channels of data called tracks.
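The demultiplexer and multiplexer roles described in the excerpt above can be sketched with a toy model. This is not the JMF API: the packet representation (a `(track_id, chunk)` tuple) and the function names `demultiplex` and `multiplex` are assumptions made purely for illustration.

```python
from collections import defaultdict
from itertools import zip_longest


def demultiplex(stream):
    """Split a multiplexed stream of (track_id, chunk) packets into per-track lists."""
    tracks = defaultdict(list)
    for track_id, chunk in stream:
        tracks[track_id].append(chunk)
    return dict(tracks)


def multiplex(tracks):
    """Merge per-track chunk lists into one stream by interleaving them round-robin."""
    per_track_packets = [
        [(track_id, chunk) for chunk in chunks]
        for track_id, chunks in tracks.items()
    ]
    stream = []
    for row in zip_longest(*per_track_packets):
        # zip_longest pads shorter tracks with None; skip the padding.
        stream.extend(packet for packet in row if packet is not None)
    return stream


tracks = {"audio": ["a0", "a1"], "video": ["v0", "v1", "v2"]}
stream = multiplex(tracks)
recovered = demultiplex(stream)
```

In this sketch the two functions are inverses: demultiplexing the interleaved stream returns the original tracks with their chunk order preserved.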

Download Media Player Codec Pack Version 4.1 Media Player Codec Pack

Media Player Codec Pack.

Description: In Microsoft Windows 10 it is not possible to set all file associations using an installer. Microsoft chose to block changes of file associations with the introduction of their Zune players. Third party codecs are also blocked in some instances, preventing some files from playing in the Zune players. A simple workaround for this problem is to switch playback of video and music files to Windows Media Player manually:

1. In the Start menu, click "Settings".
2. In the "Windows Settings" window, click "System".
3. On the "System" pane, click "Default apps".
4. On the "Choose default applications" pane, click "Films & TV" under "Video Player".
5. On the "Choose an application" pop-up menu, click "Windows Media Player" to set Windows Media Player as the default player for video files.

Footnote: The same method can be used to apply file associations for music, by clicking "Groove Music" under "Media Player" instead of changing "Video Player" in step 4.

Media Player Codec Pack Plus.

Codecs Explained: A codec is a piece of software on either a device or computer capable of encoding and/or decoding video and/or audio data from files, streams and broadcasts. The word codec is a portmanteau of "compressor-decompressor".

Compression types that you will be able to play include: x264 | x265 | h.265 | HEVC | 10bit x265 | 10bit x264 | AVCHD | AVC | DivX | XviD | MP4 | MPEG4 | MPEG2 and many more.

File types you will be able to play include: .bdmv | .evo | .hevc | .mkv | .avi | .flv | .webm | .mp4 | .m4v | .m4a | .ts | .ogm | .ac3 | .dts | .alac | .flac | .ape | .aac | .ogg | .ofr | .mpc | .3gp and many more.

XMP SPECIFICATION PART 3 STORAGE in FILES Copyright © 2016 Adobe Systems Incorporated

XMP SPECIFICATION PART 3 STORAGE IN FILES Copyright © 2016 Adobe Systems Incorporated. All rights reserved. Adobe XMP Specification Part 3: Storage in Files NOTICE: All information contained herein is the property of Adobe Systems Incorporated. No part of this publication (whether in hardcopy or electronic form) may be reproduced or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written consent of Adobe Systems Incorporated. Adobe, the Adobe logo, Acrobat, Acrobat Distiller, Flash, FrameMaker, InDesign, Illustrator, Photoshop, PostScript, and the XMP logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. MS-DOS, Windows, and Windows NT are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Apple, Macintosh, Mac OS and QuickTime are trademarks of Apple Computer, Inc., registered in the United States and other countries. UNIX is a trademark in the United States and other countries, licensed exclusively through X/Open Company, Ltd. All other trademarks are the property of their respective owners. This publication and the information herein is furnished AS IS, is subject to change without notice, and should not be construed as a commitment by Adobe Systems Incorporated. Adobe Systems Incorporated assumes no responsibility or liability for any errors or inaccuracies, makes no warranty of any kind (express, implied, or statutory) with respect to this publication, and expressly disclaims any and all warranties of merchantability, fitness for particular purposes, and noninfringement of third party rights. Contents 1 Embedding XMP metadata in application files . -

Making Speech Recognition Work on the Web Christopher J. Varenhorst

Making Speech Recognition Work on the Web

by Christopher J. Varenhorst

Submitted to the Department of Electrical Engineering and Computer Science on May 20, 2011, in partial fulfillment of the requirements for the degree of Masters of Engineering in Computer Science and Engineering at the Massachusetts Institute of Technology, May 2011.

© Massachusetts Institute of Technology 2011. All rights reserved.

Certified by: James R. Glass, Principal Research Scientist, Thesis Supervisor
Certified by: Scott Cyphers, Research Scientist, Thesis Supervisor
Accepted by: Christopher J. Terman, Chairman, Department Committee on Graduate Students

Abstract

We present an improved Audio Controller for the Web-Accessible Multimodal Interface toolkit, a system that provides a simple way for developers to add speech recognition to web pages. Our improved system offers increased usability and performance for users and greater flexibility for developers. Tests performed showed a 36% increase in recognition response time in the best possible networking conditions. Preliminary tests show a markedly improved user experience. The new Wowza platform also provides a means of upgrading other Audio Controllers easily.

Contents

1 Introduction and Background
1.1 WAMI - Web Accessible Multimodal Toolkit
1.1.1 Existing Java applet
1.2 SALT

Microsoft Powerpoint

Development of Multimedia WebApp on Tizen Platform

1. HTML Multimedia
2. Multimedia Playing with HTML5 Tags: (1) HTML5 Video (2) HTML5 Audio (3) HTML Plug-ins (4) HTML YouTube (5) Accessing Media Streams and Playing (6) Multimedia Contents Mgmt (7) Capturing Images
3. Multimedia Processing Web Device API

1. HTML Multimedia

• What is Multimedia?
− Multimedia comes in many different formats. It can be almost anything you can hear or see.
− Examples: pictures, music, sound, videos, records, films, animations, and more.
− Web pages often contain multimedia elements of different types and formats.

• Multimedia Formats
− Multimedia elements (like sounds or videos) are stored in media files.
− The most common way to discover the type of a file is to look at the file extension.
⇔ When a browser sees the file extension .htm or .html, it will treat the file as an HTML file.
⇔ The .xml extension indicates an XML file, and the .css extension indicates a style sheet file.
⇔ Pictures are recognized by extensions like .gif, .png and .jpg.
− Multimedia files also have their own formats and different extensions like: .swf, .wav, .mp3, .mp4, .mpg, .wmv, and .avi.

2. Multimedia Playing with HTML5 Tags

(1) HTML5 Video

• Some of the popular video container formats include the following: Audio Video Interleave (.avi), Flash Video (.flv), MPEG 4 (.mp4), Matroska (.mkv), Ogg (.ogv)

• Browser Support

• Common Video Format
Format: MPEG. File: .mpg, .mpeg. Description: MPEG. Developed by the Moving Pictures Expert Group. The first popular video format on the web.
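The extension-based type discovery described in the slides above can be sketched as a small lookup. The table below contains only the extensions the excerpt names; the names `EXTENSION_KINDS` and `kind_of` are hypothetical, and a real browser relies on more than the extension alone.

```python
from pathlib import Path

# Extension-to-kind table built only from the extensions named in the excerpt.
EXTENSION_KINDS = {
    ".htm": "HTML",
    ".html": "HTML",
    ".xml": "XML",
    ".css": "style sheet",
    ".gif": "picture",
    ".png": "picture",
    ".jpg": "picture",
    ".swf": "multimedia",
    ".wav": "multimedia",
    ".mp3": "multimedia",
    ".mp4": "multimedia",
    ".mpg": "multimedia",
    ".wmv": "multimedia",
    ".avi": "multimedia",
}


def kind_of(filename: str) -> str:
    """Guess the kind of a file from its extension (case-insensitive)."""
    return EXTENSION_KINDS.get(Path(filename).suffix.lower(), "unknown")


print(kind_of("index.html"))  # HTML
print(kind_of("clip.MP4"))    # multimedia
print(kind_of("README"))      # unknown
```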

On Audio-Visual File Formats

On Audio-Visual File Formats

Reto Kromer • AV Preservation by reto.ch
Film Preservation and Restoration, Hyderabad, India, 8–15 December 2019

Summary
• digital audio and digital video
• container, codec, raw data
• different formats for different purposes
• audio-visual data transformations

Digital Audio
• sampling
• quantisation

Sampling
• 44.1 kHz
• 48 kHz
• 96 kHz
• 192 kHz

digitisation = sampling + quantisation

Quantisation
• 16 bit (2^16 = 65 536)
• 24 bit (2^24 = 16 777 216)
• 32 bit (2^32 = 4 294 967 296)

Digital Video
• resolution
• bit depth
• linear, power, logarithmic
• colour model
• chroma subsampling
• illuminant

Resolution
• SD 480i / SD 576i
• HD 720p / HD 1080i
• 2K / HD 1080p
• 4K / UHD-1
• 8K / UHD-2

Bit Depth
• 8 bit (2^8 = 256)
• 10 bit (2^10 = 1 024)
• 12 bit (2^12 = 4 096)
• 16 bit (2^16 = 65 536)
• 24 bit (2^24 = 16 777 216)

Linear, Power, Logarithmic
«medium grey»
• linear: 18%
• power: 50%
• logarithmic: 50%

Colour Model
• XYZ, L*a*b*
• RGB / R′G′B′ / CMY / C′M′Y′
• Y′IQ / Y′UV / Y′DBDR
• Y′CBCR / Y′COCG
• Y′PBPR

RGB24
00000000 11111111 00000000 00000000 00000000 00000000 11111111 00000000 00000000 00000000 00000000 11111111 00000000 11111111 11111111 11111111 11111111 00000000 11111111 11111111 11111111 11111111 00000000 11111111

Compression
• uncompressed
• lossless compression
• lossy compression
• chroma subsampling
• born

Uncompressed
+ data simpler to process
+ software runs faster
– bigger files
– slower writing, transmission and reading
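The level counts quoted in the Quantisation and Bit Depth slides above follow from the fact that an n-bit sample can take 2^n distinct values. A minimal Python sketch that reproduces those figures (the function name `quantisation_levels` is illustrative, not taken from the slides):

```python
def quantisation_levels(bit_depth: int) -> int:
    """Number of distinct values an unsigned sample of the given bit depth can take."""
    return 2 ** bit_depth


for bits in (8, 10, 12, 16, 24, 32):
    # Use a space as thousands separator to match the notation in the slides.
    levels = f"{quantisation_levels(bits):,}".replace(",", " ")
    print(f"{bits} bit: 2^{bits} = {levels}")
```

Running it prints one line per bit depth, for example `16 bit: 2^16 = 65 536`.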

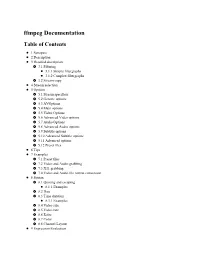

Ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding -

Capabilities of the Horchow Auditorium and the Orientation

Performance Capabilities of Horchow Auditorium and Atrium at the Dallas Museum of Art

Horchow Auditorium

Capacity and Stage: The auditorium seats 333 people; an option of 12 removable chairs in the back brings the maximum capacity to 345. The stage is 45’ x 18’ and the screen is 27’ x 14’. A height-adjustable podium, microphone, podium clock and light are standard equipment available.

Installed/Available Equipment

Sound:
• 24 channel sound board
• 4 stage monitors (with up to 4 mixes)
• 6 hardwired microphones
• 4 wireless lavaliere microphones
• 2 handheld wireless microphones (with headphone option)
• 9-foot Steinway Concert Grand Piano
• 3 Bose towers (these have been requested by acoustic performers before and work very well)
• Music stands

Lighting:
• 24 fixed lights
• 5 movers (these give a wide array of lighting looks)

Projection:
• Panasonic PTRQ32 4K 20,000 Lumen Laser Projector

Preferred Video Formats in Horchow:
• Blu Ray
• DVD
• Apple ProRes 4:2:2 Standard in a .mov wrapper
• H.264 in a .mov wrapper

Formats we can use, but are not optimal: MPEG-1/2; Dirac / VC-2; DivX® (1/2/3/4/5/6); MJPEG (A/B); MPEG-4 ASP; WMV 1/2; XviD; WMV 3 / WMV-9 / VC-1; 3ivX D4; Sorenson 1/3; H.261/H.263 / H.263i; DV; H.264 / MPEG-4 AVC; On2 VP3/VP5/VP6; Cinepak; Indeo Video v3 (IV32); Theora; Real Video (1/2/3/4)

Atrium

Capacity and Stage: The Atrium seats up to 500 people (chair rental required). The stage available to be installed in the Atrium is 16’ x 12’ x 1’.

Autodesk White Paper

AUTOCAD RASTER DESIGN 2010 FEATURES AND BENEFITS

AutoCAD® Raster Design 2010 Features and Benefits

Make the most of rasterized scanned drawings, maps, aerial photos, satellite imagery, and digital elevation models. Get more out of your raster data and enhance your designs, plans, presentations, and maps with AutoCAD® Raster Design software.

Contents
Introduction
New Features and Enhancements
Image Display
Image Editing and Cleanup
Vectorization Tools with SmartCorrect
Raster Entity Manipulation (REM) with SmartPick
Georeferenced Image Display and Analysis
Image Transformations

www.autodesk.com/rasterdesign

Introduction

Extend the power of AutoCAD® and AutoCAD-based software by using AutoCAD Raster Design software for a wide range of applications. Get more out of your raster

Mixbus V4 1 — Last Update: 2017/12/19 Harrison Consoles

Mixbus v4 1 - Last update: 2017/12/19

Harrison Consoles

Copyright Information 2017

No part of this publication may be copied, reproduced, transmitted, stored on a retrieval system, or translated into any language, in any form or by any means without the prior written consent of an authorized officer of Harrison Consoles, 1024 Firestone Parkway, La Vergne, TN 37086.

Table of Contents
Introduction
About This Manual (online version and PDF download)
Features & Specifications
What’s Different About Mixbus?
Operational Differences from Other DAWs
Installation
Installation – Windows
Installation – OS X

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -
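The point above about repeated lossy encoding can be illustrated with a toy model. This is a sketch under stated assumptions, not a real codec: `zlib` stands in for a lossless codec, and a hypothetical `lossy_encode` that rounds each sample to a multiple of a step size stands in for a lossy one.

```python
import zlib


def lossless_roundtrip(data: bytes) -> bytes:
    """Compress and decompress with zlib; the output equals the input exactly."""
    return zlib.decompress(zlib.compress(data))


def lossy_encode(samples, step):
    """Toy lossy codec (assumption): round each sample to the nearest multiple of step."""
    return [step * round(sample / step) for sample in samples]


def max_error(original, decoded):
    """Largest absolute difference between corresponding samples."""
    return max(abs(a - b) for a, b in zip(original, decoded))


samples = list(range(0, 256, 3))

# Lossless: every byte survives the round trip.
assert lossless_roundtrip(bytes(samples)) == bytes(samples)

# Lossy: one generation with step 10, then a second generation with step 7.
first_generation = lossy_encode(samples, 10)
second_generation = lossy_encode(first_generation, 7)

print(max_error(samples, first_generation))
print(max_error(samples, second_generation))
```

In this model the second generation is re-quantised from already degraded samples, so its worst-case error against the original is larger than after a single pass. That is the reason material destined for further processing is usually kept in a lossless or uncompressed form.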

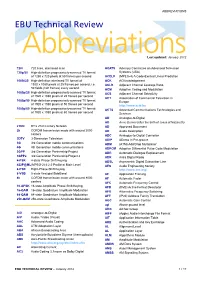

ABBREVIATIONS EBU Technical Review

ABBREVIATIONS

EBU Technical Review: Abbreviations. Last updated: January 2012

720i: 720 lines, interlaced scan
720p/50: High-definition progressively-scanned TV format of 1280 x 720 pixels at 50 frames per second
1080i/25: High-definition interlaced TV format of 1920 x 1080 pixels at 25 frames per second, i.e. 50 fields (half frames) every second
1080p/25: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 25 frames per second
1080p/50: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 50 frames per second
1080p/60: High-definition progressively-scanned TV format of 1920 x 1080 pixels at 60 frames per second
21CN: BT’s 21st Century Network
2k: COFDM transmission mode with around 2000 carriers
3DTV: 3-Dimension Television
3G: 3rd Generation mobile communications
4G: 4th Generation mobile communications
3GPP: 3rd Generation Partnership Project
3GPP2: 3rd Generation Partnership
ACATS: Advisory Committee on Advanced Television Systems (USA)
ACELP: (MPEG-4) A Code-Excited Linear Prediction
ACK: ACKnowledgement
ACLR: Adjacent Channel Leakage Ratio
ACM: Adaptive Coding and Modulation
ACS: Adjacent Channel Selectivity
ACT: Association of Commercial Television in Europe, http://www.acte.be
ACTS: Advanced Communications Technologies and Services
AD: Analogue-to-Digital
AD: Anno Domini (after the birth of Jesus of Nazareth)
AD: Approved Document
AD: Audio Description
ADC: Analogue-to-Digital Converter
ADIP: ADress In Pre-groove
ADM: (ATM) Add/Drop Multiplexer
ADPCM: Adaptive Differential Pulse Code Modulation
ADR: Automatic Dialogue Replacement