What Distinguish One from Its Peers in Social Networks?

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

-

Alurdock, M Ott, and Signed by 43 for Contest for a Two-Weeks' Trial Period, Jaies Are Nominees Dance Formal According to Sterling H

J. I . vo=MM ,L- I .206 - -. I' - irol.LX, No. 17 CAMBRIDGE, MASS., FRIDAY, APRIL 5, 1940 Price Five Cents I~~~~ qayer, Reeves Hours Of T.C.A. Office April Are Exterlded To 6 P.M. 10 Set Alurdock, M ott, And Signed By 43 For Contest For a two-weeks' trial period, Jaies Are Nominees Dance Formal according to Sterling H. Ivison, Jr., '41, president of the organiza- Six Will Compete In Final tion, the T.C.A. office will remain Of Stratton Prize; For 41 PresideUn7y rwsvo Bands Will Furnnish open for service until six o'clock rather than closing at five. The Judges Chosen Colltinuous Music Chemical Society office is to be managed entirely Visits For Dancing Forty Candidates by the student cabinet during the The final competition for the 1939- Carter Ink Plant Today last hour. 40 Stratton Prizes will be given in Slated To Run TICKETS COST $2.50 If the change proves success- Huntington Hall, Room 10-250, at A plant trip to the Carter's Ink For Office ful, the new policy will be con- 3:15 P.MI. on Wednesday, April 10. plant this afternoon, April 6, has been planned by the Chemical So- Klen Reeves and his oi chesti a and tinued during the coming year President Karl T. Compton has in- Balloting To Be Staged In Leon M~ayer's orchestra have been under the present administration. vited all students and professors to ciety. The trip is open to all who Main Lobby Next ;iilned to provide continuous music One of the reasons for the change attend. -

At Pontiac Mingled in the Throng

Cifatetuoctl) pittiuftealer “The Only Ntwipipir in the Work! That Ghrea a Whoop Abook ChMtoworth" SIXTY-SEVENTH YEAR CHATSWORTH ILLINOIS, THURSDAY. SEPTEMBER 19. 1940 EDIGRAPHS Departures and Arrivals Supervisors Pass Budget Still a Fise Is it a stronger character who repents or the one who resists? Of $ 1 6 8 ,8 0 5 (Pontiac Daily Leader) Alter A budget carrying $168,806 for general county purposes for the year beginning earsV 1WI1 adopted by the Livingston county ♦ Old Age Pension board of supervisors Just previous ♦ Catholic Church Was ♦ Miss Arlene Shafer Some wives have to get to the adjournment of the Sep and Kenneth Roeenboon wrinkled faces and knotty Advocate Greeted by tember term last Thursday after Dedicated in 1890— S-i--- J Lw D aw m —L - t f hands before husbands begin noon. This compares with $171,- Birthday Being Observed jomeo Dy ner. mscnoi r to appreciate them. Huge Crowd Sunday 410 appropriated for the current ♦ fiscal year. An estimated crowd of 10,000 In addition to the appropriation The congregation of Saints Miss Arlene Shafer and Kenneth A presidential campaign persons attended the second annu war cry, “stick and stones for county purposes, the board al-,er and P®ul’s Catholic H. Rosenboom were married Sun al Townsend reunion held in the so appropriated $27,000 for relief celebrate the fiftieth day at 11:30 in a pretty ceremony will break my bones, but Falrbury fairground Sunday. names will never hurt me.” of the blind; $20,000 for mothers’ of the dedication of In the Chatsworth Evangelical Dr. -

Billboard-1940-04-27

The World's Foremost Amusement Weekly 1'1411. 19F 2;. 1Millir 15 tent rr. '`: ` r. www.americanradiohistory.com April 27, 1940 The Billboard A TRIBUTE THE TO THE OLDEST YOUNGEST SHOWMAN IN THE WORLD g5UN BOOKING ir j S SUN AGENCY ACTIVE AND AT THE HEAD OF A RECORD STILL 1889 HIS OWN ORIGINAL 1940 BOOKING AGENCY AND STILL HANGING UP RECORDS-YEAR AFTER YEAR! ARE THE ORIGINATORS OF "TAB MUSICALS" AND CAN BOOK YOU "STREAM- THEATRES' WE LINED" MUSICAL REVUES- PRODUCTIONS COMPLETE WITH BANDS AT YOUR BUDGET. OVER 50 THEATRES USING "GUS SUN UNITS." JOIN THE "PARADE" OF SATISFIED THEATRE MANAGERS! FAIRS, 150 SATISFIED FAIR MANAGERS LAST YEAR TOLD THEIR FAIR FRIEND SECRETARIES HOW THEY ESTABLISHED RECORD BUSINESS WITH "GUS SUN SHOWS." RESULTS-THE BIGGEST BUSINESS IN "GUS SUN" HISTORY TO DATE WISE FAIR MANAGERS ARE BOOKING WITH "SUN" JOIN THE PARADE OF SATISFIED FAIR SECRETARIES! REALLY ESTABLISHED A POLICY OF "GUS SUN SENSATIONAL ACTS" FOR PARKS LAST PARKS! WE YEAR AND ACTUALLY BOOSTED ATTENDANCE 100%. RESULTS- 15 PARKS HAVE ALREADY SIGNED FOR "SUN ACTS" ON A "WEEKLY BUDGET BASIS." WISE PARK MANAGERS-JOIN THE "PARADE OF SATISFIED PARK MANAGERS." STAR RADIO ATTRACTIONS NAME BANDS HEADLINERS EVERYTHING IN THE LINE OF "TOP FLIGHT" ENTERTAINMENT FOR THEATRES, FAIRS, PARKS, SPECIAL EVENTS BOOKED ON SHORT NOTICE WRITE WIRE PHONE O EXECUTIVE OFFICES THE ENTIRE UPPER FLOOR OF 'SUN BOOKS SUN ROOKS/úf SUN'S REGENT THEATRE BLDG. ' .rÚNDER THE SUN" /UNDER THE. SUN" SPRINGFIELD OHIO BRANCH OFFICES BRANCA OFFICES CHICAGO COLUMBUS WOODS THEATRE BLDG. GRAND THEATRE BLDG. BOYLE WOOLFOLK, MGR. ERNIE CREECH, MGR. -

1940-03-22.Pdf

...._1'-- .......;::-- .... ?!3;.?ug -, I . -- ~...-- .-- i t!I~- -Bu,y lDiel Uat!· '~uy Easter seals .1 : - £Uter SeaLt I ~'-Help I Biillg Joy to cnppie<1 I ·~ Crippled Boy~ wJ"d-'GiJis CbildreD I lVSiW 1_' . Volum~ )69, Number 39 $1.50 ~ER ~AR IN ADV~~~ ~North~neGTonpsl,,;:~~Ub_G~';~ift~eaIil.SefJPOll J * _.~_ :Juvenile '1rial'; ~IPresidesat CQur~!NOttbYille'S rIVe SponsorInler"pubi- "", -,:jWillMar;~Ea~ter : 1 " .~' IsLegion Projecl ; ~hurches To .Hol~ Banqu~t,March~71 JSDndaY-~II.Vdlagel ~ ~. 'Tuesday, Mar.-26i Ire ·o.:eSer~ces Philip _ Apter ana W.eish! - '~~';<Lrrhree Churche; Plan __S~·j, ' - Villag~~s Will Participate T1u'ee Protestant Chuh:!h<!s . _.}. t~ ~~'ili! - , in:' Male QUar~et Will -Bef Y,: ~"""~ _ 1.1.1 ~ rille Servic:es; Min~$tersl' -', ' , , ""-~~A:\~<' Court Proep.dure-;-_!;?etroit - Unjte for Afternoon Ob- _ Feat1;t;'?s of Progra~. at: - : ~?~ <'}'~;",1:~~'~JtPrepat'"~o-S~ecial S'ermons} />'- ~ , w- :<:;<~! Offidals Assist Amerman,' r servance; LutheraD5 an!! P P~~byt~rian __Chur('h;~ . ";'. ':: ttt~~~;;i f~~ ;V0rshI _ Hours - , t? ,.J,f Of iJ ~ i!1'1'",~\.;;~< ~ h; Owe~-and¥r~: q~tholis Meet in E.venin~ tfarris0J?-Presid~s 1 ~>- _, ISpecIal Easter MUSl~! •. " . ~~ , ~t, ~ t' ~)J3~Y Will Be 'Tried' I ~StQres·Win~iQ5e -?ctaRans. 'Exchangit~ and Leg-; I ~~ter mil be ,r,ar.ced m. N0x:th-~" l <:) 'J \ ,,'I>, ""'~ ;~~ I~.. :I. ~l A J;veIllie :oun_m~k tnal F."}:J In observ== of GOOd Friday fonIlair~.smIl spoiisoi" thlJ .first I:~]]:s~nday.",M:U-_ 2~. _\\i.~ servlces-lfiU~'" ~-__ •i' ••J)~ :11' '? '~' " !be llejd :~esa;ay e"enm~. -

OTR Digest (143) Winter 2014

> cu 0:::: ""O C: cu ..0 0 co OldDmelladio 'DIGESJ' Old Time Radio No. 143 Winter 2014 The Old Time Radio Oigesl is printed _BOOKS AND PAPER published and distributed by _,.._..... ..... -----·-------··----·- --4-· -·--... __ .., __ .,.____ - .. - -- RMS & Associates Edited by Bob Burchett We have one of Che htrgest selections in thr USA of out of print books and pa1,cr items on all aspec ts of radio broadcast in~- MAY 16& 11, 2014 Published quarterly four l11nes a year -----·--- -------------- ----------------- ·-·- -··-·----- One-year subscription is $15 per year Hoo ks: A large assortment of books on th~· history of broadcasting, Single copies $3.75 each Pasl issues are available. Make checks radio writ ing, stars' biographies. radio show-;. and radio play~. payable to Old Time Radio Digest. A lso books on hrnacl casting techniques. social impact l1f ,a<lio t' IC .. Business and editorial office ~phcmcl'a : RMS & Associates, 10280 Gunpowder Rd Materia l on speci fi e radio station-.;. radio scri pts. Florence. Kentucky 41042 advcr1ising literature, radio prc.:miums. NAB annual repo11s, etc. 859 2820333 ------------- --- ------- ·---------- bob [email protected] ORDER OUR CA TALOG Advertising rates as of January 1, 2013 Our fa.H ca,"/of!. (lt2 .'i) 11·m i-1s 11c>d in ./11/y :!U I rJ 011d i11chnh•t m•,•1 300 11e111 < Full page ad $20 size 4 5/8 x 7 i11c/r1d1111{ n 111ce varl<'t)' of items II e have 11<'1·,·r \ecn hc{o,-<' plus ,i 1111111bt•1 of Half page ad S10 size 4 5/8 x 3 oldjcNorites //,at were nn, 111<'111ded 111 our fa.If c·utalug /11mt 1te111.1 111 th,• Half page ad $10 size2x7 catafflg are .will avr1ilahle. -

Columbia Pictures: Portrait of a Studio

University of Kentucky UKnowledge Film and Media Studies Arts and Humanities 1992 Columbia Pictures: Portrait of a Studio Bernard F. Dick Click here to let us know how access to this document benefits ou.y Thanks to the University of Kentucky Libraries and the University Press of Kentucky, this book is freely available to current faculty, students, and staff at the University of Kentucky. Find other University of Kentucky Books at uknowledge.uky.edu/upk. For more information, please contact UKnowledge at [email protected]. Recommended Citation Dick, Bernard F., "Columbia Pictures: Portrait of a Studio" (1992). Film and Media Studies. 8. https://uknowledge.uky.edu/upk_film_and_media_studies/8 COLUMBIA PICTURES This page intentionally left blank COLUMBIA PICTURES Portrait of a Studio BERNARD F. DICK Editor THE UNIVERSITY PRESS OF KENTUCKY Copyright © 1992 by The University Press of Kentucky Paperback edition 2010 Scholarly publisher for the Commonwealth, serving Bellarmine University, Berea College, Centre College of Kentucky, Eastern Kentucky University, The Filson Historical Society, Georgetown College, Kentucky Historical Society, Kentucky State University, Morehead State University, Murray State University, Northern Kentucky University, Transylvania University, University of Kentucky, University of Louisville, and Western Kentucky University. All rights reserved. Editorial and Sales Offices: The University Press of Kentucky 663 South Limestone Street, Lexington, Kentucky 40508-4008 www.kentuckypress.com Cataloging-in-Publication Data for the hardcover edition is available from the Library of Congress ISBN 978-0-8131-3019-4 (pbk: alk. paper) This book is printed on acid-free recycled paper meeting the requirements of the American National Standard for Permanence in Paper for Printed Library Materials. -

Torrance Herald

TORRANCE HERALD. To THUB8PAY. MAY i, Latest .statistic: weighed but three pounds ami' 5-1 averaKe length JTwo Babies Born 'Pay-off' Attempt eight ounces. She lost eight i Here During Week ounces the second week but now Bl In City Politics Two babies were born during appears to have a firm grip on e past week at Torramv Mem life. The baby Is .still confined orial hospital. They were: A in the Incubator but may be re Hit by Citizens daughter to Mr. and Mrs: J. Y. leased to within M H e n o, Hermosa Beach, last (Contlnm-.l from Pago 1-A) Thursday, and a daughter K UKiiinsl tlic iisiirimtion of our Mr. and Mrs. J. M. Splcer, Man Belgium te the most densely right to linlnl4Trr.pt. (! son ice hattan Beach, last Friday, populated country In E u r o p e. nf pnlilir (iffiri'rs UN Inns as daughter of There are about 710 persons to Ihnui* otricors arc giving snt- and Mrs. Fernand De Passe of i the square mile. i«ric;'t<ir.v Hprvicc. We helievo 927 Arlington avenue, who was -- -- thut Rpnorully RpeaklnK, the a premature arrival April 10,1 A Philadelphia!! favors putting various departments of the now tips the beam at four [old jokes under instead Of on city are \W\I\K operated In an pounds, When born, the baby the ether. efficient inunnvr. If reason for criticism exists, It Is the Amer ican way to give the offend- lag official mi opportunity of ( niifiirinlng to the policies of the nc\v administration. -

A Statistical Survey of Sequels, Series Films, Prequels

SEQUEL OR TITLE YEAR STUDIO ORIGINAL TV/DTV RELATED TO DIRECTOR SERIES? STARRING BASED ON RUN TIME ON DVD? VIEWED? NOTES 1918 1985 GUADALUPE YES KEN HARRISON WILLIAM CONVERSE-ROBERTS,HALLIE FOOTE PLAY 94 N ROY SCHEIDER, HELEN 2010 1984 MGM NO 2001: A SPACE ODYSSEY PETER HYAMS SEQUEL MIRREN, JOHN LITHGOW ORIGINAL 116 N JONATHAN TUCKER, JAMES DEBELLO, 100 GIRLS 2001 DREAM ENT YES DTV MICHAEL DAVIS EMANUELLE CHRIQUI, KATHERINE HEIGL ORIGINAL 94 N 100 WOMEN 2002 DREAM ENT NO DTV 100 GIRLS MICHAEL DAVIS SEQUEL CHAD DONELLA, JENNIFER MORRISON ORIGINAL 98 N AKA - GIRL FEVER GLENN CLOSE, JEFF DANIELS, 101 DALMATIANS 1996 WALT DISNEY YES STEPHEN HEREK JOELY RICHARDSON NOVEL 103 Y WILFRED JACKSON, CLYDE GERONIMI, WOLFGANG ROD TAYLOR, BETTY LOU GERSON, 101 DALMATIANS (Animated) 1951 WALT DISNEY YES REITHERMAN MARTHA WENTWORTH, CATE BAUER NOVEL 79 Y 101 DALMATIANS II: PATCH'S LONDON BOBBY LOCKWOOD, SUSAN BLAKESLEE, ADVENTURE 2002 WALT DISNEY NO DTV 101 DALMATIANS (Animated) SEQUEL SAMUEL WEST, KATH SOUCIE ORIGINAL 70 N GLENN CLOSE, GERARD DEPARDIEU, 102 DALMATIANS 2000 WALT DISNEY NO 101 DALMATIANS KEVIN LIMA SEQUEL IOAN GRUFFUDD, ALICE EVANS ORIGINAL 100 N PAUL WALKER, TYRESE GIBSON, 2 FAST, 2 FURIOUS 2003 UNIVERSAL NO FAST AND THE FURIOUS, THE JOHN SINGLETON SEQUEL EVA MENDES, COLE HAUSER ORIGINAL 107 Y KEIR DULLEA, DOUGLAS 2001: A SPACE ODYSSEY 1968 MGM YES STANLEY KUBRICK RAIN NOVEL 141 Y MICHAEL TREANOR, MAX ELLIOT 3 NINJAS 1992 TRI-STAR YES JON TURTLETAUB SLADE, CHAD POWER, VICTOR WONG ORIGINAL 84 N MAX ELLIOT SLADE, VICTOR WONG, 3 NINJAS KICK BACK 1994 TRI-STAR NO 3 NINJAS CHARLES KANGANIS SEQUEL SEAN FOX, J. -

A Brief History of Comic Book Movies, DOI 10.1007/978-3-319-47184-6 80 BIBLIOGRAPHY

BIBLIOGRAPHY Akitunde, Anthonia. 2011 X-Men: First Class. Fast Company, May 18. Web. Alaniz, José. 2015. Death, Disability, and the Superhero: The Silver Age and Beyond. Jackson: University Press of Mississippi. Print. Arnold, Andrew. 2001. Anticipating a Ghost World. Time, July 20. Web. Booker, M. K. 2007. May Contain Graphic Material: Comic Books, Graphic Novels, and Film. Westport, CT: Praeger Publishers. Print. ———. 2001. Batman Unmasked: Analyzing a Cultural Icon. New York: Bloomsbury Academic. Print. Brenner, Robin E. 2007. Understanding Manga and Animé. Westport, CT: Libraries Unlimited. Print. Brock, Ben. 2014, February 4. Bryan Singer Says Superman Returns was Made for “More of a Female Audience,” Sequel Would’ve Featured Darkseid. The Playlist. Web. Brooker, Will. 2012. Hunting the Dark Knight: Twenty-First Century Batman. London: I.B. Tauris. Print. Brown, Steven T. 2010. Tokyo Cyberpunk: Posthumanism in Japanese Visual Culture. New York: Palgrave Macmillan. Print. ———. 2006. Cinema Animé: Critical Engagements with Japanese Animation. New York: Palgrave Macmillan. Print. Bukatman, Scott. 2016. Hellboy’s World: Comics and Monsters on the Margins. Berkeley: University of California Press. Print. ———. 2011. Why I Hate Superhero Movies. Cinema Journal 50(3): 118–122. Print. Burke, Liam. 2015. The Comic Book Film Adaptation: Exploring Modern Hollywood’s Leading Genre. University Press of Mississippi. Print. ———. 2008. Superhero Movies. Harpenden, England: Pocket Essentials. Print. © The Author(s) 2017 79 W.W. Dixon, R. Graham, A Brief History of Comic Book Movies, DOI 10.1007/978-3-319-47184-6 80 BIBLIOGRAPHY Caldecott, Stratford. 2013. Comic Book Salvation. Chesterton Review 39(1/2): 283–288. Print. Chang, Justin. 2014, August 20. -

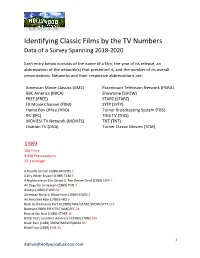

Identifying Classic Films by the TV Numbers Data of a Survey Spanning 2018-2020

Identifying Classic Films by the TV Numbers Data of a Survey Spanning 2018-2020 Each entry below consists of the name of a film, the year of its release, an abbreviation of the network(s) that presented it, and the number of its overall presentations. Networks and their respective abbreviations are: American Movie Classics (AMC) Paramount Television Network (PARA) BBC America (BBCA) Showtime (SHOW) FREE (FREE) STARZ (STARZ) FX Movie Channel (FXM) SYFY (SYFY) Home Box Office (HBO) Turner Broadcasting System (TBS) IFC (IFC) THIS TV (THIS) MOVIES! TV Network (MOVIES) TNT (TNT) Ovation TV (OVA) Turner Classic Movies (TCM) 1989 150 Films 4,958 Presentations 33,1 Average A Deadly Silence (1989) MOVIES 1 A Dry White Season (1989) TCM 4 A Nightmare on Elm Street 5: The Dream Child (1989) SYFY 7 All Dogs Go to Heaven (1989) THIS 7 Always (1989) STARZ 69 American Ninja 3: Blood Hunt (1989) STARZ 2 An Innocent Man (1989) HBO 5 Back to the Future Part II (1989) MAX/STARZ/SHOW/SYFY 272 Batman (1989) SYFY/TNT/AMC/IFC 24 Best of the Best (1989) STARZ 16 Bill & Ted’s Excellent Adventure (1989) STARZ 140 Black Rain (1989) SHOW/MOVIES/MAX 85 Blind Fury (1989) THIS 15 1 [email protected] Born on the Fourth of July (1989) MAX/BBCA/OVA/STARZ/HBO 201 Breaking In (1989) THIS 5 Brewster’s Millions (1989) STARZ 2 Bridge to Silence (1989) THIS 9 Cabin Fever (1989) MAX 2 Casualties of War (1989) SHOW 3 Chances Are (1989) MOVIES 9 Chattahoochi (1989) THIS 9 Cheetah (1989) TCM 1 Cinema Paradise (1989) MAX 3 Coal Miner’s Daughter (1989) STARZ 1 Collision -

Catholic Digest

Motion Picture Classifications Ordination in Erie student body voluntarily attend a special Peace Day Mass and re- Msgr. Ryan's Article Issued by the National Legion of Decency To Be Followed by ceive Holy Communion for the in- On Justice Black Put tention of the Holy Father that Only the more recent films are listed here; space limitations make Mass at St. Brigid's Into Congress Record ft impossible to publish a complete list. The classification of any pic- peace may return to the world. At lures, however, will be gladly given to those who inquire at this office Rev. Paul Micheli, son of Mr. a convocation of faculty and stu- Phone COurt 0661. and Ifra Eugene Micheli, of St dent body a lay speaker would Washington, Apr. 10 (JO—An Parishes, organizations and individuals desiring to receive the Brigid'a Pariah, Pittsburgh, will present the Pope's program for article '"Due Process' and Mr. weekly reports issued by the National Legion of Decency caw order be ordained to the priesthood for peace. Justice Black", which Rt Rev. them by writing to the Legion's offices, *85 Madison Ave., New York the Diocese of Erie in St Peter's In the evening an outdoor rally Msgr. John A. Ryan wrote for the The chargc for the reports is $2.00 per year. A complete list gMna would be held under the direction April issue of "The Catholic the classification of older films may be obtained at the same address. Cathedral, Erie, on Thursday ] morning, May 2, by Most Rev. of student leaden, closing with World", has been Inserted in the ¡John Mark Gannon, Bishop of Erie, Benediction of the Blessed Sacra- "Congressional Record" on the CLASS A-l:—Unobjectionable and will celebrate his First Solemn ment motion of Senator Robert F. -

THE SPECTRUM for EVERYBODY =IN V'ulume L"7

SKIRT AND SWEATER FUN AT THE PROM TONIGHT FIELD HOUSE TONIGHT FOR EVERYBODY THE SPECTRUM FOR EVERYBODY =IN v'uLUME L"7. Z 545a STATE COLLEGE STATION, NORTH DAKOTA, FRIDAY, MARCH 8, 1940 NUMBER 23 Tuxedos. Won't BISON PICTURE Eversull, Sevrinson, Beyers Madrigal Club To Be SCHEDULE Cafeteria Will Monday Convo Feature To be taken in the YMCA Work At Frosh auditorium. - Represent AC At St. Louis Professor Hywel C. Rowland's Serve Turkey Watch Old Main bulletin board Among nearly 12,000 educators as- in student participation in college gov- Madrigal Club from the University Prom Tonight for notices of group pictures to sembled at St. Louis, Feb. 24-29, were ernment, faculty committees, and of North Dakota will make its an- For All-College be taken next week. three NDAC faculty members, Pres. other administrative activities." nual appearance at NDAC in con- vocation, Monday at 11:20. This Field House Scene Of All senior proofs must be re- Frank L. Eversull, Dean C. A. Sevrin- Dean Sevrinson was particularly Finnegan To Announce famous choral group comes to turned to Voss and all fraternity son, and Dr. Otto J. Beyers. The pleased with the convention theme, Freshman Frolic; Novel Fargo to the Plymouth Con- New Basketball Captain and sorority glossy prints must be occasion was the seventeenth annual "What Is Right with the Schools ?" Grand March Featured brought to the Bison office by convention of the American Associ- which he observed, "approached edu- gregational church Sunday eve- At Dinner Tuesday Night ning at 8 p. m. and will be housed By BUD DeCAMP March 15.