Open Hearing on Foreign Influence Operations' Use

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

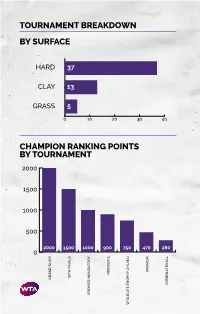

Tournament Breakdown by Surface Champion Ranking Points By

[Figure: "Tournament Breakdown by Surface" (55 WTA tournaments: Hard 37, Clay 13, Grass 5); "Champion Ranking Points by Tournament" (values shown: 2000, 1500, 1000, 900, 750, 470, 280; categories shown: Grand Slam, WTA Finals, Premier Mandatory, Premier, International, WTA Elite Trophy Zhuhai); "Tournaments by Region / by Country" (China 8, United States of America 7, Australia 3, Great Britain 3, Russia 3, Canada 2, Germany 2, Italy 2, Japan 2, Mexico 2, Spain 2, Switzerland 2, and one each in Austria, Colombia, Czech Republic, France, Hong Kong, Hungary, Luxembourg, Morocco, Netherlands, New Zealand, Qatar, Romania, South Korea, Thailand, Turkey, United Arab Emirates, Uzbekistan).] International Tennis Federation: As the world governing body of tennis, the International Tennis Federation (ITF) is responsible for every level of the sport including the regulation of rules and the future development of the game. Based in London, the ITF currently has 210 member nations and six regional associations, which administer the game in their respective areas, in close consultation with the ITF. The ITF is committed to promoting tennis around the world and encouraging as many people as possible to [...] Davis Cup by BNP Paribas and women’s Fed Cup by BNP Paribas are the largest annual international team competitions in sport and most prized in the ITF’s event portfolio. Both have a rich history and have consistently attracted the best players from each passing generation. Further information is available at www.daviscup.com and www.fedcup.com. The Olympic and Paralympic Tennis Events are also an important part of the ITF’s responsibilities, with the 2020 events being held in Tokyo. -

Von Influencer*Innen Lernen Youtube & Co

STUDIEN. Marius Liedtke and Daniel Marwecki: Von Influencer*innen lernen. YouTube & Co. als Spielfelder linker Politik und Bildungsarbeit (Learning from Influencers: YouTube & Co. as Playing Fields for Left-Wing Politics and Educational Work). Study commissioned by the Rosa-Luxemburg-Stiftung. MARIUS LIEDTKE works in Berlin as a freelance media professional and editor. He is also currently completing his master's degree in Sociocultural Studies at the Europa-Universität Viadrina in Frankfurt (Oder). In his thesis he continues his research on left-wing political influencers on YouTube. DANIEL MARWECKI is a lecturer in International Politics and History at the University of Leeds. He also holds teaching appointments at SOAS University of London, where he completed his doctorate in 2018. He has no liking for academic jargon and prefers progressive knowledge for everyone. IMPRINT: STUDIEN 7/2019, 1st edition, is published by the Rosa-Luxemburg-Stiftung. Responsible under German press law (V. i. S. d. P.): Henning Heine. Franz-Mehring-Platz 1 · 10243 Berlin · www.rosalux.de. ISSN 2194-2242 · Editorial deadline: November 2019. Cover illustration: Frank Ramspott/iStockphoto. Copy editing: TEXT-ARBEIT, Berlin. Layout/production: MediaService GmbH Druck und Kommunikation. Printed on Circleoffset Premium White, 100% recycled. Contents: Foreword · Summary · Introduction · Part 1: The Potential of YouTube -

Eastern Progress Eastern Progress 1972-1973

Eastern Progress Eastern Progress 1972-1973 Eastern Kentucky University Year 1973 Eastern Progress - 12 Apr 1973 Eastern Kentucky University This paper is posted at Encompass. http://encompass.eku.edu/progress 1972-73/26 Elections To Be Held Wednesday Four Candidates Vie For Student Association Presidential Position there we can get it up here," Kelley-Hughes in dormitories, Kelley and Peters-Clay He also feels that there should Slade-Rowland they planned to use the Gray-Vaughn Gray continued. Hughes see a realistic fee for be open visitation during the Progress in a controlled students, payable at weekend with hours being, "say, Steve Slade, a junior from manner, Rowland was quick to However, Miss Vaughn and The second set of candidates "Government is to listen to its Gray do not feel that everyone for office are Bob Kelley, a registration for the ser- Cynthiana, and Steve Rowland, explain. Gary Gray, sophomore from constituency and do those "I would have no...uh not should live off campus. "Let senior broadcasting major from vice.' a junior from Louisville are the Royal Oak, Michigan, and Carla things which the constituency even try to have any influence freshmen live in the dorm one Cincinnati, Ohio, and Bill A "realistic policy of open fourth pair of candidates to seek Vaughn, sophomore from wants it to do," said Dave over the Progress. If you have year...so they can mature just a Hughes, a junior pre-med major visitation" is also on the plat- the offices of president and vice- Middlesboro, are the first two form. -

Democratic Strain and Popular Discontent in Europe: Responding to the Challenges Facing Liberal Democracies

DEMOCRATIC STRAIN AND POPULAR DISCONTENT IN EUROPE: RESPONDING TO THE CHALLENGES FACING LIBERAL DEMOCRACIES Report of the Standing Committee on Foreign Affairs and International Development Michael Levitt, Chair JUNE 2019 42nd PARLIAMENT, 1st SESSION Published under the authority of the Speaker of the House of Commons SPEAKER’S PERMISSION The proceedings of the House of Commons and its Committees are hereby made available to provide greater public access. The parliamentary privilege of the House of Commons to control the publication and broadcast of the proceedings of the House of Commons and its Committees is nonetheless reserved. All copyrights therein are also reserved. Reproduction of the proceedings of the House of Commons and its Committees, in whole or in part and in any medium, is hereby permitted provided that the reproduction is accurate and is not presented as official. This permission does not extend to reproduction, distribution or use for commercial purpose of financial gain. Reproduction or use outside this permission or without authorization may be treated as copyright infringement in accordance with the Copyright Act. Authorization may be obtained on written application to the Office of the Speaker of the House of Commons. Reproduction in accordance with this permission does not constitute publication under the authority of the House of Commons. The absolute privilege that applies to the proceedings of the House of Commons does not extend to these permitted reproductions. Where a reproduction includes briefs to a Standing Committee of the House of Commons, authorization for reproduction may be required from the authors in accordance with the Copyright Act. -

Online Dialogue and Interaction Disruption. A Latin American Government’s Use of Twitter Affordances to Dissolve Online Criticism

Online Dialogue and Interaction Disruption. A Latin American Government’s Use of Twitter Affordances to Dissolve Online Criticism Dialogo en línea e interacciones interrumpidas. El uso que un gobierno Latinoamericano hace de las posibilidades tecnológicas de Twitter para disolver críticas en línea Carlos Davalos University of Wisconsin-Madison Orcid http://orcid.org/0000-0001-6846-1661 [email protected] Abstract: Few academic studies have focused on how Latin American governments operate online. Political communication studies focused on social media interactions have overwhelmingly dedicated efforts to understand how regular citizens interact and behave online. Through the analysis of hashtags and other online strategies that were used during Mexican President Enrique Peña Nieto’s (EPN) term to critique or manifest unconformity regarding part of the government’s performance, this study observes how members of a Latin American democratic regime weaponized a social media platform to dissipate criticism. More specifically, it proposes that the manipulation of social media affordances can debilitate essential democratic attributes like freedom of expression. Using a qualitative approach, consisting of observation, textual analysis, and online ethnography, findings show that some Mexican government’s manipulation of inconvenient Twitter conversations could impact or even disrupt potential offline crises. Another objective of the presented research is to set a baseline for future efforts focused on how Latin American democratic regimes behave and generate digital communication on social media platforms. Keywords: Mexico, citizens, government, social media, disruption, social capital, affordances, weaponization, Twitter, online, criticisms Resumen: Few academic projects aim to understand how Latin American governments operate online. -

Hate Crime Report 031008

HATE CRIMES IN THE OSCE REGION - INCIDENTS AND RESPONSES. ANNUAL REPORT FOR 2007. Warsaw, October 2008. Foreword: In 2007, violent manifestations of intolerance continued to take place across the OSCE region. Such acts, although targeting individuals, affected entire communities and instilled fear among victims and members of their communities. The destabilizing effect of hate crimes and the potential for such crimes and incidents to threaten the security of individuals and societal cohesion – by giving rise to wider-scale conflict and violence – was acknowledged in the decision on tolerance and non-discrimination adopted by the OSCE Ministerial Council in Madrid in November 2007. [1] The development of this report is based on the task the Office for Democratic Institutions and Human Rights (ODIHR) received “to serve as a collection point for information and statistics on hate crimes and relevant legislation provided by participating States and to make this information publicly available through … its report on Challenges and Responses to Hate-Motivated Incidents in the OSCE Region”. [2] A comprehensive consultation process with governments and civil society takes place during the drafting of the report. In February 2008, ODIHR issued a first call to the nominated national points of contact on combating hate crime, to civil society, and to OSCE institutions and field operations to submit information for this report. The requested information included updates on legislative developments, data on hate crimes and incidents, as well as practical initiatives for combating hate crime. I am pleased to note that the national points of contact provided ODIHR with information and updates on a more systematic basis. -

Indices of the Comparative Civilizations Review, No. 1-83

Comparative Civilizations Review, Volume 84, Number 84, Spring 2021, Article 14 (2021). Indices of the Comparative Civilizations Review, No. 1-83 Follow this and additional works at: https://scholarsarchive.byu.edu/ccr Part of the Comparative Literature Commons, History Commons, International and Area Studies Commons, Political Science Commons, and the Sociology Commons Recommended Citation (2021) "Indices of the Comparative Civilizations Review, No. 1-83," Comparative Civilizations Review: Vol. 84 : No. 84 , Article 14. Available at: https://scholarsarchive.byu.edu/ccr/vol84/iss84/14 This End Matter is brought to you for free and open access by the Journals at BYU ScholarsArchive. It has been accepted for inclusion in Comparative Civilizations Review by an authorized editor of BYU ScholarsArchive. For more information, please contact [email protected], [email protected]. Indices of the Comparative Civilizations Review, No. 1-83 A full history of the origins of the Comparative Civilizations Review may be found in Michael Palencia-Roth’s (2006) "Bibliographical History and Indices of the Comparative Civilizations Review, 1-50." (Comparative Civilizations Review: Vol. 54: Pages 79 to 127.) The current indices to CCR will exist as an article in the hardcopy publication, as an article in the online version of CCR, and online as a separate searchable document accessed from the CCR website. The popularity of CCR papers will wax and wane with time, but as of September 14, 2020, these were the ten most-popular, based on the average number of full-text downloads per day since the paper was posted. -

Ankara University Institute of Social Sciences

ANKARA UNIVERSITY INSTITUTE OF SOCIAL SCIENCES FACULTY OF COMMUNICATIONS COMPARATIVE ANALYSIS OF IMPROVING NEWS TRUSTWORTHINESS IN KENYA AND TURKEY IN THE WAKE OF FAKE NEWS IN DIGITAL ERA. MASTER’S THESIS ABDINOOR ADEN MAALIM SUPERVISOR DR. ÖĞR. ÜYESİ ERGİN ŞAFAK DİKMEN ANKARA- 2021 THESIS APPROVAL PAGE: REPUBLIC OF TURKEY, ANKARA UNIVERSITY, INSTITUTE OF SOCIAL SCIENCES (DEPARTMENT OF MEDIA AND COMMUNICATION STUDIES). Comparative Analysis of Increasing News Trustworthiness in Turkey and Kenya as a Result of Fake News in the Digital Age (MASTER’S THESIS). Thesis Supervisor: DR. ÖĞR. Üyesi ERGİN ŞAFAK DİKMEN. THESIS JURY MEMBERS (Name and Surname, Signature): 1- PROF.DR. ABDULREZAK ALTUN 2- DR. ÖĞR. Üyesi ERGİN ŞAFAK DİKMEN 3- Doç. Dr. FATMA BİLGE NARİN. Thesis Defense Date: 17-06-2021. To the Directorate of the Institute of Social Sciences, Ankara University, Republic of Turkey: I declare that all information in my master’s - doctoral/integrated doctoral thesis titled “Comparative Analysis of Increasing News Trustworthiness in Turkey and Kenya as a Result of Fake News in the Digital Age (Ankara.2021)”, which I prepared under the supervision of DR. ÖĞR. Üyesi ERGİN ŞAFAK DİKMEN, was collected and presented in accordance with academic rules and the principles of ethical conduct; that I have fully cited in the text and in the bibliography the information I took from other sources; that I acted in accordance with scientific research and ethical rules throughout the study; and that I will accept all legal consequences should the contrary come to light. Date: 3/8/2021 Name-Surname and Signature: ABDINOOR ADEN MAALIM. DECLARATION I hereby declare with honour that this Master’s thesis is my original work and has been written by myself in accordance with the academic rules and ethical requirements. -

Christie and Mrs. McCain

Oliver Harper: [0:11] It's my great pleasure to have been asked yesterday to introduce Cindy McCain and Governor Christie. The Harpers have had the special privilege of being friends with the McCains for many years, social friends, political friends, and great admirers of the family. [0:33] Cindy is a remarkable person. She's known for her incredible service to the community, to the country, and to the world. She has a passion for doing things right and making the world better. [0:48] Cindy, after her education at USC in special ed, became a teacher at Agua Fria High School in special ed and had a successful career there. She also progressed to become the leader of the family company, Hensley & Company, and continues to lead them to great heights. [1:13] Cindy's interest in the world and special issues has become very apparent over the years. She serves on the board of the HALO Trust. She serves on the board of Operation Smile and of CARE. [1:31] Her special leadership as part of the McCain Institute and her special interest and where her heart is now is in conquering the terrible scourge of human trafficking and bringing those victims of human trafficking to a full, free life as God intended them to have. [1:57] I want to tell you just a quick personal experience with the McCains. Sharon and I will socialize with them often. We'll say, "What are you going to be doing next week, Sharon or Ollie?" [2:10] Then we'll ask the McCains what they're doing. -

Different Shades of Black. the Anatomy of the Far Right in the European Parliament

Different Shades of Black. The Anatomy of the Far Right in the European Parliament Ellen Rivera and Masha P. Davis IERES Occasional Papers, no. 2, May 15, 2019 Transnational History of the Far Right Series Cover Photo: Protesters of right-wing and far-right Flemish associations take part in a protest against Marrakesh Migration Pact in Brussels, Belgium on Dec. 16, 2018. Editorial credit: Alexandros Michailidis / Shutterstock.com @IERES2019 Transnational History of the Far Right Series: A Collective Research Project led by Marlene Laruelle At a time when global political dynamics seem to be moving in favor of illiberal regimes around the world, this research project seeks to fill in some of the blank pages in the contemporary history of the far right, with a particular focus on the transnational dimensions of far-right movements in the broader Europe/Eurasia region. Of all European elections, the one scheduled for May 23-26, 2019, which will decide the composition of the 9th European Parliament, may be the most unpredictable, as well as the most important, in the history of the European Union. Far-right forces may gain unprecedented ground, with polls suggesting that they will win up to one-fifth of the 705 seats that will make up the European parliament after Brexit. [1] The outcome of the election will have a profound impact not only on the political environment in Europe, but also on the transatlantic and Euro-Russian relationships. -

STATEMENT on UNIDENTIFIED FLYING OBJECTS Submitted to The

Contents: INTRODUCTION · SCOPE AND BACKGROUND OF PRESENT COMMENTS · THE UNCONVENTIONAL NATURE OF THE UFO PROBLEM · SOME ALTERNATIVE HYPOTHESES · SOME REMARKS ON INTERVIEWING EXPERIENCE AND TYPES OF UFO CASES ENCOUNTERED · WHY DON'T PILOTS SEE UFOs? · WHY ARE UFOs ONLY SEEN BY LONE INDIVIDUALS, WHY NO MULTIPLE-WITNESS SIGHTINGS? · WHY AREN'T UFOs EVER SEEN IN CITIES? WHY JUST IN OUT-OF-THE-WAY PLACES? · WHY DON'T ASTRONOMERS EVER SEE UFOs? · METEOROLOGISTS AND WEATHER OBSERVERS LOOK AT THE SKIES FREQUENTLY. WHY DON'T THEY SEE UFOs? · DON'T WEATHER BALLOONS AND RESEARCH BALLOONS ACCOUNT FOR MANY UFOs? · WHY AREN'T UFOs EVER TRACKED BY RADAR? -

Technological Seduction and Self-Radicalization

Journal of the American Philosophical Association () – © American Philosophical Association DOI:./apa.. Technological Seduction and Self-Radicalization ABSTRACT: Many scholars agree that the Internet plays a pivotal role in self-radicalization, which can lead to behaviors ranging from lone-wolf terrorism to participation in white nationalist rallies to mundane bigotry and voting for extremist candidates. However, the mechanisms by which the Internet facilitates self-radicalization are disputed; some fault the individuals who end up self-radicalized, while others lay the blame on the technology itself. In this paper, we explore the role played by technological design decisions in online self-radicalization in its myriad guises, encompassing extreme as well as more mundane forms. We begin by characterizing the phenomenon of technological seduction. Next, we distinguish between top-down seduction and bottom-up seduction. We then situate both forms of technological seduction within the theoretical model of dynamical systems theory. We conclude by articulating strategies for combating online self-radicalization. KEYWORDS: technological seduction, virtue epistemology, digital humanities, dynamical systems theory, philosophy of technology, nudge . Self-Radicalization The Charleston church mass shooting, which left nine people dead, was planned and executed by white supremacist Dylann Roof. According to prosecutors, Roof did not adopt his convictions ‘through his personal associations or experiences with white supremacist groups or individuals or others’ (Berman : ). Instead, they developed through his own efforts and engagement online. The same is true of Jose Pimentel, who in began reading online Al Qaeda websites, after which he built homemade pipe bombs in an effort to target veterans returning from Iraq and Afghanistan (Weimann ).