Facial Animation of Game Characters

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

UPA : Redesigning Animation

Bottini, C. (2016). UPA: Redesigning Animation. Doctoral thesis, School of Art, Design and Media, Nanyang Technological University, Singapore (https://hdl.handle.net/10356/69065, https://doi.org/10.32657/10356/69065). Downloaded from DR-NTU (https://dr.ntu.edu.sg) on 05 Oct 2021 20:18:45 SGT. A thesis submitted to the Nanyang Technological University in partial fulfillment of the requirement for the degree of Doctor of Philosophy, 2016. “Art does not reproduce the visible; rather, it makes visible.” Paul Klee, “Creative Credo”. Acknowledgments: When I started my doctoral studies, I could never have imagined what a formative learning experience it would be, both professionally and personally. I owe many people a debt of gratitude for all their help throughout this long journey. I deeply thank my supervisor, Professor Heitor Capuzzo; my co-supervisor, Giannalberto Bendazzi; and Professor Vibeke Sorensen, chair of the School of Art, Design and Media at Nanyang Technological University, Singapore, for showing sincere compassion and offering unwavering moral support during a personally difficult stage of this Ph.D. I am also grateful for all their suggestions, critiques and observations that guided me in this research project, as well as their dedication and patience. My gratitude goes to Tee Bosustow, who graciously ...

Computerising 2D Animation and the Cleanup Power of Snakes

Computerising 2D Animation and the Cleanup Power of Snakes. Fionnuala Johnson. Submitted for the degree of Master of Science, University of Glasgow, The Department of Computing Science. January 1998. ProQuest Number: 13818622. All rights reserved. INFORMATION TO ALL USERS: The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. ProQuest 13818622. Published by ProQuest LLC (2018). Copyright of the Dissertation is held by the Author. All rights reserved. This work is protected against unauthorized copying under Title 17, United States Code. Microform Edition © ProQuest LLC. ProQuest LLC, 789 East Eisenhower Parkway, P.O. Box 1346, Ann Arbor, MI 48106-1346. Abstract: Traditional 2D animation remains largely a hand-drawn process. Computer-assisted animation systems do exist. Unfortunately, the overheads these systems incur have prevented them from being introduced into the traditional studio. One such problem area involves the transferral of the animator’s line drawings into the computer system. The systems that are presently available require the images to be over-cleaned prior to scanning. The resulting raster images are of unacceptable quality. Therefore the question this thesis examines is: given a sketchy raster image, is it possible to extract a cleaned-up vector image? Current solutions fail to extract the true line from the sketch because they possess no knowledge of the problem area.

The University of Chicago Looking at Cartoons

THE UNIVERSITY OF CHICAGO. LOOKING AT CARTOONS: THE ART, LABOR, AND TECHNOLOGY OF AMERICAN CEL ANIMATION. A DISSERTATION SUBMITTED TO THE FACULTY OF THE DIVISION OF THE HUMANITIES IN CANDIDACY FOR THE DEGREE OF DOCTOR OF PHILOSOPHY, DEPARTMENT OF CINEMA AND MEDIA STUDIES, BY HANNAH MAITLAND FRANK. CHICAGO, ILLINOIS, AUGUST 2016. FOR MY FAMILY. IN MEMORY OF MY FATHER. "Apparently he had examined them patiently picture by picture and imagined that they would be screened in the same way, failing at that time to grasp the principle of the cinematograph." (Flann O’Brien) CONTENTS:
LIST OF FIGURES
ABSTRACT
ACKNOWLEDGMENTS
INTRODUCTION: LOOKING AT LABOR
CHAPTER 1: ANIMATION AND MONTAGE; or, Photographic Records of Documents
CHAPTER 2: A VIEW OF THE WORLD: Toward a Photographic Theory of Cel Animation
CHAPTER 3: PARS PRO TOTO: Character Animation and the Work of the Anonymous Artist
CHAPTER 4: THE MULTIPLICATION OF TRACES: Xerographic Reproduction and One Hundred and One Dalmatians

Animação De Personagem 3D: Expressão Facial

ANIMAÇÃO DE PERSONAGEM 3D: EXPRESSÃO FACIAL / 3D CHARACTERS ANIMATION: FACIAL EXPRESSION / LA ANIMACIÓN DE PERSONAJES 3D: EXPRESIÓN FACIAL. José Carlos Miranda * ([email protected]). SUMMARY: In recent years, there has been growing interest in the 3D animation of characters and facial expressions. This is related to the new participation of virtual actors in television, cinema, and the interactive games industry, among others. In these cases, the main goal is to create virtual characters that simulate the body and every detail of facial movement as realistically as possible. This article reviews the methods most commonly used in 3D animation. The skeleton-based method is the most widely used for creating the main movements, such as walking; blend-shape-based methods are used to create subtler deformations. In areas that demand greater control and realism, such as some facial muscles, deformation layers can be added. Typically, NURBS curves or FFD lattices are used to enhance certain facial features. Each method has advantages and disadvantages, so the best solution is to combine the different techniques. Keywords: Character animation, Deformation objects, Transformation, Skeleton animation, Facial expression. ABSTRACT: During the last years a growing interest in 3D animation of characters and facial expressions has emerged. This is related to the new participation of virtual actors in television, cinema and interactive games’ industries, among others. In these cases, the main goal is to create virtual characters that simulate the body and all the facial movement details in the most realistic possible way.
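The blend-shape (shape-mixing) method named in the abstract above is commonly expressed as a weighted sum of per-vertex offsets from a neutral face. The sketch below illustrates that general idea only; it is not taken from the article, and the two-vertex mesh, the target names `smile` and `jaw_open`, and the function `blend_shapes` are all hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_shapes(
    neutral: List[Vertex],
    targets: Dict[str, List[Vertex]],
    weights: Dict[str, float],
) -> List[Vertex]:
    """Return neutral + sum_i w_i * (target_i - neutral), vertex by vertex."""
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        target = targets[name]
        if len(target) != len(neutral):
            raise ValueError(f"target '{name}' has a different vertex count")
        for i, (n, t) in enumerate(zip(neutral, target)):
            for axis in range(3):
                # Add this target's weighted offset from the neutral pose.
                result[i][axis] += weight * (t[axis] - n[axis])
    return [tuple(v) for v in result]


# Hypothetical two-vertex "mouth" mesh, for illustration only.
NEUTRAL: List[Vertex] = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
TARGETS: Dict[str, List[Vertex]] = {
    "smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)],
    "jaw_open": [(0.0, -2.0, 0.0), (1.0, -2.0, 0.0)],
}

if __name__ == "__main__":
    # A half-strength smile moves each vertex halfway toward the smile target.
    print(blend_shapes(NEUTRAL, TARGETS, {"smile": 0.5}))
```

A weight of 0 leaves the neutral face unchanged and a weight of 1 reproduces the target exactly, which is why this approach suits the subtle deformations the abstract mentions. Skeleton-driven motion would be layered separately.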

Lokasi SMK Muhammadiyah 2 Klaten Utara (SMEA)

INDIVIDUAL REPORT: GUIDED FIELD PRACTICE (PRAKTIK LAPANGAN TERBIMBING, PLT). Location: SMK Muhammadiyah 2 Klaten Utara (SMEA), Jl. Mayor Kusmanto, Setran, Gergunung, Klaten Utara, Klaten, Jawa Tengah. Prepared to fulfill the assignment for the Guided Field Practice course, period 15 September 2017 to 15 November 2017. Prepared by: DYAH AYU MEGAWATI, NIM. 14520241023. INFORMATICS ENGINEERING EDUCATION STUDY PROGRAM, FACULTY OF ENGINEERING, UNIVERSITAS NEGERI YOGYAKARTA, 2017. FOREWORD: Praise be to Allah SWT, who has bestowed His abundant mercy, guidance, and blessings on the author, easing the completion of the Guided Field Practice (PLT) of Universitas Negeri Yogyakarta at SMK Muhammadiyah 2 Klaten Utara. This report is the result of the activities carried out during the PLT at SMK Muhammadiyah 2 Klaten Utara, which ran from 15 September 2017 to 15 November 2017. Throughout the Guided Field Practice, from preparation and implementation through to the writing of this report, the author received a great deal of help, support, and guidance from many parties. The author therefore humbly thanks: 1. Allah SWT, who granted mercy, guidance, and health during the PLT. 2. My beloved family, especially my parents, who gave moral and material support. 3. Prof. Sutrisna Aji Wibawa, M.Pd, Rector of Universitas Negeri Yogyakarta, who granted permission and the opportunity to carry out the PLT. 4. The Institute for Educational Development and Quality Assurance (Lembaga Pengembangan dan Penjaminan Mutu Pendidikan, LPPMP), which organized the PLT well. 5. Prof. Herman Dwi Surjono, Ph.D., supervising lecturer and PLT field supervisor, who provided guidance and monitoring through to the writing of this report. 6. Dra. Hj. Wafir, Principal of SMK Muhammadiyah 2 Klaten Utara, who granted permission, facilities, and infrastructure, as well as the opportunity for students to carry out the PLT program.

Exposing Minstrelsy and Racial Representation Within American Tap Dance Performances of The

UNIVERSITY OF CALIFORNIA, Los Angeles. Masks in Disguise: Exposing Minstrelsy and Racial Representation within American Tap Dance Performances of the Stage, Screen, and Sound Cartoon, 1900-1950. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Culture and Performance by Brynn Wein Shiovitz, 2016. © Copyright by Brynn Wein Shiovitz 2016. ABSTRACT OF THE DISSERTATION: Masks in Disguise: Exposing Minstrelsy and Racial Representation within American Tap Dance Performances of the Stage, Screen, and Sound Cartoon, 1900-1950, by Brynn Wein Shiovitz, Doctor of Philosophy in Culture and Performance, University of California, Los Angeles, 2016. Professor Susan Leigh Foster, Chair. Masks in Disguise: Exposing Minstrelsy and Racial Representation within American Tap Dance Performances of the Stage, Screen, and Sound Cartoon, 1900-1950, looks at the many forms of masking at play in three pivotal, yet untheorized, tap dance performances of the twentieth century in order to expose how minstrelsy operates through various forms of masking. The three performances that I examine are: George M. Cohan’s production of Little Johnny Jones (1904), Eleanor Powell’s “Tribute to Bill Robinson” in Honolulu (1939), and Terry-Toons’ cartoon, “The Dancing Shoes” (1949). These performances share an obvious move away from the use of blackface makeup within a minstrel context, and a move towards the masked enjoyment in “black culture” as it contributes to the development of a uniquely American form of entertainment. In bringing these three disparate performances into dialogue I illuminate the many ways in which American entertainment has been built upon an Africanist aesthetic at the same time it has generally disparaged the black body.

Best Picture of the Year! Best Price of the Year.

SUMMER 1984 SUPPLEMENT. WORLD'S GREATEST SELECTION OF THINGS TO SHOW. Best picture of the year! Best price of the year. TERMS OF ENDEARMENT (1983), SHIRLEY MacLAINE, DEBRA WINGER. Story of a mother and daughter and their evolving relationship. Winner of 5 Academy Awards! 30B-837650-Beta, 30H-837650-VHS: $39.95. JUNE CATALOG SPECIAL! Buy any 3 videocassette non-sale titles on the same order with "Terms" and pay ONLY $30 for "Terms". Limit 1 per family. OFFER EXPIRES JUNE 30, 1984. Blackhawk, SUMMER 1984, Vol. 374. © 1984 Blackhawk Films, Inc., One Old Eagle Brewery, Davenport, Iowa 52802. Regular prices good thru June 30, 1984. VIDEOCASSETTE New Release: TUMBLEWEEDS (1925), WILLIAM S. HART. William S. Hart came to the movies in 1914 from a long line of theatrical experience, mostly Shakespearean, and while to many he is the strong, silent Western hero of film, he is also the peer of John Ford as a major force in shaping and developing this genre we enjoy, the Western. In 1889, in what is to become Oklahoma Territory, the Cherokee Strip is just a grazing area owned by Indians and worked day and night by the itinerant cowboys called 'tumbleweeds'. Alas, it is the end of the old West as the homesteaders are moving in. Hart becomes involved with a homesteader's daughter and her evil brother, who has a scheme to jump the line as "sooners". The scenes of the gigantic land rush are among the most noted action sequences in film history.

Using Dragonframe 4.Pdf

Using DRAGONFRAME 4. Welcome: Dragonframe is a stop-motion solution created by professional animators, for professional animators. It's designed to complement how the pros animate. We hope this manual helps you get up to speed with Dragonframe quickly. The chapters in this guide give you the information you need to know to get proficient with Dragonframe: “Big Picture” on page 1 helps you get started with Dragonframe. “User Interface” on page 13 gives a tour of Dragonframe’s features. “Camera Connections” on page 39 helps you connect cameras to Dragonframe. “Cinematography Tools” on page 73 and “Animation Tools” on page 107 give details on Dragonframe’s main workspaces. “Using the Timeline” on page 129 explains how to use the timeline in the Animation window to edit frames. “Alternative Shooting Techniques (Non Stop Motion)” on page 145 explains how to use Dragonframe for time-lapse. “Managing Your Projects and Files” on page 149 shows how to use Dragonframe to organize and manage your project. “Working with Audio Clips” on page 159 and “Reading Dialogue Tracks” on page 171 explain how to add an audio clip and create a track reading. “Using the X-Sheet” on page 187 explains our virtual exposure sheet. “Automate Lighting with DMX” on page 211 describes how to use DMX to automate lights. “Adding Input and Output Triggers” on page 241 has an overview of using Dragonframe to trigger events. “Motion Control” on page 249 helps you integrate your rig with the Arc Motion Control workspace or helps you use other motion control rigs.

Authoring Embodied Conversational Agents for Tutor-User Interfaces

XNAgent: Authoring Embodied Conversational Agents for Tutor-User Interfaces. Andrew M. Olney, Patrick Hays, & Whitney L. Cade. Institute for Intelligent Systems & Department of Psychology, 365 Innovation Drive, Memphis, Tennessee 38152. {aolney,dphays,wlcade}@memphis.edu http://iis.memphis.edu Abstract. Embodied conversational agents are virtual characters that engage users in conversation with appropriate speech, gesture, and facial expression. The high cost of developing embodied conversational agents has led to a recent increase in open source agent platforms. In this paper, we present XNAgent, an open source platform for embodied conversational agents based on the XNA Framework. By leveraging the high-level class structure of the XNA Framework, XNAgent provides a compact implementation that is suitable both as a starting point for the development of a more advanced system and as a teaching tool for AI curricula. In this paper we describe how we created an embodied conversational agent in XNA using skeletal and morph animation, motion capture, and event-driven animation, and how this process can facilitate the use of embodied conversational agents in the Generalized Intelligent Framework for Tutoring. Keywords: XNA, ECA, GIFT, agent, HCI, conversation, interface, tutoring. 1 Introduction. It is well known that we unconsciously and automatically interact with computers using social norms [1]. Embodied conversational agents (ECAs) capitalize on this phenomenon as characters with human-like communicative capabilities. By doing so, ECAs leverage pointing, gestures, facial expressions, and voice to create a richer human-computer interface. As a result, ECAs have been used in diverse AI applications, including education [2], where they form an important part of the tutor-user interface.

Crisis Communication Effectiveness in The

©2008 Gina M. Serafin. ALL RIGHTS RESERVED. MEDIA MINDFULNESS: DEVELOPING THE ABILITY AND MOTIVATION TO PROCESS ADVERTISEMENTS, by GINA MARCELLO-SERAFIN. A Dissertation submitted to the Graduate School-New Brunswick, Rutgers, The State University of New Jersey, in partial fulfillment of the requirements for the degree of Doctor of Philosophy, Graduate Program in Communication, Information and Library Studies, written under the direction of Professor Robert Kubey. New Brunswick, New Jersey, May 2008. ABSTRACT OF THE DISSERTATION: Media Mindfulness: Developing the Motivation and Ability to Process Advertisements, by GINA M. SERAFIN. Dissertation Director: Robert Kubey. The present study utilized the theories of flow, mindfulness, and the elaboration likelihood model of persuasion to explore which factors may influence the cognitive processing of advertisements by students who participated in a five-week media education curriculum. The purpose of this study was to determine if students who participated in a media education curriculum that focused on advertising differed in their cognitive processing, attitudes, and knowledge of advertisements from students who did not participate in the curriculum. Participants were eighth grade middle school students from an affluent community in Morris County, New Jersey. Differences in attitudes, number of thoughts, and knowledge were investigated. A grounded theory approach was used to analyze student thought listings. The results showed that students who participated in the media education curriculum were more mindful of their advertising consumption. Additionally, students had more positive attitudes toward advertising, language arts class, and working as a member of a team. Students who participated in the curriculum were also more knowledgeable about advertising.

Animation Is a Process Where Characters Or Objects Are Created As Moving Images

3D animation is a process where characters or objects are created as moving images. Rather than traditional flat or 2D characters, these 3D animation images give the impression of being able to move around characters and observe them from all angles, just like real life. 3D animation technology is relatively new and, if done by hand, would take thousands of hours to complete one short section of moving film. The employment of computers and software has simplified and accelerated the 3D animation process. As a result, the number of 3D animators as well as the use of 3D animation technology has increased. What will I learn in a 3D animation program? Your 3D animation program course content depends largely upon the 3D animation program in which you enroll. Some programs allow you to choose the courses that you are interested in. Other 3D animation programs are more structured and are intended to train you for a specific career or 3D animation role within the industry. You may learn about character creation, storyboarding, special effects, image manipulation, image capture, modeling, and various computer-aided 3D animation design packages. Some 3D animation courses may cover different methods of capturing and recreating movement for 3D animation. You may learn "light suit" technology, in which suits worn by actors pinpoint the articulation of joints with lights. This is filmed from various angles and used to replicate animated movement in a realistic way. What skills will I need for a 3D animation program? You'll need to have both creativity and attention to detail for a career in 3D animation.

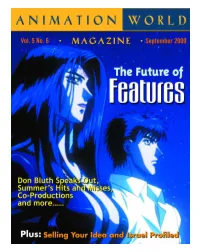

Cartoons Gerard Raiti Looks Into Why Some Cartoons Make Successful Live-Action Features While Others Don’t

Table of Contents. SEPTEMBER 2000, VOL. 5 NO. 6
Editor’s Notebook: A success and a failure?
Letters: [email protected]
FEATURE FILMS
A Conversation With The New Don Bluth: After Titan A.E.’s quick demise at the box office and the even quicker demise of Fox’s state-of-the-art animation studio in Phoenix, Larry Lauria speaks with Don Bluth on his future and that of animation.
Summer’s Sleepers and Keepers: Martin “Dr. Toon” Goodman analyzes the summer’s animated releases and relays what we can all learn from their successes and failures.
Anime Theatrical Features: With the success of such features as Pokemon, are beleaguered U.S. majors going to look for more Japanese imports? Fred Patten explains the pros and cons by giving a glimpse inside the Japanese film scene.
Just the Right Amount of Cheese: The Secrets to Good Live-Action Adaptations of Cartoons: Gerard Raiti looks into why some cartoons make successful live-action features while others don’t. Academy Award-winning producer Bruce Cohen helps out.
Indie Animated Features: Are They Possible? Amid Amidi discovers the world of producing theatrical-length animation without major studio backing and ponders if the positives outweigh the negatives…
Education and Training
Pitching Perfect: A Word From Development: Everyone knows a great pitch starts with a great series concept, but in addition to that, what do executives like to see? Five top executives from major networks give us an idea of what makes them sit up and take notice…
Drawing Attention: How to Get Your Work Noticed: Janet Ginsburg reveals the subtle timing of when an agent is needed and when an agent might hinder getting that job.