Software Optimization Guide for AMD Family 17H Processors

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Effective Virtual CPU Configuration with QEMU and Libvirt

Effective Virtual CPU Configuration with QEMU and libvirt Kashyap Chamarthy <[email protected]> Open Source Summit Edinburgh, 2018 1 / 38 Timeline of recent CPU flaws, 2018 (a) Jan 03 • Spectre v1: Bounds Check Bypass Jan 03 • Spectre v2: Branch Target Injection Jan 03 • Meltdown: Rogue Data Cache Load May 21 • Spectre-NG: Speculative Store Bypass Jun 21 • TLBleed: Side-channel attack over shared TLBs 2 / 38 Timeline of recent CPU flaws, 2018 (b) Jun 29 • NetSpectre: Side-channel attack over local network Jul 10 • Spectre-NG: Bounds Check Bypass Store Aug 14 • L1TF: "L1 Terminal Fault" ... • ? 3 / 38 Related talks in the ‘References’ section Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications What this talk is not about 4 / 38 Related talks in the ‘References’ section What this talk is not about Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications 4 / 38 What this talk is not about Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications Related talks in the ‘References’ section 4 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) libvirtd QMP QMP QEMU QEMU VM1 VM2 Custom Disk1 Disk2 Appliance ioctl() KVM-based virtualization components Linux with KVM 5 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) libvirtd QMP QMP Custom Appliance KVM-based virtualization components QEMU QEMU VM1 VM2 Disk1 Disk2 ioctl() Linux with KVM 5 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) Custom Appliance KVM-based virtualization components libvirtd QMP QMP QEMU QEMU VM1 VM2 Disk1 Disk2 ioctl() Linux with KVM 5 / 38 libguestfs (guestfish) Custom Appliance KVM-based virtualization components OpenStack, et al. -

AMD EPYC™ 7371 Processors Accelerating HPC Innovation

AMD EPYC™ 7371 Processors Solution Brief Accelerating HPC Innovation March, 2019 AMD EPYC 7371 processors (16 core, 3.1GHz): Exceptional Memory Bandwidth AMD EPYC server processors deliver 8 channels of memory with support for up The right choice for HPC to 2TB of memory per processor. Designed from the ground up for a new generation of solutions, AMD EPYC™ 7371 processors (16 core, 3.1GHz) implement a philosophy of Standards Based AMD is committed to industry standards, choice without compromise. The AMD EPYC 7371 processor delivers offering you a choice in x86 processors outstanding frequency for applications sensitive to per-core with design innovations that target the performance such as those licensed on a per-core basis. evolving needs of modern datacenters. No Compromise Product Line Compute requirements are increasing, datacenter space is not. AMD EPYC server processors offer up to 32 cores and a consistent feature set across all processor models. Power HPC Workloads Tackle HPC workloads with leading performance and expandability. AMD EPYC 7371 processors are an excellent option when license costs Accelerate your workloads with up to dominate the overall solution cost. In these scenarios the performance- 33% more PCI Express® Gen 3 lanes. per-dollar of the overall solution is usually best with a CPU that can Optimize Productivity provide excellent per-core performance. Increase productivity with tools, resources, and communities to help you “code faster, faster code.” Boost AMD EPYC processors’ innovative architecture translates to tremendous application performance with Software performance. More importantly, the performance you’re paying for can Optimization Guides and Performance be matched to the appropriate to the performance you need. -

Superh RISC Engine SH-1/SH-2

SuperH RISC Engine SH-1/SH-2 Programming Manual September 3, 1996 Hitachi America Ltd. Notice When using this document, keep the following in mind: 1. This document may, wholly or partially, be subject to change without notice. 2. All rights are reserved: No one is permitted to reproduce or duplicate, in any form, the whole or part of this document without Hitachi’s permission. 3. Hitachi will not be held responsible for any damage to the user that may result from accidents or any other reasons during operation of the user’s unit according to this document. 4. Circuitry and other examples described herein are meant merely to indicate the characteristics and performance of Hitachi’s semiconductor products. Hitachi assumes no responsibility for any intellectual property claims or other problems that may result from applications based on the examples described herein. 5. No license is granted by implication or otherwise under any patents or other rights of any third party or Hitachi, Ltd. 6. MEDICAL APPLICATIONS: Hitachi’s products are not authorized for use in MEDICAL APPLICATIONS without the written consent of the appropriate officer of Hitachi’s sales company. Such use includes, but is not limited to, use in life support systems. Buyers of Hitachi’s products are requested to notify the relevant Hitachi sales offices when planning to use the products in MEDICAL APPLICATIONS. Introduction The SuperH RISC engine family incorporates a RISC (Reduced Instruction Set Computer) type CPU. A basic instruction can be executed in one clock cycle, realizing high performance operation. A built-in multiplier can execute multiplication and addition as quickly as DSP. -

Evaluation of AMD EPYC

Evaluation of AMD EPYC Chris Hollowell <[email protected]> HEPiX Fall 2018, PIC Spain What is EPYC? EPYC is a new line of x86_64 server CPUs from AMD based on their Zen microarchitecture Same microarchitecture used in their Ryzen desktop processors Released June 2017 First new high performance series of server CPUs offered by AMD since 2012 Last were Piledriver-based Opterons Steamroller Opteron products cancelled AMD had focused on low power server CPUs instead x86_64 Jaguar APUs ARM-based Opteron A CPUs Many vendors are now offering EPYC-based servers, including Dell, HP and Supermicro 2 How Does EPYC Differ From Skylake-SP? Intel’s Skylake-SP Xeon x86_64 server CPU line also released in 2017 Both Skylake-SP and EPYC CPU dies manufactured using 14 nm process Skylake-SP introduced AVX512 vector instruction support in Xeon AVX512 not available in EPYC HS06 official GCC compilation options exclude autovectorization Stock SL6/7 GCC doesn’t support AVX512 Support added in GCC 4.9+ Not heavily used (yet) in HEP/NP offline computing Both have models supporting 2666 MHz DDR4 memory Skylake-SP 6 memory channels per processor 3 TB (2-socket system, extended memory models) EPYC 8 memory channels per processor 4 TB (2-socket system) 3 How Does EPYC Differ From Skylake (Cont)? Some Skylake-SP processors include built in Omnipath networking, or FPGA coprocessors Not available in EPYC Both Skylake-SP and EPYC have SMT (HT) support 2 logical cores per physical core (absent in some Xeon Bronze models) Maximum core count (per socket) Skylake-SP – 28 physical / 56 logical (Xeon Platinum 8180M) EPYC – 32 physical / 64 logical (EPYC 7601) Maximum socket count Skylake-SP – 8 (Xeon Platinum) EPYC – 2 Processor Inteconnect Skylake-SP – UltraPath Interconnect (UPI) EYPC – Infinity Fabric (IF) PCIe lanes (2-socket system) Skylake-SP – 96 EPYC – 128 (some used by SoC functionality) Same number available in single socket configuration 4 EPYC: MCM/SoC Design EPYC utilizes an SoC design Many functions normally found in motherboard chipset on the CPU SATA controllers USB controllers etc. -

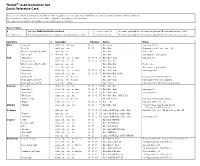

Thumb® 16-Bit Instruction Set Quick Reference Card

Thumb® 16-bit Instruction Set Quick Reference Card This card lists all Thumb instructions available on Thumb-capable processors earlier than ARM®v6T2. In addition, it lists all Thumb-2 16-bit instructions. The instructions shown on this card are all 16-bit in Thumb-2, except where noted otherwise. All registers are Lo (R0-R7) except where specified. Hi registers are R8-R15. Key to Tables § See Table ARM architecture versions. <loreglist+LR> A comma-separated list of Lo registers. plus the LR, enclosed in braces, { and }. <loreglist> A comma-separated list of Lo registers, enclosed in braces, { and }. <loreglist+PC> A comma-separated list of Lo registers. plus the PC, enclosed in braces, { and }. Operation § Assembler Updates Action Notes Move Immediate MOVS Rd, #<imm> N Z Rd := imm imm range 0-255. Lo to Lo MOVS Rd, Rm N Z Rd := Rm Synonym of LSLS Rd, Rm, #0 Hi to Lo, Lo to Hi, Hi to Hi MOV Rd, Rm Rd := Rm Not Lo to Lo. Any to Any 6 MOV Rd, Rm Rd := Rm Any register to any register. Add Immediate 3 ADDS Rd, Rn, #<imm> N Z C V Rd := Rn + imm imm range 0-7. All registers Lo ADDS Rd, Rn, Rm N Z C V Rd := Rn + Rm Hi to Lo, Lo to Hi, Hi to Hi ADD Rd, Rd, Rm Rd := Rd + Rm Not Lo to Lo. Any to Any T2 ADD Rd, Rd, Rm Rd := Rd + Rm Any register to any register. Immediate 8 ADDS Rd, Rd, #<imm> N Z C V Rd := Rd + imm imm range 0-255. -

Readingsample

Embedded Robotics Mobile Robot Design and Applications with Embedded Systems Bearbeitet von Thomas Bräunl Neuausgabe 2008. Taschenbuch. xiv, 546 S. Paperback ISBN 978 3 540 70533 8 Format (B x L): 17 x 24,4 cm Gewicht: 1940 g Weitere Fachgebiete > Technik > Elektronik > Robotik Zu Inhaltsverzeichnis schnell und portofrei erhältlich bei Die Online-Fachbuchhandlung beck-shop.de ist spezialisiert auf Fachbücher, insbesondere Recht, Steuern und Wirtschaft. Im Sortiment finden Sie alle Medien (Bücher, Zeitschriften, CDs, eBooks, etc.) aller Verlage. Ergänzt wird das Programm durch Services wie Neuerscheinungsdienst oder Zusammenstellungen von Büchern zu Sonderpreisen. Der Shop führt mehr als 8 Millionen Produkte. CENTRAL PROCESSING UNIT . he CPU (central processing unit) is the heart of every embedded system and every personal computer. It comprises the ALU (arithmetic logic unit), responsible for the number crunching, and the CU (control unit), responsible for instruction sequencing and branching. Modern microprocessors and microcontrollers provide on a single chip the CPU and a varying degree of additional components, such as counters, timing coprocessors, watchdogs, SRAM (static RAM), and Flash-ROM (electrically erasable ROM). Hardware can be described on several different levels, from low-level tran- sistor-level to high-level hardware description languages (HDLs). The so- called register-transfer level is somewhat in-between, describing CPU compo- nents and their interaction on a relatively high level. We will use this level in this chapter to introduce gradually more complex components, which we will then use to construct a complete CPU. With the simulation system Retro [Chansavat Bräunl 1999], [Bräunl 2000], we will be able to actually program, run, and test our CPUs. -

Reverse Engineering X86 Processor Microcode

Reverse Engineering x86 Processor Microcode Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-University Bochum https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe This paper is included in the Proceedings of the 26th USENIX Security Symposium August 16–18, 2017 • Vancouver, BC, Canada ISBN 978-1-931971-40-9 Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX Reverse Engineering x86 Processor Microcode Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz Ruhr-Universitat¨ Bochum Abstract hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non- Microcode is an abstraction layer on top of the phys- negligible hardware costs [8]. The infamous Pentium fdiv ical components of a CPU and present in most general- bug [62] illustrated a clear economic need for field up- purpose CPUs today. In addition to facilitate complex and dates after deployment in order to turn off defective parts vast instruction sets, it also provides an update mechanism and patch erroneous behavior. Note that the implementa- that allows CPUs to be patched in-place without requiring tion of a modern processor involves millions of lines of any special hardware. While it is well-known that CPUs HDL code [55] and verification of functional correctness are regularly updated with this mechanism, very little is for such processors is still an unsolved problem [4, 29]. known about its inner workings given that microcode and the update mechanism are proprietary and have not been Since the 1970s, x86 processor manufacturers have throughly analyzed yet. -

Mairjason2015phd.Pdf (1.650Mb)

Power Modelling in Multicore Computing Jason Mair a thesis submitted for the degree of Doctor of Philosophy at the University of Otago, Dunedin, New Zealand. 2015 Abstract Power consumption has long been a concern for portable consumer electron- ics, but has recently become an increasing concern for larger, power-hungry systems such as servers and clusters. This concern has arisen from the asso- ciated financial cost and environmental impact, where the cost of powering and cooling a large-scale system deployment can be on the order of mil- lions of dollars a year. Such a substantial power consumption additionally contributes significant levels of greenhouse gas emissions. Therefore, software-based power management policies have been used to more effectively manage a system’s power consumption. However, man- aging power consumption requires fine-grained power values for evaluating the run-time tradeoff between power and performance. Despite hardware power meters providing a convenient and accurate source of power val- ues, they are incapable of providing the fine-grained, per-application power measurements required in power management. To meet this challenge, this thesis proposes a novel power modelling method called W-Classifier. In this method, a parameterised micro-benchmark is designed to reproduce a selection of representative, synthetic workloads for quantifying the relationship between key performance events and the cor- responding power values. Using the micro-benchmark enables W-Classifier to be application independent, which is a novel feature of the method. To improve accuracy, W-Classifier uses run-time workload classification and derives a collection of workload-specific linear functions for power estima- tion, which is another novel feature for power modelling. -

Pipeliningpipelining

ChapterChapter 99 PipeliningPipelining Jin-Fu Li Department of Electrical Engineering National Central University Jungli, Taiwan Outline ¾ Basic Concepts ¾ Data Hazards ¾ Instruction Hazards Advanced Reliable Systems (ARES) Lab. Jin-Fu Li, EE, NCU 2 Content Coverage Main Memory System Address Data/Instruction Central Processing Unit (CPU) Operational Registers Arithmetic Instruction and Cache Logic Unit Sets memory Program Counter Control Unit Input/Output System Advanced Reliable Systems (ARES) Lab. Jin-Fu Li, EE, NCU 3 Basic Concepts ¾ Pipelining is a particularly effective way of organizing concurrent activity in a computer system ¾ Let Fi and Ei refer to the fetch and execute steps for instruction Ii ¾ Execution of a program consists of a sequence of fetch and execute steps, as shown below I1 I2 I3 I4 I5 F1 E1 F2 E2 F3 E3 F4 E4 F5 Advanced Reliable Systems (ARES) Lab. Jin-Fu Li, EE, NCU 4 Hardware Organization ¾ Consider a computer that has two separate hardware units, one for fetching instructions and another for executing them, as shown below Interstage Buffer Instruction fetch Execution unit unit Advanced Reliable Systems (ARES) Lab. Jin-Fu Li, EE, NCU 5 Basic Idea of Instruction Pipelining 12 3 4 5 Time I1 F1 E1 I2 F2 E2 I3 F3 E3 I4 F4 E4 F E Advanced Reliable Systems (ARES) Lab. Jin-Fu Li, EE, NCU 6 A 4-Stage Pipeline 12 3 4 567 Time I1 F1 D1 E1 W1 I2 F2 D2 E2 W2 I3 F3 D3 E3 W3 I4 F4 D4 E4 W4 D: Decode F: Fetch Instruction E: Execute W: Write instruction & fetch operation results operands B1 B2 B3 Advanced Reliable Systems (ARES) Lab. -

A Statistical Performance Model of the Opteron Processor

A Statistical Performance Model of the Opteron Processor Jeanine Cook Jonathan Cook Waleed Alkohlani New Mexico State University New Mexico State University New Mexico State University Klipsch School of Electrical Department of Computer Klipsch School of Electrical and Computer Engineering Science and Computer Engineering [email protected] [email protected] [email protected] ABSTRACT m5 [11], Simics/GEMS [25], and more recently MARSSx86 [5]{ Cycle-accurate simulation is the dominant methodology for the only one supporting the popular x86 architecture. Some processor design space analysis and performance prediction. of these have not been validated against real hardware in However, with the prevalence of multi-core, multi-threaded the multi-core mode (SESC and M5), and the others that architectures, this method has become highly impractical as are validated show large errors of up to 50% [5]. All cycle- the sole means for design due to its extreme slowdowns. We accurate multi-core simulators are very slow; a few hundred have developed a statistical technique for modeling multi- thousand simulated instructions per second is the most op- core processors that is based on Monte Carlo methods. Us- timistic speed with the help of native mode execution. Fur- ing this method, processor models of contemporary archi- ther, the architectures supported by many of these simula- tectures can be developed and applied to performance pre- tors are now obsolete. diction, bottleneck detection, and limited design space anal- ysis. To date, we have accurately modeled the IBM Cell, the To address these issues and to satisfy our own desire for Intel Itanium, and the Sun Niagara 1 and Niagara 2 proces- performance models of contemporary processors, we devel- sors [34, 33, 10]. -

The RISC-V Compressed Instruction Set Manual

The RISC-V Compressed Instruction Set Manual Version 1.7 Warning! This draft specification will change before being accepted as standard, so implementations made to this draft specification will likely not conform to the future standard. Andrew Waterman, Yunsup Lee, David Patterson, Krste Asanovi´c CS Division, EECS Department, University of California, Berkeley fwaterman|yunsup|pattrsn|[email protected] May 28, 2015 This document is also available as Technical Report UCB/EECS-2015-157. 2 RISC-V Compressed ISA V1.7 1.1 Introduction This excerpt from the RISC-V User-Level ISA Specification describes the current draft proposal for the RISC-V standard compressed instruction set extension, named \C", which reduces static and dynamic code size by adding short 16-bit instruction encodings for common integer operations. The C extension can be added to any of the base ISAs (RV32I, RV64I, RV128I), and we use the generic term \RVC" to cover any of these. Typically, over half of the RISC-V instructions in a program can be replaced with RVC instructions, resulting in greater than a 25% code-size reduction. Section 1.7 describes a possible extended set of instructions for RVC, for which we would like your opinion. Please send your comments to the isa-dev mailing list at [email protected]. 1.2 Overview RVC uses a simple compression scheme that offers shorter 16-bit versions of common 32-bit RISC-V instructions when: • the immediate or address offset is small, or • one of the registers is the zero register (x0) or the ABI stack pointer (x2), or • the destination register and the first source register are identical, or • the registers used are the 8 most popular ones. -

Power Modeling

CHAPTER 5 POWER MODELING Jason Mair1, Zhiyi Huang1, David Eyers1, Leandro Cupertino2, Georges Da Costa2, Jean-Marc Pierson2 and Helmut Hlavacs3 1Department of Computer Science, University of Otago, New Zealand 2Institute for Research in Informatics of Toulouse (IRIT), University of Toulouse III, France 3Faculty of Computer Science, University of Vienna, Austria 5.1 Introduction Power consumption has long been a concern for portable consumer electronics, with many manufacturers explicitly seeking to maximize battery life in order to improve the usability of devices such as laptops and smart phones. However, it has recently become a concern in the domain of much larger, more power hungry systems such as servers, clusters and data centers. This new drive to improve energy efficiency is in part due to the increasing deployment of large-scale systems in more businesses and industries, which have two pri- mary motives for saving energy. Firstly, there is the traditional economic incentive for a business to reduce their operating costs, where the cost of powering and cooling a large data center can be on the order of millions of dollars [18]. Reducing the total cost of own- ership for servers could help to stimulate further deployments. As servers become more affordable, deployments will increase in businesses where concerns over lifetime costs pre- viously prevented adoption. The second motivating factor is the increasing awareness of the environmental impact—e.g. greenhouse gas emissions—caused by power production. Reducing energy consumption can help a business indirectly reduce their environmental impact, making them more clean and green. The most commonly adopted solution for reducing power consumption is a hardware- based approach, where old, inefficient hardware is replaced with newer, more energy effi- COST Action 0804, edition.