How to Troubleshoot Slow Network Performance


Recommended publications

What Is UNIX? the Directory Structure Basic Commands Find

What is UNIX? UNIX is an operating system, like Windows on our computers. By operating system, we mean the suite of programs that make the computer work. It is a stable, multi-user, multi-tasking system for servers, desktops and laptops. The Directory Structure: All the files are grouped together in the directory structure. The file system is arranged in a hierarchical structure, like an inverted tree. The top of the hierarchy is traditionally called root (written as a slash, /). Basic commands: When you first log in, your current working directory is your home directory. In UNIX, (.) means the current directory and (..) means the parent of the current directory. The find command: The find command is used to locate files on a Unix or Linux system. find searches any set of directories you specify for files that match the supplied search criteria. The syntax looks like this: find where-to-look criteria what-to-do. All arguments to find are optional, and there are defaults for all parts: where-to-look defaults to . (the current working directory), criteria defaults to none (select all files), and what-to-do (known as the find action) defaults to -print (display the names of found files on standard output). Examples: find . -name "*.txt" (finds all files ending in .txt in the current directory and its subdirectories); find . -mtime 1 (finds all files modified exactly 1 day ago); find . -mtime -1 (finds all files modified less than 1 day ago); find . -mtime +1 (finds all files modified more than 1 day ago); find . -

CS101 Lecture 8

What is a program? What is a “Window Manager”? What is a “GUI”? How do you navigate the Unix directory tree? What is a wildcard? Readings: See CCSO’s Unix pages. Applications: Unix Engineering Workstations. UNIX (operating system), C (programming language) and Matlab facilitate machine-independent program development. Computer program (software): a sequence of instructions that tells the computer what to do. A program takes raw data as input and produces information as output. System Software: operating systems (Unix, Windows, MacOS, VM/CMS, ...) and editor programs (gedit, pico, vi). Applications Software: translators and interpreters (gcc, the GNU C compiler; matlab, an interpreter) and user-created programs. X-windows, a Window Manager and GUI (Graphical User Interface): Click Applications and follow the menus, or click on an icon, to run a program. Click Terminal to open a command line interface. When the following slides refer to Unix commands, it is assumed that these are entered on the command line that begins with the symbol “>” (the prompt symbol). Data, information, computer instructions, etc. are saved in secondary storage (auxiliary storage) in files. Files are collected or organized in directories. Each user on a multi-user system has his or her own home directory. In Unix, users and system administrators organize files and directories in a hierarchical tree. Home Directory: When a user first logs in on a computer, the user will be “in” the user’s home directory. To find the name of the directory, type > pwd (an abbreviation for print working directory). So the user is in the directory “gambill”. The string “/home/gambill” is called a path.

Trees, Binary Search Trees, Heaps & Applications Dr. Chris Bourke

Trees, Binary Search Trees, Heaps & Applications. Dr. Chris Bourke, Department of Computer Science & Engineering, University of Nebraska-Lincoln, Lincoln, NE 68588, USA. http://cse.unl.edu/~cbourke 2015/01/31 21:05:31. Abstract: These are lecture notes used in CSCE 156 (Computer Science II), CSCE 235 (Discrete Structures) and CSCE 310 (Data Structures & Algorithms) at the University of Nebraska-Lincoln. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. Contents: I Trees: 1 Introduction; 2 Definitions & Terminology; 3 Tree Traversal (3.1 Preorder Traversal; 3.2 Inorder Traversal; 3.3 Postorder Traversal; 3.4 Breadth-First Search Traversal; 3.5 Implementations & Data Structures: 3.5.1 Preorder Implementations, 3.5.2 Inorder Implementation, 3.5.3 Postorder Implementation, 3.5.4 BFS Implementation, 3.5.5 Tree Walk Implementations; 3.6 Operations); 4 Binary Search Trees (4.1 Basic Operations); 5 Balanced Binary Search Trees (5.1 2-3 Trees; 5.2 AVL Trees; 5.3 Red-Black Trees); 6 Optimal Binary Search Trees; 7 Heaps

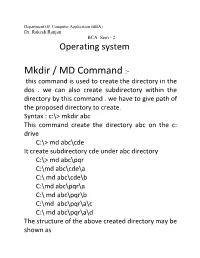

Mkdir / MD Command :- This Command Is Used to Create the Directory in the Dos

Department of Computer Application (BBA), Dr. Rakesh Ranjan, BCA Sem - 2, Operating system. Mkdir / MD command: This command is used to create a directory in DOS. It can also create a subdirectory within a directory; give the path of the directory to be created. Syntax: C:\> mkdir abc creates the directory abc on the C: drive. C:\> md abc\cde creates the subdirectory cde under the abc directory. The remaining directories are created in the same way: C:\> md abc\pqr, C:\> md abc\cde\a, C:\> md abc\cde\b, C:\> md abc\pqr\a, C:\> md abc\pqr\b, C:\> md abc\pqr\a\c, C:\> md abc\pqr\a\d. The resulting structure is: ABC contains CDE and PQR; CDE contains A and B; PQR contains A and B; and PQR\A contains C and D. Tree command: This command displays the structure of directories and subdirectories in DOS. C:\> tree displays the structure of the C: drive. C:\> tree abc displays the structure of the abc directory on the C: drive. In other words, the tree command displays the directory structure under the given path. RD command: RD stands for Remove Directory. This command removes a directory from the disk. Note that the directory to be removed must be empty; otherwise it cannot be removed. Syntax: C:\> rd abc displays an error because abc is not empty. C:\> rd abc\cde also displays an error because cde is not empty. C:\> rd abc\cde\a works and removes the a directory from the disk. C:\> rd abc\cde\b removes the b directory. C:\> rd abc\cde now removes the cde directory because cde is empty.
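The rule that a directory must be empty before it can be removed is not specific to DOS. As a minimal sketch (in Python rather than DOS, run in a temporary directory; the helper names `build_tree`, `try_rmdir` and `demo` are made up for this illustration), the same sequence of create and remove steps might look like this:

```python
import os
import tempfile


def build_tree(root):
    """Create abc/cde/{a,b} and abc/pqr/{a,b}, with c and d under abc/pqr/a."""
    leaves = [
        ("abc", "cde", "a"),
        ("abc", "cde", "b"),
        ("abc", "pqr", "a", "c"),
        ("abc", "pqr", "a", "d"),
        ("abc", "pqr", "b"),
    ]
    for parts in leaves:
        # makedirs also creates the intermediate directories, like md with a full path
        os.makedirs(os.path.join(root, *parts), exist_ok=True)


def try_rmdir(path):
    """Return True if the directory was removed, False if removal failed."""
    try:
        os.rmdir(path)  # like rd: only succeeds on an empty directory
        return True
    except OSError:
        return False


def demo():
    """Repeat the rd sequence from the excerpt and record which steps succeed."""
    with tempfile.TemporaryDirectory() as root:
        build_tree(root)
        cde = os.path.join(root, "abc", "cde")
        results = [try_rmdir(cde)]                         # cde still holds a and b
        results.append(try_rmdir(os.path.join(cde, "a")))  # a is empty
        results.append(try_rmdir(os.path.join(cde, "b")))  # b is empty
        results.append(try_rmdir(cde))                     # cde is empty now
        return results


if __name__ == "__main__":
    print(demo())
```

The first attempt fails and the last one succeeds, which matches the order of outcomes described for rd abc\cde.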

UNIX (Solaris/Linux) Quick Reference Card Logging in Directory Commands at the Login: Prompt, Enter Your Username

UNIX (Solaris/Linux) QUICK REFERENCE CARD. Logging In: At the Login: prompt, enter your username. At the Password: prompt, enter your system password. Linux is case-sensitive, so enter upper and lower case letters as required for your username, password and commands. Exiting or Logging Out: Enter logout and press <Enter> or type <Ctrl>-D. Changing your Password: Type passwd at the command prompt. Type in your old password, then your new password, then re-enter your new password for verification. If the new password is verified, your password will be changed. Many systems age passwords; this forces users to change their passwords at predetermined intervals. Changing your MS Network Password: Some servers maintain a second password exclusively for use with Microsoft Windows networking, allowing you to mount your home directory as a Network Drive. Type smbpasswd at the command prompt. Type in your old SMB password, then your new password, then re-enter your new password for verification.

Directory Commands: ls (lists files in current directory); ls -l (long listing of files); ls -a (list all files, including hidden files); ls -lat (long listing of all files sorted by last modification time); ls wcp (list all files matching the wildcard pattern); ls dn (list files in the directory dn); tree (list files in tree format); cd dn (change current directory to dn); cd pub (changes to subdirectory “pub”); cd .. (changes to next higher level directory, i.e. the previous directory); cd / (changes to the root directory); cd (changes to the user's home directory); cd /usr/xx (changes to the subdirectory “xx” in the directory “usr”); mkdir dn (makes a new directory named dn); rmdir dn (removes the directory dn (the

System Analysis and Tuning Guide: SUSE Linux Enterprise Server 15 SP1

System Analysis and Tuning Guide, SUSE Linux Enterprise Server 15 SP1. An administrator's guide for problem detection, resolution and optimization. Find how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide; 1 Available Documentation

Command Line Interface (Shell)

Command Line Interface (Shell). Organization of a computer system: users and applications reach the operating system through either the graphical user interface (GUI) or the shell, both of which use system calls; the operating system runs on hardware (or software acting like hardware: a “virtual machine”). The GUI is driven by interactive actions (click, drag, tap, …): easier to use; not so easy to program with or automate. The shell is driven by typed commands: easier to program with and automate; not so convenient to use (maybe). This class covers the shell. What is a Command Line Interface? Interface: means it is a way to interact with the Operating System. Command Line: means you interact with it through typing commands at the keyboard. So a Command Line Interface (or a shell) is a program that lets you interact with the Operating System via the keyboard. Why Use a Command Line Interface? A. In the old days, there was no choice

Introduction to Unix

Introduction to Unix: Editing with emacs, Compiling with gcc. What is Unix? UNIX is an operating system first developed in the 1960s; by operating system, we mean the suite of programs that make the computer work. There are many different versions of UNIX, although they share common similarities; the most popular varieties of UNIX are Sun Solaris, GNU/Linux, and MacOS X. The UNIX operating system is made up of three parts: the kernel, the shell and the programs. Files and Processes: Everything in UNIX is either a file or a process. A process is an executing program identified by a unique process identifier; a file is a collection of data created by users using text editors, running compilers, etc. All the files are grouped together in the directory structure. The file system is arranged in a hierarchical structure, like an inverted tree. Concepts: root directory, current directory, home directory, parent directory, absolute path, relative path. Tree Directory Structure (examples from a diagram that labels the root, home and current directories): ls outputs Sub a b c; ls /home/jane/data outputs Sub a b c; ls ~/data outputs Sub a b c; ls ~ outputs data setup; ls .. outputs data setup; ls ./../.. outputs jim jane; ls ./../../jim outputs calendar; cd ./../../../work followed by ls outputs setups bkup; ls ./.

ISCLI–Industry Standard CLI Command Reference for the IBM Flex System Fabric EN4093 10Gb Scalable Switch

IBM Networking OS ISCLI–Industry Standard CLI Command Reference for the IBM Flex System Fabric EN4093 10Gb Scalable Switch. Note: Before using this information and the product it supports, read the general information in the Safety information and Environmental Notices and User Guide documents on the IBM Documentation CD and the Warranty Information document that comes with the product. First Edition (April 2012) © Copyright IBM Corporation 2012. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Preface: Who Should Use This Book; How This Book Is Organized; Typographic Conventions; How to Get Help. Chapter 1. ISCLI Basics: Accessing the ISCLI; ISCLI Command Modes; Global Commands; Command Line Interface Shortcuts; CLI List and Range Inputs; Command Abbreviation; Tab Completion; User Access Levels; Idle Timeout. Chapter 2. Information Commands: System Information; Error Disable and Recovery Information; SNMPv3 System Information; SNMPv3 USM User Table Information; SNMPv3 View Table Information; SNMPv3 Access Table Information; SNMPv3 Group Table Information; SNMPv3 Community Table Information; SNMPv3 Target Address Table Information; SNMPv3 Target Parameters Table Information; SNMPv3 Notify Table Information; SNMPv3 Dump Information; General System Information; Show Recent Syslog Messages; User Status; Layer 2 Information; FDB Information; Show All FDB Information.

An Introduction to DNS and DNS Tools

An Introduction to DNS and DNS Tools, by Neil Anuskiewicz. Abstract: The explosive growth of the Internet was made possible, in part, by DNS. The domain name system (DNS) hums along behind the scenes and, as with running water, we largely take it for granted. That this system just works is a testament to the hackers who designed and developed DNS and the open-source package called Bind, thereby introducing a scalable Internet to the world. Before DNS and Bind, /etc/hosts was the only way to translate IP addresses to human-friendly hostnames and vice versa. This article will introduce the concepts of DNS and three commands with which you can examine DNS information: host, dig and nslookup. The DNS is a distributed, hierarchical database where authority flows from the top (or root) of the hierarchy downward. When Linux Journal registered linuxjournal.com, they got permission from an entity that had authority at the root or top level. The Internet Corporation for Assigned Names and Numbers (ICANN) and a domain name registrar transferred authority for linuxjournal.com to Linux Journal, which now has the authority to create subdomains such as embedded.linuxjournal.com without the involvement of ICANN or a domain name registrar. When trying to understand the structure of the DNS, think of an inverted tree, the very structure of the UNIX filesystem. Each branch of the tree is within a zone of authority; more than one branch of this tree can be within a single zone.
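To make the inverted-tree picture concrete, here is a minimal sketch that walks a hostname from the top of the hierarchy downward. It only manipulates strings and performs no DNS queries; the function name `domain_path` is made up for this illustration.

```python
def domain_path(name):
    """List the domains from the top-level label down to the full name."""
    labels = name.rstrip(".").split(".")  # a trailing dot denotes the root
    # Build progressively longer suffixes: com, linuxjournal.com, ...
    return [".".join(labels[i:]) for i in range(len(labels) - 1, -1, -1)]


if __name__ == "__main__":
    for depth, domain in enumerate(domain_path("embedded.linuxjournal.com"), start=1):
        print(depth, domain)
```

Each entry is a node in the tree, not necessarily a separate zone; as the excerpt notes, several branches can sit inside a single zone of authority.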

Controlling Gpios on Rpi Using Ping Command

Ver. 3, Department of Engineering Science, Lab – Controlling PI (F. Farahmand, 9/30/2019). Controlling Raspberry Pi 3 Model B Using PING Commands. A. Objectives: 1. An introduction to Shell and shell scripting 2. Starting a program at auto-start 3. Knowing your distro version 4. Understanding the tcpdump command 5. Introducing the tshark utility 6. Interfacing the RPI to an LCD 7. Understanding the PING command. B. Time of Completion: This laboratory activity is designed for students with some knowledge of Raspberry Pi and is estimated to take about 5-6 hours to complete. C. Requirements: 1. A Raspberry Pi 3 Model B 2. 32 GByte MicroSD card (give your MicroSD card to the lab instructor for a copy of Ubuntu) 3. USB adaptor to power up the Pi 4. Read Lab 2 – Interfacing with Pi carefully. D. Pre-Lab: Learn about ping and the ICMP protocol. E. Lab: This lab has two separate parts. Please make sure you read each part carefully. Answer all the questions. Submit your code via Canvas. 1) Part I - Showing IP Addresses on the LCD: In this section we learn how to interface an LCD to the Pi and run a program automatically at boot. a) Interfacing your RPI to an LCD: In this section you need to interface your 16×2 LCD with the Raspberry Pi using 4-bit mode. Please note that you can choose any type of LCD and interface it to your Pi, including OLED. Below is the wiring example showing how to interface a 16×2 LCD to the RPI.

Traceroute Mac

Chapter 2, Catalyst 3560 Switch Cisco IOS Commands: traceroute mac. Use the traceroute mac privileged EXEC command to display the Layer 2 path taken by the packets from the specified source MAC address to the specified destination MAC address. Syntax: traceroute mac [interface interface-id] {source-mac-address} [interface interface-id] {destination-mac-address} [vlan vlan-id] [detail]. Syntax Description: interface interface-id: (Optional) Specify an interface on the source or destination switch. source-mac-address: Specify the MAC address of the source switch in hexadecimal format. destination-mac-address: Specify the MAC address of the destination switch in hexadecimal format. vlan vlan-id: (Optional) Specify the VLAN on which to trace the Layer 2 path that the packets take from the source switch to the destination switch. Valid VLAN IDs are from 1 to 4094. detail: (Optional) Specify that detailed information appears. Defaults: There is no default. Command Modes: Privileged EXEC. Command History: Release 12.1(19)EA1: This command was first introduced. Usage Guidelines: For Layer 2 traceroute to function properly, Cisco Discovery Protocol (CDP) must be enabled on all the switches in the network. Do not disable CDP. When the switch detects a device in the Layer 2 path that does not support Layer 2 traceroute, the switch continues to send Layer 2 trace queries and lets them time out. The maximum number of hops identified in the path is ten. Layer 2 traceroute supports only unicast traffic. If you specify a multicast source or destination MAC address, the physical path is not identified, and an error message appears.
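Because Layer 2 traceroute handles only unicast addresses, a script that prepares such commands could check an address before sending it to the switch. The sketch below is an illustration only and is not part of Cisco IOS; it relies on the IEEE 802 convention that the least-significant bit of the first octet marks a group (multicast or broadcast) address, and the function name `is_multicast_mac` is made up here.

```python
def is_multicast_mac(mac):
    """Return True if the MAC address has the IEEE 802 group bit set."""
    # Accept dotted (0100.5e00.0001), colon and hyphen separated notations.
    hex_digits = "".join(ch for ch in mac if ch not in ".:-")
    if len(hex_digits) != 12:
        raise ValueError(f"expected 12 hex digits, got {mac!r}")
    first_octet = int(hex_digits[:2], 16)  # raises ValueError on non-hex input
    # The least-significant bit of the first octet is the group bit.
    return bool(first_octet & 0x01)


if __name__ == "__main__":
    for address in ("0000.0201.0601", "0100.5e00.0001"):
        kind = "multicast" if is_multicast_mac(address) else "unicast"
        print(address, kind)
```

The broadcast address ff:ff:ff:ff:ff:ff also has the group bit set, so this check treats it as non-unicast as well.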