Hate Lingo: a Target-Based Linguistic Analysis of Hate Speech in Social Media

Total Pages: 16

File Type:pdf, Size:1020Kb

Recommended publications

Menaquale, Sandy

“Prejudice is a burden that confuses the past, threatens the future, and renders the present inaccessible.” – Maya Angelou “As long as there is racial privilege, racism will never end.” – Wayne Gerard Trotman “Not everything that is faced can be changed, but nothing can be changed until it is faced.” James Baldwin “Ours is not the struggle of one day, one week, or one year. Ours is not the struggle of one judicial appointment or presidential term. Ours is the struggle of a lifetime, or maybe even many lifetimes, and each one of us in every generation must do our part.” – John Lewis COLUMBIA versus COLUMBUS • 90% of the 14,000 workers on the Central Pacific were Chinese • By 1880 over 100,000 Chinese residents in the US YELLOW PERIL https://iexaminer.org/yellow-peril-documents-historical-manifestations-of-oriental-phobia/ https://www.nytimes.com/2019/05/14/us/california-today-chinese-railroad-workers.html BACKGROUND FOR USA IMMIGRATION POLICIES • 1790 – Nationality and Citizenship • 1803 – No Immigration of any FREE “Negro, mulatto, or other persons of color” • 1848 – If we annex your territory and you remain living on it, you are a citizen • 1849 – Legislate and enforce immigration is a FEDERAL Power, not State or Local • 1854 – Negroes, Native Americans, and now Chinese may not testify against whites GERMAN IMMIGRATION https://www.pewresearch.org/wp-content/uploads/2014/05/FT_15.09.28_ImmigationMapsGIF.gif?w=640 TO LINCOLN’S CREDIT CIVIL WAR IMMIGRATION POLICIES • 1862 – CIVIL WAR LEGISLATION ABOUT IMMIGRATION • Message to Congress December -

A Comparative Study of French-Canadian and Mexican-American Contemporary Poetry

A COMPARATIVE STUDY OF FRENCH-CANADIAN AND MEXICAN-AMERICAN CONTEMPORARY POETRY by RODERICK JAMES MACINTOSH, B.A., M.A. A DISSERTATION IN SPANISH Submitted to the Graduate Faculty of Texas Tech University in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY Approved Accepted May, 1981 ACKNOWLEDGMENTS I am very grateful to Dr. Edmundo Garcia-Giron for his direction of this dissertation and to the other members of my committee, Dr. Norwood Andrews, Dr. Alfred Cismaru, Dr. Aldo Finco and Dr. Faye L. Bianpass, for their helpful criticism and advice. CONTENTS ACKNOWLEDGMENTS I. A BRIEF HISTORY OF QUEBEC II. A BRIEF HISTORY OF MEXICAN-AMERICANS III. A LITERARY HISTORY OF QUEBEC IV. A BRIEF OUTLINE OF MEXICAN LITERATURE V. A LITERARY HISTORY OF MEXICAN-AMERICANS VI. A COMPARATIVE LOOK AT CANADIAN FRENCH AND MEXICAN-AMERICAN SPANISH VII. CONTEMPORARY FRENCH-CANADIAN POETRY VIII. CONTEMPORARY MEXICAN-AMERICAN POETRY NOTES BIBLIOGRAPHY A BRIEF HISTORY OF QUEBEC In 1534 Jacques Cartier landed on the Gaspe Peninsula and established French sovereignty in North America. Nevertheless, the French did not take effective control of their foothold on this continent until 74 years later when Samuel de Champlain founded the settlement of Quebec in 1608, at the foot of Cape Diamond on the St. Laurence River. At first, the settlement was conceived of as a trading post for the lucrative fur trade, but two difficulties soon became apparent (problems that have plagued French Canada to the present day): the difficulty of communication across trackless forests and mountainous terrain and the rigors of the Great Canadian Winter. -

The Chinese in Hawaii: an Annotated Bibliography

The Chinese in Hawaii AN ANNOTATED BIBLIOGRAPHY by NANCY FOON YOUNG Social Science Research Institute University of Hawaii Hawaii Series No. 4 Other publications in the HAWAII SERIES No. 1 The Japanese in Hawaii: 1868-1967 A Bibliography of the First Hundred Years by Mitsugu Matsuda [out of print] No. 2 The Koreans in Hawaii An Annotated Bibliography by Arthur L. Gardner No. 3 Culture and Behavior in Hawaii An Annotated Bibliography by Judith Rubano No. 5 The Japanese in Hawaii by Mitsugu Matsuda A Bibliography of Japanese Americans, revised by Dennis M. Ogawa with Jerry Y. Fujioka [forthcoming] THE CHINESE IN HAWAII An Annotated Bibliography by NANCY FOON YOUNG supported by the HAWAII CHINESE HISTORY CENTER Social Science Research Institute • University of Hawaii • Honolulu • Hawaii Cover design by Bruce T. Erickson Kuan Yin Temple, 170 N. Vineyard Boulevard, Honolulu Distributed by: The University Press of Hawaii 535 Ward Avenue Honolulu, Hawaii 96814 International Standard Book Number: 0-8248-0265-9 Library of Congress Catalog Card Number: 73-620231 Social Science Research Institute University of Hawaii, Honolulu, Hawaii 96822 Copyright 1973 by the Social Science Research Institute All rights reserved. Published 1973 Printed in the United States of America TABLE OF CONTENTS FOREWORD PREFACE ACKNOWLEDGMENTS ABBREVIATIONS ANNOTATED BIBLIOGRAPHY GLOSSARY INDEX FOREWORD Hawaiians of Chinese ancestry have made and are continuing to make a rich contribution to every aspect of life in the islands. -

(FCC) Complaints About Saturday Night Live (SNL), 2019-2021 and Dave Chappelle, 11/1/2020-12/10/2020

Description of document: Federal Communications Commission (FCC) Complaints about Saturday Night Live (SNL), 2019-2021 and Dave Chappelle, 11/1/2020-12/10/2020 Requested date: 2021 Release date: 21-December-2021 Posted date: 12-July-2021 Source of document: Freedom of Information Act Request Federal Communications Commission Office of Inspector General 45 L Street NE Washington, D.C. 20554 FOIAonline The governmentattic.org web site (“the site”) is a First Amendment free speech web site and is noncommercial and free to the public. The site and materials made available on the site, such as this file, are for reference only. The governmentattic.org web site and its principals have made every effort to make this information as complete and as accurate as possible, however, there may be mistakes and omissions, both typographical and in content. The governmentattic.org web site and its principals shall have neither liability nor responsibility to any person or entity with respect to any loss or damage caused, or alleged to have been caused, directly or indirectly, by the information provided on the governmentattic.org web site or in this file. The public records published on the site were obtained from government agencies using proper legal channels. Each document is identified as to the source. Any concerns about the contents of the site should be directed to the agency originating the document in question. GovernmentAttic.org is not responsible for the contents of documents published on the website. Federal Communications Commission Consumer & Governmental Affairs Bureau Washington, D.C. 20554 December 21, 2021 VIA ELECTRONIC MAIL FOIA Nos. -

Canadian Inclusive Language Glossary the Canadian Cultural Mosaic Foundation Would Like to Honour And

Language Decoded Canadian Inclusive Language Glossary The Canadian Cultural Mosaic Foundation would like to honour and acknowledge all that reside on the traditional Treaty 7 territory of the Blackfoot confederacy. This includes the Siksika, Kainai, Piikani as well as the Stoney Nakoda and Tsuut’ina nations. We further acknowledge that we are also home to many Métis communities and Region 3 of the Métis Nation. We conclude with honoring the city of Calgary’s Indigenous roots, traditionally known as “Moh’Kinsstis”. Contents Introduction - The purpose and power of language. Themes - Stigmatizing terminology, gender inclusive pronouns, person first language, correct terminology. -ISMS Ableism - discrimination in favour of able-bodied people. Ageism - discrimination on the basis of a person’s age. Heterosexism - discrimination in favour of opposite-sex sexuality and relationships. Racism - discrimination directed against someone of a different race based on the belief that one’s own race is superior. Classism - discrimination against or in favour of people belonging to a particular social class. Sexism - discrimination on the basis of sex. Acknowledgements Language is one of the most powerful tools that keeps us connected with one another. Introduction The words that we use open up a world of possibility and opportunity, one that allows us to express, share, and educate. Like many other things, language evolves over time, but sometimes this fluidity can also lead to miscommunication. This project was started by a group of diverse individuals that share a passion for inclusion and justice. -

Asians in Minnesota Oral History Project Minnesota Historical Society

Isabel Suzanne Joe Wong Narrator Sarah Mason Interviewer June 8, 1982 July 13, 1982 Minneapolis, Minnesota Sarah Mason -SM Isabel Suzanne Joe Wong -IW SM: I’m talking to Isie Wong in Minneapolis on June 8, 1982. And this is an interview conducted for the Minnesota Historical Society by Sarah Mason. Can we just begin with your parents and your family then? IW: Oh, okay. What I know about my family is basically . . . my family’s history is basically what I was told by my father and by my mother. So, you know, that is just from them. SM: Yes. IW: My father was born in Canton of a family of nine children, and he was the last one. He was the baby. And apparently they had some money because they were able to raise . . . I think it was four girls and the five boys. SM: Oh. IW: My father’s mother died when he was about eight years old. And the father . . . I don’t know if I should say this, was a . . . he was . . . he was addicted to opium as all men of that time were, you know. I mean, men of money were able to smoke opium. SM: Oh. Yes. IW: And so, little by little, he would sell off his son and his children to, you know, maintain that habit. SM: Yes. IW: The mother’s dying words were, “Don’t ever sell my youngest son.” But the father just was so drugged by opium that he . . . eventually, he did sell my father. -

Chinese at Home : Or, the Man of Tong and His Land

THE CHINESE AT HOME J. DYER BALL M.R.A.S. Digitized by the Internet Archive in 2016 https://archive.org/details/chineseathomeorm00ball_0 THE CHINESE AT HOME OR THE MAN OF TONG AND HIS LAND By J. DYER BALL, I.S.O., M.R.A.S., M. CHINA BR.R.A.S., ETC. Hong-Kong Civil Service (retired) AUTHOR OF “THINGS CHINESE,” “THE CELESTIAL AND HIS RELIGION” FLEMING H. REVELL COMPANY NEW YORK. CHICAGO. TORONTO 1912 CONTENTS PREFACE I. THE MIDDLE KINGDOM II. THE BLACK-HAIRED RACE III. THE LIFE OF A DEAD CHINAMAN IV. “WIND AND WATER,” OR FUNG-SHUI V. THE MUCH-MARRIED CHINAMAN VI. JOHN CHINAMAN ABROAD VII. JOHN CHINAMAN’S LITTLE ONES VIII. THE PAST OF JOHN CHINAMAN IX. THE MANDARIN X. LAW AND ORDER XI. THE DIVERSE TONGUES OF JOHN CHINAMAN XII. THE DRUG: FOREIGN DIRT XIII. WHAT JOHN CHINAMAN EATS AND DRINKS XIV. JOHN CHINAMAN’S DOCTORS XV. WHAT JOHN CHINAMAN READS XVI. JOHN CHINAMAN AFLOAT XVII. HOW JOHN CHINAMAN TRAVELS ON LAND XVIII. HOW JOHN CHINAMAN DRESSES XIX. THE CARE OF THE MINUTE XX. THE YELLOW PERIL XXI. JOHN CHINAMAN AT SCHOOL XXII. JOHN CHINAMAN OUT OF DOORS XXIII. JOHN CHINAMAN INDOORS XXIV. -

Race and Cricket: the West Indies and England At

RACE AND CRICKET: THE WEST INDIES AND ENGLAND AT LORD’S, 1963 by HAROLD RICHARD HERBERT HARRIS Presented to the Faculty of the Graduate School of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of DOCTOR OF PHILOSOPHY THE UNIVERSITY OF TEXAS AT ARLINGTON August 2011 Copyright © by Harold Harris 2011 All Rights Reserved To Romelee, Chamie and Audie ACKNOWLEDGEMENTS My journey began in Antigua, West Indies where I played cricket as a boy on the small acreage owned by my family. I played the game in Elementary and Secondary School, and represented The Leeward Islands’ Teachers’ Training College on its cricket team in contests against various clubs from 1964 to 1966. My playing days ended after I moved away from St Catharines, Ontario, Canada, where I represented Ridley Cricket Club against teams as distant as 100 miles away. The faculty at the University of Texas at Arlington has been a source of inspiration to me during my tenure there. Alusine Jalloh, my Dissertation Committee Chairman, challenged me to look beyond my pre-set Master’s Degree horizon during our initial conversation in 2000. He has been inspirational, conscientious and instructive; qualities that helped set a pattern for my own discipline. I am particularly indebted to him for his unwavering support which was indispensable to the inclusion of a chapter, which I authored, in The United States and West Africa: Interactions and Relations , which was published in 2008; and I am very grateful to Stephen Reinhardt for suggesting the sport of cricket as an area of study for my dissertation. -

The US and the World: Chinese Immigrants

The U.S. and the World: Chinese Immigrants Instructions This module will introduce the history of Chinese immigrants in the nineteenth century. It will also show one of the ways that the U.S. was (and is) connected to the rest of the world. Begin by reading the article “For Further Information.” This will provide you with information about the immigrants, the world they came from and their lives in the United States. A crucial part of this history is the hostility they encountered and the eventual legislation that stopped almost all Chinese immigration. The four documents, which you should read next, show both the hostility toward the Chinese and the viewpoints of two of their defenders. When you have read the article and the documents, take the quiz and participate in the discussion. For Further Information: Chinese Immigrants to America, 1849-1882 In January 1848, a workman at a mill east of what became Sacramento, California discovered gold. News of this discovery spread rapidly over most of the world, and gold seekers from many nations flooded into California. Among the immigrants were thousands of Chinese farmers from the Pearl River delta in southeastern China. By 1851, 25,000 had arrived in California, where they made up one quarter of the total population. Most of the immigrants were young men from farming families in Guangdong province. This area was located close to the port city of Guangzhou, known in the West as Canton. For many years it had been the center of foreign trade in China and until the first Opium War (1839-42) was the only port that allowed Westerners (from the United States, Britain, and other European countries) to enter China. -

From Working Arm to Wetback: the Mexican Worker and American National Identity, 1942-1964

Dominican Scholar Graduate Master's Theses, Capstones, and Culminating Projects Student Scholarship 1-2009 From Working Arm to Wetback: The Mexican Worker and American National Identity, 1942-1964 Mark Brinkman Dominican University of California https://doi.org/10.33015/dominican.edu/2009.hum.01 Survey: Let us know how this paper benefits you. Recommended Citation Brinkman, Mark, "From Working Arm to Wetback: The Mexican Worker and American National Identity, 1942-1964" (2009). Graduate Master's Theses, Capstones, and Culminating Projects. 67. https://doi.org/10.33015/dominican.edu/2009.hum.01 This Master's Thesis is brought to you for free and open access by the Student Scholarship at Dominican Scholar. It has been accepted for inclusion in Graduate Master's Theses, Capstones, and Culminating Projects by an authorized administrator of Dominican Scholar. For more information, please contact [email protected]. FROM WORKING ARM TO WETBACK: THE MEXICAN WORKER AND AMERICAN NATIONAL IDENTITY, 1942-1964 A thesis submitted to the faculty of Dominican University in partial fulfillment of the requirements for the Master of Arts in Humanities by Mark Brinkman San Rafael, California January 12, 2009 © Copyright 2009 - by Mark Brinkman All rights reserved Thesis Certification THESIS: FROM WORKING ARM TO WETBACK: THE MEXICAN WORKER AND AMERICAN NATIONAL IDENTITY, 1942-1964 AUTHOR: Mark Brinkman APPROVED: Martin Anderson, PhD Primary Reader Christian Dean, PhD Secondary Reader Abstract This thesis explores America’s treatment of the Mexican worker in the United States between 1942 and 1964, the years in which an international guest worker agreement between the United States and Mexico informally known as the Bracero Program was in place, and one in which heightened fears of illegal immigration resulted in Operation Wetback, one of the largest deportation programs in U.S. -

Aow 1516 13 Redskins

1. Mark your confusion. 2. Show evidence of a close reading. 3. Write a 1+ page reflection. California Becomes First State to Ban 'Redskins' Nickname Source: M. Alex Johnson, Time.com, October 11, 2015 California became the first state to ban schools from using the "Redskins" team name or mascot Sunday, a move the National Congress of American Indians said should be a "shining example" for the rest of the country. The law, which Gov. Jerry Brown signed Sunday morning, goes into effect Jan. 1, 2017. It's believed to affect only four public schools using the mascot, which many Indian groups and activists find offensive, but its impact is significant symbolically — California has the largest enrollment of public school students in the nation, according to the National Center for Education Statistics. The state Assembly overwhelmingly approved the California Racial Mascots Act in May, about a month before the Obama administration went on record telling the Washington Redskins that they would have to change their name before they would be allowed to move to a stadium in Washington, D.C., from their current home in suburban Maryland. In a joint statement with the nonprofit group Change the Mascot, the National Congress of American Indians praised California for "standing on the right side of history by bringing an end to the use of the demeaning and damaging R-word slur in the state's schools." "They have set a shining example for other states across the country, and for the next generation, by demonstrating a commitment to the American ideals of inclusion and mutual respect," it said. -

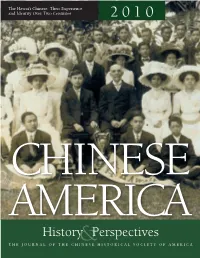

CHSA HP2010.Pdf

The Hawai‘i Chinese: Their Experience and Identity Over Two Centuries 2010 Chinese America: History & Perspectives, The Journal of the Chinese Historical Society of America 2010 Special Issue: The Hawai‘i Chinese Chinese Historical Society of America with UCLA Asian American Studies Center Chinese Historical Society of America Museum & Learning Center 965 Clay Street San Francisco, California 94108 chsa.org Copyright © 2010 Chinese Historical Society of America. All rights reserved. Copyright of individual articles remains with the author(s). Design by Side By Side Studios, San Francisco. Permission is granted for reproducing up to fifty copies of any one article for Educational Use as defined by the Digital Millennium Copyright Act. To order additional copies or inquire about large-order discounts, see order form at back or email [email protected]. Articles appearing in this journal are indexed in Historical Abstracts and America: History and Life. About the cover image: Hawai‘i Chinese Student Alliance. Courtesy of Douglas D. L. Chong. Contents Preface Franklin Ng Introduction: The Hawai‘i Chinese: Their Experience and Identity over Two Centuries David Y. H. Wu and Harry J. Lamley Hawai‘i’s Nam Long: Their Background and Identity as a Zhongshan Subgroup Douglas D.