A New Method for Calculating Luminous Flux Using High Dynamic Range Imaging and Its Applications

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Glossary Physics (I-Introduction)

Glossary Physics (I-Introduction) - Efficiency: The percentage of the work put into a machine that is converted into useful work output; $\eta$ = work done / energy used [-]. In machines: the work output of any machine cannot exceed the work input ($\eta \le 100\%$); in an ideal machine, where no energy is transformed into heat, work(input) = work(output) and $\eta = 100\%$. Energy: The property of a system that enables it to do work. Conservation of Energy: Energy cannot be created or destroyed; it may be transformed from one form into another, but the total amount of energy never changes. Equilibrium: The state of an object when not acted upon by a net force or net torque; an object in equilibrium may be at rest or moving at uniform velocity, not accelerating. Mechanical Equilibrium: The state of an object or system of objects for which all impressed forces cancel to zero and no acceleration occurs. Dynamic Equilibrium: The object is moving without experiencing acceleration. Static Equilibrium: The object is at rest. Force: The influence that can cause an object to be accelerated or retarded; the acceleration is always in the direction of the net force, hence force is a vector quantity. The four elementary forces are: Electromagnetic Force: an attraction or repulsion between electric charges, $F = \frac{1}{4\pi\varepsilon_0}\,\frac{q_1 q_2}{d^2}$, with units [(C·C/m²)(N·m²/C²)] = [N]. Gravitational Force: a mutual attraction between all masses, $F = G\,\frac{mM}{d^2}$, with units [(N·m²/kg²)(kg·kg)(1/m²)] = [N]. Strong Force (nuclear force): acts within the nuclei of atoms, $F = \frac{1}{4\pi\varepsilon_0}\,\frac{e^2}{d^2}$, with units [(C·C/m²)(N·m²/C²)] = [N]. Weak Force: manifests itself in special reactions among elementary particles, such as the reactions that occur in radioactive decay. Symbols: $G$, gravitational constant, 6.672E-11 [N·m²/kg²]; $d$, distance [m]; $m$, $M$, mass [kg]; $q$, charge [As] = [C]; $\varepsilon_0$, dielectric constant, 8.854E-12 [C²/(N·m²)] = [F/m]; $\pi$, 3.14 [-]; $e$, 1.60210E-19 [As] = [C]. -

Lecture 3: the Sensor

4.430 Daylighting. Massachusetts Institute of Technology, Department of Architecture, Building Technology Program. Christoph Reinhart. 4.430 The Sensor. [Figure: human eye, 'HDR the old fashioned way' (Niemasz).] Happy Valentine's Day: sun shining on a praline box on February 14th at 9.30 AM in Boston. [Figure: false-color luminance map.] Light and Human Vision. Human Eye: [Figures: outside view of a human eye; ophthalmogram of a human retina.] The retina has three types of photoreceptors: cones, rods and ganglion cells. Day and Night Vision. Photopic (daytime vision): the cones of the eye are of three different types representing the three primary colors, red, green and blue (> 3 cd/m²). Scotopic (night vision): the rods are responsible for night and peripheral vision (< 0.001 cd/m²). Mesopic (dim light vision): occurs when the light levels are low but one can still see color (between 0.001 and 3 cd/m²). Visible Range (Daylighting Handbook, Reinhart): The human eye can see across twelve orders of magnitude. We can adapt to about 10 orders of magnitude at a time via the iris. Larger ranges take time and require 'neural adaptation'. Transition Spaces: outside, atrium, circulation area, final destination. Luminous Response Curve of the Human Eye. What is daylight? Daylight is the visible part of the electromagnetic spectrum that lies between 380 and 780 nm. [Figure: spectrum from UV through blue, green, yellow, orange and red to IR; axis: wavelength (nm), 380 to 750.] Photometric Quantities: characterize how a space is perceived. Illuminance, Luminous Flux, Luminance, Luminous Intensity. Luminous Intensity [Candela]: ~ 1 candela. Courtesy of Matthew Bowden at www.digitallyrefreshing.com. -
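
The three vision regimes in this excerpt are separated by two luminance thresholds (3 cd/m² and 0.001 cd/m²). A minimal Python sketch of that classification follows. The function name `vision_regime` and the constant names are hypothetical, and treating the boundary values themselves as mesopic is an assumption, since the excerpt does not say which regime the exact thresholds belong to.

```python
# Thresholds taken from the lecture excerpt (luminance in cd/m^2).
PHOTOPIC_MIN_CD_M2 = 3.0      # above this: daytime (cone) vision
SCOTOPIC_MAX_CD_M2 = 0.001    # below this: night (rod) vision


def vision_regime(luminance_cd_m2: float) -> str:
    """Return 'photopic', 'mesopic' or 'scotopic' for a given luminance.

    Assumption: values exactly equal to a threshold count as mesopic.
    """
    if luminance_cd_m2 < 0:
        raise ValueError("luminance cannot be negative")
    if luminance_cd_m2 > PHOTOPIC_MIN_CD_M2:
        return "photopic"
    if luminance_cd_m2 < SCOTOPIC_MAX_CD_M2:
        return "scotopic"
    return "mesopic"


if __name__ == "__main__":
    for value in (100.0, 1.0, 0.0001):
        print(value, "cd/m^2 ->", vision_regime(value))
```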

Light and Illumination

Chapter 33 - Light and Illumination. A PowerPoint Presentation by Paul E. Tippens, Professor of Physics, Southern Polytechnic State University © 2007. Objectives: After completing this module, you should be able to: • Define light, discuss its properties, and give the range of wavelengths for visible spectrum. • Apply the relationship between frequencies and wavelengths for optical waves. • Define and apply the concepts of luminous flux, luminous intensity, and illumination. • Solve problems similar to those presented in this module. A Beginning Definition: All objects are emitting and absorbing EM radiation. Consider a poker placed in a fire. As heating occurs, the emitted EM waves have higher energy and eventually become visible. First red ... then white. Light may be defined -

Black Body Radiation and Radiometric Parameters

Black Body Radiation and Radiometric Parameters: All materials absorb and emit radiation to some extent. A blackbody (BB) is an idealization of how materials emit and absorb radiation. It can be used as a reference for real source properties. An ideal blackbody absorbs all incident radiation and does not reflect. This is true at all wavelengths and angles of incidence. Thermodynamic principles dictate that the BB must also radiate at all λ's and angles. The basic properties of a BB can be summarized as: 1. Perfect absorber/emitter at all λ's and angles of emission/incidence. 2. The total radiant energy emitted is only a function of the BB temperature. 3. Emits the maximum possible radiant energy from a body at a given temperature. 4. The BB radiation field does not depend on the shape of the cavity. The radiation field must be homogeneous and isotropic. [Figure: cavity BB at temperature T.] If the radiation going from a BB of one shape to another (both at the same T) were different, it would cause a cooling or heating of one or the other cavity. This would violate the 1st Law of Thermodynamics. [Figure: two cavities A and B, both at temperature T.] Radiometric Parameters: 1. Solid Angle: $d\Omega = \frac{dA}{r^2}$, where $dA$ is the surface area of a segment of a sphere surrounding a point and $r$ is the distance from the point on the source to the sphere. The solid angle looks like a cone with a spherical cap. [Figure: an element of area of a sphere in spherical coordinates $(r, \theta, \phi)$.] The element of area is $dA = r^2 \sin\theta \, d\theta \, d\phi$. Therefore $d\Omega = \sin\theta \, d\theta \, d\phi$. The full solid angle surrounding a point source is $\Omega = \int_0^{2\pi} d\phi \int_0^{\pi} \sin\theta \, d\theta = 2\pi \left[ -\cos\theta \right]_0^{\pi} = 4\pi$. Or, integrating to other angles $\theta < \pi$: $\Omega = 2\pi (1 - \cos\theta)$. The unit of solid angle is the steradian. -
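
The solid-angle result in this excerpt, Ω = 2π(1 − cos θ) for a cone of half-angle θ (and 4π for the full sphere), can be checked numerically. The Python sketch below compares the closed form with a direct midpoint-rule integration of sin θ dθ dφ. The function names are hypothetical and the step count is an arbitrary choice for illustration.

```python
import math


def cone_solid_angle(half_angle_rad: float) -> float:
    """Closed form: solid angle in steradians of a cone with this half-angle."""
    return 2.0 * math.pi * (1.0 - math.cos(half_angle_rad))


def cone_solid_angle_numeric(half_angle_rad: float, steps: int = 100_000) -> float:
    """Midpoint-rule integral of sin(theta) d(theta), times 2*pi for d(phi)."""
    d_theta = half_angle_rad / steps
    polar_integral = sum(
        math.sin((i + 0.5) * d_theta) for i in range(steps)
    ) * d_theta
    return 2.0 * math.pi * polar_integral


if __name__ == "__main__":
    # theta = pi covers the full sphere, theta = pi/2 a hemisphere.
    print("full sphere:", cone_solid_angle(math.pi), "sr")
    print("hemisphere: ", cone_solid_angle(math.pi / 2), "sr")
    print("numeric, 60 degree half-angle:", cone_solid_angle_numeric(math.pi / 3), "sr")
```

The closed form is what one would normally use; the numerical version is only there to show that it agrees with the integral it was derived from.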

Multidisciplinary Design Project Engineering Dictionary Version 0.0.2

Multidisciplinary Design Project Engineering Dictionary, Version 0.0.2, February 15, 2006. DRAFT. Cambridge-MIT Institute Multidisciplinary Design Project. This Dictionary/Glossary of Engineering terms has been compiled to complement the work developed as part of the Multi-disciplinary Design Project (MDP), which is a programme to develop teaching material and kits to aid the running of mechatronics projects in Universities and Schools. The project is being carried out with support from the Cambridge-MIT Institute undergraduate teaching programme. For more information about the project please visit the MDP website at http://www-mdp.eng.cam.ac.uk or contact: Dr. Peter Long, Cambridge University Engineering Department, Trumpington Street, Cambridge, CB2 1PZ, e-mail: [email protected], tel: +44 (0) 1223 332779; or Prof. Alex Slocum, Massachusetts Institute of Technology, 77 Massachusetts Ave., Cambridge MA 02139-4307, USA, e-mail: [email protected], tel: +1 617 253 0012. For information about the CMI initiative please see the Cambridge-MIT Institute website: http://www.cambridge-mit.org. CMI, University of Cambridge, 10 Miller's Yard, Mill Lane, Cambridge, CB2 1RQ, tel: +44 (0) 1223 327207, fax: +44 (0) 1223 765891. CMI, Massachusetts Institute of Technology, 77 Massachusetts Ave., Cambridge MA 02139-4307, USA, tel. +1 617 253 7732, fax. +1 617 258 8539. CMI-MDP Programme. 1 Introduction: This dictionary/glossary has not been developed as a definitive work but as a useful reference book for engineering students to search when looking for the meaning of a word/phrase. It has been compiled from a number of existing glossaries together with a number of local additions. -

Spectral Light Measuring

Spectral Light Measuring. Precision: GOSSEN Foto- und Lichtmesstechnik, Your Guarantee for Precision and Quality. GOSSEN Foto- und Lichtmesstechnik is specialized in the measurement of light, and has decades of experience in its chosen field. Continuous innovation is the answer to rapidly changing technologies, regulations and markets. Outstanding product quality is assured by means of a certified quality management system in accordance with ISO 9001. The GOSSEN Light Lab offers calibration services, for our own products, as well as for products from other manufacturers, and issues factory calibration certificates. The optical table used for this purpose is subject to strict test equipment monitoring, and is traced back to the PTB in Braunschweig, Germany (German Federal Institute of Physics and Metrology). Aside from the PTB, our lab is the first in Germany to be accredited for illuminance by DAkkS (German accreditation authority), and is thus authorized to issue internationally recognized DAkkS calibration certificates. This assures that acquired measured values comply with official regulations and, as a rule, stand up to legal argumentation. LED - Light of the Future: LED technology has experienced rapid growth in recent years thanks to the development of LEDs with very high light efficiency. This is being pushed by the ban on conventional light bulbs with low energy efficiency, as well as an ever increasing energy-saving mentality and environmental awareness. LEDs have long since gone beyond their previous status as effects lighting and are being used for display illumination, LED displays and lamps. Modern means of transportation, signal systems and street lights, as well as indoor and outdoor lighting, are no longer conceivable without them. The brightness and color of LEDs vary due to manufacturing processes, for which reason they -

STHF-R Ultra High Flux Gamma Probe Data Sheet

STHF-R™ Ultra High Flux Gamma Probe. Features: ■ Measurement of H*(10) ambient gamma dose equivalent rate up to 1000 Sv/h (100 000 R/h) ■ To be connected to Radiagem™, MIP 10 Digital™ or Avior® meters ■ Waterproof: 80 m (262.5 ft) water depth ■ Detector: Silicon diode ■ 5 kSv maximum integrated dose ■ Compact portable design for detector and detector cable on reel. Description: The STHF-R ultra high flux probe is designed for the measurement of very high gamma dose-equivalent rates up to 1000 Sv/h. This probe is especially designed for ultra high flux measurement, which can be found in pools in nuclear power plants or in recycling facilities. The probe's box is stainless steel based and waterproof up to 80 m (262.5 ft) underwater. It can be laid underwater in the borated water of storage pools. An optional ballast weight can be supplied to ease underwater measurements. The STHF-R probe is composed of two matched units: ■ The measurement probe including the silicon diode and the associated analog electronics. ■ An interface case which houses the processing electronics that are more sensitive to radiation; this module can be remotely located up to 50 m (164 ft) from the measurement spot. ■ An intermediate connection point with 50 m length cable to which the interface case can be connected. The STHF-R probe can be connected directly to Avior, Radiagem or MIP 10 Digital meters. The STHF-R unit receives power from the survey meter during operation. STHF-R instruments include key components of hardware circuitry (high voltage power supply, amplifier, discriminator, etc.). -

LFC-050-LE Light Flux Color and Luminous Efficacy Measurement Systems Why Choose Lightfluxcolor

LFC-050-LE Light Flux Color and Luminous Efficacy Measurement Systems. The most economical and reliable solution for photometry of light source needs! Ideal for flux, color and AC/DC electrical characterization of: automotive lamps, traffic lighting, LED clusters, architectural lighting, LED bulbs, railway lighting. LightFluxColor-LE Series Systems are the most affordable and reliable systems for testing LED lighting products. Whether you are a manufacturer of LED luminaires, street lights, solar powered LED lanterns, LED bulbs, or any other type of LED lighting product, LightFluxColor Systems will meet all your testing requirements. LightFluxColor Systems allow luminaire manufacturers to test LED products for photometric performance. LightFluxColor-LE Series Systems also include a highly sensitive mini-calibrated CCD Array Spectrometer with spectral range from 250 to 850 nm. This low noise and broad spectral response spectrometer provides instantaneous measurement of radiometric, photometric, and color characteristics of the LED sources. LightFluxColor-LE Series Systems allow users to test AC/DC characterization of lamps at various input frequencies along with lumens and color parameters. The fast results from the spectrometer help to increase the rate of product development, Why Choose LightFluxColor: • Calibrations are traceable to NIST (USA) which are accepted and recognized globally. • Calibrated lamp standards, NVLAP accreditation Lab Code 200951-0 (ISO 17025). • Spectral flux standards (calibration performed at each wavelength) are supplied with each system for highest possible accuracy. • Single software controls all electronics and provides optical and electrical data. • Competitive systems only provide luminous flux standards with CCT calibration, which limits overall system accuracy. • AC/DC operation in one packaged testing system. • An auxiliary lamp is provided for -

Radiometry of Light Emitting Diodes Table of Contents

TECHNICAL GUIDE: THE RADIOMETRY OF LIGHT EMITTING DIODES. TABLE OF CONTENTS: 1.0 Introduction; 2.0 What is an LED?; 2.1 Device Physics and Package Design; 2.2 Electrical Properties; 2.2.1 Operation at Constant Current; 2.2.2 Modulated or Multiplexed Operation; 2.2.3 Single-Shot Operation; 3.0 Optical Characteristics of LEDs; 3.1 Spectral Properties of Light Emitting Diodes; 3.2 Comparison of Photometers and Spectroradiometers; 3.3 Color and Dominant Wavelength; 3.4 Influence of Temperature on Radiation; 4.0 Radiometric and Photopic Measurements; 4.1 Luminous and Radiant Intensity; 4.2 CIE 127; 4.3 Spatial Distribution Characteristics; 4.4 Luminous Flux and Radiant Flux; 5.0 Terminology; 5.1 Radiometric Quantities; 5.2 Photometric Quantities; 6.0 References. 1.0 INTRODUCTION: Almost everyone is familiar with light-emitting diodes (LEDs) from their use as indicator lights and numeric displays on consumer electronic devices. The low output and lack of color options of LEDs limited the technology to these uses for some time. New LED materials and improved production processes have produced bright LEDs in colors throughout the visible spectrum, including white light. With efficacies greater than incandescent (and approaching that of fluorescent lamps) along with their durability, small size, and light weight, LEDs are finding their way into many new applications within the lighting community. These new applications have placed increasingly stringent demands on the optical characterization of LEDs, which serves as the fundamental baseline for product quality and product design. -

• Flux and Luminosity • Brightness of Stars • Spectrum of Light • Temperature and Color/Spectrum • How the Eye Sees Color

Stars • Flux and luminosity • Brightness of stars • Spectrum of light • Temperature and color/spectrum • How the eye sees color. Which of these parts of the Sun is the coolest? A) Core B) Radiative zone C) Convective zone D) Photosphere E) Chromosphere. Flux and luminosity • Luminosity: A star produces light; the total amount of energy that a star puts out as light each second is called its Luminosity. • Flux: If we have a light detector (eye, camera, telescope) we can measure the light produced by the star; the total amount of energy intercepted by the detector divided by the area of the detector is called the Flux. Flux and luminosity • To find the luminosity, we take a shell which completely encloses the star and measure all the light passing through the shell. • To find the flux, we take our detector at some particular distance from the star and measure the light passing only through the detector. How bright a star looks to us is determined by its flux, not its luminosity. Brightness = Flux. Flux and luminosity • Flux decreases as we get farther from the star, like 1/distance². • Mathematically, if we have two stars A and B: $\frac{\text{Flux}_A}{\text{Flux}_B} = \frac{\text{Luminosity}_A}{\text{Luminosity}_B} \left( \frac{\text{Distance}_B}{\text{Distance}_A} \right)^2$. Distance-Luminosity relation: Which star appears brighter to the observer? [Figure: Star A has luminosity 2L at distance 2d; Star B has luminosity L at distance d.] Here $\frac{\text{Luminosity}_A}{\text{Luminosity}_B} = 2$ and $\frac{\text{Distance}_B}{\text{Distance}_A} = \frac{1}{2}$, so $\frac{\text{Flux}_A}{\text{Flux}_B} = 2 \left( \frac{1}{2} \right)^2 = 2 \cdot \frac{1}{4} = \frac{1}{2}$, that is, $\text{Flux}_B = 2 \times \text{Flux}_A$. Brightness of stars • Ptolemy (150 A.D.) grouped stars into 6 'magnitude' groups according to how bright they looked to his eye. -
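
The two-star comparison in this excerpt (flux ratio equals the luminosity ratio times the inverse distance ratio squared) is easy to reproduce in a few lines. The Python sketch below uses the excerpt's example of Star A with luminosity 2L at distance 2d and Star B with luminosity L at distance d. The function name `flux_ratio` is hypothetical, and the luminosities and distances are given in arbitrary units because only their ratios matter.

```python
def flux_ratio(lum_a: float, lum_b: float, dist_a: float, dist_b: float) -> float:
    """Return Flux_A / Flux_B for two stars seen by the same observer.

    Uses Flux_A / Flux_B = (L_A / L_B) * (d_B / d_A)**2, so the units of
    luminosity and distance cancel as long as both stars use the same ones.
    """
    if min(lum_a, lum_b, dist_a, dist_b) <= 0:
        raise ValueError("luminosities and distances must be positive")
    return (lum_a / lum_b) * (dist_b / dist_a) ** 2


if __name__ == "__main__":
    # Star A: luminosity 2L at distance 2d. Star B: luminosity L at distance d.
    ratio = flux_ratio(lum_a=2.0, lum_b=1.0, dist_a=2.0, dist_b=1.0)
    brighter = "A" if ratio > 1 else "B"
    print("Flux_A / Flux_B =", ratio)   # 0.5, so Star B appears twice as bright
    print("Brighter star:", brighter)
```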

Radiometric and Photometric Measurements with TAOS Photosensors Contributed by Todd Bishop March 12, 2007

TAOS Inc. is now ams AG. The technical content of this TAOS application note is still valid. Contact information: Headquarters: ams AG, Tobelbaderstrasse 30, 8141 Unterpremstaetten, Austria. Tel: +43 (0) 3136 500 0. e-Mail: [email protected]. Please visit our website at www.ams.com. NUMBER 21, INTELLIGENT OPTO SENSOR DESIGNER'S NOTEBOOK: Radiometric and Photometric Measurements with TAOS PhotoSensors, contributed by Todd Bishop, March 12, 2007. ABSTRACT: Light sensing applications use two measurement systems: radiometric and photometric. Radiometric measurements deal with light as a power level, while photometric measurements deal with light as it is interpreted by the human eye. Both systems of measurement have units that are parallel to each other, but are useful for different applications. This paper will discuss the differences and how they can be measured. RADIOMETRIC QUANTITIES: Radiometry is the measurement of electromagnetic energy in the range of wavelengths between ~10 nm and ~1 mm. These regions are commonly called the ultraviolet, the visible and the infrared. Radiometry deals with light (radiant energy) in terms of optical power. Key quantities from a light detection point of view are radiant energy, radiant flux and irradiance.

SI Radiometry Units

| Quantity | Symbol | SI unit | Abbr. | Notes |
| --- | --- | --- | --- | --- |
| Radiant energy | Q | joule | J | energy |
| Radiant flux | Φ | watt | W | radiant energy per unit time |
| Irradiance | E | watt per square meter | W·m⁻² | power incident on a surface |

Energy is measured in joules (J), an SI derived unit. The recommended symbol for energy is Q. Power (radiant flux) is measured in another SI derived unit: it is the derivative of energy with respect to time, dQ/dt, and the unit is the watt (W). -

Use and Measurement of Mass Flux and Mass Discharge

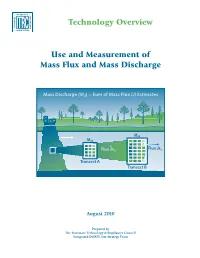

Technology Overview: Use and Measurement of Mass Flux and Mass Discharge. [Cover figure: Mass Discharge (Md) = Sum of Mass Flux (J) Estimates, showing flux estimates J_{i,j} across Transect A and Transect B with mass discharges Md_A and Md_B.] August 2010. Prepared by The Interstate Technology & Regulatory Council Integrated DNAPL Site Strategy Team. ABOUT ITRC: Established in 1995, the Interstate Technology & Regulatory Council (ITRC) is a state-led, national coalition of personnel from the environmental regulatory agencies of all 50 states and the District of Columbia, three federal agencies, tribes, and public and industry stakeholders. The organization is devoted to reducing barriers to, and speeding interstate deployment of, better, more cost-effective, innovative environmental techniques. ITRC operates as a committee of the Environmental Research Institute of the States (ERIS), a Section 501(c)(3) public charity that supports the Environmental Council of the States (ECOS) through its educational and research activities aimed at improving the environment in the United States and providing a forum for state environmental policy makers. More information about ITRC and its available products and services can be found on the Internet at www.itrcweb.org. DISCLAIMER: ITRC documents and training are products designed to help regulators and others develop a consistent approach to their evaluation, regulatory approval, and deployment of specific technologies at specific sites. Although the information in all ITRC products is believed to be reliable and accurate, the product and all material set forth within are provided without warranties of any kind, either express or implied, including but not limited to warranties of the accuracy or completeness of information contained in the product or the suitability of the information contained in the product for any particular purpose.