Lossless Text Compression Using GPT-2 Language Model and Huffman Coding

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Package 'Brotli'

Package ‘brotli’ May 13, 2018 Type Package Title A Compression Format Optimized for the Web Version 1.2 Description A lossless compressed data format that uses a combination of the LZ77 algorithm and Huffman coding. Brotli is similar in speed to deflate (gzip) but offers more dense compression. License MIT + file LICENSE URL https://tools.ietf.org/html/rfc7932 (spec) https://github.com/google/brotli#readme (upstream) http://github.com/jeroen/brotli#read (devel) BugReports http://github.com/jeroen/brotli/issues VignetteBuilder knitr, R.rsp Suggests spelling, knitr, R.rsp, microbenchmark, rmarkdown, ggplot2 RoxygenNote 6.0.1 Language en-US NeedsCompilation yes Author Jeroen Ooms [aut, cre] (<https://orcid.org/0000-0002-4035-0289>), Google, Inc [aut, cph] (Brotli C++ library) Maintainer Jeroen Ooms <[email protected]> Repository CRAN Date/Publication 2018-05-13 20:31:43 UTC R topics documented: brotli . .2 Index 4 1 2 brotli brotli Brotli Compression Description Brotli is a compression algorithm optimized for the web, in particular small text documents. Usage brotli_compress(buf, quality = 11, window = 22) brotli_decompress(buf) Arguments buf raw vector with data to compress/decompress quality value between 0 and 11 window log of window size Details Brotli decompression is at least as fast as for gzip while significantly improving the compression ratio. The price we pay is that compression is much slower than gzip. Brotli is therefore most effective for serving static content such as fonts and html pages. For binary (non-text) data, the compression ratio of Brotli usually does not beat bz2 or xz (lzma), however decompression for these algorithms is too slow for browsers in e.g. -

A Novel Coding Architecture for Multi-Line Lidar Point Clouds

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination. IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS 1 A Novel Coding Architecture for Multi-Line LiDAR Point Clouds Based on Clustering and Convolutional LSTM Network Xuebin Sun , Sukai Wang , Graduate Student Member, IEEE, and Ming Liu , Senior Member, IEEE Abstract— Light detection and ranging (LiDAR) plays an preservation of historical relics, 3D sensing for smart city, indispensable role in autonomous driving technologies, such as well as autonomous driving. Especially for autonomous as localization, map building, navigation and object avoidance. driving systems, LiDAR sensors play an indispensable role However, due to the vast amount of data, transmission and storage could become an important bottleneck. In this article, in a large number of key techniques, such as simultaneous we propose a novel compression architecture for multi-line localization and mapping (SLAM) [1], path planning [2], LiDAR point cloud sequences based on clustering and convolu- obstacle avoidance [3], and navigation. A point cloud consists tional long short-term memory (LSTM) networks. LiDAR point of a set of individual 3D points, in accordance with one or clouds are structured, which provides an opportunity to convert more attributes (color, reflectance, surface normal, etc). For the 3D data to 2D array, represented as range images. Thus, we cast the 3D point clouds compression as a range image instance, the Velodyne HDL-64E LiDAR sensor generates a sequence compression problem. Inspired by the high efficiency point cloud of up to 2.2 billion points per second, with a video coding (HEVC) algorithm, we design a novel compression range of up to 120 m. -

Overview of the ASPIRE Project June 12-16, 2017 - the Hague, NL

14th International Planetary Probes Workshop Overview of the ASPIRE Project June 12-16, 2017 - The Hague, NL. Clara O’Farrell, Ian Clark & Mark Adler Jet Propulsion Laboratory, California Institute of Technology © 2017 California Institute of Technology. Government Sponsorship Acknowledged. ASPIRE Disk-Gap-Band (DGB) Parachute Heritage MSL (2012) MER (2004) • Developed in the 60s & 70s Viking (1974) for Viking – High Altitude Testing – Wind Tunnel Testing – Low Altitude Drop • Successfully used on 5 Mars missions – Leveraged Viking development Viking BLDT Test MER Drop Test MSL Wind Tunnel Test Image credit: mars.nasa.gov June 12-16, 2017 14th International Planetary Probes Workshop 2 jpl.nasa.gov Aerospace in recent DGB designs: Clark & Tanner, IEEE Broadcloth stress Conference Paper 2466 (2017): (per unit length) Broadcloth ultimate load estimated by treating the disk as a pressure vessel: Disk diameter have been eroding jpl.nasa.gov 3 180 Actual Flight Load ASPIRE 160 Parachute Design Load Broadcloth Ultimate Load DGB Heritage & Design Margins Strength margins may 140 f b l 120 • 3 0 1 x , 100 d a o L e 80 t u h c a r 60 a P 40 parachutes well below those achieved in supersonic tests 20 stresses seen in subsonic testing may not bound the 14th International Planetary Probes Workshop -Densityringsail Supersonic0 Decelerators Project saw failures of two 9. 19 12 12 12 S V V V V P S O P M 1 . P ik ik ik ik at pi p ho S m 7 2 2 2 E i i i i h r p e L m m m m D n n n n f it o n M - g g g g in rt i 1 M M M M II A A I I d u x . -

Concepts and Approaches for Mars Exploration1

June 24, 2012 Concepts and Approaches for Mars Exploration1 ‐ Report of a Workshop at LPI, June 12‐14, 2012 – Stephen Mackwell2 (LPI) Michael Amato (NASA Goddard), Bobby Braun (Georgia Institute of Technology), Steve Clifford (LPI), John Connolly (NASA Johnson), Marcello Coradini (ESA), Bethany Ehlmann (Caltech), Vicky Hamilton (SwRI), John Karcz (NASA Ames), Chris McKay (NASA Ames), Michael Meyer (NASA HQ), Brian Mulac (NASA Marshall), Doug Stetson (SSECG), Dale Thomas (NASA Marshall), and Jorge Vago (ESA) Executive Summary Recent deep cuts in the budget for Mars exploration at NASA necessitate a reconsideration of the Mars robotic exploration program within NASA’s Science Mission Directorate (SMD), especially in light of overlapping requirements with future planning for human missions to the Mars environment. As part of that reconsideration, a workshop on “Concepts and Approaches for Mars Exploration” was held at the USRA Lunar and Planetary Institute in Houston, TX, on June 12‐14, 2012. Details of the meeting, including abstracts, video recordings of all sessions, and plenary presentations, can be found at http://www.lpi.usra.edu/meetings/marsconcepts2012/. Participation in the workshop included scientists, engineers, and graduate students from academia, NASA Centers, Federal Laboratories, industry, and international partner organizations. Attendance was limited to 185 participants in order to facilitate open discussion of the critical issues for Mars exploration in the coming decades. As 390 abstracts were submitted by individuals interested in participating in the workshop, the Workshop Planning Team carefully selected a subset of the abstracts for presentation based on their appropriateness to the workshop goals, and ensuring that a broad diverse suite of concepts and ideas was presented. -

Zlib Home Site

zlib Home Site http://zlib.net/ A Massively Spiffy Yet Delicately Unobtrusive Compression Library (Also Free, Not to Mention Unencumbered by Patents) (Not Related to the Linux zlibc Compressing File-I/O Library) Welcome to the zlib home page, web pages originally created by Greg Roelofs and maintained by Mark Adler . If this page seems suspiciously similar to the PNG Home Page , rest assured that the similarity is completely coincidental. No, really. zlib was written by Jean-loup Gailly (compression) and Mark Adler (decompression). Current release: zlib 1.2.6 January 29, 2012 Version 1.2.6 has many changes over 1.2.5, including these improvements: gzread() can now read a file that is being written concurrently gzgetc() is now a macro for increased speed Added a 'T' option to gzopen() for transparent writing (no compression) Added deflatePending() to return the amount of pending output Allow deflateSetDictionary() and inflateSetDictionary() at any time in raw mode deflatePrime() can now insert bits in the middle of the stream ./configure now creates a configure.log file with all of the results Added a ./configure --solo option to compile zlib with no dependency on any libraries Fixed a problem with large file support macros Fixed a bug in contrib/puff Many portability improvements You can also look at the complete Change Log . Version 1.2.5 fixes bugs in gzseek() and gzeof() that were present in version 1.2.4 (March 2010). All users are encouraged to upgrade immediately. Version 1.2.4 has many changes over 1.2.3, including these improvements: -

16.1 Digital “Modes”

Contents 16.1 Digital “Modes” 16.5 Networking Modes 16.1.1 Symbols, Baud, Bits and Bandwidth 16.5.1 OSI Networking Model 16.1.2 Error Detection and Correction 16.5.2 Connected and Connectionless 16.1.3 Data Representations Protocols 16.1.4 Compression Techniques 16.5.3 The Terminal Node Controller (TNC) 16.1.5 Compression vs. Encryption 16.5.4 PACTOR-I 16.2 Unstructured Digital Modes 16.5.5 PACTOR-II 16.2.1 Radioteletype (RTTY) 16.5.6 PACTOR-III 16.2.2 PSK31 16.5.7 G-TOR 16.2.3 MFSK16 16.5.8 CLOVER-II 16.2.4 DominoEX 16.5.9 CLOVER-2000 16.2.5 THROB 16.5.10 WINMOR 16.2.6 MT63 16.5.11 Packet Radio 16.2.7 Olivia 16.5.12 APRS 16.3 Fuzzy Modes 16.5.13 Winlink 2000 16.3.1 Facsimile (fax) 16.5.14 D-STAR 16.3.2 Slow-Scan TV (SSTV) 16.5.15 P25 16.3.3 Hellschreiber, Feld-Hell or Hell 16.6 Digital Mode Table 16.4 Structured Digital Modes 16.7 Glossary 16.4.1 FSK441 16.8 References and Bibliography 16.4.2 JT6M 16.4.3 JT65 16.4.4 WSPR 16.4.5 HF Digital Voice 16.4.6 ALE Chapter 16 — CD-ROM Content Supplemental Files • Table of digital mode characteristics (section 16.6) • ASCII and ITA2 code tables • Varicode tables for PSK31, MFSK16 and DominoEX • Tips for using FreeDV HF digital voice software by Mel Whitten, KØPFX Chapter 16 Digital Modes There is a broad array of digital modes to service various needs with more coming. -

Arthur C. Clarke 2001: a Space Odyssey

Volume 33, Issue 2 AIAAAIAA HoustonHouston SectionSection www.aiaa-houston.orgwww.aiaa-houston.org April 2008 Arthur C. Clarke 1917 - 2008 2001: A Space Odyssey - 40 Years Later Yesterday’s Tomorrow Artwork by Jon C. Rogers and Pat Rawlings AIAA Houston Horizons April 2008 Page 1 April 2008 T A B L E O F C O N T E N T S From the Acting Editor 3 HOUSTON Chair’s Corner 4 2001: A Space Odyssey - 40 Years Later: Yesterday’s Tomorrow 5 Horizons is a quarterly publication of the Houston section of the American Institute of Aeronautics and Astronautics. International Space Activities Committee (ISAC) 14 Arthur C. Clarke: A Prophet Vindicated by Gregory Benford 16 Acting Editor: Douglas Yazell [email protected] Book Review (Subject: Ellington Field in Houston) & Staying Informed 18 Assistant Editors: Scholarship & Annual Technical Symposium (ATS 2008) 19 Jon Berndt Dr. Rattaya Yalamanchili Lunch-and-Learn Summary: Mars Rovers by Dr. Mark Adler/JPL 20 Don Kulba Robert Beremand Dinner Meeting Summary: John Frassanito & Associates 21 Lunch & Learn: Sailing the Space Station with Zero-Propellant Guidance 22 AIAA Houston Section Executive Council Membership 23 Chair: Douglas Yazell Inaugural Space Center Lecture Series: Harrison Schmitt of Apollo 17 24 Chair-Elect: Chad Brinkley Past Chair: Dr. Jayant Ramakrishnan Yuri’s Night Houston by AAS, co-sponsored by AIAA Houston Section 26 Secretary: Sarah Shull Constellation Earth, Michel Bonavitacola, AAAF , Toulouse, France 27 Treasurer: Tim Propp Calendar 30 JJ Johnson Sean Carter Cranium Cruncher and a Pre-College Event: Engineer for a Day 31 Vice-Chair, Vice-Chair, Operations Branch Technical Branch Odds and Ends: EAA Houston Chapter 12, James C. -

Compresso: Efficient Compression of Segmentation Data for Connectomics

Compresso: Efficient Compression of Segmentation Data For Connectomics Brian Matejek, Daniel Haehn, Fritz Lekschas, Michael Mitzenmacher, Hanspeter Pfister Harvard University, Cambridge, MA 02138, USA bmatejek,haehn,lekschas,michaelm,[email protected] Abstract. Recent advances in segmentation methods for connectomics and biomedical imaging produce very large datasets with labels that assign object classes to image pixels. The resulting label volumes are bigger than the raw image data and need compression for efficient stor- age and transfer. General-purpose compression methods are less effective because the label data consists of large low-frequency regions with struc- tured boundaries unlike natural image data. We present Compresso, a new compression scheme for label data that outperforms existing ap- proaches by using a sliding window to exploit redundancy across border regions in 2D and 3D. We compare our method to existing compression schemes and provide a detailed evaluation on eleven biomedical and im- age segmentation datasets. Our method provides a factor of 600-2200x compression for label volumes, with running times suitable for practice. Keywords: compression, encoding, segmentation, connectomics 1 Introduction Connectomics|reconstructing the wiring diagram of a mammalian brain at nanometer resolution|results in datasets at the scale of petabytes [21,8]. Ma- chine learning methods find cell membranes and create cell body labelings for every neuron [18,12,14] (Fig. 1). These segmentations are stored as label volumes that are typically encoded in 32 bits or 64 bits per voxel to support labeling of millions of different nerve cells (neurons). Storing such data is expensive and transferring the data is slow. To cut costs and delays, we need compression methods to reduce data sizes. -

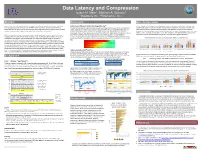

Data Latency and Compression

Data Latency and Compression Joseph M. Steim1, Edelvays N. Spassov2 1Quanterra Inc., 2Kinemetrics, Inc. Abstract DIscussion More Compression Because of interest in the capability of digital seismic data systems to provide low-latency data for “Early Compression and Latency related to Origin Queuing[5] Lossless Lempel-Ziv (LZ) compression methods (e.g. gzip, 7zip, and more recently “brotli” introduced by Warning” applications, we have examined the effect of data compression on the ability of systems to Plausible data compression methods reduce the volume (number of bits) required to represent each data Google for web-page compression) are often used for lossless compression of binary and text files. How deliver data with low latency, and on the efficiency of data storage and telemetry systems. Quanterra Q330 sample. Compression of data therefore always reduces the minimum required bandwidth (bits/sec) for does the best example of modern LZ methods compare with FDSN Level 1 and 2 for waveform data? systems are widely used in telemetered networks, and are considered in particular. transmission compared with transmission of uncompressed data. The volume required to transmit Segments of data representing up to one month of various levels of compressibility were compressed using compressed data is variable, depending on characteristics of the data. Higher levels of compression require Levels 1,2 and 3, and brotli using “quality” 1 and 6. The compression ratio (relative to the original 32 bits) Q330 data acquisition systems transmit data compressed in Steim2-format packets. Some studies have less minimum bandwidth and volume, and maximize the available unused (“free”) bandwidth for shows that in all cases the compression using L1,L2, or L3 is significantly greater than brotli. -

Parquet Data Format Performance

Parquet data format performance Jim Pivarski Princeton University { DIANA-HEP February 21, 2018 1 / 22 What is Parquet? 1974 HBOOK tabular rowwise FORTRAN first ntuples in HEP 1983 ZEBRA hierarchical rowwise FORTRAN event records in HEP 1989 PAW CWN tabular columnar FORTRAN faster ntuples in HEP 1995 ROOT hierarchical columnar C++ object persistence in HEP 2001 ProtoBuf hierarchical rowwise many Google's RPC protocol 2002 MonetDB tabular columnar database “first” columnar database 2005 C-Store tabular columnar database also early, became HP's Vertica 2007 Thrift hierarchical rowwise many Facebook's RPC protocol 2009 Avro hierarchical rowwise many Hadoop's object permanance and interchange format 2010 Dremel hierarchical columnar C++, Java Google's nested-object database (closed source), became BigQuery 2013 Parquet hierarchical columnar many open source object persistence, based on Google's Dremel paper 2016 Arrow hierarchical columnar many shared-memory object exchange 2 / 22 What is Parquet? 1974 HBOOK tabular rowwise FORTRAN first ntuples in HEP 1983 ZEBRA hierarchical rowwise FORTRAN event records in HEP 1989 PAW CWN tabular columnar FORTRAN faster ntuples in HEP 1995 ROOT hierarchical columnar C++ object persistence in HEP 2001 ProtoBuf hierarchical rowwise many Google's RPC protocol 2002 MonetDB tabular columnar database “first” columnar database 2005 C-Store tabular columnar database also early, became HP's Vertica 2007 Thrift hierarchical rowwise many Facebook's RPC protocol 2009 Avro hierarchical rowwise many Hadoop's object permanance and interchange format 2010 Dremel hierarchical columnar C++, Java Google's nested-object database (closed source), became BigQuery 2013 Parquet hierarchical columnar many open source object persistence, based on Google's Dremel paper 2016 Arrow hierarchical columnar many shared-memory object exchange 2 / 22 Developed independently to do the same thing Google Dremel authors claimed to be unaware of any precedents, so this is an example of convergent evolution. -

The Deep Learning Solutions on Lossless Compression Methods for Alleviating Data Load on Iot Nodes in Smart Cities

sensors Article The Deep Learning Solutions on Lossless Compression Methods for Alleviating Data Load on IoT Nodes in Smart Cities Ammar Nasif *, Zulaiha Ali Othman and Nor Samsiah Sani Center for Artificial Intelligence Technology (CAIT), Faculty of Information Science & Technology, University Kebangsaan Malaysia, Bangi 43600, Malaysia; [email protected] (Z.A.O.); [email protected] (N.S.S.) * Correspondence: [email protected] Abstract: Networking is crucial for smart city projects nowadays, as it offers an environment where people and things are connected. This paper presents a chronology of factors on the development of smart cities, including IoT technologies as network infrastructure. Increasing IoT nodes leads to increasing data flow, which is a potential source of failure for IoT networks. The biggest challenge of IoT networks is that the IoT may have insufficient memory to handle all transaction data within the IoT network. We aim in this paper to propose a potential compression method for reducing IoT network data traffic. Therefore, we investigate various lossless compression algorithms, such as entropy or dictionary-based algorithms, and general compression methods to determine which algorithm or method adheres to the IoT specifications. Furthermore, this study conducts compression experiments using entropy (Huffman, Adaptive Huffman) and Dictionary (LZ77, LZ78) as well as five different types of datasets of the IoT data traffic. Though the above algorithms can alleviate the IoT data traffic, adaptive Huffman gave the best compression algorithm. Therefore, in this paper, Citation: Nasif, A.; Othman, Z.A.; we aim to propose a conceptual compression method for IoT data traffic by improving an adaptive Sani, N.S. -

Key-Based Self-Driven Compression in Columnar Binary JSON

Otto von Guericke University of Magdeburg Department of Computer Science Master's Thesis Key-Based Self-Driven Compression in Columnar Binary JSON Author: Oskar Kirmis November 4, 2019 Advisors: Prof. Dr. rer. nat. habil. Gunter Saake M. Sc. Marcus Pinnecke Institute for Technical and Business Information Systems / Database Research Group Kirmis, Oskar: Key-Based Self-Driven Compression in Columnar Binary JSON Master's Thesis, Otto von Guericke University of Magdeburg, 2019 Abstract A large part of the data that is available today in organizations or publicly is provided in semi-structured form. To perform analytical tasks on these { mostly read-only { semi-structured datasets, Carbon archives were developed as a column-oriented storage format. Its main focus is to allow cache-efficient access to fields across records. As many semi-structured datasets mainly consist of string data and the denormalization introduces redundancy, a lot of storage space is required. However, in Carbon archives { besides a deduplication of strings { there is currently no compression implemented. The goal of this thesis is to discuss, implement and evaluate suitable compression tech- niques to reduce the amount of storage required and to speed up analytical queries on Carbon archives. Therefore, a compressor is implemented that can be configured to apply a combination of up to three different compression algorithms to the string data of Carbon archives. This compressor can be applied with a different configuration per column (per JSON object key). To find suitable combinations of compression algo- rithms for each column, one manual and two self-driven approaches are implemented and evaluated. On a set of ten publicly available semi-structured datasets of different kinds and sizes, the string data can be compressed down to about 53% on average, reducing the whole datasets' size by 20%.