I Came to Drop Bombs: Auditing the Compression Algorithm Weapons Cache

Recommended publications

Data Compression: Dictionary-Based Coding

Heiko Schwarz (Freie Universität Berlin), Data Compression: Dictionary-based Coding

[Figure: sliding window around the cursor. The search buffer (N symbols) holds the already coded symbols; the look-ahead buffer (L symbols) holds the symbols not yet coded.]

"We know the past but cannot control it. We control the future but..."

Last Lecture: Predictive Lossless Coding
• Simple and effective way to exploit dependencies between neighboring symbols / samples
• Optimal predictor: conditional mean (requires storage of large tables)

Affine and Linear Prediction
• Simple structure, low-complexity implementation possible
• Optimal prediction parameters are given by the solution of the Yule-Walker equations
• Works very well for real signals (e.g., audio, images, ...)

Efficient Lossless Coding for Real-World Signals
• Affine/linear prediction (often: block-adaptive choice of prediction parameters)
• Entropy coding of prediction errors (e.g., arithmetic coding)
• Using the marginal pmf often already yields good results
• Can be improved by using conditional pmfs (with simple conditions)

Coding of Text Files
• Very high amount of dependencies
• Affine prediction does not work (requires linear dependencies)
• Higher-order conditional coding should work well, but is way too complex (memory)
• Alternative: do not code single characters, but words or phrases

Example: English Texts
• The Oxford English Dictionary lists fewer than 230 000 words (including obsolete words)
• On average, a word contains about 6 characters
• Average codeword length per character would be limited by 1 ...
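The search buffer and look-ahead buffer mentioned in the excerpt above are the two halves of an LZ77-style sliding window. The following is a minimal sketch of that idea, not the lecture's own algorithm: the names `lz77_encode` and `lz77_decode`, the `(offset, length, next symbol)` token layout, and the default buffer sizes are all assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical token layout: (offset back from the cursor, match length, next symbol)
Token = Tuple[int, int, str]


def lz77_encode(data: str, search_size: int = 16, lookahead_size: int = 8) -> List[Token]:
    """Greedy LZ77-style encoder using a search buffer and a look-ahead buffer."""
    tokens: List[Token] = []
    cursor = 0
    while cursor < len(data):
        best_offset, best_length = 0, 0
        window_start = max(0, cursor - search_size)
        # Reserve one symbol so every token can carry an explicit next symbol.
        max_length = min(lookahead_size, len(data) - cursor - 1)
        # Try every start position in the search buffer (already coded symbols).
        for start in range(window_start, cursor):
            length = 0
            while length < max_length and data[start + length] == data[cursor + length]:
                length += 1
            if length > best_length:
                best_offset, best_length = cursor - start, length
        tokens.append((best_offset, best_length, data[cursor + best_length]))
        cursor += best_length + 1
    return tokens


def lz77_decode(tokens: List[Token]) -> str:
    """Rebuild the text by copying from the already decoded output."""
    out: List[str] = []
    for offset, length, symbol in tokens:
        start = len(out) - offset
        # Copy one symbol at a time so overlapping matches work.
        for i in range(length):
            out.append(out[start + i])
        out.append(symbol)
    return "".join(out)
```

For example, `lz77_decode(lz77_encode("abracadabra abracadabra"))` returns the original string. Practical coders add a faster match search and entropy-code the tokens; this sketch omits both.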

Package 'Brotli'

Package ‘brotli’
May 13, 2018

Type Package
Title A Compression Format Optimized for the Web
Version 1.2
Description A lossless compressed data format that uses a combination of the LZ77 algorithm and Huffman coding. Brotli is similar in speed to deflate (gzip) but offers more dense compression.
License MIT + file LICENSE
URL https://tools.ietf.org/html/rfc7932 (spec) https://github.com/google/brotli#readme (upstream) http://github.com/jeroen/brotli#read (devel)
BugReports http://github.com/jeroen/brotli/issues
VignetteBuilder knitr, R.rsp
Suggests spelling, knitr, R.rsp, microbenchmark, rmarkdown, ggplot2
RoxygenNote 6.0.1
Language en-US
NeedsCompilation yes
Author Jeroen Ooms [aut, cre] (<https://orcid.org/0000-0002-4035-0289>), Google, Inc [aut, cph] (Brotli C++ library)
Maintainer Jeroen Ooms
Repository CRAN
Date/Publication 2018-05-13 20:31:43 UTC

R topics documented: brotli

brotli: Brotli Compression

Description
Brotli is a compression algorithm optimized for the web, in particular small text documents.

Usage
brotli_compress(buf, quality = 11, window = 22)
brotli_decompress(buf)

Arguments
buf: raw vector with data to compress/decompress
quality: value between 0 and 11
window: log of window size

Details
Brotli decompression is at least as fast as for gzip while significantly improving the compression ratio. The price we pay is that compression is much slower than gzip. Brotli is therefore most effective for serving static content such as fonts and html pages. For binary (non-text) data, the compression ratio of Brotli usually does not beat bz2 or xz (lzma), however decompression for these algorithms is too slow for browsers in e.g. ...

Data Preparation & Descriptive Statistics

Data Preparation & Descriptive Statistics (ver. 2.4). Oscar Torres-Reyna, Data Consultant. PU/DSS/OTR, http://dss.princeton.edu/training/

Basic definitions…
For statistical analysis we think of data as a collection of different pieces of information or facts. These pieces of information are called variables. A variable is an identifiable piece of data containing one or more values. Those values can take the form of a number or text (which could be converted into a number). In the table below, variables var1 through var5 are each a collection of seven values; ‘id’ is the identifier for each observation. This dataset has information for seven cases (in this case people, but they could also be states, countries, etc.) grouped into five variables.

id  var1  var2   var3  var4  var5
1   7.3   32.27  0.1   Yes   Male
2   8.28  40.68  0.56  No    Female
3   3.35  5.62   0.55  Yes   Female
4   4.08  62.8   0.83  Yes   Male
5   9.09  22.76  0.26  No    Female
6   8.15  90.85  0.23  Yes   Female
7   7.59  54.94  0.42  Yes   Male

Data structure…
For data analysis your data should have variables as columns and observations as rows. The first row should have the column headings (the variable names). Make sure your dataset has at least one identifier (for example, individual id, family id, etc.).

Cross-sectional data: the seven-row table shown under "Basic definitions", with the variable names in the first row.

Cross-sectional time series data or panel data (at least one identifier):

Group    id  year  var1  var2   var3
Group 1  1   2000  7     74.03  0.55
Group 1  1   2001  2     4.6    0.44
Group 1  1   2002  2     25.56  0.77
Group 2  2   2000  7     59.52  0.05
Group 2  2   2001  2     16.95  0.94
Group 2  2   2002  9     1.2    0.08
Group 3  3   2000  9     85.85  0.5
Group 3  3   2001  3     98.85  0.32
Group 3  3   2002  3     69.2   0.76

NOTE: See: http://www.statistics.com/resources/glossary/c/crossdat.php

Data format (ASCII)…
ASCII (American Standard Code for Information Interchange).

Contrasting the Performance of Compression Algorithms on Genomic Data

Contrasting the Performance of Compression Algorithms on Genomic Data. Cornel Constantinescu, IBM Research Almaden

Outline of the Talk:
• Introduction / Motivation
• Data used in experiments
• General purpose compressors comparison
• Simple Improvements
• Special purpose compression
• Transparent compression: working on compressed data (prototype)
• Parallelism / Multithreading
• Conclusion

Introduction / Motivation
• Despite the large number of research papers and compression algorithms proposed for compressing genomic data generated by sequencing machines, by far the most commonly used compression algorithm in the industry for FASTQ data is gzip.
• The main drawbacks of the proposed alternative special-purpose compression algorithms are:
  - slow speed of either compression or decompression or both, and
  - brittleness: they make various limiting assumptions about the input FASTQ format (for example, the structure of the headers or fixed lengths of the records [1]) in order to further improve their specialized compression.

1. Ibrahim Numanagic, James K Bonfield, Faraz Hach, Jan Voges, Jorn Ostermann, Claudio Alberti, Marco Mattavelli, and S Cenk Sahinalp. Comparison of high-throughput sequencing data compression tools. Nature Methods, 13(12):1005–1008, October 2016.

Fast and Efficient Compression of Next Generation Sequencing Data

2 General Purpose Compression of Genomic Data
As stated earlier, gzip/zlib compression is the method of choice by the industry for FASTQ genomic data. FASTQ is a text-based format (ASCII readable text) for storing a biological sequence and the corresponding quality scores. Each sequence letter and quality score is encoded with a single ASCII character. FASTQ data is structured in four fields per record (a “read”). The first field is the SEQUENCE ID or the header of the read.
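The four-field record structure described above can be made concrete with a short parser. This is a minimal sketch that assumes the common four-line FASTQ layout (an `@` header line, the sequence, a `+` separator line, and a quality string of the same length as the sequence). The excerpt itself only names the first field, so the other three follow the usual FASTQ convention, and `FastqRecord` and `parse_fastq` are hypothetical names.

```python
from typing import Iterable, Iterator, NamedTuple


class FastqRecord(NamedTuple):
    header: str    # sequence ID, without the leading '@'
    sequence: str  # one ASCII character per base
    quality: str   # one ASCII character per quality score


def parse_fastq(lines: Iterable[str]) -> Iterator[FastqRecord]:
    """Yield records from four-line FASTQ text (assumed layout, see lead-in)."""
    stripped = (line.rstrip("\r\n") for line in lines)
    for header in stripped:
        if not header:
            continue  # tolerate blank lines between records
        sequence = next(stripped, None)
        separator = next(stripped, None)
        quality = next(stripped, None)
        if sequence is None or separator is None or quality is None:
            raise ValueError("truncated FASTQ record")
        if not header.startswith("@") or not separator.startswith("+"):
            raise ValueError("malformed FASTQ record near: " + header)
        if len(sequence) != len(quality):
            raise ValueError("sequence and quality lengths differ")
        yield FastqRecord(header[1:], sequence, quality)
```

Splitting records into fields like this is also the first step of the special-purpose compressors mentioned in the excerpt, which handle headers, bases, and quality scores separately.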

Third Party Software Component List: Targeted Use: Briefcam® Fulfillment of License Obligation for All Open Sources: Yes

Third Party Software Component List
Targeted use: BriefCam®
Fulfillment of license obligation for all open sources: Yes

Name | Link and Copyright Notices Where Available | License Type
OpenCV | https://opencv.org/license.html Copyright (C) 2000-2019, Intel Corporation, all rights reserved. Copyright (C) 2009-2011, Willow Garage Inc., all rights reserved. Copyright (C) 2009-2016, NVIDIA Corporation, all rights reserved. Copyright (C) 2010-2013, Advanced Micro Devices, Inc., all rights reserved. Copyright (C) 2015-2016, OpenCV Foundation, all rights reserved. Copyright (C) 2015-2016, Itseez Inc., all rights reserved. | 3-Clause BSD
Apache Logging | http://logging.apache.org/log4cxx/license.html Copyright © 1999-2012 Apache Software Foundation | Apache License V2
Google Test | https://github.com/abseil/googletest/blob/master/googletest/LICENSE Copyright 2008, Google Inc. | BSD*
SAML 2.0 component for ASP.NET | https://github.com/jitbit/AspNetSaml/blob/master/LICENSE Copyright 2018 Jitbit LP | MIT
Nvidia Video Codec | https://github.com/lu-zero/nvidia-video-codec/blob/master/LICENSE Copyright (c) 2016 NVIDIA Corporation | MIT
FFMpeg 4 | https://www.ffmpeg.org/legal.html FFmpeg is a trademark of Fabrice Bellard, originator of the FFmpeg project | LesserGPL v2.1
7zip.exe | https://www.7-zip.org/license.txt 7-Zip Copyright (C) 1999-2019 Igor Pavlov | LesserGPL v2.1/3-Clause BSD
Infralution.Localization.Wpf | http://www.codeproject.com/info/cpol10.aspx Copyright (C) 2018 Infralution Pty Ltd | CPOL
directShowlib .net | https://github.com/pauldotknopf/DirectShow.NET/blob/ | LesserGPL

Full Document

R&D Centre for Mobile Applications (RDC), FEE, Dept of Telecommunications Engineering, Czech Technical University in Prague
RDC Technical Report TR-13-4
Internship report: Evaluation of Compressibility of the Output of the Information-Concealing Algorithm
Julien Mamelli, 2nd year student at the École des Mines d'Alès (Nîmes, France)
Internship supervisor: Lukáš Kencl
August 2013

Abstract
Compression is a key element in exchanging files over the Internet. By generating redundancies, the concealing algorithm proposed by Kencl and Loebl [?] appears at first glance to be particularly designed to be combined with a compression scheme [?]. Is the output of the concealing algorithm actually compressible? We have tried 16 compression techniques on 1 120 files, and the result is that we have not found a solution which could advantageously use the repetitions of the concealing method.

Acknowledgments
I would like to express my gratitude to my supervisor, Dr Lukáš Kencl, for his guidance and expertise throughout the course of this work. I would like to thank Prof. Robert Bešták and Mr Pierre Runtz for giving me the opportunity to carry out my internship at the Czech Technical University in Prague. I would also like to thank all the members of the Research and Development Center for Mobile Applications as well as my colleagues for the assistance they have given me during this period.

Contents
1 Introduction
2 Related Work
  2.1 Information concealing method
  2.2 Archive formats
  2.3 Compression algorithms
    2.3.1 Lempel-Ziv algorithm
    ...

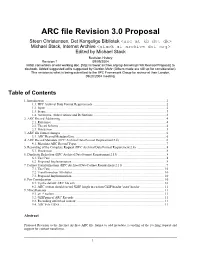

ARC File Revision 3.0 Proposal

ARC file Revision 3.0 Proposal
Steen Christensen, Det Kongelige Bibliotek <ssc at kb dot dk>
Michael Stack, Internet Archive <stack at archive dot org>
Edited by Michael Stack

Revision History
Revision 1, 09/09/2004: Initial conversion of wiki working doc. [http://crawler.archive.org/cgi-bin/wiki.pl?ArcRevisionProposal] to docbook. Added edits suggested by Gordon Mohr (others made are still up for consideration). This revision is what is being submitted to the IIPC Framework Group for review at their London, 09/20/2004 meeting.

Table of Contents
1. Introduction
  1.1. IIPC Archival Data Format Requirements
  1.2. Input
  1.3. Scope
  1.4. Acronyms, Abbreviations and Definitions
2. ARC Record Addressing
  2.1. Reference
  2.2. The ari Scheme
  ...

Pack, Encrypt, Authenticate Document Revision: 2021 05 02

PEA: Pack, Encrypt, Authenticate
Document revision: 2021 05 02
Author: Giorgio Tani
Translation: Giorgio Tani

This document refers to: PEA file format specification version 1 revision 3 (1.3); PEA file format specification version 2.0; PEA 1.01 executable implementation.

The present documentation is released under the GNU GFDL License. The PEA executable implementation is released under the GNU LGPL License; please note that all units provided by the Author are released under LGPL, while Wolfgang Ehrhardt’s crypto library units used in PEA are released under the zlib/libpng License. The PEA file format and PCOMPRESS specifications are hereby released under PUBLIC DOMAIN: the Author neither has, nor is aware of, any patents or pending patents relevant to this technology and does not intend to apply for any patents covering it. As far as the Author knows, the PEA file format in all of its parts is free and unencumbered for all uses.

PEA is on the PeaZip project official sites: https://peazip.github.io , https://peazip.org , and https://peazip.sourceforge.io

For more information about the licenses:
GNU GFDL License, see http://www.gnu.org/licenses/fdl.txt
GNU LGPL License, see http://www.gnu.org/licenses/lgpl.txt

Content:
Section 1: PEA file format
  Description
  PEA 1.3 file format details
  Differences between 1.3 and older revisions
  PEA 2.0 file format details
  PEA file format’s and implementation’s limitations
  PCOMPRESS compression scheme
  Algorithms used in PEA format
  PEA security model
  Cryptanalysis of PEA format
  Data recovery from ...

A Novel Coding Architecture for Multi-Line Lidar Point Clouds

This article has been accepted for inclusion in a future issue of this journal. Content is final as presented, with the exception of pagination. IEEE TRANSACTIONS ON INTELLIGENT TRANSPORTATION SYSTEMS

A Novel Coding Architecture for Multi-Line LiDAR Point Clouds Based on Clustering and Convolutional LSTM Network
Xuebin Sun, Sukai Wang, Graduate Student Member, IEEE, and Ming Liu, Senior Member, IEEE

Abstract: Light detection and ranging (LiDAR) plays an indispensable role in autonomous driving technologies, such as localization, map building, navigation and object avoidance. However, due to the vast amount of data, transmission and storage could become an important bottleneck. In this article, we propose a novel compression architecture for multi-line LiDAR point cloud sequences based on clustering and convolutional long short-term memory (LSTM) networks. LiDAR point clouds are structured, which provides an opportunity to convert the 3D data to 2D array, represented as range images. Thus, we cast the 3D point clouds compression as a range image sequence compression problem. Inspired by the high efficiency video coding (HEVC) algorithm, we design a novel compression ...

[From the introduction] ... preservation of historical relics, 3D sensing for smart city, as well as autonomous driving. Especially for autonomous driving systems, LiDAR sensors play an indispensable role in a large number of key techniques, such as simultaneous localization and mapping (SLAM) [1], path planning [2], obstacle avoidance [3], and navigation. A point cloud consists of a set of individual 3D points, in accordance with one or more attributes (color, reflectance, surface normal, etc). For instance, the Velodyne HDL-64E LiDAR sensor generates a point cloud of up to 2.2 billion points per second, with a range of up to 120 m.

Steganography and Vulnerabilities in Popular Archives Formats (NyxEngine, nyx.reversinglabs.com)

Hiding in the Familiar: Steganography and Vulnerabilities in Popular Archives Formats. NyxEngine, nyx.reversinglabs.com

Contents
Introduction to NyxEngine
Introduction to ZIP file format
Introduction to steganography in ZIP archives
Steganography and file malformation security impacts
References and tools

Introduction to NyxEngine
Steganography is the art and science of writing hidden messages in such a way that no one, apart from the sender and intended recipient, suspects the existence of the message, a form of security through obscurity. When it comes to digital steganography no stone should be left unturned in the search for viable hidden data. Although digital steganography is commonly used to hide data inside multimedia files, a similar approach can be used to hide data in archives as well. Steganography imposes the following data hiding rule: data must be hidden in such a fashion that the user has no clue about the hidden message or file's existence. This can be achieved by ...

My Sons Postponed to March 18-19 Artist Series Please: Return Those Books!

LWA Darlings Don Colonial Garb for Minuet
Anschuetz, Bekkedal, Jensen and Ladwig Are Honored at Traditional Banquet

A dainty Mozart minuet introduced Lawrence college’s four Best Loved senior women at the annual colonial banquet last Monday evening in the dining hall of the First Congregational church. Chosen for the Best Loved honor this year were Mary Anschuetz, Tekla Bekkedal, Mary Ellen Jensen and Joan Ladwig. Complete with powdered hair, they were dressed in traditional colonial costumes of George and Martha Washington and James and Dolly Madison.

The best loved tradition, which has been observed for more than 20 years, is sponsored by the Lawrence Women’s association, under the social chairmanship this year of Vivian Grady and Betty Wheeler. Vivian was toastmistress for the banquet and Mrs. Kenneth Davis, Appleton, a Best Loved in 1947, gave a toast to the new electees and presented them with small bracelets on behalf of last year’s group.

[Second column] ...tian, president of the Spanish club.

Tekla Bekkedal is vice-president of the Student Christian association, and is active in the International Relations club, the German club and on The Lawrentian staff. She was chosen for membership in Sigma, underclass scholastic group, and is now a counselor to freshman women.

Miss Jensen is vice-president and pledge mistress of Alpha Chi Omega, her social sorority, and also is affiliated with Sigma Alpha Iota, professional music sorority. A music major, she sings in the college concert choir and plays in several instrumental groups. Last fall she was chosen attendant to the homecoming queen and she has also served as a counselor to freshman women.

Best Loved banquets are not ...

Lossless Compression of Internal Files in Parallel Reservoir Simulation

Lossless Compression of Internal Files in Parallel Reservoir Simulation. Suha Kayum, Marcin Rogowski, Florian Mannuss. 9/26/2019

Outline
• I/O Challenges in Reservoir Simulation
• Evaluation of Compression Algorithms on Reservoir Simulation Data
• Real-world application
  - Constraints
  - Algorithm
  - Results
• Conclusions

1 Challenge: Reservoir simulation

Reservoir Simulation
• The largest fields in the world are represented as 50 million to 1 billion grid block models
• Each run takes hours on 500-5000 cores
• Calibrating the model requires 100s of runs and sophisticated methods
• The “history matched” model is only a beginning

Files in Reservoir Simulation
• Internal Files
• Input / Output Files
  - Interact with pre- & post-processing tools
• Date Restart/Checkpoint Files

Reservoir Simulation in Saudi Aramco
• 100’000+ simulations annually
• The largest simulation: 10 billion cells
• Currently multiple machines in TOP500
• Petabytes of storage required
• Resources are finite
• File compression is one solution
[Figure labels: 600x, 50x]

2 Compression algorithm evaluation

Compression ratio
• Tested a number of algorithms on a GRID restart file for two models
  - Model A: 77.3 million active grid blocks
  - Model K: 8.7 million active grid blocks
  - 15.6 GB and 7.2 GB respectively
• The compression ratio ranges
  - from 2.27 for snappy (Model A)
  - up to 3.5 for bzip2 -9 (Model K)
[Figure: bar chart of compression ratio (axis 0 to 4) for Model A and Model K with lz4, snappy, gzip -1, gzip -9, bzip2 -1 and bzip2 -9]

Compression speed
• LZ4 and Snappy significantly outperformed other algorithms
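The compression-ratio comparison in the slides can be approximated on any byte buffer with Python's standard library. This is only a sketch under stated assumptions: `compression_ratios` is a hypothetical helper, `zlib` levels 1 and 9 stand in for `gzip -1` and `gzip -9` (same DEFLATE algorithm, different container), `bz2` levels 1 and 9 stand in for `bzip2 -1` and `bzip2 -9`, and lz4 and snappy are left out because they are not in the standard library. It does not reproduce the authors' measurements.

```python
import bz2
import zlib
from typing import Callable, Dict


def compression_ratios(data: bytes) -> Dict[str, float]:
    """Return original size divided by compressed size for a few codecs."""
    codecs: Dict[str, Callable[[bytes], bytes]] = {
        "zlib level 1": lambda b: zlib.compress(b, 1),
        "zlib level 9": lambda b: zlib.compress(b, 9),
        "bz2 level 1": lambda b: bz2.compress(b, 1),
        "bz2 level 9": lambda b: bz2.compress(b, 9),
    }
    # A ratio above 1.0 means the codec made the data smaller.
    return {name: len(data) / len(fn(data)) for name, fn in codecs.items()}
```

A speed comparison like the one on the last slide would additionally time each call, for instance with `time.perf_counter()`, on data large enough to give stable readings.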